David Colquhoun

The "supplement" industry is a scam that dwarfs all other forms of alternative medicine. Sales are worth over $100 billion a year, a staggering sum. But the claims made for supplements are largely untrue, and often plainly fraudulent. Although the industry’s advertisements like to claim "naturalness", in fact most synthetic vitamins are manufactured by big pharma companies. The pharmaceutical industry has not been slow to cash in on a market in which unverified claims can be made with impunity.

When I saw an advertisement for Hotshot, "a proprietary formulation of organic ingredients" that is alleged to cure or prevent muscle cramps, I'd have assumed it was just another scam. But the people behind it turned out to be very highly regarded scientists, Rod MacKinnon and Bruce Bean, both of whom I have met.

Hotshot’s website gives this background:

"For Dr. Rod MacKinnon, a Nobel Prize-winning neuroscientist/endurance athlete, the invention of HOTSHOT was personal.

After surviving life threatening muscle cramps while deep sea kayaking off the coast of Cape Cod, he discovered that existing cramp remedies – that target the muscle – didn’t work. Calling upon his Nobel Prize-winning expertise on ion channels, Rod reasoned that preventing and treating cramps began with focusing on the nerve, not the muscle.

Five years of scientific research later, Rod has perfected HOTSHOT, the kick-ass, proprietary formulation of organic ingredients, powerful enough to stop muscle cramps where they start. At the nerve.

Today, Rod’s genius solution has created a new category in sports nutrition: Neuro Muscular Performance (NMP). It’s how an athlete’s nerves and muscles work together in an optimal way. HOTSHOT boosts your NMP to stop muscle cramps. So you can push harder, train longer and finish stronger."

For a start, it’s pretty obvious that MacKinnon has not spent the last five years developing a cure for cramp. His publications don’t even mention the topic. Neither do Bruce Bean’s.

I’d like to thank Bruce Bean for answering some questions I put to him. He said it’s "designed to be as strong as possible in activating TRPV1 and TRPA1 channels". After some hunting I found that it contains:

Filtered Water, Organic Cane Sugar, Organic Gum Arabic, Organic Lime Juice Concentrate, Pectin, Sea Salt, Natural Flavor, Organic Stevia Extract, Organic Cinnamon, Organic Ginger, Organic Capsaicin

After water, the first ingredient is sugar: "the 1.7oz shot contains enough sugar to make a can of Coke blush with 5.9 grams per ounce vs. 3.3 per ounce of Coke" [ref].

The TRP (transient receptor potential) receptors form a family of 28 related ion channels. Their physiology is far from well understood, but they are thought to be important in mediating taste and pain. The TRPV1 channel is also known as the receptor for capsaicin (found in chilli peppers). TRPA1 responds to the active principle in wasabi.

I’m quite happy to believe that most cramp is caused by unsynchronised activity of motor nerves causing muscle fibres to contract in an uncoordinated way (though it isn’t really known that this is the usual mechanism, or what triggers it in the first place). The problem is that there is no good reason at all to think that stimulating TRP receptors in the gastro-intestinal tract will stop, within a minute or so, the activity of motor nerves in the spinal cord.

But, as always, there is no point in discussing mechanisms until we are sure that there is a phenomenon to be explained. What is the actual evidence that Hotshot either prevents or cures cramps, as claimed? Hotshot’s web site has a page called "Our Science". Its title is "The Truth about Muscle Cramps". That’s not a good start, because it’s well known that nobody understands cramp.

Following the link to "See our Scientific Studies" leads to three references, two of which are to unpublished work. The third is not about Hotshot but about pickle juice. This was also the only reference sent to me by Bruce Bean. It is ‘Reflex Inhibition of Electrically Induced Muscle Cramps in Hypohydrated Humans’, Miller et al., 2010 [Download pdf]. Since it’s the only published work, it’s worth looking at in detail.

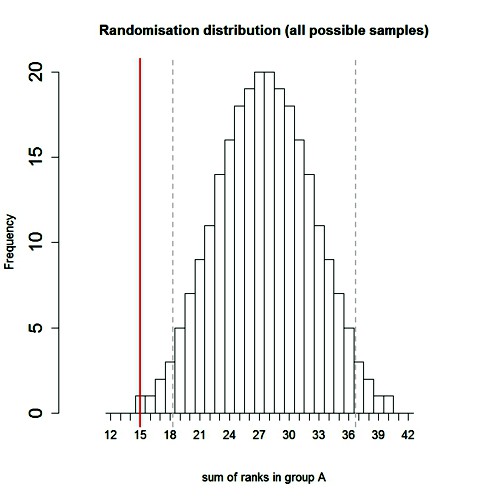

Miller et al. is not about exercise-induced cramp, but about a cramp-like condition that can be induced by electrical stimulation of a muscle in the sole of the foot (flexor hallucis brevis). The intention of the paper was to investigate anecdotal reports that pickle juice can prevent or stop cramps. It was a small study (only 10 subjects). After the subjects had been dehydrated, cramp was induced electrically, and two seconds after it started they drank either pickle juice or distilled water. They weren’t asked about pain: the extent of cramp was judged from electromyograph records. At least a week later, the test was repeated with the other drink (the order in which they were given was randomised). So it was a crossover design.

There was no detectable difference between water and pickle juice in the intensity of the cramp. But the duration of the cramp was said to be shorter. The mean duration after water was 133.7 ± 15.9 s and the mean duration after pickle juice was 84.6 ± 18.5 s. A t test gives P = 0.075. However, each subject had both treatments, the mean reduction in duration was 49.1 ± 14.6 s, and a paired t test gives P = 0.008. This is close to the 3-standard-deviation difference which I recommended as a minimal criterion, so what could possibly go wrong?
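To see why pairing makes such a difference, here is a rough sketch that recomputes both P values from the summary figures quoted above. It assumes that the ± values are standard errors of the mean, treats the unpaired comparison as two independent groups of 10 (18 degrees of freedom), and uses a hand-rolled Student's t distribution (Simpson's-rule integration of the density) so that nothing beyond the standard library is needed. Since the ten individual differences aren't published, this can only approximate whatever calculation the authors did, and the unpaired value need not match the quoted 0.075 exactly. The point is that the paired t (the mean difference divided by its standard error, about 3.4) is much larger than the unpaired one.

```python
import math

def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_p(t: float, df: int, steps: int = 20000) -> float:
    """Two-sided P value: integrate the density from 0 to |t| with Simpson's rule."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3  # probability that t lies between 0 and |t|
    return 2 * (0.5 - area)

n = 10
water_mean, water_sem = 133.7, 15.9    # cramp duration after water (s)
pickle_mean, pickle_sem = 84.6, 18.5   # cramp duration after pickle juice (s)
diff_mean, diff_sem = 49.1, 14.6       # mean within-subject reduction (s)

# Unpaired: ignore the fact that each subject received both drinks.
se_unpaired = math.sqrt(water_sem ** 2 + pickle_sem ** 2)
t_unpaired = (water_mean - pickle_mean) / se_unpaired
p_unpaired = two_sided_p(t_unpaired, df=2 * n - 2)

# Paired: use the within-subject differences.
t_paired = diff_mean / diff_sem
p_paired = two_sided_p(t_paired, df=n - 1)

print(f"unpaired: t = {t_unpaired:.2f}, P = {p_unpaired:.3f}")
print(f"paired:   t = {t_paired:.2f}, P = {p_paired:.3f}")
```

None of this addresses the objections to the design, which remain whatever the P value.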

The result certainly suggests that pickle juice might reduce the duration of cramps, but it’s far from conclusive, for the following reasons. First, it must have been very obvious indeed to the subjects whether they were drinking water or pickle juice. Secondly, paired t tests are not the right way to analyse crossover experiments, as explained here. Unfortunately, the 10 differences are not given, so there is no way to judge the consistency of the responses. Thirdly, two outcomes were measured (intensity and duration), and no correction was made for multiple comparisons. Finally, P = 0.008 is convincing evidence only if you assume that there’s a roughly 50:50 chance of the pickle-juice folk-lore being right before the experiment was started. For most folk remedies, that would be a pretty implausible assumption. The vast majority of folk remedies turn out to be useless when tested properly.
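How much the prior plausibility matters can be illustrated with Bayes' theorem. The sketch below uses the simple "p-less-than" calculation, which treats P = 0.008 as a significance threshold. Calculations based on the exact observed P value ("p-equals") give considerably higher false positive risks, so these numbers should be read as optimistic. The power of 0.8 and the three prior probabilities are assumptions chosen for illustration, not estimates for pickle juice.

```python
def false_positive_risk(prior: float, alpha: float, power: float) -> float:
    """Fraction of 'significant' results that are false positives (p-less-than view).

    prior: probability that there is a real effect before the experiment.
    alpha: significance threshold (here the observed P value is used).
    power: probability of getting P < alpha when the effect is real (assumed).
    """
    false_pos = (1 - prior) * alpha   # no real effect, but P < alpha anyway
    true_pos = prior * power          # real effect, and it is detected
    return false_pos / (false_pos + true_pos)

# Hypothetical priors for "pickle juice shortens cramp"; power 0.8 is an assumption.
for prior in (0.5, 0.1, 0.01):
    fpr = false_positive_risk(prior, alpha=0.008, power=0.8)
    print(f"prior {prior:>4}: false positive risk = {fpr:.1%}")
```

With a 50:50 prior the risk is about 1%. With a prior of 1 in 100, a reasonable guess for a typical folk remedy, about half of such "significant" results would be false positives, even with this optimistic method.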

Nevertheless, the results are sufficiently suggestive that it might be worth testing Hotshot properly. One might have expected that to have been done before marketing started. It wasn’t.

Bruce Bean tells me that they tried it on friends who said that it worked. Perhaps that’s not so surprising: there can be no condition more susceptible than muscle cramps to self-deception because of regression to the mean.
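Regression to the mean is easy to demonstrate with a toy simulation. The sketch below is purely illustrative and assumes several things: cramp severity on any occasion is random noise around a fixed average, successive occasions are independent, there is no treatment effect at all, and people reach for a remedy only when a cramp is unusually bad. The severity scale, the threshold and the seed are all arbitrary choices.

```python
import random
import statistics

rng = random.Random(1)  # fixed seed so the run is reproducible

# Hypothetical severity scores: mean 5, SD 2, independent from one occasion to the next.
severity = [rng.gauss(5, 2) for _ in range(10_000)]

threshold = 8  # "bad enough to take something for it" (an arbitrary choice)
treated = [i for i in range(len(severity) - 1) if severity[i] > threshold]

before = statistics.mean(severity[i] for i in treated)
after = statistics.mean(severity[i + 1] for i in treated)  # the "remedy" does nothing

print(f"{len(treated)} 'treated' occasions")
print(f"mean severity when remedy taken: {before:.2f}")
print(f"mean severity next time:         {after:.2f}")
```

Because the remedy is taken only at the worst moments, the next occasion is nearly always better, and the remedy gets the credit.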

They found a business partner, Flex Pharma, and MacKinnon set up a company. Let’s see how they are doing.

Flex Pharma

The hyperbole in the advertisements for Hotshot is entirely legal in the USA. The infamous 1994 “Dietary Supplement Health and Education Act (DSHEA)” allows almost any claim to be made for herbs etc., as long as they are described as a "dietary supplement". All they have to do is add in the small print:

"These statements have not been evaluated by the Food and Drug Administration. This product is not intended to diagnose, treat, cure or prevent any disease".

Of course medical claims are made: it’s sold to prevent and treat muscle cramp (and I can’t even find the weasel words on the web site).

As well as Hotshot, Flex Pharma is also testing a drug, FLX-787, a TRP receptor agonist of undisclosed structure. It is hoping to get FDA approval for treatment of nocturnal leg cramps (NLCs) and of spasticity in multiple sclerosis (MS) and amyotrophic lateral sclerosis (ALS) patients. It would be great if it works, but we still don’t know whether it does.

The financial press doesn’t seem to be very optimistic. When Flex Pharma was launched on the stock market at the beginning of 2015, its initial public offering raised $86.4 million, at $16 per share. The biotech boom of the previous few years was still strong. In 2016, the outlook seems less rosy. The investment advice site Seeking Alpha had a scathing evaluation in June 2016. Its title was "Flex Pharma: What A Load Of Cramp". It has some remarkably astute assessments of the pharmacology, as well as of financial risks. The summary reads thus:

- We estimate FLKS will burn at least 40 million of its $84 million in cash this year on clinical trials for FLX-787 and marketing spend for its new cramp supplement called “HOTSHOT.”

- Based on its high cash burn, we expect a large, dilutive equity raise is likely over the next 12 months.

- We believe the company’s recent study on nocturnal leg cramps (NLCs) may be flawed. We also highlight risks to its lead drug candidate, FLX-787, that we believe investors are currently overlooking.

- We highlight several competitive available alternatives to FLKS’s cramp products that we believe investors have not factored into current valuation.

- Only 2.82% of drugs from companies co-founded by CEO Westphal have achieved FDA approval.

The last bullet point refers to Flex Pharma’s CEO, Christoph Westphal MD PhD (described by Fierce Biotech as a "serial biotech entrepreneur"). Only two of his 71 requests for FDA approval were successful.

On October 13th 2016 it was reported that early trials of FLX-787 had been disappointing. The shares plunged.

On October 17th 2016, Seeking Alpha posted another evaluation: “Flex Pharma Has Another Cramp”. So did StreetInsider.com. Neither was optimistic. The former made the point (see above) that crossover trials are not what should be done. In fact the FDA have required that regular parallel RCTs should be done before FLX-787 can be approved.

Summary

Drug discovery is hard and it’s expensive. The record for small molecule discovery has not been good in the last few decades. Many new introductions have, at best, marginal efficacy, and at worst may do more harm than good. In the conditions for which understanding of causes is poor or non-existent, it’s impossible to design new drugs rationally. There are only too many such conditions: from low back pain to almost anything that involves the brain, knowledge of causes is fragmentary to non-existent. This leads guidance bodies to clutch at straws. Disappointing as this is, it’s not for want of trying. And it’s not surprising. Serious medical research hasn’t been going for long and the systems are very complicated.

But this is no excuse for pretending that things work on the basis of the flimsiest of evidence. Bruce Bean advised me to try Hotshot on friends, and says that it doesn’t work for everybody. This is precisely what one is told by homeopaths, and just about every other sort of quack. Time and time again, that sort of evidence has proved to be misleading.

I have the greatest respect for the science that’s published by both Bruce Bean and Rod MacKinnon. I guess that they aren’t familiar with the sort of evidence that’s required to show that a new treatment works. That isn’t solved by describing a treatment as a "dietary supplement".

I’ll confess that I’m a bit disappointed by their involvement with Flex Pharma, a company that makes totally unjustified claims. Or should one just say caveat emptor?

Follow-up

Before posting this, I sent it to Bruce Bean to be checked. Here was his response, which I’m posting in full (hoping not to lose a friend).

"Want to be UK representative for Hotshot? Sample on the way!"

"I do not see anything wrong with the facts. I have a different opinion – that it is perfectly appropriate to have different standards of proof of efficacy for consumer products made from general-recognized-as-safe ingredients and for an FDA-approved drug. I’d be happy for the opportunity to post something like the following your blog entry (and suffer any consequent further abuse) if there is an opportunity".

" I think it would be unfair to lump Hotshot with “dietary supplements” targeted to exploit the hopes of people with serious diseases who are desperate for magic cures. Hotshot is designed and marketed to athletes who experience exercise-induced cramping that can inhibit their training or performance – hardly a population of desperate people susceptible of exploitation. It costs only a few dollars for someone to try it. Lots of people use it regularly and find it helpful. I see nothing wrong with this and am glad that something that I personally found helpful is available for others to try. "

" Independently of Hotshot, Flex Pharma is hoping to develop treatments for cramping associated with diseases like ALS, MS, and idiopathic nocturnal leg cramps. These treatments are being tested in rigorous clinical trials that will be reviewed by the FDA. As with any drug development it is very expensive to do the clinical trials and there is no guarantee of success. I give credit to the investors who are underwriting the effort. The trials are openly publicly reported. I would note that Flex Pharma voluntarily reported results of a recent trial for night leg cramps that led to a nearly 50% drop in the stock price. I give the company credit for that openness and for spending a lot of money and a lot of effort to attempt to develop a treatment to help people – if it can pass the appropriately high hurdle of FDA approval."

" On Friday, I sent along 8 bottles of Hotshot by FedEx, correctly labeled for customs as a commercial sample. Of course, I’d be delighted if you would agree to act as UK representative for the product but absent that, it should at least convince you that the TRP stimulators are present at greater than homeopathic doses. If you can find people who get exercise-induced cramping that can’t be stretched out, please share with them."

6 January 2017

It seems that more than one Nobel prizewinner is willing to sell their name to dodgy businesses. The MIT Tech Review tweeted a link to "Imagine Albert Einstein getting paid to put his picture on a tin of anti-wrinkle cream". No fewer than seven Nobel prizewinners have lent their names to a “supplement” pill that’s claimed to prolong your life. Needless to say, there isn’t the slightest reason to think it works. What possesses these people beats me. Here are their names:

Aaron Ciechanover (Cancer Biology, Technion – Israel Institute of Technology).

Eric Kandel (Neuroscience, Columbia University).

Jack Szostak (Origins of Life & Telomeres, Harvard University).

Martin Karplus (Complex Chemical Systems, Harvard University).

Sir Richard Roberts (Biochemistry, New England Biolabs).

Thomas Südhof (Neuroscience, Stanford University).

Paul Modrich (Biochemistry, Duke University School of Medicine).

Then there’s the Amway problem. Watch this space.

‘We know little about the effect of diet on health. That’s why so much is written about it’. That is the title of a post in which I advocate the view put by John Ioannidis that remarkably little is known about the health effects of individual nutrients. That ignorance has given rise to a vast industry selling advice that has little evidence to support it.

The 2016 Conference of the so-called "College of Medicine" had the title "Food, the Forgotten Medicine". This post gives some background information about some of the speakers at this event. I’m sorry if it appears too ad hominem, but the only way to judge the meeting is via the track record of the speakers.

Quite a lot has been written here about the "College of Medicine". It is the direct successor of the Prince of Wales’ late, unlamented, Foundation for Integrated Health. But unlike the latter, its name disguises its promotion of quackery. Originally it was going to be called the “College of Integrated Health”, but that wasn’t sufficiently deceptive so the name was dropped.

For the history of the organisation, see

Don’t be deceived. The new “College of Medicine” is a fraud and delusion

The College of Medicine is in the pocket of Crapita Capita. Is Graeme Catto selling out?

The conference programme (download pdf) is a masterpiece of bait and switch. It is a mixture of very respectable people, and outright quacks. The former are invited to give legitimacy to the latter. The names may not be familiar to those who don’t follow the antics of the magic medicine community, so here is a bit of information about some of them.

The introduction to the meeting was by Michael Dixon and Catherine Zollman, both veterans of the Prince of Wales Foundation, and both devoted enthusiasts for magic medicine. Zollman even believes in the battiest of all forms of magic medicine, homeopathy (download pdf), for which she totally misrepresents the evidence. Zollman now works at the Penny Brohn centre in Bristol. She’s also linked to the "Portland Centre for integrative medicine", which is run by Elizabeth Thompson, another advocate of homeopathy. It came into being after NHS Bristol shut down the Bristol Homeopathic Hospital, on the very good grounds that homeopathy doesn’t work.

Now, like most magic medicine, it is privatised. The Penny Brohn shop will sell you a wide range of expensive and useless "supplements". For example, Biocare Antioxidant capsules at £37 for 90. Biocare make several unjustified claims for their benefits. Among other unnecessary ingredients, they contain a very small amount of green tea. That’s a favourite of "health food addicts", and it was the subject of a recent paper that contains one of the daftest statistical solecisms I’ve ever encountered:

"To protect against type II errors, no corrections were applied for multiple comparisons".

If you don’t understand that, try this paper.
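The reason for correcting for multiple comparisons is that the chance of at least one false positive grows quickly with the number of tests. The short sketch below assumes independent tests, each judged at P < 0.05, with no real effect anywhere. It prints the chance of at least one "significant" result (the family-wise error rate) and the Bonferroni-corrected threshold that would keep that chance at 5%. Bonferroni is used only because it is the simplest correction, not because it is necessarily the best one.

```python
def familywise_error(k: int, alpha: float = 0.05) -> float:
    """P(at least one false positive) among k independent tests with no real effects."""
    return 1 - (1 - alpha) ** k

def bonferroni_threshold(k: int, alpha: float = 0.05) -> float:
    """Per-test threshold that keeps the family-wise error rate at or below alpha."""
    return alpha / k

for k in (1, 2, 10, 20):
    print(f"{k:>2} tests: chance of a false positive = {familywise_error(k):.0%}, "
          f"Bonferroni threshold = {bonferroni_threshold(k):.4f}")
```

With 20 outcomes and no correction, at least one will come up "significant" by chance alone nearly two times in three.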

The results are almost certainly false positives, despite the fact that it appeared in Lancet Neurology. It’s yet another example of broken peer review.

It’s been known for decades now that “antioxidant” is no more than a marketing term. There is no evidence of benefit and large doses can be harmful. This obviously doesn’t worry the College of Medicine.

Margaret Rayman was the next speaker. She’s a real nutritionist. Mixing the real with the crackpots is a standard bait and switch tactic.

Eleni Tsiompanou came next. She runs yet another private "wellness" clinic, which makes all the usual exaggerated claims. She seems to have an obsession with Hippocrates (hint: medicine has moved on since then). Dr Eleni’s Joy Biscuits may or may not taste good, but their health-giving properties are make-believe.

Andrew Weil, from the University of Arizona, gave the keynote address. He’s described as "one of the world’s leading authorities on Nutrition and Health". That description alone is sufficient to show the fantasy land in which the College of Medicine exists. He’s a typical supplement salesman, presumably very rich. There is no excuse for not knowing about him. It was 1988 when Arnold Relman (who was editor of the New England Journal of Medicine) wrote A Trip to Stonesville: Some Notes on Andrew Weil, M.D.

“Like so many of the other gurus of alternative medicine, Weil is not bothered by logical contradictions in his argument, or encumbered by a need to search for objective evidence.”

This blog has mentioned his more recent activities many times.

Alex Richardson, of Oxford Food and Behaviour Research (a charity, not part of the university), is an enthusiast for omega-3, a favourite of the supplement industry. She has published several papers that show little evidence of effectiveness. That looks entirely honest. On the other hand, their News section contains many links to the notorious supplement industry lobby site, Nutraingredients, one of the least reliable sources of information on the web (I get their newsletter, a constant source of hilarity and raised eyebrows). I find this worrying for someone who claims to be evidence-based. I’m told that her charity is funded largely by the supplement industry (though I can’t find any mention of that on the web site).

Stephen Devries was a new name to me. You can infer what he’s like from the fact that he has been endorsed by Andrew Weil, and that his address is "Institute for Integrative Cardiology" ("Integrative" is the latest euphemism for quackery). Never trust any talk with a title that contains "The truth about". His was called "The scientific truth about fats and sugars". In a video, he claims that diet has been shown to reduce heart disease by 70%, which gives you a good idea of his ability to assess evidence. But the claim doubtless helps to sell his books.

Prof Tim Spector, of King’s College London, was next. As far as I know he’s a perfectly respectable scientist, albeit one with books to sell. But his talk is now online, and he sounded a bit like a born-again microbiome enthusiast. He seemed to be too impressed by the PREDIMED study, despite its statistical unsoundness, which was pointed out by Ioannidis. Little evidence was presented, though at least he was more sensible than the audience about the uselessness of multivitamin tablets.

Simon Mills talked on “Herbs and spices. Using Mother Nature’s pharmacy to maintain health and cure illness”. He’s a herbalist who has featured here many times. I can recommend especially his video about Hot and Cold herbs as a superb example of fantasy science.

Annie Anderson is Professor of Public Health Nutrition and Founder of the Scottish Cancer Prevention Network. She’s a respectable nutritionist and public health person, albeit with their customary disregard of problems of causality.

Patrick Holden is chair of the Sustainable Food Trust. He promotes "organic farming". Much though I dislike the cruelty of factory farms, the "organic" industry is largely a way of making food more expensive with no health benefits.

The Michael Pittilo 2016 Student Essay Prize was awarded after lunch. Pittilo has featured frequently on this blog as a result of his execrable promotion of quackery; see, in particular, A very bad report: gamma minus for the vice-chancellor.

Nutritional advice for patients with cancer. This discussion involved three people.

Professor Robert Thomas, Consultant Oncologist, Addenbrookes and Bedford Hospitals, Dr Clare Shaw, Consultant Dietitian, Royal Marsden Hospital and Dr Catherine Zollman, GP and Clinical Lead, Penny Brohn UK.

Robert Thomas came to my attention when I noticed that he, as a regular cancer consultant, had spoken at a meeting of the quack charity “YestoLife”. Then I saw he was scheduled to speak at another quack conference. After I’d written to him to point out the track records of some of the people at the meeting, he withdrew from one of them. See The exploitation of cancer patients is wicked. Carrot juice for lunch, then die destitute. The influence seems to have been temporary, though. He continues to lend respectability to many dodgy meetings. He edits the Cancernet web site. This site lends credence to bizarre treatments like homeopathy and crystal healing. It used to sell hair mineral analysis, a well-known phony diagnostic method the main purpose of which is to sell you expensive “supplements”. It still sells the “Cancer Risk Nutritional Profile” for £295.00, despite the fact that it provides no proven benefits.

Robert Thomas designed a food "supplement", Pomi-T: capsules that contain Pomegranate, Green tea, Broccoli and Curcumin. Oddly, he seems still to subscribe to the antioxidant myth. Even the supplement industry admits that that’s a lost cause, but that doesn’t stop its use in marketing. The one randomised trial of these pills for prostate cancer was inconclusive. Prostate Cancer UK says "We would not encourage any man with prostate cancer to start taking Pomi-T food supplements on the basis of this research". Nevertheless it’s promoted on Cancernet.co.uk and widely sold. The Pomi-T site boasts about the (inconclusive) trial, but says "Pomi-T® is not a medicinal product".

There was a cookery demonstration by Dale Pinnock, "The Medicinal Chef". The programme does not tell us whether he made his signature dish, "the Famous Flu Fighting Soup". Needless to say, there isn’t the slightest reason to believe that his soup has any effect on flu.

In summary, the whole meeting was devoted to exaggerating vastly the effect of particular foods. It also acted as advertising for people with something to sell. Much of it was outright quackery, with a leavening of more respectable people, a standard part of the bait-and-switch methods used by all quacks in their attempts to make themselves sound respectable. I find it impossible to tell how much the participants actually believe what they say, and how much it’s a simple commercial drive.

The thing that really worries me is why someone like Phil Hammond supports this sort of thing by chairing their meetings (as he did for the "College of Medicine’s" direct predecessor, the Prince’s Foundation for Integrated Health). His defence of the NHS has made him something of a hero to me. He assured me that he’d asked people to stick to evidence. In that he clearly failed. I guess they must pay well.

Follow-up

This is my version of a post which I was asked to write for the Independent. It’s been published, though so many changes were made by the editor that I’m posting the original here (below).

Superstition is rife in all sports. Mostly it does no harm, and it might even have a placebo effect that’s sufficient to make a difference of 0.01%. That might just get you a medal. But what does matter is that superstition has given rise to an army of charlatans who are only too willing to sell their magic medicine to athletes, most of whom are not nearly as rich as Phelps.

So much has been said about cupping during the last week that it’s hard to say much that’s original. Yesterday I did six radio interviews and two for TV, and today Associated Press TV came to film a piece about it. Everyone else must have been on holiday. The only one I’ve checked was the piece on the BBC News channel. That one didn’t seem to go too badly, so here it is.

BBC news coverage

It starts with the usual lengthy, but uninformative, pictures of someone being cupped. The cupper in this case was actually a chiropractor, Rizwhan Suleman. Chiropractic is, of course, a totally different form of alternative medicine, and its value has been totally discredited in the wake of the Simon Singh case. It’s not unusual for people to sell different therapies with conflicting beliefs. Truth is irrelevant. Once you’ve believed one impossible thing, it seems that the next ones become quite easy.

The presenter, Victoria Derbyshire, gave me a fair chance to debunk it afterwards.

Nevertheless, the programme suffered from the usual pretence that there is a controversy about the medical value of cupping. There isn’t. But despite Steve Jones’ excellent report to the BBC Trust, the media insist on giving equal time to flat-earth advocates. The report (Review of impartiality and accuracy of the BBC’s coverage of science) was no doubt commissioned with good intentions, but it’s been largely ignored.

Still worse, the BBC News Channel, when it repeated the item (its cycle time is quite short) showed only Rizwhan Suleman and cut out my comments altogether. This is not false balance. It’s no balance whatsoever. A formal complaint has been sent. It is not the job of the BBC to provide free advertising to quacks.

After this, a friend drew my attention to a much worse programme on the subject.

The Jeremy Vine show on BBC Radio 2, at 12.00 on August 10th, 2016, was presented by Vanessa Feltz. It was beyond appalling. There was absolutely zero attempt at balance, false or otherwise. The guest was described as being an "expert" on cupping. He was Yusef Noden, of the London Hijama Clinic, who "trained and qualified with the Hijama & Prophetic Medicine Institute". No doubt he’s a nice bloke, but he really could use a first year course in physiology. His words were pure make-believe. His repeated statements about "withdrawing toxins" are well known to be absolutely untrue. It was embarrassing to listen to. If you really want to hear it, here is an audio recording.

The Jeremy Vine show

This programme is one of the worst cases I’ve heard of the BBC mis-educating the public by providing free advertising for quite outrageous quackery. Another complaint will be submitted. The only form of opposition was a few callers who pointed out the nonsense, mixed with callers who endorsed it. That is not, by any stretch of the imagination, fair and balanced.

It’s interesting that, although cupping is often associated with Traditional Chinese Medicine, neither of the proponents in these two shows was Chinese, but rather they were Muslim. This should not be surprising as neither cupping nor acupuncture are exclusively Chinese. Similar myths have arisen in many places. My first encounter with this particular branch of magic medicine was when I was asked to make a podcast for “Things Unseen”, in which I debated with a Muslim hijama practitioner and an Indian Ayurvedic practitioner. It’s even harder to talk sense to practitioners of magic medicine who believe that god is on their side, as well as believing that selling nonsense is a good way to make a living.

An excellent history of the complex emergence of similar myths in different parts of the world has been published by Ben Kavoussi, under the title "Acupuncture is astrology with needles".

Now the original version of my blog for the Independent.

Cupping: Michael Phelps and Gwyneth Paltrow may be believers, but the truth behind it is what really sucks

The sight of Olympic swimmer, Michael Phelps, with bruises on his body caused by cupping resulted in something of a media feeding-frenzy this week. He’s a great athlete so cupping must be responsible for his performance, right? Just as cupping must be responsible for the complexion of an earlier enthusiast, Gwyneth Paltrow.

The main thing in common between Phelps and Paltrow is that they both have a great deal of money, and neither has much interest in how you distinguish truth from myth. They can afford to indulge any whim, however silly.

And cupping is pretty silly. It’s a pre-scientific medical practice that started in a time when there was no understanding of physiology, much like bloodletting. Indeed one version does involve a bit of bloodletting. Perhaps bloodletting is the best argument against the belief that it’s ancient wisdom, so it must work. It was a standard part of medical treatment for hundreds of years, and killed countless people.

It is desperately implausible that putting suction cups on your skin would benefit anything, so it’s not surprising that there is no worthwhile empirical evidence that it does. The Chinese version of cupping is related to acupuncture and, unlike cupping, acupuncture has been very thoroughly tested. Over 3000 trials have failed to show any benefit that’s big enough to benefit patients. Acupuncture is no more than a theatrical placebo. And even its placebo effects are too small to be useful.

At least it’s likely that cupping usually does no lasting damage. We don’t know for sure because in the world of alternative medicine there is no system for recording bad effects (and there is a vested interest in not reporting them). In extreme cases, it can leave holes in your skin that pose a serious danger of infection, but most people probably end up with just broken capillaries and bruises. Why would anyone want that?

The answer to that question seems to be a mixture of wishful thinking about the benefits and vastly exaggerated claims made by the people who sell the product.

It’s typical that the sales people can’t even agree on what the benefits are alleged to be. If selling to athletes, the claim may be that it relieves pain, or that it aids recovery, or that it increases performance. Exactly the same cupping methods are sold to celebs with the claim that their beauty will be improved because cupping will “boost your immune system”. This claim is universal in the world of make-believe medicine, when the salespeople can think of nothing else. There is no surer sign of quackery. It means nothing whatsoever. No procedure is known to boost your immune system. And even if anything did, it would be more likely to cause inflammation and blood clots than to help you run faster or improve your complexion.

It’s certainly most unlikely that sucking up bits of skin into evacuated jars would have any noticeable effect on blood flow in underlying muscles, and so increase your performance. The salespeople would undoubtedly benefit from a first year physiology course.

Needless to say, they haven’t tried to actually measure blood flow, or performance. To do that might reduce sales. As Kate Carter said recently “Eating jam out of those jars would probably have a more significant physical impact”.

The problem with all sports medicine is that tiny effects could make a difference. When three-hour endurance events end with a second or so separating the winner from the rest, that is an effect of less than 0.01%. Such tiny effects will never be detectable experimentally. That leaves the door open to every charlatan to sell miracle treatments that might just work. If, like steroids, they do work, there is a good chance that they’ll harm your health in the long run.
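The 0.01% figure is simple arithmetic. A one-line check in Python, assuming a race time of exactly three hours and a winning margin of exactly one second (both round numbers chosen for illustration):

```python
# A one-second winning margin in a three-hour event, as a fraction of race time.
race_seconds = 3 * 60 * 60   # three hours = 10,800 s (assumed round figure)
margin_seconds = 1.0         # "a second or so" (assumed round figure)

relative_effect = margin_seconds / race_seconds
print(f"{relative_effect:.6f}  ({100 * relative_effect:.4f}%)")
```

The result, about 0.0093%, is indeed below 0.01%.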

You might be better off eating the jam.

Here is a very small selection of the many excellent accounts of cupping on the web.

There have been many good blogs. The mainstream media have, on the whole, been dire. Here are three that I like:


In July 2016, Orac posted "What’s the harm? Cupping edition" on ScienceBlogs. He used his expertise as a surgeon to explain the appalling wounds that can be produced by excessive cupping.

Photo from news.com.au

Timothy Caulfield wrote "Olympic debunk!". He’s Chair in Health Law and Policy at the University of Alberta, and the author of Is Gwyneth Paltrow Wrong About Everything?

“The Olympics are a wonderful celebration of athletic performance. But they have also become an international festival of sports pseudoscience. It will take an Olympic–sized effort to fight this bunk and bring a win to the side of evidence-based practice.”

Jennifer Raff wrote "Pseudoscience is common among elite athletes outside of the Olympics too…and it makes me furious". She works on the genomes of modern and ancient people at the University of Kansas, and, as though that were not a full-time job for most people, she writes blogs, books and she’s also "training (and occasionally competing) in Muay Thai, boxing, BJJ, and MMA".

"I’m completely unsurprised to find that pseudoscience is common among the elite athletes competing in the Olympics. I’ve seen similar things rampant in the combat sports world as well."

What she writes makes perfect sense. Just don’t bother with the comments section, which is littered with Trump-like post-factual comments from anonymous conspiracy theorists.

Follow-up

Of all types of alternative medicine, acupuncture is the one that has received the most approval from regular medicine. The benefit of that is that it’s been tested more thoroughly than most others. The result is now clear. It doesn’t work. See the evidence in Acupuncture is a theatrical placebo.

This blog has documented many cases of misreported tests of acupuncture, often from people who have a financial interest in selling it. Perhaps the most egregious spin came from the University of Exeter. It was published in a normal journal, and endorsed by the journal’s editor, despite showing clearly that acupuncture didn’t even have much placebo effect.

Acupuncture got a boost in 2009 from, of all unlikely sources, the National Institute for Health and Care Excellence (NICE). NICE’s judgements of the benefit/cost ratio of treatments are usually very good. But the guidance group that they assembled to judge treatments for low back pain was atypically incompetent when it came to assessment of evidence. They recommended acupuncture as one option. At the time I posted “NICE falls for Bait and Switch by acupuncturists and chiropractors: it has let down the public and itself”. That was soon followed by two more posts:

“NICE fiasco, part 2. Rawlins should withdraw guidance and start again”,

and

“The NICE fiasco, Part 3. Too many vested interests, not enough honesty”.

At the time, NICE was being run by Michael Rawlins, an old friend. No doubt he was unaware of the bad guidance until it was too late and he felt obliged to defend it.

Although the 2009 guidance referred only to low back pain, it gave an opening for acupuncturists to penetrate the NHS. Like all quacks, they are experts at bait and switch. The penetration of quackery was exacerbated by the privatisation of physiotherapy services to organisations like Connect Physical Health, which have little regard for evidence but a good eye for sales. If you think that’s an exaggeration, read "Connect Physical Health sells quackery to NHS".

When David Haslam took over the reins at NICE, I was optimistic that the question would be revisited (it turned out that he was aware of this blog). I was not disappointed. This time the guidance group had much more critical members.

The new draft guidance on low back pain was released on 24 March 2016. The final guidance will not appear until September 2016, but last time the final version didn’t differ much from the draft.

Despite modern imaging methods, it still isn’t possible to pinpoint the precise cause of low back pain (LBP) so diagnoses are lumped together as non-specific low back pain (NSLBP).

The summary guidance is explicit.

“1.2.8 Do not offer acupuncture for managing non-specific low back pain with or without sciatica.”

The evidence is summarised in section 13.6 of the main report (page 493). There is a long list of other proposed treatments that are not recommended.

Because low back pain is so common, and so difficult to treat, many treatments have been proposed. Many of them, including acupuncture, have proved to be clutching at straws. It’s to the great credit of the new guidance group that they have resisted that temptation.

Among the "do not offer" treatments are

- imaging (except in specialist setting)

- belts or corsets

- foot orthotics

- acupuncture

- ultrasound

- TENS or PENS

- opioids (for acute or chronic LBP)

- antidepressants (SSRI and others)

- anticonvulsants

- spinal injections

- spinal fusion for NSLBP (except as part of a randomised controlled trial)

- disc replacement

At first sight, the new guidance looks like an excellent clear-out of the myths that surround the treatment of low back pain.

The positive recommendations that are made are all for things that have modest effects (at best). For example “Consider a group exercise programme”, and “Consider manipulation, mobilisation”. The use of the word “consider”, rather than “offer”, seems to be NICE-speak: an implicit suggestion that it doesn’t work very well. My only criticism of the report is that it doesn’t say sufficiently bluntly that non-specific low back pain is largely an unsolved problem. Most of what’s seen is probably a result of that most deceptive phenomenon, regression to the mean.

One pain specialist put it to me thus. “Think of the billions spent on back pain research over the years in order to reach the conclusion that nothing much works – shameful really.” Well perhaps not shameful: it isn’t for want of trying. It’s just a very difficult problem. But pretending that there are solutions doesn’t help anyone.

Follow-up

This post arose from a recent meeting at the Royal Society. It was organised by Julie Maxton to discuss the application of statistical methods to legal problems. I found myself sitting next to an Appeal Court Judge who wanted more explanation of the ideas. Here it is.

Some preliminaries

The papers that I wrote recently were about the problems associated with the interpretation of screening tests and tests of significance. They don’t allude to legal problems explicitly, though the problems are the same in principle. They are all open access. The first appeared in 2014:

http://rsos.royalsocietypublishing.org/content/1/3/140216

Since the first version of this post, March 2016, I’ve written two more papers and some popular pieces on the same topic. There’s a list of them at http://www.onemol.org.uk/?page_id=456.

I also made a video for YouTube of a recent talk.

In these papers I was interested in the false positive risk (also known as the false discovery rate) in tests of significance. It turned out to be alarmingly large. That has serious consequences for the credibility of the scientific literature. In legal terms, the false positive risk is the proportion of cases in which a suspect who is found guilty on the basis of the evidence is in fact innocent. That has even more serious consequences.

Although most of what I want to say can be said without much algebra, it would perhaps be worth getting two things clear before we start.

The rules of probability

(1) To get any understanding, it’s essential to understand the rules of probability, and, in particular, the idea of conditional probabilities. One source would be my old book, Lectures on Biostatistics (now free). The account on pages 19 to 24 gives a pretty simple (I hope) description of what’s needed. Briefly, a vertical line is read as “given”, so Prob(evidence | not guilty) means the probability that the evidence would be observed given that the suspect was not guilty.

(2) Another potential confusion in this area is the relationship between odds and probability. The relationship between the probability of an event occurring, and the odds on the event can be illustrated by an example. If the probability of being right-handed is 0.9, then the probability of not being right-handed is 0.1. That means that 9 people out of 10 are right-handed, and one person in 10 is not. In other words for every person who is not right-handed there are 9 who are right-handed. Thus the odds that a randomly-selected person is right-handed are 9 to 1. In symbols this can be written

\[ \mathrm{probability=\frac{odds}{1 + odds}} \]

In the example, the odds on being right-handed are 9 to 1, so the probability of being right-handed is 9 / (1+9) = 0.9.

Conversely,

\[ \mathrm{odds =\frac{probability}{1 - probability}} \]

In the example, the probability of being right-handed is 0.9, so the odds of being right-handed are 0.9 / (1 – 0.9) = 0.9 / 0.1 = 9 (to 1).
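The two conversions are easy to get backwards, so here they are as a pair of small Python functions. This is a minimal sketch; the function names are illustrative and not from any library.

```python
def odds_from_probability(p: float) -> float:
    """Odds on an event with probability p: p / (1 - p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("probability must be in [0, 1)")
    return p / (1.0 - p)


def probability_from_odds(odds: float) -> float:
    """Probability of an event whose odds are `odds` (to 1): odds / (1 + odds)."""
    if odds < 0.0:
        raise ValueError("odds must be non-negative")
    return odds / (1.0 + odds)


# The right-handedness example: probability 0.9 <-> odds of 9 to 1.
print(odds_from_probability(0.9))
print(probability_from_odds(9.0))
```

Floating-point rounding means the first line may print a value a hair away from 9, but the two functions are exact inverses in principle.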

With these preliminaries out of the way, we can proceed to the problem.

The legal problem

The first problem lies in the fact that the answer depends on Bayes’ theorem. Although that was published in 1763, statisticians are still arguing about how it should be used to this day. In fact whenever it’s mentioned, statisticians tend to revert to internecine warfare, and forget about the user.

Bayes’ theorem can be stated in words as follows

\[ \mathrm{\text{posterior odds ratio} = \text{prior odds ratio} \times \text{likelihood ratio}} \]

“Posterior odds ratio” means the odds that the person is guilty, relative to the odds that they are innocent, in the light of the evidence, and that’s clearly what one wants to know. The “prior odds” are the odds that the person was guilty before any evidence was produced, and that is the really contentious bit.

Sometimes the need to specify the prior odds has been circumvented by using the likelihood ratio alone, but, as shown below, that isn’t a good solution.

The analogy with the use of screening tests to detect disease is illuminating.

Screening tests

A particularly straightforward application of Bayes’ theorem is in screening people to see whether or not they have a disease. It turns out, in many cases, that screening gives a lot more wrong results (false positives) than right ones. That’s especially true when the condition is rare (the prior odds that an individual suffers from the condition are small). The process of screening for disease has a lot in common with the screening of suspects for guilt. It matters because false positives in court are disastrous.

The screening problem is dealt with in sections 1 and 2 of my paper, or on this blog (and here). A bit of animation helps the slides, so you may prefer the YouTube version.
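To see how a rare condition produces mostly false positives, here is a minimal Python sketch of expected screening outcomes. The prevalence (1%), sensitivity (80%) and specificity (95%) are hypothetical values chosen for illustration, not figures for any particular test.

```python
def screening_outcomes(n_people: int, prevalence: float,
                       sensitivity: float, specificity: float) -> tuple[float, float]:
    """Expected numbers of true and false positives when screening n_people."""
    n_ill = n_people * prevalence
    n_well = n_people - n_ill
    true_positives = n_ill * sensitivity             # ill people correctly flagged
    false_positives = n_well * (1.0 - specificity)   # well people wrongly flagged
    return true_positives, false_positives


# Hypothetical test: 1% prevalence, 80% sensitivity, 95% specificity.
tp, fp = screening_outcomes(10_000, prevalence=0.01, sensitivity=0.80, specificity=0.95)
false_positive_fraction = fp / (tp + fp)
print(f"true positives {tp:.0f}, false positives {fp:.0f}, "
      f"fraction of positives that are wrong {false_positive_fraction:.2f}")
```

With these assumed numbers, 80 of the 10,000 people are correctly flagged but about 495 healthy people are flagged too, so roughly 86% of positive results are wrong, even though the test is "95% specific".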

The rest of my paper applies similar ideas to tests of significance. In that case the prior probability is the probability that there is in fact a real effect, or, in the legal case, the probability that the suspect is guilty before any evidence has been presented. This is the slippery bit of the problem both conceptually, and because it’s hard to put a number on it.

But the examples below show that to ignore it, and to use the likelihood ratio alone, could result in many miscarriages of justice.

In the discussion of tests of significance, I took the view that it is not legitimate (in the absence of good data to the contrary) to assume any prior probability greater than 0.5. To do so would presume you know the answer before any evidence was presented. In the legal case a prior probability of 0.5 would mean assuming that there was a 50:50 chance that the suspect was guilty before any evidence was presented. A 50:50 probability of guilt before the evidence is known corresponds to a prior odds ratio of 1 (to 1). If that were true, the likelihood ratio would be a good way to represent the evidence, because the posterior odds ratio would be equal to the likelihood ratio.

It could be argued that 50:50 represents some sort of equipoise, but in the example below it is clearly too high, and if it is less than 50:50, use of the likelihood ratio runs a real risk of convicting an innocent person.
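The dependence on the prior can be made concrete with the odds form of Bayes’ theorem. In this Python sketch the likelihood ratio of 20 is a hypothetical value chosen for illustration; the point is that the same evidence gives very different probabilities of guilt depending on the prior, and only a prior of 0.5 (prior odds of 1) makes the posterior odds equal to the likelihood ratio.

```python
def posterior_probability(prior_probability: float, likelihood_ratio: float) -> float:
    """Bayes' theorem in odds form: posterior odds = prior odds x likelihood ratio."""
    prior_odds = prior_probability / (1.0 - prior_probability)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


likelihood_ratio = 20.0  # hypothetical strength of the evidence
for prior in (0.5, 0.1, 0.01, 0.001):
    print(f"prior {prior:<6} -> posterior {posterior_probability(prior, likelihood_ratio):.3f}")
```

With a prior of 0.5 the posterior probability is 20/21, about 0.95; with a prior of 0.01 the same evidence gives only about 0.17.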

The following example is modified slightly from section 3 of a book chapter by Mortera and Dawid (2008). Philip Dawid is an eminent statistician who has written a lot about probability and the law, and he’s a member of the legal group of the Royal Statistical Society.

My version of the example removes most of the algebra, and uses different numbers.

Example: The island problem

The “island problem” (Eggleston 1983, Appendix 3) is an imaginary example that provides a good illustration of the uses and misuses of statistical logic in forensic identification.

A murder has been committed on an island, cut off from the outside world, on which 1001 (= N + 1) inhabitants remain. The forensic evidence at the scene consists of a measurement, x, on a “crime trace” characteristic, which can be assumed to come from the criminal. It might, for example, be a bit of the DNA sequence from the crime scene.

Say, for the sake of example, that the probability of a random member of the population having characteristic x is P = 0.004 (i.e. 0.4%), so the probability that a random member of the population does not have the characteristic is 1 – P = 0.996. The mainland police arrive and arrest a random islander, Jack. It is found that Jack matches the crime trace. There is no other relevant evidence.

How should this match evidence be used to assess the claim that Jack is the murderer? We shall consider three arguments that have been used to address this question. The first is wrong. The second and third are right. (For illustration, we have taken N = 1000, P = 0.004.)

(1) Prosecutor’s fallacy

Prosecuting counsel, arguing according to his favourite fallacy, asserts that the probability that Jack is guilty is 1 – P, or 0.996, and that this proves guilt “beyond a reasonable doubt”.

The probability that Jack would show characteristic x if he were not guilty would be 0.4%, i.e. Prob(Jack has x | not guilty) = 0.004. The prosecutor treats this as though it were the probability that Jack is not guilty, given that he has x, and so concludes that Prob(Jack is guilty | Jack has x) = 1 – 0.004 = 0.996.

But 0.004 is Prob(evidence | not guilty), which is not what we want. What we need is the probability that Jack is guilty, given the evidence, Prob(Jack is guilty | Jack has characteristic x).

To mistake the one for the other is the prosecutor’s fallacy, or the error of the transposed conditional.

Dawid gives an example that makes the distinction clear.

“As an analogy to help clarify and escape this common and seductive confusion, consider the difference between “the probability of having spots, if you have measles”, which is close to 1, and “the probability of having measles, if you have spots”, which, in the light of the many alternative possible explanations for spots, is much smaller.”

(2) Defence counter-argument

Counsel for the defence points out that, while the guilty party must have characteristic x, he isn’t the only person on the island to have this characteristic. Among the remaining N = 1000 innocent islanders, 0.4% have characteristic x, so the number who have it will be NP = 1000 × 0.004 = 4. Hence the total number of islanders that have this characteristic must be 1 + NP = 5. The match evidence means that Jack must be one of these 5 people, but does not otherwise distinguish him from any of the other members of it. Since just one of these is guilty, the probability that this is Jack is thus 1/5, or 0.2: very far from being “beyond all reasonable doubt”.
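The defence counter-argument can be checked by brute force. The Python sketch below assumes, as the example does, that the arrested islander is chosen at random and that each innocent islander independently has x with probability P; the function name, trial count and seed are arbitrary illustrative choices. Among the simulated arrests that produce a match, the fraction in which the arrested person is guilty should come out near the defence’s 1/(1 + NP).

```python
import random


def simulate_island(n_innocent: int = 1000, p_trait: float = 0.004,
                    n_trials: int = 500_000, seed: int = 1) -> float:
    """Monte Carlo estimate of Prob(arrested islander is guilty | he matches the trace).

    In each trial there is one culprit among n_innocent + 1 islanders and the
    police arrest one islander at random. The culprit always has the trait;
    an innocent islander has it with probability p_trait.
    """
    rng = random.Random(seed)
    matches = 0
    guilty_matches = 0
    for _ in range(n_trials):
        # Label the culprit as islander 0; the arrest is uniform over all islanders.
        guilty = rng.randrange(n_innocent + 1) == 0
        has_trait = guilty or rng.random() < p_trait
        if has_trait:
            matches += 1
            guilty_matches += guilty   # True counts as 1
    return guilty_matches / matches


N, P = 1000, 0.004
print("defence count:", 1 / (1 + N * P))
print("simulation:   ", simulate_island(N, P))
```

The simulation never uses Bayes’ theorem explicitly, so its agreement with 0.2 (within sampling error) is an independent check on the counting argument.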

(3) Bayesian argument

The probability of having characteristic x (the evidence) would be Prob(evidence | guilty) = 1 if Jack were guilty, but if Jack were not guilty it would be 0.4%, i.e. Prob(evidence | not guilty) = P. Hence the likelihood ratio in favour of guilt, on the basis of the evidence, is

\[ LR=\frac{\text{Prob(evidence } | \text{ guilty})}{\text{Prob(evidence }|\text{ not guilty})} = \frac{1}{P}=250 \]

In words, the evidence would be 250 times more probable if Jack were guilty than if he were innocent. While this seems strong evidence in favour of guilt, it still does not tell us what we want to know, namely the probability that Jack is guilty in the light of the evidence: Prob(guilty | evidence), or, equivalently, the odds ratio (the odds of guilt relative to the odds of innocence, given the evidence).

To get that we must multiply the likelihood ratio by the prior odds on guilt, i.e. the odds on guilt before any evidence is presented. It’s often hard to get a numerical value for this. But in our artificial example, it is possible. We can argue that, in the absence of any other evidence, Jack is no more and no less likely to be the culprit than any other islander, so that the prior probability of guilt is 1/(N + 1), corresponding to prior odds on guilt of 1/N.

We can now apply Bayes’ theorem to obtain the posterior odds on guilt:

\[ \text {posterior odds} = \text{prior odds} \times LR = \left ( \frac{1}{N}\right ) \times \left ( \frac{1}{P} \right )= 0.25 \]

Thus the odds of guilt in the light of the evidence are 4 to 1 against. The corresponding posterior probability of guilt is

\[ Prob( \text{guilty } | \text{ evidence})= \frac{1}{1+NP}= \frac{1}{1+4}=0.2 \]

This is quite small: certainly no basis for a conviction.
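The whole Bayesian calculation can be done in exact arithmetic with Python’s standard `fractions` module, which avoids any rounding in the numbers. This is a sketch using the example’s N = 1000 and P = 0.004; the variable names are illustrative.

```python
from fractions import Fraction

N = 1000                 # innocent islanders
P = Fraction(4, 1000)    # frequency of characteristic x among innocent people

likelihood_ratio = 1 / P               # Prob(x | guilty) / Prob(x | not guilty) = 1 / P
prior_odds = Fraction(1, N)            # prior probability 1/(N+1) -> prior odds 1/N
posterior_odds = prior_odds * likelihood_ratio
posterior_probability = posterior_odds / (1 + posterior_odds)

print(likelihood_ratio, posterior_odds, posterior_probability)  # → 250 1/4 1/5
```

The likelihood ratio of 250 looks impressive, but after multiplying by the prior odds of 1/1000 the posterior odds are 1/4, i.e. 4 to 1 against guilt, and the posterior probability is 1/5.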

This result is exactly the same as that given by the ‘Defence counter-argument’ (see above). That argument was simpler than the Bayesian argument. It didn’t explicitly use Bayes’ theorem, though it was implicit in the argument. The advantage of the defence argument is that it looks simpler. The advantage of the explicitly Bayesian argument is that it makes the assumptions clearer.

In summary, the prosecutor’s fallacy suggested, quite wrongly, that the probability that Jack was guilty was 0.996. The likelihood ratio was 250, which also seems to suggest guilt, but it doesn’t give us the probability that we need. In stark contrast, the defence counsel’s argument, and equivalently, the Bayesian argument, suggested that the probability of Jack’s guilt was 0.2, or odds of 4 to 1 against guilt. The potential for wrong conviction is obvious.

Conclusions

Although this argument uses an artificial example that is simpler than most real cases, it illustrates some important principles.

(1) The likelihood ratio is not a good way to evaluate evidence, unless there is good reason to believe that there is a 50:50 chance that the suspect is guilty before any evidence is presented.

(2) In order to calculate what we need, Prob(guilty | evidence), you need to give numerical values of how common the possession of characteristic x (the evidence) is in the whole population of possible suspects (a reasonable value might be estimated in the case of DNA evidence). We also need to know the size of the population. In the island example, this was 1000, but in general that would be hard to answer, and any answer might well be contested by an advocate who understood the problem.

These arguments lead to four conclusions.

(1) If a lawyer uses the prosecutor’s fallacy, (s)he should be told that it’s nonsense.

(2) If a lawyer advocates conviction on the basis of the likelihood ratio alone, (s)he should be asked to justify the implicit assumption that there was a 50:50 chance that the suspect was guilty before any evidence was presented.

(3) If a lawyer uses the defence counter-argument, or, equivalently, the version of the Bayesian argument given here, (s)he should be asked to justify the estimates of the numerical value given to the prevalence of x in the population (P) and the numerical value of the size of this population (N). A range of values of P and N could be used to provide a range of possible values of the final result, the probability that the suspect is guilty in the light of the evidence.

(4) The example that was used is the simplest possible case. For more complex cases it would be advisable to ask a professional statistician. Some reliable people can be found at the Royal Statistical Society’s section on Statistics and the Law.

If you do ask a professional statistician, and they present you with a lot of mathematics, you should still ask these questions about precisely what assumptions were made, and ask for an estimate of the range of uncertainty in the value of Prob(guilty | evidence) which they produce.
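Point (3) suggests reporting a range rather than a single number. Under the island model’s assumptions (a uniform prior over N + 1 possible suspects, and a culprit who certainly has x), the probability of guilt is 1/(1 + NP), so a small table over plausible values is easy to produce. The ranges of N and P below are hypothetical, chosen only to show how sensitive the answer is.

```python
def prob_guilty(n_population: int, p_trait: float) -> float:
    """Island-model posterior Prob(guilty | match) = 1 / (1 + N * P).

    Assumes a uniform prior over n_population + 1 possible suspects and that the
    culprit certainly carries the trait.
    """
    return 1.0 / (1.0 + n_population * p_trait)


# Hypothetical ranges that an advocate might contest.
for n in (100, 1_000, 10_000):
    row = [f"{prob_guilty(n, p):.3f}" for p in (0.0001, 0.001, 0.004)]
    print(f"N = {n:>6}:", *row)
```

The same match evidence can give a probability of guilt anywhere from near 1 to well below 0.1, depending on values of N and P that may be hard to pin down.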

Postscript: real cases

Another paper by Philip Dawid, Statistics and the Law, is interesting because it discusses some recent real cases: for example the wrongful conviction of Sally Clark because of the wrong calculation of the statistics for Sudden Infant Death Syndrome.

On Monday 21 March, 2016, Dr Waney Squier was struck off the medical register by the General Medical Council because they claimed that she misrepresented the evidence in cases of Shaken Baby Syndrome (SBS).

This verdict was questioned by many lawyers, including Michael Mansfield QC and Clive Stafford Smith, in a letter, “General Medical Council behaving like a modern inquisition”.

The latter has already written “This shaken baby syndrome case is a dark day for science – and for justice”.

The evidence for SBS is based on the existence of a triad of signs (retinal bleeding, subdural bleeding and encephalopathy). It seems likely that these signs will be present if a baby has been shaken, i.e. Prob(triad | shaken) is high. But this is irrelevant to the question of guilt. For that we need Prob(shaken | triad). As far as I know, the data to calculate what matters are just not available.

It seems that the GMC may have fallen for the prosecutor’s fallacy. Or perhaps the establishment won’t tolerate arguments. One is reminded, once again, of the definition of clinical experience: “Making the same mistakes with increasing confidence over an impressive number of years.” (from A Sceptic’s Medical Dictionary by Michael O’Donnell, BMJ Publishing, 1997).

Appendix (for nerds). Two forms of Bayes’ theorem

The form of Bayes’ theorem given at the start is expressed in terms of odds ratios. The same rule can be written in terms of probabilities. (This was the form used in the appendix of my paper.) For those interested in the details, it may help to define explicitly these two forms.

In terms of probabilities, the probability of guilt in the light of the evidence (what we want) is

\[ \text{Prob(guilty } | \text{ evidence}) = \text{Prob(evidence } | \text{ guilty}) \frac{\text{Prob(guilty })}{\text{Prob(evidence })} \]

In terms of odds ratios, the odds ratio on guilt, given the evidence (which is what we want) is

\[ \frac{\text{Prob(guilty } | \text{ evidence})}{\text{Prob(not guilty } | \text{ evidence})} = \left( \frac{\text{Prob(guilty)}}{\text{Prob(not guilty)}} \right) \left( \frac{\text{Prob(evidence } | \text{ guilty})}{\text{Prob(evidence } | \text{ not guilty})} \right) \]

or, in words,

\[ \text{posterior odds of guilt } =\text{prior odds of guilt} \times \text{likelihood ratio} \]

This is the precise form of the equation that was given in words at the beginning.

A derivation of the equivalence of these two forms is sketched in a document which you can download.
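For those who would rather not download it, here is one short route to the equivalence (a sketch, which may not follow the same steps as the downloadable document). Write the probability form once for guilt and once for innocence:

\[ \text{Prob(guilty } | \text{ evidence}) = \frac{\text{Prob(evidence } | \text{ guilty}) \, \text{Prob(guilty)}}{\text{Prob(evidence)}} \]

\[ \text{Prob(not guilty } | \text{ evidence}) = \frac{\text{Prob(evidence } | \text{ not guilty}) \, \text{Prob(not guilty)}}{\text{Prob(evidence)}} \]

Dividing the first equation by the second, Prob(evidence) appears in both denominators and cancels, which leaves exactly the odds form: posterior odds of guilt = prior odds of guilt × likelihood ratio.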

Follow-up

23 March 2016

It’s worth pointing out the following connection between the legal argument (above) and tests of significance.

(1) The likelihood ratio works only when there is a 50:50 chance that the suspect is guilty before any evidence is presented (so the prior probability of guilt is 0.5, or, equivalently, the prior odds ratio is 1).

(2) The false positive rate in significance testing is close to the P value only when the prior probability of a real effect is 0.5, as shown in section 6 of the P value paper.

However, there is another twist in the significance testing argument. The statement above is right if we take as a positive result any P < 0.05. If we want to interpret a value of P = 0.047 in a single test, then, as explained in section 10 of the P value paper, we should restrict attention to only those tests that give P close to 0.047. When that is done the false positive rate is 26% even when the prior is 0.5 (and much bigger than 30% if the prior is smaller; see extra Figure). That justifies the assertion that if you claim to have discovered something because you have observed P = 0.047 in a single test then there is a chance of at least 30% that you’ll be wrong. Is there, I wonder, any legal equivalent of this argument?


“Statistical regression to the mean predicts that patients selected for abnormalcy will, on the average, tend to improve. We argue that most improvements attributed to the placebo effect are actually instances of statistical regression.”

“Thus, we urge caution in interpreting patient improvements as causal effects of our actions and should avoid the conceit of assuming that our personal presence has strong healing powers.”

In 1955, Henry Beecher published "The Powerful Placebo". I was in my second undergraduate year when it appeared. And for many decades after that I took it literally. Beecher looked at 15 studies and found that, on average, 35% of patients got "satisfactory relief" when given a placebo. This number got embedded in pharmacological folklore. He also mentioned that the relief provided by placebo was greatest in patients who were most ill.

Consider the common experiment in which a new treatment is compared with a placebo, in a double-blind randomised controlled trial (RCT). It’s common to call the responses measured in the placebo group the placebo response. But that is very misleading, and here’s why.

The responses seen in the group of patients that are treated with placebo arise from two quite different processes. One is the genuine psychosomatic placebo effect. This effect gives genuine (though small) benefit to the patient. The other contribution comes from the get-better-anyway effect. This is a statistical artefact and it provides no benefit whatsoever to patients. There is now increasing evidence that the latter effect is much bigger than the former.

How can you distinguish between real placebo effects and get-better-anyway effect?

The only way to measure the size of genuine placebo effects is to compare in an RCT the effect of a dummy treatment with the effect of no treatment at all. Most trials don’t have a no-treatment arm, but enough do that estimates can be made. For example, a Cochrane review by Hróbjartsson & Gøtzsche (2010) looked at a wide variety of clinical conditions. Their conclusion was:

“We did not find that placebo interventions have important clinical effects in general. However, in certain settings placebo interventions can influence patient-reported outcomes, especially pain and nausea, though it is difficult to distinguish patient-reported effects of placebo from biased reporting.”

In some cases, the placebo effect is barely there at all. In a non-blind comparison of acupuncture and no acupuncture, the responses were essentially indistinguishable (despite what the authors and the journal said). See "Acupuncturists show that acupuncture doesn’t work, but conclude the opposite"

So the placebo effect, though a real phenomenon, seems to be quite small. In most cases it is so small that it would be barely perceptible to most patients. Most of the reason why so many people think that medicines work when they don’t is not the placebo response but a statistical artefact.

Regression to the mean is a potent source of deception

The get-better-anyway effect has a technical name, regression to the mean. It has been understood since Francis Galton described it in 1886 (see Senn, 2011 for the history). It is a statistical phenomenon, and it can be treated mathematically (see references, below). But when you think about it, it’s simply common sense.

You tend to go for treatment when your condition is bad, and when you are at your worst, then a bit later you’re likely to be better. The great biologist Peter Medawar commented thus:


"If a person is (a) poorly, (b) receives treatment intended to make him better, and (c) gets better, then no power of reasoning known to medical science can convince him that it may not have been the treatment that restored his health"

(Medawar, P.B. (1969:19). The Art of the Soluble: Creativity and originality in science. Penguin Books: Harmondsworth).

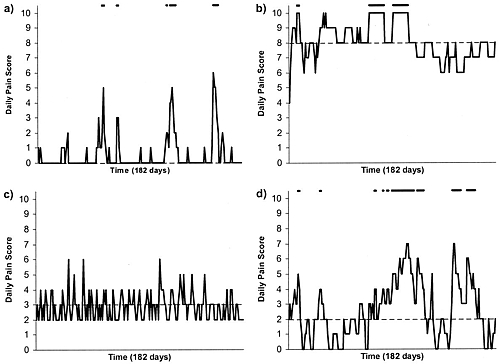

This is illustrated beautifully by measurements made by McGorry et al. (2000). Patients with low back pain recorded their pain (on a 10 point scale) every day for 5 months (they were allowed to take analgesics ad lib).

The results for four patients are shown in their Figure 2. On average they stay fairly constant over five months, but they fluctuate enormously, with different patterns for each patient. Painful episodes that last for 2 to 9 days are interspersed with periods of lower pain or none at all. It is very obvious that if these patients had gone for treatment at the peak of their pain, then a while later they would feel better, even if they were not actually treated. And if they had been treated, the treatment would have been declared a success, despite the fact that the patient derived no benefit whatsoever from it. This entirely artefactual benefit would be the biggest for the patients that fluctuate the most (e.g. those in panels a and d of the Figure).

Figure 2 from McGorry et al., 2000. Examples of daily pain scores over a 6-month period for four participants. Note: Dashes of different lengths at the top of a figure designate an episode and its duration.
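The "go for treatment at the peak" effect is easy to reproduce with made-up numbers. This Python sketch is a toy model, not McGorry et al.’s data: each hypothetical patient has a stable average pain level (drawn uniformly between 3 and 6 on a 0 to 10 scale) with independent Gaussian day-to-day fluctuations (SD 2). Real pain is episodic and correlated from day to day, so the numbers are illustrative only. Nobody is treated; patients simply "enrol" on a day when their pain is 7 or more and are scored again later.

```python
import random


def regression_to_mean_demo(n_patients: int = 10_000, threshold: float = 7.0,
                            seed: int = 2) -> tuple[float, float]:
    """Simulate untreated patients whose pain fluctuates around a stable personal mean.

    Pain on any day = personal mean + independent Gaussian noise, clipped to 0..10.
    Patients enrol only on a day when pain >= threshold; a follow-up score is
    drawn later from the same distribution. No treatment is given at all.
    Returns (mean pain at enrolment, mean pain at follow-up) for those enrolled.
    """
    rng = random.Random(seed)

    def daily_pain(mu: float) -> float:
        return min(10.0, max(0.0, rng.gauss(mu, 2.0)))

    at_entry, at_follow_up = [], []
    for _ in range(n_patients):
        mu = rng.uniform(3.0, 6.0)          # this patient's long-run average pain
        entry = daily_pain(mu)
        if entry >= threshold:               # seek treatment only on a bad day
            at_entry.append(entry)
            at_follow_up.append(daily_pain(mu))
    return sum(at_entry) / len(at_entry), sum(at_follow_up) / len(at_follow_up)


before, after = regression_to_mean_demo()
print(f"enrolment {before:.2f} -> follow-up {after:.2f} with no treatment")
```

The enrolled group "improves" by a couple of points on average although nothing was done to them: selecting people at their worst guarantees it.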

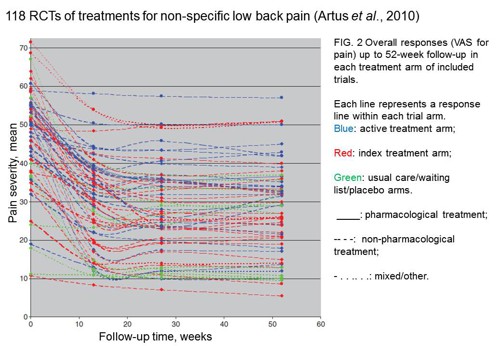

The effect is illustrated well by an analysis of 118 trials of treatments for non-specific low back pain (NSLBP), by Artus et al., (2010). The time course of pain (rated on a 100 point visual analogue pain scale) is shown in their Figure 2. There is a modest improvement in pain over a few weeks, but this happens regardless of what treatment is given, including no treatment whatsoever.

FIG. 2 Overall responses (VAS for pain) up to 52-week follow-up in each treatment arm of included trials. Each line represents a response line within each trial arm. Red: index treatment arm; Blue: active treatment arm; Green: usual care/waiting list/placebo arms. Solid line: pharmacological treatment; dashed line: non-pharmacological treatment; dotted line: mixed/other.

The authors comment

"symptoms seem to improve in a similar pattern in clinical trials following a wide variety of active as well as inactive treatments.", and "The common pattern of responses could, for a large part, be explained by the natural history of NSLBP".

In other words, none of the treatments work.

This paper was brought to my attention through the blog run by the excellent physiotherapist, Neil O’Connell. He comments

"If this finding is supported by future studies it might suggest that we can’t even claim victory through the non-specific effects of our interventions such as care, attention and placebo. People enrolled in trials for back pain may improve whatever you do. This is probably explained by the fact that patients enrol in a trial when their pain is at its worst which raises the murky spectre of regression to the mean and the beautiful phenomenon of natural recovery."

O’Connell has discussed the matter in a recent paper, O’Connell (2015), from the point of view of manipulative therapies. That’s an area where there has been resistance to doing proper RCTs, with many people saying that it’s better to look at “real world” outcomes. This usually means that you look at how a patient changes after treatment. The hazards of this procedure are obvious from Artus et al., Fig 2, above. It maximises the risk of being deceived by regression to the mean. As O’Connell commented

"Within-patient change in outcome might tell us how much an individual’s condition improved, but it does not tell us how much of this improvement was due to treatment."

In order to eliminate this effect it’s essential to do a proper RCT with control and treatment groups tested in parallel. When that’s done, the control group shows the same regression to the mean as the treatment group, and any additional response in the latter can confidently be attributed to the treatment. Anything short of that is whistling in the wind.
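A minimal simulation can make this concrete. It rests on invented assumptions, not data: each patient has a stable underlying pain level plus independent day-to-day noise, patients are enrolled only when their score on the day is high, and the "treatment" is completely inert. The means, noise standard deviations and entry threshold are all hypothetical. Both arms should show a large average improvement from baseline, while the difference between the arms stays close to zero.

```python
import random
import statistics

def simulate_trial(n_patients=2000, entry_threshold=70.0, seed=1):
    """Hypothetical RCT of an inert treatment, with entry only on a bad day."""
    rng = random.Random(seed)
    change_treated, change_control = [], []
    while len(change_treated) + len(change_control) < n_patients:
        true_level = rng.gauss(50, 10)             # patient's long-run average pain
        baseline = true_level + rng.gauss(0, 15)   # score on the day they present
        if baseline < entry_threshold:
            continue                               # only bad days lead to enrolment
        follow_up = true_level + rng.gauss(0, 15)  # later score; treatment does nothing
        change = follow_up - baseline
        # Randomise to treatment or control with equal probability
        if rng.random() < 0.5:
            change_treated.append(change)
        else:
            change_control.append(change)
    return statistics.mean(change_treated), statistics.mean(change_control)

treated, control = simulate_trial()
print(f"mean change from baseline, treated: {treated:.1f}")
print(f"mean change from baseline, control: {control:.1f}")
print(f"difference (treated - control):     {treated - control:.1f}")
```

Looking only at the treated arm, the inert treatment would seem to work well; the parallel control group exposes that improvement as regression to the mean. Increasing the day-to-day noise should make the spurious improvement in both arms larger.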

Needless to say, the suboptimal methods are most popular in areas where real effectiveness is small or non-existent. This, sad to say, includes low back pain. It also includes just about every treatment that comes under the heading of alternative medicine. Although these problems have been understood for over a century, it remains true that

"It is difficult to get a man to understand something, when his salary depends upon his not understanding it."

Upton Sinclair (1935)

Responders and non-responders?

One excuse that’s commonly used when a treatment shows only a small effect in proper RCTs is to assert that the treatment actually has a good effect, but only in a subgroup of patients ("responders") while others don’t respond at all ("non-responders"). For example, this argument is often used in studies of anti-depressants and of manipulative therapies. And it’s universal in alternative medicine.

There’s a striking similarity between the narrative used by homeopaths and that used by those who are struggling to treat depression. The pill may not work for many weeks. If the first sort of pill doesn’t work try another sort. You may get worse before you get better. One is reminded, inexorably, of Voltaire’s aphorism "The art of medicine consists in amusing the patient while nature cures the disease".

There is only a handful of cases in which a clear distinction can be made between responders and non-responders. Most often what’s observed is a smear of different responses to the same treatment, and the greater the variability, the greater the chance of being deceived by regression to the mean.

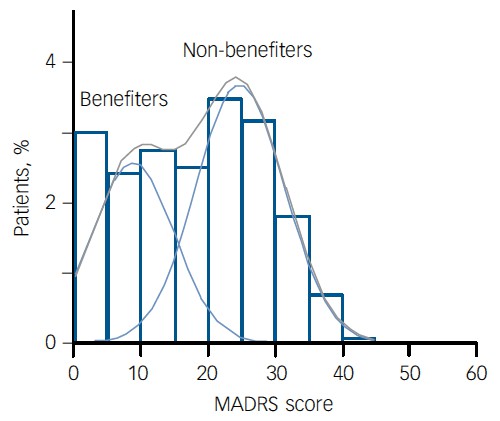

For example, Thase et al., (2011) looked at responses to escitalopram, an SSRI antidepressant. They attempted to divide patients into responders and non-responders. An example (Fig 1a in their paper) is shown.

The evidence for such a bimodal distribution is certainly very far from obvious. The observations are just smeared out. Nonetheless, the authors conclude

"Our findings indicate that what appears to be a modest effect in the grouped data – on the boundary of clinical significance, as suggested above – is actually a very large effect for a subset of patients who benefited more from escitalopram than from placebo treatment. "

I guess that interpretation could be right, but it seems more likely to be a marketing tool. Before you read the paper, check the authors’ conflicts of interest.

The bottom line is that analyses that divide patients into responders and non-responders are reliable only if that can be done before the trial starts. Retrospective analyses are unreliable and unconvincing.

Some more reading

Senn (2011) provides an excellent introduction (and some interesting history). The subtitle is

"Here Stephen Senn examines one of Galton’s most important statistical legacies – one that is at once so trivial that it is blindingly obvious, and so deep that many scientists spend their whole career being fooled by it."

The examples in this paper are extended in Senn (2009), “Three things that every medical writer should know about statistics”. The three things are regression to the mean, the error of the transposed conditional and individual response.

You can read slightly more technical accounts of regression to the mean in McDonald & Mazzuca (1983) "How much of the placebo effect is statistical regression" (two quotations from this paper opened this post), and in Stephen Senn (2015) "Mastering variation: variance components and personalised medicine". In 1988 Senn published some corrections to the maths in McDonald & Mazzuca (1983).

The trials that were used by Hróbjartsson & Gøtzsche (2010) to investigate the comparison between placebo and no treatment were looked at again by Howick et al., (2013), who found that in many of them the difference between treatment and placebo was also small. Most of the treatments did not work very well.

Regression to the mean is not just a medical deceiver: it’s everywhere

Although this post has concentrated on deception in medicine, it’s worth noting that the phenomenon of regression to the mean can cause wrong inferences in almost any area where you look at change from baseline. A classic example concerns the effectiveness of speed cameras. They tend to be installed after a spate of accidents, and if the accident rate is particularly high in one year it is likely to be lower the next year, regardless of whether a camera had been installed or not. To find the true reduction in accidents caused by installation of speed cameras, you would need to choose several similar sites and allocate them at random to have a camera or no camera. As in clinical trials, looking at the change from baseline can be very deceptive.
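The speed-camera case can be sketched the same way, this time with counts. In this hypothetical simulation each site has its own fixed accident rate (drawn uniformly between 2 and 6 a year, an arbitrary choice), the yearly number of accidents is Poisson, and cameras have no effect at all. Choosing the sites that had a bad first year still produces an apparent fall in the second year. The Poisson sampler is Knuth's simple multiplication method, used here only to keep the sketch within the standard library.

```python
import math
import random
import statistics

def poisson(rng, lam):
    """Draw a Poisson count by Knuth's multiplication method (fine for small lam)."""
    limit = math.exp(-lam)
    k, p = 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k

def speed_camera_sim(n_sites=5000, threshold=8, seed=2):
    """Hypothetical sites with fixed accident rates; cameras do nothing."""
    rng = random.Random(seed)
    rates = [rng.uniform(2.0, 6.0) for _ in range(n_sites)]  # true yearly rates
    year1 = [poisson(rng, r) for r in rates]
    year2 = [poisson(rng, r) for r in rates]                  # no camera effect
    # "Install a camera" wherever the first year was bad
    chosen = [i for i, n in enumerate(year1) if n >= threshold]
    before = statistics.mean(year1[i] for i in chosen)
    after = statistics.mean(year2[i] for i in chosen)
    return len(chosen), before, after

n, before, after = speed_camera_sim()
print(f"{n} sites selected: {before:.1f} accidents/yr before, {after:.1f} after")
```

Randomly allocating cameras among such sites, and comparing the two groups in the second year, would show no difference, which is the correct answer here.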

Statistical postscript

Lastly, remember that if you avoid all of these hazards of interpretation, and your test of significance gives P = 0.047, that does not mean you have discovered something. There is still a risk of at least 30% that your ‘positive’ result is a false positive. This is explained in Colquhoun (2014), "An investigation of the false discovery rate and the misinterpretation of p-values". I’ve suggested that one way to solve this problem is to use different words to describe P values: something like this.

P > 0.05: very weak evidence

P = 0.05: weak evidence, worth another look

P = 0.01: moderate evidence for a real effect

P = 0.001: strong evidence for a real effect

But notice that if your hypothesis is implausible, even these criteria are too weak. For example, if the treatment and placebo are identical (as would be the case if the treatment were a homeopathic pill) then it follows that 100% of positive tests are false positives.

Follow-up

12 December 2015

It’s worth mentioning that the question of responders versus non-responders is closely related to the classical topic of bioassays that use quantal responses. In that field it was assumed that each participant had an individual effective dose (IED). That’s reasonable for the old-fashioned LD50 toxicity test: every animal will die after a sufficiently big dose. It’s less obviously right for ED50 (effective dose in 50% of individuals). The distribution of IEDs is critical, but it has very rarely been determined. The cumulative form of this distribution is what determines the shape of the dose-response curve for fraction of responders as a function of dose. Linearisation of this curve by means of the probit transformation used to be a staple of biological assay. This topic is discussed in Chapter 10 of Lectures on Biostatistics. And you can read some of the history on my blog about Some pharmacological history: an exam from 1959.
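A small sketch shows how the IED distribution turns into a dose-response curve. It assumes, purely for illustration, that log10(IED) is normally distributed with mean 1 (a median IED of 10 dose units) and standard deviation 0.3. The fraction responding at a dose is then the cumulative distribution of IEDs at that dose, and the probit transform turns that sigmoid curve into a straight line against log dose. It uses `statistics.NormalDist` from the Python standard library.

```python
import math
from statistics import NormalDist

# Hypothetical tolerance distribution: log10(IED) ~ Normal(mean=1.0, sd=0.3),
# i.e. a median individual effective dose of 10 (arbitrary units).
LOG_IED = NormalDist(mu=1.0, sigma=0.3)
STANDARD_NORMAL = NormalDist()

def fraction_responding(dose):
    """Quantal response: fraction of individuals whose IED is at or below this
    dose, i.e. the cumulative IED distribution evaluated at the dose."""
    return LOG_IED.cdf(math.log10(dose))

def probit(p):
    """Normal equivalent deviate for proportion p. (Classical tables added 5
    to avoid negative numbers; that constant is left out here.)"""
    return STANDARD_NORMAL.inv_cdf(p)

for dose in [3, 5, 10, 20, 40]:
    p = fraction_responding(dose)
    print(f"dose {dose:>2}  log10(dose) {math.log10(dose):.3f}  "
          f"fraction {p:.3f}  probit {probit(p):+.3f}")
```

Under this assumption the probit values are exactly linear in log10(dose), with slope 1/0.3. If the real IEDs were not log-normal the transformed points would not fall on a line, which is why the shape of the IED distribution matters.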

Every day one sees politicians on TV assuring us that nuclear deterrence works because no nuclear weapon has been exploded in anger since 1945. They clearly have no understanding of statistics.

With a few plausible assumptions, we can easily calculate that the time until the next bomb explodes could be as little as 20 years.

Be scared, very scared.

The first assumption is that bombs go off at random intervals. Since we have had only one so far (counting Hiroshima and Nagasaki as a single event), this can’t be verified. But given the large number of small influences that control when a bomb explodes (whether in war or by accident), it is the natural assumption to make. The assumption is given some credence by the observation that the intervals between wars are random [download pdf].

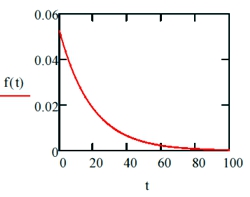

If the intervals between bombs are random, that implies that the distribution of the length of the intervals is exponential in shape. The nature of this distribution has already been explained in an earlier post about the random lengths of time for which a patient stays in an intensive care unit. If you haven’t come across an exponential distribution before, please look at that post before moving on.

All that we know is that 70 years have elapsed since the last bomb, so the interval until the next one must be greater than 70 years. The probability that a random interval is longer than 70 years can be found from the cumulative form of the exponential distribution.

If we denote the true mean interval between bombs as $\mu$, then the probability that an interval is longer than 70 years is

\[ \text{Prob}\left( \text{interval > 70}\right)=\exp{\left(\frac{-70}{\mu}\right)} \]
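One way to check this formula is to simulate exponentially distributed intervals and count how many exceed 70 years. The mean of 50 years used below is arbitrary, chosen only for illustration. Note that Python's `random.expovariate` takes the rate (1/mean), not the mean.

```python
import math
import random

def prob_longer_than(t, mu):
    """P(interval > t) for an exponential distribution with mean mu."""
    return math.exp(-t / mu)

def simulated_prob_longer_than(t, mu, n=200_000, seed=3):
    """Monte Carlo estimate of the same probability."""
    rng = random.Random(seed)
    # expovariate expects the rate 1/mu, not the mean mu
    hits = sum(rng.expovariate(1.0 / mu) > t for _ in range(n))
    return hits / n

mu = 50.0  # hypothetical mean interval in years, for illustration only
print(f"formula:   {prob_longer_than(70, mu):.4f}")
print(f"simulated: {simulated_prob_longer_than(70, mu):.4f}")
```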

We can get a lower 95% confidence limit (call it $\mu_\mathrm{lo}$) for the mean interval between bombs by the argument used in Lectures on Biostatistics, section 7.8 (page 108). If we imagine that $\mu_\mathrm{lo}$ were the true mean, we want it to be such that there is only a 2.5% chance that we would observe an interval greater than 70 years. That is, we want to solve

\[ \exp{\left(\frac{-70}{\mu_\mathrm{lo}}\right)} = 0.025\]

That’s easily solved by taking natural logs of both sides, giving

\[ \mu_\mathrm{lo} = \frac{-70}{\ln{\left(0.025\right)}}= 19.0\text{ years}\]

A similar argument leads to an upper confidence limit, $\mu_\mathrm{hi}$, for the mean interval between bombs, by solving

\[ \exp{\left(\frac{-70}{\mu_\mathrm{hi}}\right)} = 0.975\]

so

\[ \mu_\mathrm{hi} = \frac{-70}{\ln{\left(0.975\right)}}= 2765\text{ years}\]
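Both limits follow from solving $\exp(-70/\mu) = p$, which gives $\mu = -70/\ln(p)$. This short sketch reproduces the two numbers above and confirms that plugging the lower limit back into the tail probability gives 0.025.

```python
import math

observed_gap = 70.0  # years since the last bomb

def mean_for_tail_prob(p, t=observed_gap):
    """Solve exp(-t/mu) = p for mu."""
    return -t / math.log(p)

mu_lo = mean_for_tail_prob(0.025)
mu_hi = mean_for_tail_prob(0.975)
print(f"95% limits for the mean interval: {mu_lo:.1f} to {mu_hi:.0f} years")
# → 95% limits for the mean interval: 19.0 to 2765 years
```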

If the worst case were true, and the mean interval between bombs was 19 years, then the distribution of the time to the next bomb would have an exponential probability density function, $f(t)$,

\[ f(t) = \frac{1}{19} \exp{\left(\frac{-t}{19}\right)} \]

There would be a 50% chance that the waiting time until the next bomb would be less than the median of this distribution, $19 \ln 2 = 13.2$ years.
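The sketch below checks these worst-case numbers. The median of an exponential distribution with mean $\mu$ is $\mu \ln 2$, and the cumulative probability at that point is exactly 0.5; integrating $f(t)$ numerically (trapezoidal rule) up to the median is a sanity check on the density. Using the same exponential distribution for the waiting time from now, even though 70 years have already passed, relies on the memoryless property of the exponential distribution.

```python
import math

mu = 19.0  # worst-case mean interval (the lower confidence limit)

def pdf(t, mu=mu):
    """Exponential probability density with mean mu."""
    return math.exp(-t / mu) / mu

def cdf(t, mu=mu):
    """Probability that the waiting time is less than t."""
    return 1.0 - math.exp(-t / mu)

median = mu * math.log(2)
print(f"median waiting time: {median:.1f} years")  # 13.2
print(f"P(wait < median) = {cdf(median):.3f}")    # 0.500

# Numerical check: the area under f(t) from 0 to the median should be 0.5
steps = 10_000
h = median / steps
area = h * (sum(pdf(i * h) for i in range(1, steps)) + (pdf(0) + pdf(median)) / 2)
print(f"trapezoidal area up to median: {area:.6f}")
```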

In summary, the observation that there has been no explosion for 70 years implies that the mean time until the next explosion lies (with 95% confidence) between 19 years and 2765 years. If it were 19 years, there would be a 50% chance that the waiting time to the next bomb could be less than 13.2 years. Thus there is no reason at all to think that nuclear deterrence works well enough to protect the world from incineration.

Another approach

My statistical colleague, the ace probabilist Alan Hawkes, suggested a slightly different approach to the problem, via likelihood. The likelihood of a particular value of the mean interval between bombs, $\mu$, is defined as the probability of making the observation(s), given that value of $\mu$. In this case, there is one observation: the interval since the last bomb is more than 70 years. The likelihood, $L\left(\mu\right)$, of any specified value of $\mu$ is thus

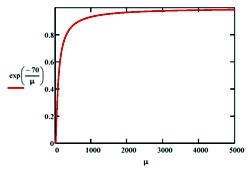

\[L\left(\mu\right)=\text{Prob}\left( \text{interval > 70 | }\mu\right) = \exp{\left(\frac{-70}{\mu}\right)} \]

A plot of this function (graph on right) shows that it increases continuously with $\mu$, so the maximum likelihood estimate of $\mu$ is infinite. An infinite wait until the next bomb would be perfect deterrence.
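Evaluating $L(\mu)$ at a few arbitrary values of $\mu$ shows the behaviour described above: the likelihood keeps creeping up towards 1 and never reaches a maximum at any finite value.

```python
import math

def likelihood(mu, t=70.0):
    """L(mu) = Prob(interval > t | mu) = exp(-t/mu)."""
    return math.exp(-t / mu)

grid = [10, 20, 50, 100, 1000, 10_000]  # arbitrary trial values of mu (years)
values = [likelihood(m) for m in grid]
for m, v in zip(grid, values):
    print(f"mu = {m:>6}: L = {v:.4f}")
# L rises monotonically towards 1, so there is no finite maximum
```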

But again we need confidence limits for this. Since the upper limit is infinite, the appropriate thing to calculate is a one-sided lower 95% confidence limit. This is found by solving

\[ \exp{\left(\frac{-70}{\mu_\mathrm{lo}}\right)} = 0.05\]

which gives

\[ \mu_\mathrm{lo} = \frac{-70}{\ln{\left(0.05\right)}}= 23.4\text{ years}\]
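The one-sided limit can be checked in the same way. The simulation below (arbitrary seed and sample size) confirms what the limit means: if the true mean interval were 23.4 years, only about 5% of intervals would be longer than 70 years.

```python
import math
import random

observed_gap = 70.0
mu_lo_one_sided = -observed_gap / math.log(0.05)
print(f"one-sided lower 95% limit: {mu_lo_one_sided:.1f} years")  # 23.4

# If the true mean were mu_lo_one_sided, about 5% of intervals should exceed 70 years
rng = random.Random(4)
n = 200_000
exceed = sum(rng.expovariate(1.0 / mu_lo_one_sided) > observed_gap for _ in range(n)) / n
print(f"simulated P(interval > 70) = {exceed:.3f}")
```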

Summary

The first approach gives 95% confidence limits for the average time until we get incinerated as 19 years to 2765 years. The second approach gives the lower limit as 23.4 years. There is no important difference between the two methods of calculation. This shows that the bland assurances of politicians that “nuclear deterrence works” are not justified.

It is not the purpose of this post to predict when the next bomb will explode, but rather to point out that the available information tells us very little about that question. This seems important to me because it contradicts directly the frequent assurances that deterrence works.

The only consolation is that, since I’m now 79, it’s unlikely that I’ll live long enough to see the conflagration.

Anyone younger than me would be advised to get off their backsides and do something about it, before they are destroyed by innumerate politicians.

Postscript

While talking about politicians and war it seems relevant to reproduce Peter Kennard’s powerful image of the Iraq war.