metrics

Today, 25 September, is the first anniversary of the needless death of Stefan Grimm. This post is intended as a memorial. He should be remembered, in the hope that some good can come from his death.

On 1 December 2014, I published the last email from Stefan Grimm, under the title “Publish and perish at Imperial College London: the death of Stefan Grimm”. Since then it’s been viewed 196,000 times. The day after it was posted, the server failed under the load.

Since then, I have posted two follow-up pieces. The first, on December 23, 2014, was “Some experiences of life at Imperial College London. An external inquiry is needed after the death of Stefan Grimm”. Of course there was no external inquiry.

And on April 9, 2015, after the coroner’s report, and after Imperial’s internal inquiry, "The death of Stefan Grimm was “needless”. And Imperial has done nothing to prevent it happening again".

The tragedy featured in the introduction of the HEFCE report on the use of metrics.

“The tragic case of Stefan Grimm, whose suicide in September 2014 led Imperial College to launch a review of its use of performance metrics, is a jolting reminder that what’s at stake in these debates is more than just the design of effective management systems.”

“Metrics hold real power: they are constitutive of values, identities and livelihoods”

I had made no attempt to contact Grimm’s family, because I had no wish to intrude on their grief. But in July 2015, I received, out of the blue, a hand-written letter from Stefan Grimm’s mother. She is now 80 and living in Munich. I was told that his father, Dieter Grimm, had died of cancer when he was only 59. I also learned that Stefan Grimm was distantly related to Wilhelm Grimm, one of the Gebrüder Grimm.

The letter was very moving indeed. It said "Most of the infos about what happened in London, we got from you, what you wrote in the internet".

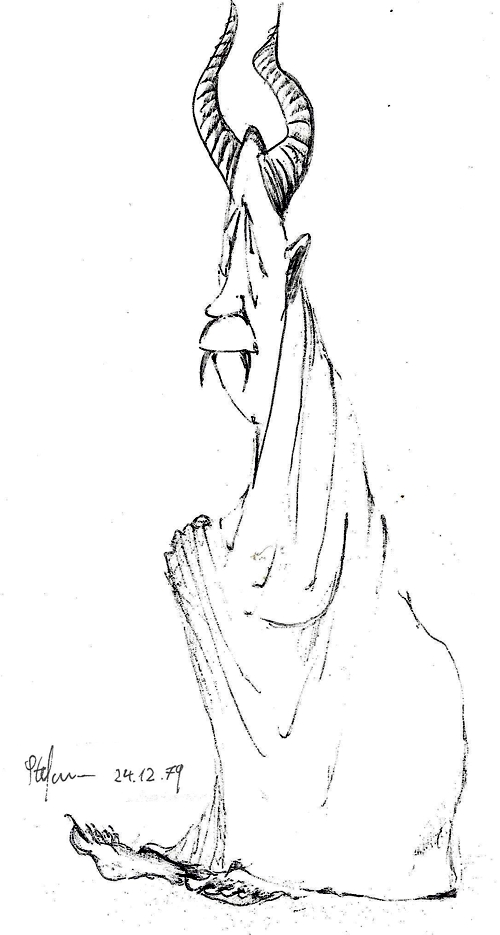

I responded as sympathetically as I could, and got a reply which included several of Stefan’s drawings, and then more from his sister. The drawings were done while he was young. They show amazing talent, but by the age of 25 he was too busy with science to exploit his artistic talents.

With his mother’s permission, I reproduce ten of his drawings here, as a memorial to a man whose needless death was attributable to the very worst of the UK university system. He was killed by mindless and cruel "performance management", imposed by Imperial College London. The initial reaction of Imperial gave little hint of an improvement. I hope that their review of the metrics used to assess people will be a bit more sensible.

His real memorial lies in his published work, which continues to be cited regularly after his death.

His drawings are a reminder that there is more to human beings than getting grants. And that there is more to human beings than science.

Click the picture for an album of ten of his drawings. In the album there are also pictures of two books that were written for children by Stefan’s father, Dieter Grimm.

Dated Christmas Eve, 1979 (age 16)

Follow-up

Well well. It seems that Imperial are having an "HR Showcase: Supporting our people" on 15 October. And the introduction is being given by none other than Professor Martin Wilkins, the very person whose letter to Grimm must bear some responsibility for his death. I’ll be interested to hear whether he shows any contrition. I doubt whether any employees will dare to ask pointed questions at this meeting, but let’s hope they do.

The Higher Education Funding Council for England (HEFCE) gives money to universities. The allocation that a university gets depends strongly on the periodical assessments of the quality of its research. Enormous amounts of time, energy and money go into preparing submissions for these assessments, and the assessment procedure distorts the behaviour of universities in ways that are undesirable. In the last assessment, four papers were submitted by each principal investigator, and the papers were read.

In an effort to reduce the cost of the operation, HEFCE has been asked to reconsider the use of metrics to measure the performance of academics. The committee that is doing this job has asked for submissions from any interested person, by June 20th.

This post is a draft for my submission. I’m publishing it here for comments before producing a final version for submission.

Draft submission to HEFCE concerning the use of metrics.

I’ll consider a number of different metrics that have been proposed for the assessment of the quality of an academic’s work.

Impact factors

The first thing to note is that HEFCE is one of the original signatories of DORA (http://am.ascb.org/dora/). The first recommendation of that document is:

"Do not use journal-based metrics, such as Journal Impact Factors, as a surrogate measure of the quality of individual research articles, to assess an individual scientist’s contributions, or in hiring, promotion, or funding decisions".

Impact factors have been found, time after time, to be utterly inadequate as a way of assessing individuals, e.g. [1], [2]. Even their inventor, Eugene Garfield, says that. There should be no need to rehearse yet again the details. If HEFCE were to allow their use, they would have to withdraw from the DORA agreement, and I presume they would not wish to do this.

Article citations

Citation counting has several problems. Most of them apply equally to the H-index.

- Citations may be high because a paper is good and useful. They equally may be high because the paper is bad. No commercial supplier makes any distinction between these possibilities. It would not be in their commercial interests to spend time on that, but it’s critical for the person who is being judged. For example, Andrew Wakefield’s notorious 1998 paper, which gave a huge boost to the anti-vaccine movement, had had 758 citations by 2012 (it was subsequently shown to be fraudulent).

- Citations take far too long to appear to be a useful way to judge recent work, as is needed for judging grant applications or promotions. This is especially damaging to young researchers, and to people (particularly women) who have taken a career break. The counts also don’t take into account citation half-life. A paper that’s still being cited 20 years after it was written clearly had influence, but that takes 20 years to discover.

- The citation rate is very field-dependent. Very mathematical papers are much less likely to be cited, especially by biologists, than more qualitative papers. For example, the solution of the missed event problem in single ion channel analysis [3,4] was the sine qua non for all our subsequent experimental work, but the two papers have only about a tenth of the number of citations of subsequent work that depended on them.

- Most suppliers of citation statistics don’t count citations of books or book chapters. This is bad for me because my only work with over 1000 citations is my 105 page chapter on methods for the analysis of single ion channels [5], which contained quite a lot of original work. It has had 1273 citations according to Google Scholar but doesn’t appear at all in Scopus or Web of Science. Neither do the 954 citations of my statistics text book [6].

- There are often big differences between the numbers of citations reported by different commercial suppliers. Even for papers (as opposed to book articles) there can be a two-fold difference between the number of citations reported by Scopus, Web of Science and Google Scholar. The raw data are unreliable and commercial suppliers of metrics are apparently not willing to put in the work to ensure that their products are consistent or complete.

- Citation counts can be (and already are being) manipulated. The easiest way to get a large number of citations is to do no original research at all, but to write reviews in popular areas. Another good way to have ‘impact’ is to write indecisive papers about nutritional epidemiology. That is not behaviour that should command respect.

- Some branches of science are already facing something of a crisis in reproducibility [7]. One reason for this is the perverse incentives which are imposed on scientists. These perverse incentives include the assessment of their work by crude numerical indices.

- “Gaming” of citations is easy. (If students do it, it’s called cheating: if academics do it, it’s called gaming.) If HEFCE makes money dependent on citations, then this sort of cheating is likely to take place on an industrial scale. Of course that should not happen, but it would (disguised, no doubt, by some ingenious bureaucratic euphemisms).

- For example, Scigen is a program that generates spoof papers in computer science, by stringing together plausible phrases. Over 100 such papers have been accepted for publication. By submitting many such papers, the authors managed to fool Google Scholar into awarding the fictitious author an H-index greater than that of Albert Einstein (http://en.wikipedia.org/wiki/SCIgen).

- The use of citation counts has already encouraged guest authorships and similar marginally honest behaviour. There is no way to tell whether an author on a paper has actually made any substantial contribution to the work, despite the fact that some journals ask for a statement about contribution.

- It has been known for 17 years that citation counts for individual papers are not detectably correlated with the impact factor of the journal in which the paper appears [1]. That doesn’t seem to have deterred metrics enthusiasts from using both. It should have done.

Given all these problems, it’s hard to see how citation counts could be useful to the REF, except perhaps in really extreme cases such as papers that get next to no citations over 5 or 10 years.

The H-index

This has all the disadvantages of citation counting, but in addition it is strongly biased against young scientists, and against women. This makes it not worth consideration by HEFCE.
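One reason for the bias against young scientists can be seen from the usual definition of the index: the largest number h such that h of a person’s papers each have at least h citations. That means the score can never exceed the number of papers published, however good they are. The short Python sketch below illustrates this. The function name and both lists of citation counts are invented purely for illustration; they are not real data.

```python
def h_index(citations):
    """Return the largest h such that h papers each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank   # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical citation counts, made up for this example only.
early_career = [250, 120, 40]   # three papers, all heavily cited
long_career = [12] * 12         # twelve papers, each modestly cited

print(h_index(early_career))    # 3: capped by the number of papers
print(h_index(long_career))     # 12
```

In this made-up case the person with three heavily cited papers scores a quarter of the person with twelve modestly cited ones, simply because they have had less time to publish.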

Altmetrics

Given the role given to “impact” in the REF, the fact that altmetrics claim to measure impact might make them seem worthy of consideration at first sight. One problem is that the REF failed to make a clear distinction between impact on other scientists in the field and impact on the public.

Altmetrics measure an undefined mixture of both sorts of impact, with totally arbitrary weighting for tweets, Facebook mentions and so on. But the score seems to be related primarily to the trendiness of the title of the paper. Any paper about diet and health, however poor, is guaranteed to feature well on Twitter, as will any paper that has ‘penis’ in the title.

It’s very clear from the examples that I’ve looked at that few people who tweet about a paper have read more than the title. See Why you should ignore altmetrics and other bibliometric nightmares [8].

In most cases, papers were promoted by retweeting the press release or tweet from the journal itself. Only too often the press release is hyped-up. Metrics not only corrupt the behaviour of academics, but also the behaviour of journals. In the cases I’ve examined, reading the papers revealed that they were particularly poor (despite being in glamour journals): they just had trendy titles [8].

There could even be a negative correlation between the number of tweets and the quality of the work. Those who sell altmetrics have never examined this critical question because they ignore the contents of the papers. It would not be in their commercial interests to test their claims if the result was to show a negative correlation. Perhaps the reason why they have never tested their claims is the fear that to do so would reduce their income.

Furthermore you can buy 1000 retweets for $8.00 (http://followers-and-likes.com/twitter/buy-twitter-retweets/). That’s outright cheating of course, and not many people would go that far. But authors, and journals, can do a lot of self-promotion on Twitter that is totally unrelated to the quality of the work.

It’s worth noting that much good engagement with the public now appears on blogs that are written by scientists themselves, but the 3.6 million views of my blog do not feature in altmetrics scores, never mind Scopus or Web of Science. Altmetrics don’t even measure public engagement very well, never mind academic merit.

Evidence that metrics measure quality

Any metric would be acceptable only if it measured the quality of a person’s work. How could that proposition be tested? In order to judge this, one would have to take a random sample of papers, and look at their metrics 10 or 20 years after publication. The scores would have to be compared with the consensus view of experts in the field. Even then one would have to be careful about the choice of experts (in fields like alternative medicine for example, it would be important to exclude people whose living depended on believing in it). I don’t believe that proper tests have ever been done (and it isn’t in the interests of those who sell metrics to do it).

The great mistake made by almost all bibliometricians is that they ignore what matters most, the contents of papers. They try to make inferences from correlations of metric scores with other, equally dubious, measures of merit. They can’t afford the time to do the right experiment if only because it would harm their own “productivity”.

The evidence that metrics do what’s claimed for them is almost non-existent. For example, in six of the ten years leading up to the 1991 Nobel prize, Bert Sakmann failed to meet the metrics-based publication target set by Imperial College London, and these failures included the years in which the original single channel paper was published [9] and also the year, 1985, when he published a paper [10] that was subsequently named as a classic in the field [11]. In two of these ten years he had no publications whatsoever. See also [12].

Application of metrics in the way that it’s been done at Imperial, and also at Queen Mary College London, would result in the firing of the most original minds.

Gaming and the public perception of science

Every form of metric alters behaviour, in such a way that it becomes useless for its stated purpose. This is already well known in economics, where it’s known as Goodhart’s law (http://en.wikipedia.org/wiki/Goodhart’s_law): “When a measure becomes a target, it ceases to be a good measure”. That alone is a sufficient reason not to extend metrics to science. Metrics have already become one of several perverse incentives that control scientists’ behaviour. They have encouraged gaming, hype, guest authorships and, increasingly, outright fraud [13].

The general public has become aware of this behaviour and it is starting to do serious harm to perceptions of all science. As long ago as 1999, Haerlin & Parr [14] wrote in Nature, under the title How to restore Public Trust in Science,

“Scientists are no longer perceived exclusively as guardians of objective truth, but also as smart promoters of their own interests in a media-driven marketplace.”

And on January 17, 2006, a vicious spoof on a Science paper appeared, not in a scientific journal, but in the New York Times. See https://www.dcscience.net/?p=156

The use of metrics would provide a direct incentive to this sort of behaviour. It would be a tragedy not only for people who are misjudged by crude numerical indices, but also a tragedy for the reputation of science as a whole.

Conclusion

There is no good evidence that any metric measures quality (at least over the short time span that’s needed for them to be useful for giving grants or deciding on promotions). On the other hand there is good evidence that use of metrics provides a strong incentive to bad behaviour, both by scientists and by journals. They have already started to damage the public perception of the honesty of science.

The conclusion is obvious. Metrics should not be used to judge academic performance.

What should be done?

If metrics aren’t used, how should assessment be done? Roderick Floud was president of Universities UK from 2001 to 2003. He is nothing if not an establishment person. He said recently:

“Each assessment costs somewhere between £20 million and £100 million, yet 75 per cent of the funding goes every time to the top 25 universities. Moreover, the share that each receives has hardly changed during the past 20 years.

It is an expensive charade. Far better to distribute all of the money through the research councils in a properly competitive system.”

The obvious danger of giving all the money to the Research Councils is that people might be fired solely because they didn’t have big enough grants. That’s serious: it’s already happened at Kings College London, Queen Mary London and at Imperial College. This problem might be ameliorated if there were a maximum on the size of grants and/or on the number of papers a person could publish, as I suggested at the open data debate. And it would help if universities appointed vice-chancellors with a better long term view than most seem to have at the moment.

Aggregate metrics? It’s been suggested that the problems are smaller if one looks at aggregated metrics for a whole department, rather than the metrics for individual people. Clearly looking at departments would average out anomalies. The snag is that it wouldn’t circumvent Goodhart’s law. If the money depended on the aggregate score, it would still put great pressure on universities to recruit people with high citations, regardless of the quality of their work, just as it would if individuals were being assessed. That would weigh against thoughtful people (and not least women).

The best solution would be to abolish the REF and give the money to research councils, with precautions to prevent people being fired because their research wasn’t expensive enough. If politicians insist that the "expensive charade" is to be repeated, then I see no option but to continue with a system that’s similar to the present one: that would waste money and distract us from our job.

1. Seglen PO (1997) Why the impact factor of journals should not be used for evaluating research. British Medical Journal 314: 498-502.

2. Colquhoun D (2003) Challenging the tyranny of impact factors. Nature 423: 479.

3. Hawkes AG, Jalali A, Colquhoun D (1990) The distributions of the apparent open times and shut times in a single channel record when brief events can not be detected. Philosophical Transactions of the Royal Society London A 332: 511-538.

4. Hawkes AG, Jalali A, Colquhoun D (1992) Asymptotic distributions of apparent open times and shut times in a single channel record allowing for the omission of brief events. Philosophical Transactions of the Royal Society London B 337: 383-404.

5. Colquhoun D, Sigworth FJ (1995) Fitting and statistical analysis of single-channel records. In: Sakmann B, Neher E, editors. Single Channel Recording. New York: Plenum Press. pp. 483-587.

6. David Colquhoun on Google Scholar. Available: http://scholar.google.co.uk/citations?user=JXQ2kXoAAAAJ&hl=en 17-6-2014

7. Ioannidis JP (2005) Why most published research findings are false. PLoS Med 2: e124.

8. Colquhoun D, Plested AJ. Why you should ignore altmetrics and other bibliometric nightmares. Available: https://www.dcscience.net/?p=6369

9. Neher E, Sakmann B (1976) Single channel currents recorded from membrane of denervated frog muscle fibres. Nature 260: 799-802.

10. Colquhoun D, Sakmann B (1985) Fast events in single-channel currents activated by acetylcholine and its analogues at the frog muscle end-plate. J Physiol (Lond) 369: 501-557.

11. Colquhoun D (2007) What have we learned from single ion channels? J Physiol 581: 425-427.

12. Colquhoun D (2007) How to get good science. Physiology News 69: 12-14. See also https://www.dcscience.net/?p=182

13. Oransky I. Retraction Watch. Available: http://retractionwatch.com/ 18-6-2014

14. Haerlin B, Parr D (1999) How to restore public trust in science. Nature 400: 499. doi:10.1038/22867

Follow-up

Some other posts on this topic

Why Metrics Cannot Measure Research Quality: A Response to the HEFCE Consultation

Gaming Google Scholar Citations, Made Simple and Easy

Manipulating Google Scholar Citations and Google Scholar Metrics: simple, easy and tempting

Driving Altmetrics Performance Through Marketing

Death by Metrics (October 30, 2013)

Not everything that counts can be counted

Using metrics to assess research quality By David Spiegelhalter “I am strongly against the suggestion that peer–review can in any way be replaced by bibliometrics”

1 July 2014

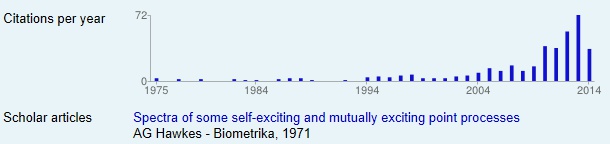

My brilliant statistical colleague, Alan Hawkes, laid the foundations for single molecule analysis (and made a career for me). Before he got into that, he wrote a paper, Spectra of some self-exciting and mutually exciting point processes (Biometrika 1971). In that paper he described a sort of stochastic process now known as a Hawkes process. In the simplest sort of stochastic process, the Poisson process, events are independent of each other. In a Hawkes process, the occurrence of an event affects the probability of another event occurring, so, for example, events may occur in clusters. Such processes were used for many years to describe the occurrence of earthquakes. More recently, it’s been noticed that such models are useful in finance, marketing, terrorism, burglary, social media, DNA analysis, and to describe invasive banana trees. The 1971 paper languished in relative obscurity for 30 years. Now the citation rate has shot through the roof.
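For readers who would like to see the difference between the two sorts of process, here is a minimal Python sketch that simulates a self-exciting process by the standard thinning method. It rests on assumptions that are mine, not anything stated above: each event is taken to add an amount alpha to the event rate, which then decays exponentially with rate constant beta back towards a background rate mu. The function name and the parameter values are hypothetical. Setting alpha to zero switches off the self-excitation and gives an ordinary Poisson process.

```python
import math
import random


def simulate_hawkes(mu, alpha, beta, t_max, seed=0):
    """Simulate event times on [0, t_max) by thinning.

    Assumed rate at time t: mu + sum of alpha * exp(-beta * (t - t_i))
    over all earlier events t_i. Needs mu > 0, and alpha < beta if the
    process is not to run away.
    """
    rng = random.Random(seed)
    events = []
    t = 0.0
    excitation = 0.0  # self-exciting part of the rate, evaluated at time t
    while True:
        # Between events the rate can only decay, so the current rate
        # is an upper bound until the next event occurs.
        upper = mu + excitation
        wait = rng.expovariate(upper)
        t += wait
        if t >= t_max:
            break
        excitation *= math.exp(-beta * wait)   # decay over the waiting time
        rate_now = mu + excitation
        # Accept the candidate with probability rate_now / upper.
        if rng.random() * upper <= rate_now:
            events.append(t)
            excitation += alpha                # each event raises the rate
    return events


poisson_like = simulate_hawkes(mu=1.0, alpha=0.0, beta=1.0, t_max=1000.0)
clustered = simulate_hawkes(mu=1.0, alpha=0.5, beta=1.0, t_max=1000.0)
print(len(poisson_like), len(clustered))
```

With these made-up values the long-run event rate of the self-exciting version is mu / (1 - alpha / beta), about twice the background rate, and the extra events arrive in bursts just after earlier ones.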

The papers about Hawkes processes are mostly highly mathematical. They are not the sort of thing that features on Twitter. They are serious science, not just another ghastly epidemiological survey of diet and health. Anybody who cites papers of this sort is likely to be a real scientist. The surge in citations suggests to me that the 1971 paper was indeed an important bit of work (because the citations will be made by serious people). How does this affect my views about the use of citations? It shows that even highly mathematical work can achieve respectable citation rates, but it may take a long time before its importance is realised. If Hawkes had been judged by citation counting while he was applying for jobs and promotions, he’d probably have been fired. If his department had been judged by citations of this paper, it would not have scored well. It takes a long time to judge the importance of a paper and that makes citation counting almost useless for decisions about funding and promotion.

Stop press. Financial report casts doubt on Trainor’s claims

Science has a big problem. Most jobs are desperately insecure. It’s hard to do long term thorough work when you don’t know whether you’ll be able to pay your mortgage in a year’s time. The appalling career structure for young scientists has been the subject of much writing by the young (e.g. Jenny Rohn), by the old (e.g. Bruce Alberts and Peter Lawrence; see also Real Lives and White Lies in the Funding of Scientific Research), and by me.

Until recently, this problem was largely restricted to post-doctoral fellows (postdocs). They already have PhDs and they are the people who do most of the experiments. Often large numbers of them work for a single principal investigator (PI). The PI spends most of his or her time writing grant applications and traveling the world to hawk the wares of the lab. PIs also (to variable extents) teach students and deal with endless hassle from HR.

The salaries of most postdocs are paid from grants that last for three or sometimes five years. If that grant doesn’t get renewed, they are on the streets.

Universities have come to exploit their employees almost as badly as Amazon does.

The periodical research assessments not only waste large amounts of time and money, but they have distorted behaviour. In the hope of scoring highly, universities recruit a lot of people before the submission, but as soon as that’s done with, they find that they can’t afford all of them, so some get cast aside like worn out old boots. Universities have allowed themselves to become dependent on "soft money" from grant-giving bodies. That strikes me as bad management.

The situation is even worse in the USA where most teaching staff rely on research grants to pay their salaries.

I have written three times about the insane methods that are being used to fire staff at Queen Mary College London (QMUL).

Is Queen Mary University of London trying to commit scientific suicide? (June 2012)

Queen Mary, University of London in The Times. Does Simon Gaskell care? (July 2012) and a version of it appeared in The Times (Thunderer column)

In which Simon Gaskell, of Queen Mary, University of London, makes a cock-up (August 2012)

The ostensible reason given there was to boost its ratings in university rankings. Their vice-chancellor, Simon Gaskell, seems to think that by firing people he can produce a university that’s full of Nobel prize-winners. The effect, of course, is just the opposite. Treating people like pawns in a game makes the good people leave and only those who can’t get a job with a better employer remain. That’s what I call bad management.

At QMUL people were chosen to be fired on the basis of a plain silly measure of their publication record, and by their grant income. That was combined with terrorisation of any staff who spoke out about the process (more on that coming soon).

Kings College London is now doing the same sort of thing. They have announced that they’ll fire 120 of the 777 staff in the schools of medicine and biomedical sciences, and the Institute of Psychiatry. These are humans, with children and mortgages to pay. One might ask why they were taken on in the first place, if the university can’t afford them. That’s simply bad financial planning (or was it done in order to boost their Research Excellence submission?).

Surely it’s been obvious, at least since 2007, that hard financial times were coming, but that didn’t dent the hubris of the people who took on so many staff. HEFCE has failed to find a sensible way to fund universities. The attempt to separate the funding of teaching and research has just led to corruption.

The way in which people are to be chosen for the firing squad at Kings is crude in the extreme. If you are a professor at the Institute of Psychiatry then, unless you do a lot of teaching, you must have a grant income of at least £200,000 per year. You can read all the details in the Kings’ “Consultation document” that was sent to all employees. It’s headed "CONFIDENTIAL – Not for further circulation". Vice-chancellors still don’t seem to have realised that it’s no longer possible to keep things like this secret. In releasing it, I take my cue from George Orwell.

“Journalism is printing what someone else does not want printed: everything else is public relations.”

There is no mention of the quality of your research, just income. Since in most sorts of research the major cost is salaries, this rewards people who take on too many employees. Only too frequently, large groups are the ones in which students and research staff get the least supervision, and in which bangs per buck are lowest. The university should be rewarding people who are deeply involved in research themselves (those with small groups). Instead, they are doing exactly the opposite.

Women are, I’d guess, less susceptible to the grandiosity of the enormous research group, so no doubt they will suffer disproportionately. PhD students will also suffer if their supervisor is fired while they are halfway through their projects.

An article in Times Higher Education pointed out

"According to the Royal Society’s 2010 report The Scientific Century: Securing our Future Prosperity, in the UK, 30 per cent of science PhD graduates go on to postdoctoral positions, but only around 4 per cent find permanent academic research posts. Less than half of 1 per cent of those with science doctorates end up as professors."

The panel that decides whether you’ll be fired consists of Professor Sir Robert Lechler, Professor Anne Greenough, Professor Simon Howell, Professor Shitij Kapur, Professor Karen O’Brien, Chris Mottershead, Rachel Parr & Carol Ford. If they had the slightest integrity, they’d refuse to implement such obviously silly criteria.

Universities in general, not only Kings and QMUL, have become over-reliant on research funders to enhance their own reputations. PhD students and research staff are employed for the benefit of the university (and of the principal investigator), not for the benefit of the students or research staff, who are treated as expendable cost units, not as humans.

One thing that we expect of vice-chancellors is sensible financial planning. That seems to have failed at Kings. One would also hope that they would understand how to get good science. My only previous encounter with Kings’ vice-chancellor, Rick Trainor, suggests that this is not where his talents lie. While he was president of Universities UK (UUK), I suggested to him that degrees in homeopathy were not a good idea. His response was that of the true apparatchik.

“. . . degree courses change over time, are independently assessed for academic rigour and quality and provide a wider education than the simple description of the course might suggest”

That is hardly a response that suggests high academic integrity.

The students’ petition is on Change.org.

Follow-up

The problems that are faced in the UK are very similar to those in the USA. They have been described with superb clarity in “Rescuing US biomedical research from its systemic flaws”. This article, by Bruce Alberts, Marc W. Kirschner, Shirley Tilghman, and Harold Varmus, should be read by everyone. They observe that “. . . little has been done to reform the system, primarily because it continues to benefit more established and hence more influential scientists”. I’d be more impressed by the senior people at Kings if they spent time trying to improve the system rather than firing people because their research is not sufficiently expensive.

10 June 2014

Progress on the cull, according to an anonymous correspondent

“The omnishambles that is KCL management

1) We were told we would receive our orange (at risk) or green letters (not at risk, this time) on Thursday PM 5th June as HR said that it’s not good to get bad news on a Friday!

2) We all got a letter on Friday that we would not be receiving our letters until Monday, so we all had a tense weekend

3) I finally got my letter on Monday, in my case it was “green” however a number of staff who work very hard at KCL doing teaching and research are “orange”, un bloody believable

As you can imagine the morale at King’s has dropped through the floor”

18 June 2014

Dorothy Bishop has written about the Trainor problem. Her post ends “One feels that if KCL were falling behind in a boat race, they’d respond by throwing out some of the rowers”.

The students’ petition can be found on the #KCLHealthSOS site. There is a reply to the petition, from Professor Sir Robert Lechler, and a rather better written response to it from students. Lechler’s response merely repeats the weasel words, and it attacks a few straw men without providing the slightest justification for the criteria that are being used to fire people. One can’t help noticing how often knighthoods go to the best apparatchiks rather than the best scientists.

14 July 2014

A 2013 report on Kings from Standard & Poor’s casts doubt on Trainor’s claims

Download the report from Standard and Poor’s Rating Service

A few things stand out.

- KCL is in a strong financial position with lower debt than other similar Universities and cash reserves of £194 million.

- The report says that KCL does carry some risk into the future especially that related to its large capital expansion program.

- The report specifically warns KCL over the consequences of any staff cuts. Particularly relevant are the following quotations

- page 3 “Further staff-cost curtailment will be quite difficult …pressure to maintain its academic and non-academic service standards will weigh on its ability to cut costs further.”

- page 4 The report goes on to say (see the section headed outlook, especially the final paragraph) that any decrease in KCL’s academic reputation (e.g. consequent on staff cuts) would be likely to impair its ability to attract overseas students and therefore adversely affect its financial position.

- page 10 makes clear that KCL managers are privately aiming at 10% surplus, above the 6% operating surplus they talk about with us. However, S&P considers that ‘ambitious’. In other words KCL are shooting for double what a credit rating agency considers realistic.

One can infer from this that

- what staff have been told about the cuts being an immediate necessity is absolute nonsense

- KCL was warned against staff cuts by a credit agency

- the main problem KCL has is its overambitious building policy

- KCL is implementing a policy (staff cuts) which S & P warned against as they predict it may result in diminishing income.

What on earth is going on?

16 July 2014

I’ve been sent yet another damning document. The BMA’s response to King’s contains some numbers that seem to have escaped the attention of managers at King’s.

10 April 2015

King’s draft performance management plan for 2015

This document has just come to light (the highlighting is mine).

It’s labelled as "released for internal consultation". It seems that managers are slow to realise that it’s futile to try to keep secrets.

The document applies only to Institute of Psychiatry, Psychology and Neuroscience at King’s College London: "one of the global leaders in the fields" -the usual tedious blah that prefaces every document from every university.

It’s fascinating to me that the most cruel treatment of staff so often seems to arise in medical-related areas. I thought psychiatrists, of all people, were meant to understand people, not to kill them.

This document is not quite as crude as Imperial’s assessment, but it’s quite bad enough. Like other such documents, it pretends that it’s for the benefit of its victims. In fact it’s for the benefit of willy-waving managers who are obsessed by silly rankings.

Here are some of the sillier bits.

"The Head of Department is also responsible for ensuring that aspects of reward/recognition and additional support that are identified are appropriately followed through"

And, presumably, for firing people, but let’s not mention that.

"Academics are expected to produce original scientific publications of the highest quality that will significantly advance their field."

That’s what everyone has always tried to do. It can’t be compelled by performance managers. A large element of success is pure luck. That’s why they’re called experiments.

" However, it may take publications 12-18 months to reach a stable trajectory of citations, therefore, the quality of a journal (impact factor) and the judgment of knowledgeable peers can be alternative indicators of excellence."

It can also take 40 years for work to be cited. And there is little reason to believe that citations, especially those within 12-18 months, measure quality. And it is known for sure that "the quality of a journal (impact factor)" does not correlate with quality (or indeed with citations).

Later we read

"H Index and Citation Impact: These are good objective measures of the scientific impact of publications"

NO, they are simply not a measure of quality (though this time they say “impact” rather than “excellence”).

The people who wrote that seem to be unaware of the most basic facts about science.

Then

"Carrying out high quality scientific work requires research teams"

Sometimes it does, sometimes it doesn’t. In the past the best work has been done by one or two people. In my field, think of Hodgkin & Huxley, Katz & Miledi or Neher & Sakmann. All got Nobel prizes. All did the work themselves. Performance managers might well have fired them before they got started.

By specifying minimum acceptable group sizes, King’s are really specifying minimum acceptable grant income, just like Imperial and Warwick. Nobody will be taken in by the thin attempt to disguise it.

The specification that a professor should have "Primary supervision of three or more PhD students, with additional secondary supervision." is particularly iniquitous. Everyone knows that far too many PhDs are being produced for the number of jobs that are available. This stipulation is not for the benefit of the young. It’s to ensure a supply of cheap labour to churn out more papers and help to lift the university’s ranking.

The document is not signed, but the document properties name its author. But she’s not a scientist and is presumably acting under orders, so please don’t blame her for this dire document. Blame the vice-chancellor.

Performance management is a direct incentive to do shoddy short-cut science.

No wonder that The Economist says "scientists are doing too much trusting and not enough verifying—to the detriment of the whole of science, and of humanity".

Feel ashamed.

Academic staff are going to be fired at Queen Mary University of London (QMUL). It’s possible that universities may have to contract a bit in hard times, so what’s wrong?

What’s wrong is that the victims are being selected in a way that I can describe only as insane. The criteria they use are guaranteed to produce a generation of second-rate spiv scientists, with a consequent progressive decline in QMUL’s reputation.

The firings, it seems, are nothing to do with hard financial times, but are a result of QMUL’s aim to raise its ranking in university league tables.

In the UK university league table, a university’s position is directly related to its government research funding. So they need to do well in the 2014 ‘Research Excellence Framework’ (REF). To achieve that they plan to recruit new staff with high research profiles, take on more PhD students and post-docs, obtain more research funding from grants, and get rid of staff who are not doing ‘good’ enough research.

So far, that’s exactly what every other university is trying to do. This sort of distortion is one of the harmful side-effects of the REF. But what’s particularly stupid about QMUL’s behaviour is the way they are going about it. You can assess your own chances of survival at QMUL’s School of Biological and Chemical Sciences from the following table, which is taken from an article by Jeremy Garwood (Lab Times Online. July 4, 2012). The numbers refer to the four year period from 2008 to 2011.

| Category of staff | Research Output Quantity | Research Output Quality | Research Income (£) | Research Income (£) |
|---|---|---|---|---|
| Professor | 11 | 2 | 400,000 | at least 200,000 |
| Reader | 9 | 2 | 320,000 | at least 150,000 |
| Senior Lecturer | 7 | 1 | 260,000 | at least 120,000 |
| Lecturer | 5 | 1 | 200,000 | at least 100,000 |

|

In addition to the three criteria, ‘Research Output ‐ quality’, ‘Research Output – quantity’, and ‘Research Income’, there is a minimum threshold of 1 PhD completion for staff at each academic level. All this data is “evidenced by objective metrics; publications cited in Web of Science, plus official QMUL metrics on grant income and PhD completion.” To survive, staff must meet the minimum threshold in three out of the four categories, except as follows: Demonstration of activity at an exceptional level in either ‘research outputs’ or ‘research income’, termed an ‘enhanced threshold’, is “sufficient” to justify selection regardless of levels of activity in the other two categories. And what are these enhanced thresholds? |

|

The university notes that the above criteria “are useful as entry standards into the new school, but they fall short of the levels of activity that will be expected from staff in the future. These metrics should not, therefore, be regarded as targets for future performance.” This means that those who survived the redundancy criteria will simply have to do better. But what is to reassure them that it won’t be their turn next time should they fail to match the numbers? To help them, Queen Mary is proposing to introduce ‘D3’ performance management (www.unions.qmul.ac.uk/ucu/docs/d3-part-one.doc). Based on more ‘administrative physics’, D3 is shorthand for ‘Direction × Delivery × Development.’ Apparently “all three are essential to a successful team or organisation. The multiplication indicates that where one is absent/zero, then the sum is zero!” D3 is based on principles of accountability: “A sign of a mature organisation is where its members acknowledge that they face choices, they make commitments and are ready to be held to account for discharging these commitments, accepting the consequences rather than seeking to pass responsibility.” Inspired? |

I presume the D3 document must have been written by an HR person. It has all the incoherent use of buzzwords so typical of HR. And it says "sum" when it means "product" (oh dear, innumeracy is rife).
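The difference between the two words is easy to check. Here is a minimal sketch, assuming made-up integer scores for the three D3 factors (the scale and the function name `d3_score` are hypothetical, not taken from the QMUL document). Only a product behaves the way the document describes, collapsing to zero when one factor is zero.

```python
from math import prod


def d3_score(direction, delivery, development):
    """Multiply the three factors, as 'Direction x Delivery x Development' implies."""
    return prod([direction, delivery, development])


# Hypothetical scores: strong direction and development, zero delivery.
factors = (3, 0, 2)

print("product:", d3_score(*factors))  # zero whenever any one factor is zero
print("sum:", sum(factors))            # a sum stays positive despite the zero
```
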

The criteria are utterly brainless. The use of impact factors for assessing people has been discredited at least since Seglen (1997) showed that the number of citations that a paper gets is not perceptibly correlated with the impact factor of the journal in which it’s published. The reason for this is that the distribution of the number of citations for papers in a particular journal is enormously skewed. This means that high-impact journals get most of their citations from a few articles.

|

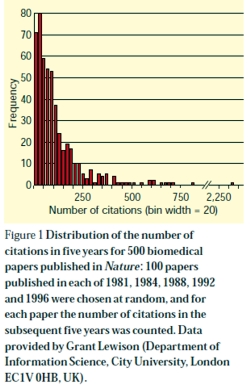

The distribution for Nature is shown in Fig. 1. Far from being gaussian, it is even more skewed than a geometric distribution; the mean number of citations is 114, but 69% of papers have fewer than the mean, and 24% have fewer than 30 citations. One paper has 2,364 citations but 35 have 10 or fewer. ISI data for citations in 2001 of the 858 papers published in Nature in 1999 show that the 80 most-cited papers (16% of all papers) account for half of all the citations (from Colquhoun, 2003)

|

|

The Institute for Scientific Information, ISI, is guilty of the unsound statistical practice of characterizing a distribution by its mean only, with no indication of its shape or even its spread. QMUL’s School of Biological and Chemical Sciences expects everyone to be above average in the new regime. Anomalously, the thresholds for psychologists are lower because it is said that it’s more difficult for them to get grants. This undermines even the twisted logic applied at the outset.
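To see why a mean says so little about a skewed distribution, here is a small sketch. The citation counts are invented for illustration (they are not ISI data, and the helper names are hypothetical). It compares the mean with the median, counts how many papers fall below the mean, and finds how few papers supply half of all the citations.

```python
from statistics import mean, median

# Made-up citation counts for 20 papers in one imaginary journal (not real data).
citations = [0, 1, 1, 2, 2, 3, 3, 4, 5, 5, 6, 8, 9, 11, 14, 18, 25, 40, 90, 500]


def share_below_mean(counts):
    """Fraction of papers with fewer citations than the mean."""
    m = mean(counts)
    return sum(1 for c in counts if c < m) / len(counts)


def papers_for_half(counts):
    """Smallest number of top-cited papers that supply at least half of all citations."""
    total = sum(counts)
    running = 0
    for n, c in enumerate(sorted(counts, reverse=True), start=1):
        running += c
        if 2 * running >= total:
            return n
    return len(counts)


print("mean:", mean(citations))
print("median:", median(citations))
print("share below mean:", share_below_mean(citations))
print("papers supplying half the citations:", papers_for_half(citations))
```

With numbers like these, one heavily cited paper drags the mean far above what a typical paper achieves, which is why a journal average tells you almost nothing about any individual paper in it.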

All this stuff about skewed distributions is, no doubt, a bit too technical for HR people to understand. Which, of course, is precisely why they should have nothing to do with assessing people.

At a time when so many PhDs fail to get academic jobs we should be limiting the numbers. But QMUL requires everyone to have a PhD student, not for the benefit of the student, but to increase its standing in league tables. That is deeply unethical.

The demand to have two papers in journals with impact factor greater than seven is nonsense. In physiology, for example, there are only four journals with an impact factor greater than seven and three of them are review journals that don’t publish original research. The two best journals for electrophysiology are Journal of Physiology (impact factor 4.98, in 2010) and Journal of General Physiology (IF 4.71). These are the journals that publish papers that get you into the Royal Society or even Nobel prizes. But for QMUL, they don’t count.

I have been lucky to know well three Nobel prize winners: Andrew Huxley, Bernard Katz, and Bert Sakmann. I doubt that any of them would pass the criteria laid down for a professor by QMUL. They would have been fired.

The case of Sakmann is analysed in How to Get Good Science, [pdf version]. In the 10 years from 1976 to 1985, when Sakmann rose to fame, he published an average of 2.6 papers per year (range 0 to 6). In two of these 10 years he had no publications at all. In the 4 year period (1976 – 1979 ) that started with the paper that brought him to fame (Neher & Sakmann, 1976) he published 9 papers, just enough for the Reader grade, but in the four years from 1979 – 1982 he had 6 papers, in 2 of which he was neither first nor last author. His job would have been in danger if he’d worked at QMUL. In 1991 Sakmann, with Erwin Neher, got the Nobel Prize for Physiology or Medicine.
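The arithmetic can be checked against the ‘quantity’ column of the table quoted from Garwood. This is a rough sketch only: it looks at the number of papers per four-year period and ignores the quality, income and PhD-completion criteria, and the function name is made up for illustration.

```python
# Minimum number of papers per four-year period, taken from the 'quantity'
# column of the table quoted earlier. Grades are listed from senior to junior.
MIN_PAPERS = {
    "Professor": 11,
    "Reader": 9,
    "Senior Lecturer": 7,
    "Lecturer": 5,
}


def highest_grade_met(papers):
    """Return the most senior grade whose quantity threshold is met, or None."""
    for grade, minimum in MIN_PAPERS.items():  # dicts keep insertion order
        if papers >= minimum:
            return grade
    return None


print("1976-1979, 9 papers:", highest_grade_met(9))
print("1979-1982, 6 papers:", highest_grade_met(6))
```

On quantity alone, neither four-year period would have reached the threshold set for a professor.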

The most offensive thing of the lot is the way you can buy yourself out if you publish 26 papers in the 4 year period. Sakmann came nowhere near this. And my own total, for the entire time from my first paper (1963) until I was elected to the Royal Society (May 1985) was 27 papers (and 7 book chapters). I would have been fired.

Peter Higgs had no papers at all from the time he moved to Edinburgh in 1960, until 1964 when his two papers on what’s now called the Higgs boson were published in Physics Letters. That journal now has an impact factor less than 7 so Queen Mary would not have counted them as “high quality” papers, and he would not have been returnable for the REF. He too would have been fired.

The encouragement to publish large numbers of papers is daft. I have seen people rejected from the Royal Society for publishing too much. If you are publishing a paper every six weeks, you certainly aren’t writing them, and possibly not even reading them. Most likely you are appending your name to somebody else’s work with little or no checking of the data. Such numbers can be reached only by unethical behaviour, as described by Peter Lawrence in The Mismeasurement of Science. Like so much managerialism, the rules provide an active encouragement to dishonesty.

In the face of such a boneheaded approach to assessment of your worth, it’s the duty of any responsible academic to point out the harm that’s being done to the College. Richard Horton, in the Lancet, did so in Bullying at Barts. There followed quickly letters from Stuart McDonald and Nick Wright, who used the Nuremberg defence, pointing out that the Dean (Tom Macdonald) was just obeying orders from above. That has never been an acceptable defence. If Macdonald agreed with the procedure, he should be fired for incompetence. If he did not agree with it he should have resigned.

It’s a pity, because Tom Macdonald was one of the people with whom I corresponded in support of Barts’ students who, very reasonably, objected to having course work marked by homeopaths (see St Bartholomew’s teaches antiscience, but students revolt, and, later, Bad medicine. Barts sinks further into the endarkenment). In that case he was not unreasonable, and, a mere two years later I heard that he’d taken action.

To cap it all, two academics did their job by applying a critical eye to what’s going on at Queen Mary. They wrote to the Lancet under the title Queen Mary: nobody expects the Spanish Inquisition

"For example, one of the “metrics” for research output at professorial level is to have published at least two papers in journals with impact factors of 7 or more. This is ludicrous, of course—a triumph of vanity as sensible as selecting athletes on the basis of their brand of track suit. But let us follow this “metric” for a moment. How does the Head of School fair? Zero, actually. He fails. Just consult Web of Science. Take care though, the result is classified information. HR’s “data” are marked Private and Confidential. Some things must be believed. To question them is heresy."

Astoundingly, the people who wrote this piece are now under investigation for “gross misconduct”. This is behaviour worthy of the University of Poppleton, as pointed out by the inimitable Laurie Taylor, in Times Higher Education (June 7)

|

The rustle of censorship It appears that last week’s edition of our sister paper, The Poppleton Evening News, carried a letter from Dr Gene Ohm of our Biology Department criticising this university’s metrics-based redundancy programme. We now learn that, following the precedent set by Queen Mary, University of London, Dr Ohm could be found guilty of “gross misconduct” and face “disciplinary proceedings leading to dismissal” for having the effrontery to raise such issues in a public place. Louise Bimpson, the corporate director of our ever-expanding human resources team, admitted that this response might appear “severe” but pointed out that Poppleton was eager to follow the disciplinary practices set by such soon-to-be members of the prestigious Russell Group as Queen Mary. Thus it was only to be expected that we would seek to emulate its espousal of draconian censorship. She hoped this clarified the situation. |

David Bignell, emeritus professor of zoology at Queen Mary hit the nail on the head.

"These managers worry me. Too many are modest achievers, retired from their own studies, intoxicated with jargon, delusional about corporate status and forever banging the metrics gong. Crucially, they don’t lead by example."

What the managers at Queen Mary have failed to notice is that the best academics can choose where to go.

People are being told to pack their bags and move out with one day’s notice. Access to journals stopped, email address removed, and you may need to be accompanied to your (ex)-office. Good scientists are being treated like criminals.

What scientist in their right mind would want to work at QMUL, now that their dimwitted assessment methods, and their bullying tactics, are public knowledge?

The responsibility must lie with the principal, Simon Gaskell. And we know what the punishment is for bringing your university into disrepute.

Follow-up

Send an email. You may want to join the many people who have already written to QMUL’s principal, Simon Gaskell (principal@qmul.ac.uk), and/or to Sir Nicholas Montagu, Chairman of Council, n.montagu@qmul.ac.uk.

Sunday 1 July 2012. Since this blog was posted after lunch on Friday 29th June, it has had around 9000 visits from 72 countries. Here is one of 17 maps showing the origins of 200 of the hits in the last two days

The tweets about QMUL are collected in a Storify timeline.

I’m reminded of a 2008 comment, on a post about the problems imposed by HR, In-human resources, science and pizza.

Thanks for that – I LOVED IT. It’s fantastic that the truth of HR (I truly hate that phrase) has been so ruthlessly exposed. Should be part of the School Handbook. Any VC who stripped out all the BS would immediately retain and attract good people and see their productivity soar.

That’s advice that Queen Mary should heed.

Part of the reason for that popularity was Ben Goldacre’s tweet, to his 201,000 followers

“destructive, unethical and crude metric incentives in academia (spotlight QMUL) bit.ly/MFHk2H by @david_colquhoun”

3 July 2012. I have come by a copy of this email, which was sent to Queen Mary by a senior professor from the USA (word travels fast on the web). It shows just how easy it is to destroy the reputation of an institution.

|

Sir Nicholas Montagu, Chairman of Council, and Principal Gaskell, I was appalled to read the criteria devised by your University to evaluate its faculty. There are so flawed it is hard to know where to begin. Your criteria are antithetical to good scientific research. The journals are littered with weak publications, which are generated mainly by scientists who feel the pressure to publish, no matter whether the results are interesting, valid, or meaningful. The literature is flooded by sheer volume of these publications. Your attempt to require “quality” research is provided by the requirement for publications in “high Impact Factor” journals. IF has been discredited among scientists for many reasons: it is inaccurate in not actually reflecting the merit of the specific paper, it is biased toward fields with lots of scientists, etc. The demand for publications in absurdly high IF journals encourages, and practically enforces scientific fraud. I have personally experienced those reviews from Nature demanding one or two more “final” experiments that will clinch the publication. The authors KNOW how these experiments MUST turn out. If they want their Nature paper (and their very academic survival if they are at a brutal, anti-scientific university like QMUL), they must get the “right” answer. The temptation to fudge the data to get this answer is extreme. Some scientists may even be able to convince themselves that each contrary piece of data that they discard to ensure the “correct” answer is being discarded for a valid reason. But the result is that scientific misconduct occurs. I did not see in your criteria for “success” at QMUL whether you discount retracted papers from the tally of high IF publications, or perhaps the retraction itself counts as yet another high IF publication! 
Your requirement for each faculty to have one or more postdocs or students promotes the abusive exploitation of these individuals for their cheap labor, and ignores the fact that they are being “trained” for jobs that do not exist. The “standards” you set are fantastically unrealistic. For example, funding is not graded, but a sharp step function – we have 1 or 2 or 0 grants and even if the average is above your limits, no one could sustain this continuously. Once you have fired every one of your faculty, which will almost certainly happen within 1-2 rounds of pogroms, where will you find legitimate scientists who are willing to join such a ludicrous University? |

4 July 2012.

Professor John F. Allen is Professor of Biochemistry at Queen Mary, University of London, and distinguished in the fields of Photosynthesis, Chloroplasts, Mitochondria, Genome function and evolution and Redox signalling. He, with a younger colleague, wrote a letter to the Lancet, Queen Mary: nobody expects the Spanish Inquisition. It is an admirable letter, the sort of thing any self-respecting academic should write. But not according to HR. On 14 May, Allen got a letter from HR, which starts thus.

|

14th May 2012 Dear Professor Allen I am writing to inform you that the College had decided to commence a factfinding investigation into the below allegation: That in writing and/or signing your name to a letter entitled "Queen Mary: nobody expects the Spanish Inquisition," (enclosed) which was published in the Lancet online on 4th May 2012, you sought to bring the Head of School of Biological and Chemical Sciences and the Dean for Research in the School of Medicine and Dentistry into disrepute. . . . . Sam Holborn |

Download the entire letter. It is utterly disgraceful bullying. If anyone is bringing Queen Mary into disrepute, it is Sam Holborn and the principal, Simon Gaskell.

Here’s another letter, from the many that have been sent. This is from a researcher in the Netherlands.

|

Dear Sir Nicholas,

I am addressing this to you in the hope that you were not directly involved in creating this extremely stupid set of measures that have been thought up, not to improve the conduct of science at QMUL, but to cheat QMUL’s way up the league tables over the heads of the existing academic staff. Others have written more succinctly about the crass stupidity of your Human Resources department than I could, and their apparent ignorance of how science actually works. As your principal must bear full responsibility for the introduction of these measures, I am not sending him a copy of this mail. I am pretty sure that his “principal” mail address will no longer be operative. We have had a recent scandal in the Netherlands where a social psychology professor, who even won a national “Man of the Year” award, as well as as a very large amount of research money, was recently exposed as having faked all the data that went into a total number of articles running into three figures. This is not the sort of thing one wants to happen to one’s own university. He would have done well according to your REF .. before he was found out. Human Resources departments have gained too much power, and are completely incompetent when it comes to judging academic standards. Let them get on with the old dull, and gobbledigook-free, tasks that personnel departments should be carrying out. |

5 July 2012.

Here’s another letter. It’s from a member of academic staff at QMUL, someone who is not himself threatened with being fired. It certainly shows that I’m not making a fuss about nothing. Rather, I’m the only person old enough to say what needs to be said without fear of losing my job and my house.

|

Dear Prof. Colquhoun,

I am an academic staff member in SBCS, QMUL. I am writing from my personal email account because the risks of using my work account to send this email are too great. I would like to thank you for highlighting our problems and how we have been treated by our employer (Queen Mary University of London), in your blog. I would please urge you to continue to tweet and blog about our plight, and staff in other universities experiencing similarly horrific working conditions. I am not threatened with redundancy by QMUL, and in fact my research is quite successful. Nevertheless, the last nine months have been the most stressful of all my years of academic life. The best of my colleagues in SBCS, QMUL are leaving already and I hope to leave, if I can find another job in London. Staff do indeed feel very unfairly treated, intimidated and bullied. I never thought a job at a university could come to this.

Thank you again for your support. It really does matter to the many of us who cannot really speak out openly at present.

Best regards,

|

In a later letter, the same person pointed out

"There are many of us who would like to speak more openly, but we simply cannot."

"I have mortgage . . . . Losing my job would probably mean losing my home too at this point."

"The plight of our female staff has not even been mentioned. We already had very few female staff. And with restructuring, female staff are more likely to be forced into teaching-only contracts or indeed fired."

"total madness in the current climate – who would want to join us unless desperate for a job!"

"“fuss about nothing” – absolutely not. It is potentially a perfect storm leading to teaching and research disaster for a university! Already the reputation of our university has been greatly damaged. And senior staff keep blaming and targeting the “messengers”."

6 July 2012.

Through the miracle of WiFi, this is coming from Newton, MA. The Lancet today has another editorial on the Queen Mary scandal.

"As hopeful scientists prepare their applications to QMUL, they should be aware that, behind the glossy advertising, a sometimes harsh, at times repressive, and disturbingly unforgiving culture awaits them."

That sums it up nicely.

24 July 2012. I’m reminded by Nature writer, Richard van Noorden (@Richvn) that Nature itself has written at least twice about the iniquity of judging people by impact factors. In 2005 Not-so-deep impact said

"Only 50 out of the roughly 1,800 citable items published in those two years received more than 100 citations in 2004. The great majority of our papers received fewer than 20 citations."

"None of this would really matter very much, were it not for the unhealthy reliance on impact factors by administrators and researchers’ employers worldwide to assess the scientific quality of nations and institutions, and often even to judge individuals."

And, more recently, in Assessing assessment (2010).

29 July 2012. Jonathan L Rees, of the University of Edinburgh, ends his blog:

"I wonder what career advice I should offer to a young doctor circa 2012. Apart from not taking a job at Queen Mary of course. "

How to select candidates

I have, at various times, been asked how I would select candidates for a job, if not by counting papers and impact factors. This is a slightly modified version of a comment that I left on a blog, which describes roughly what I’d advocate

After a pilot study the entire Research Excellence Framework (which attempts to assess the quality of research in every UK university) made the following statement.

“No sub-panel will make any use of journal impact factors, rankings, lists or the perceived standing of publishers in assessing the quality of research outputs”

It seems that the REF is paying attention to the science not to bibliometricians.

It has been the practice at UCL to ask people to nominate their best papers (2 to 4 papers depending on age). We then read the papers and asked candidates hard questions about them (not least about the methods section). It’s a method that I learned a long time ago from Stephen Heinemann, a senior scientist at the Salk Institute. It’s often been surprising to learn how little some candidates know about the contents of papers which they themselves select as their best. One aim of this is to find out how much the candidate understands the principles of what they are doing, as opposed to following a recipe.

Of course we also seek the opinions of people who know the work, and preferably know the person. Written references have suffered so much from ‘grade inflation’ that they are often worthless, but a talk on the telephone to someone that knows both the work, and the candidate, can be useful. That, however, is now banned by HR who seem to feel that any knowledge of the candidate’s ability would lead to bias.

It is not true that use of metrics is universal and thank heavens for that. There are alternatives and we use them.

Incidentally, the reason that I have described the Queen Mary procedures as insane, brainless and dimwitted is because their aim to increase their ratings is likely to be frustrated. No person in their right mind would want to work for a place that treats its employees like that, if they had any other option. And it is very odd that their attempt to improve their REF rating uses criteria that have been explicitly ruled out by the REF. You can’t get more brainless than that.

This discussion has been interesting to me, if only because it shows how little bibliometricians understand how to get good science.

This is a fuller version, with links, of the comment piece published in Times Higher Education on 10 April 2008. Download newspaper version here.

If you still have any doubt about the problems of directed research, look at the trenchant editorial in Nature (3 April 2008). Look also at the editorial in Science by Bruce Alberts. The UK’s establishment is busy pushing an agenda that is already fading in the USA.

Since this went to press, more sense about “Brain Gym” has appeared. First Jeremy Paxman had a good go on Newsnight. Skeptobot has posted links to the videos of the broadcast, which have now appeared on YouTube.

Then, in the Education Guardian, Charlie Brooker started his article about “Brain Gym” thus

Dr Aust’s cogent comments are at “Brain Gym” loses its trousers. |

|

The Times Higher’s subeditor removed my snappy title and substituted this.

So here it is.

“HR is like many parts of modern businesses: a simple expense, and a burden on the backs of the productive workers”, “They don’t sell or produce: they consume. They are the amorphous support services”.

So wrote Luke Johnson recently in the Financial Times. He went on, “Training advisers are employed to distract everyone from doing their job with pointless courses”. Luke Johnson is no woolly-minded professor. He is in the Times’ Power 100 list, he organised the acquisition of PizzaExpress before he turned 30 and he now runs Channel 4 TV.

Why is it that Human Resources (you know, the folks we used to call Personnel) have acquired such a bad public image? It is not only in universities that this has happened. It seems to be universal, and worldwide. Well here are a few reasons.

Like most groups of people, HR is intent on expanding its power and status. That is precisely why they changed their name from Personnel to HR. As Personnel Managers they were seen as a service, and even, heaven forbid, on the side of the employees. As Human Resources they become part of the senior management team, and see themselves not as providing a service, but as managing people. My concern is the effect that change is having on science, but it seems that the effects on pizza sales are not greatly different.

The problem with having HR people (or lawyers, or any other non-scientists) managing science is simple. They have no idea how it works. They seem to think that every activity can be run as though it was Wal-Mart. That idea is old-fashioned even in management circles. Good employers have hit on the bright idea that people work best when they are not constantly harassed and when they feel that they are assessed fairly. If the best people don’t feel that, they just leave at the first opportunity. That is why the culture of managerialism and audit, though rampant, will do harm in the end to any university that embraces it.

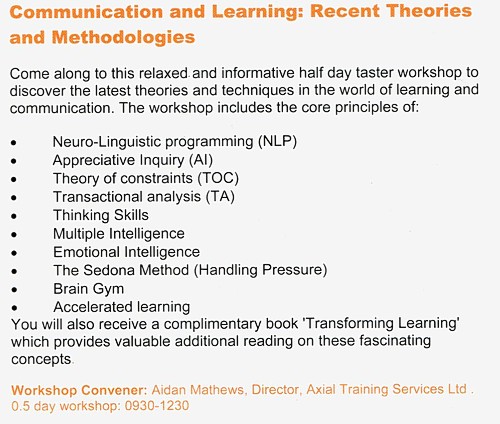

As it happens, there was a good example this week of the damage that can be inflicted on intellectual standards by the HR mentality. As a research assistant, I was sent the Human Resources Division Staff Development and Training booklet. Some of the courses they run are quite reasonable. Others amount to little more than the promotion of quackery. Here are three examples. We are offered courses in “Self-hypnosis”, in “Innovations for Researchers” and in “Communication and Learning: Recent Theories and Methodologies”. What’s wrong with them?

“Self-hypnosis” seems to be nothing more than a pretentious word for relaxation. The person who is teaching researchers to innovate left science straight after his PhD and then did courses in “neurolinguistic programming” and life-coaching (the Carole Caplin of academia perhaps?). How that qualifies him to teach scientists to be innovative in research may not be obvious.

The third course teaches, among other things, the “core principles” of neurolinguistic programming, the Sedona method (“Your key to lasting happiness, success, peace and well-being”), and, wait for it, Brain Gym. This booklet arrived within a day or two of Ben Goldacre’s spectacular demolition of Brain Gym, “Nonsense dressed up as neuroscience”.

“Brain Gym is a set of perfectly good fun exercise break ideas for kids, which costs a packet and comes attached to a bizarre and entirely bogus pseudoscientific explanatory framework”

“This ridiculousness comes at very great cost, paid for by you, the taxpayer, in thousands of state schools. It is peddled directly to your children by their credulous and apparently moronic teachers”

And now, it seems, peddled to your researchers by your credulous and moronic HR department.

Neurolinguistic programming is an equally discredited form of psycho-babble, the dubious status of which was highlighted in Beyerstein’s 1995 review, from Simon Fraser University.

“Pop-psychology. The human potential movement and the fringe areas of psychotherapy also harbor a number of other scientifically questionable panaceas. Among these are Scientology, Neurolinguistic Programming, Re-birthing and Primal Scream Therapy which have never provided a scientifically acceptable rationale or evidence to support their therapeutic claims.”

The intellectual standards for many of the training courses that are inflicted on young researchers seem to be roughly on a par with the self-help pages of a downmarket women’s magazine. It is the Norman Vincent Peale approach to education. Uhuh, sorry, not education, but training. Michael O’Donnell defined Education as “Elitist activity. Cost ineffective. Unpopular with Grey Suits. Now largely replaced by Training.”

In the UK most good universities have stayed fairly free of quackery (the exceptions being the sixteen post-1992 universities that give BSc degrees in things like homeopathy). But now it is creeping in through the back door of credulous HR departments. Admittedly UCL Hospitals Trust recently advertised for spiritual healers, but that is the NHS, not a university. The job specification form for spiritual healers was, it’s true, a pretty good example of the HR box-ticking mentality. You are in as long as you could tick the box to say that you have a “Full National Federation of Spiritual Healer certificate, or a full Reiki Master qualification, and two years post certificate experience”. To the HR mentality, it doesn’t matter a damn if you have a certificate in balderdash, as long as you have the piece of paper. How would they know the difference?

A lot of the pressure for this sort of nonsense comes, sadly, from a government that is obsessed with measuring the unmeasurable. Again, real management people have already worked this out. The management editor of the Guardian said

“What happens when bad measures drive out good is strikingly described in an article in the current Economic Journal. Investigating the effects of competition in the NHS, Carol Propper and her colleagues made an extraordinary discovery. Under competition, hospitals improved their patient waiting times. At the same time, the death-rate for emergency heart-attack admissions substantially increased.”

Two new government initiatives provide beautiful examples of the HR mentality in action. They are Skills for Health, and the recently-created Complementary and Natural Healthcare Council (already dubbed OfQuack).

The purpose of the Natural Healthcare Council seems to be to implement a box-ticking exercise that will have the effect of giving a government stamp of approval to treatments that don’t work. Polly Toynbee summed it up when she wrote about “Quackery and superstition – available soon on the NHS”. The advertisement for its CEO has already appeared. It says that the main function of the new body will be to enhance public protection and confidence in the use of complementary therapists. Shouldn’t it be decreasing confidence in quacks, not increasing it? But, disgracefully, they will pay no attention at all to whether the treatments work. And the advertisement refers you to the Prince of Wales’ Foundation for Integrated Health for more information (hang on, aren’t we supposed to have a constitutional monarchy?).

Skills for Health, or rather that unofficial branch of government, the Prince of Wales’ Foundation, had been busy making ‘competences’ for distant healing, with a helpful bulleted list.

“This workforce competence is applicable to:

- healing in the presence of the client

- distant healing in contact with the client

- distant healing not in contact with the client”

And they have done the same for homeopathy and its kindred delusions. The one thing they never consider is whether they are writing ‘competences’ in talking gobbledygook. When I phoned them to try to find out who was writing this stuff (they wouldn’t say), I made a passing joke about writing competences in talking to trees. The answer came back, in all seriousness,

“You’d have to talk to LANTRA, the land-based organisation for that”,

“LANTRA which is the sector council for the land-based industries uh, sector, not with us sorry . . . areas such as horticulture etc.”.

Anyone for competences in sense of humour studies?

The “unrepentant capitalist” Luke Johnson, in the FT, said

“I have radically downsized HR in several companies I have run, and business has gone all the better for it.”

Now there’s a thought.

The follow-up

The provost’s newsletter for 24th June 2008 could just be a delayed reaction to this piece? For no obvious reason, it starts thus.

“(1) what’s management about?

Human resources often gets a bad name in universities, because as academics we seem to sense instinctively that management isn’t for us. We are autonomous lone scholars who work hours well beyond those expected, inspired more by intellectual curiosity than by objectives and targets. Yet a world-class institution like UCL obviously requires high quality management, a theme that I reflect on whenever I chair the Human Resources Policy Committee, or speak at one of the regular meetings to welcome new staff to UCL. The competition is tough, and resources are scarce, so they need to be efficiently used. The drive for better management isn’t simply a preoccupation of some distant UCL bureaucracy, but an important responsibility for all of us. UCL is a single institution, not a series of fiefdoms; each of us contributes to the academic mission and good management permeates everything we do. I despair at times when quite unnecessary functional breakdowns are brought to my attention, sometimes even leading to proceedings in the Employment Tribunal, when it is clear that early and professional management could have stopped the rot from setting in years before. UCL has long been a leader in providing all newly appointed heads of department with special training in management, and the results have been impressive. There is, to say the least, a close correlation between high performing departments and the quality of their academic leadership. At its best, the ethos of UCL lies in working hard but also in working smart; in understanding that UCL is a world-class institution and not the place for a comfortable existence free from stretch and challenge; yet also a good place for highly-motivated people who are also smart about getting the work-life balance right.”

I don’t know quite what to make of this. Is it really a defence of the Brain Gym mentality?

Of course everyone wants good management. That’s obvious, and we really don’t need a condescending lecture about it. The interesting question is whether we are getting it.

There is nothing one can really object to in this lecture, apart from the stunning post hoc ergo propter hoc fallacy implicit in “UCL has long been a leader in providing all newly appointed heads of department with special training in management, and the results have been impressive.” That’s worthy of a nutritional therapist.

Before I started writing this response at 08.25 I had already got an email from a talented and hard-working senior postdoc. “Let’s start our beautiful working day with this charging thought of the week:”.

He was obviously rather insulted at the suggestion that it was necessary to lecture academics with words like “not the place for a comfortable existence free from stretch and challenge; yet also a good place for highly-motivated people who are also smart about getting the work-life balance right.” I suppose nobody had thought of that until HR wrote it down in a “competence”?

To provoke this sort of reaction in our most talented young scientists could, arguably, be regarded as unfortunate.

I don’t blame the postdoc for feeling a bit insulted by this little homily.

So do I.

Now back to science.

This is an old joke which can be found in many places on the web, with minor variations. I came across it in an article by Gustav Born in 2002 (BIF Futura, 17, 78 – 86) and reproduce what he said. It has never been more relevant, so it’s well worth repeating. The title of the article was British medical education and research in the new century.

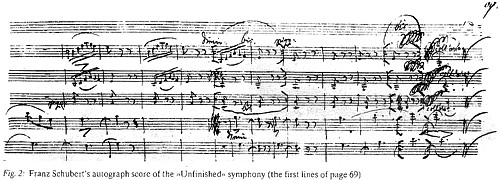

“The other deleterious development in UK research is increased bureaucratic control. Bureaucracy is notoriously bad for all creative activities. The story is told of a company chairman who was given a ticket to a concert in which Schubert’s Unfinished Symphony was to be played. Unable to go himself, he passed the ticket on to his colleague, the director in charge of administration and personnel. The next day the chairman asked, ‘Did you enjoy the concert?’ His colleague replied, ‘My report will be on your desk this afternoon’. This puzzled the chairman, who later received the following:

Report on attendance at a musical concert dated 14 November 1989. Item 3.

Schubert’s Unfinished Symphony.

- The attendance of the orchestra conductor is unnecessary for public performance. The orchestra has obviously practiced and has the prior authorization from the conductor to play the symphony at a predetermined level of quality. Considerable money could be saved by merely having the conductor critique the performance during a retrospective peer-review meeting.

- For considerable periods, the four oboe players had nothing to do. Their numbers should be reduced, and their work spread over the whole orchestra, thus eliminating the peaks and valleys of activity.

- All twelve violins were playing identical notes with identical motions. This was unnecessary duplication. If a larger volume is required, this could be obtained through electronic amplification which has reached very high levels of reproductive quality.

- Much effort was expended in playing sixteenth notes, or semi-quavers. This seems to me an excessive refinement, as listeners are unable to distinguish such rapid playing. It is recommended that all notes be rounded up to the nearest semi-quaver. If this were done, it would be possible to use trainees and lower-grade operatives more extensively.

- No useful purpose would appear to be served by repeating with horns the same passage that has already been handled by the strings. If all such redundant passages were eliminated, as determined by a utilization committee, the concert could have been reduced from two hours to twenty minutes with great savings in salaries and overhead.

- In fact, if Schubert had attended to these matters on a cost containment basis, he probably would have been able to finish his symphony.