
‘We know little about the effect of diet on health. That’s why so much is written about it’. That is the title of a post in which I advocate the view put by John Ioannidis that remarkably little is known about the health effects of individual nutrients. That ignorance has given rise to a vast industry selling advice that has little evidence to support it.

The 2016 Conference of the so-called "College of Medicine" had the title "Food, the Forgotten Medicine". This post gives some background information about some of the speakers at this event. I’m sorry it appears to be too ad hominem, but the only way to judge the meeting is via the track record of the speakers.

Quite a lot has been written here about the "College of Medicine". It is the direct successor of the Prince of Wales’ late, unlamented, Foundation for Integrated Health. But unlike the latter, its name disguises its promotion of quackery. Originally it was going to be called the “College of Integrated Health”, but that wasn’t sufficiently deceptive so the name was dropped.

For the history of the organisation, see

Don’t be deceived. The new “College of Medicine” is a fraud and delusion

The College of Medicine is in the pocket of Crapita Capita. Is Graeme Catto selling out?

The conference programme (download pdf) is a masterpiece of bait and switch. It is a mixture of very respectable people, and outright quacks. The former are invited to give legitimacy to the latter. The names may not be familiar to those who don’t follow the antics of the magic medicine community, so here is a bit of information about some of them.

The introduction to the meeting was by Michael Dixon and Catherine Zollman, both veterans of the Prince of Wales Foundation, and both devoted enthusiasts for magic medicine. Zollman even believes in the battiest of all forms of magic medicine, homeopathy (download pdf), for which she totally misrepresents the evidence. Zollman works now at the Penny Brohn centre in Bristol. She’s also linked to the "Portland Centre for integrative medicine" which is run by Elizabeth Thompson, another advocate of homeopathy. It came into being after NHS Bristol shut down the Bristol Homeopathic Hospital, on the very good grounds that it doesn’t work.

Now, like most magic medicine it is privatised. The Penny Brohn shop will sell you a wide range of expensive and useless "supplements". For example, Biocare Antioxidant capsules at £37 for 90. Biocare make several unjustified claims for their benefits. Among other unnecessary ingredients, they contain a very small amount of green tea. That’s a favourite of "health food addicts", and it was the subject of a recent paper that contains one of the daftest statistical solecisms I’ve ever encountered:

"To protect against type II errors, no corrections were applied for multiple comparisons".

If you don’t understand that, try this paper.

The results are almost certainly false positives, despite the fact that it appeared in Lancet Neurology. It’s yet another example of broken peer review.
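To see why that sentence is a solecism, it helps to work out how fast false positives pile up when several uncorrected tests are made. Here is a minimal sketch. It assumes independent tests, each at the 5% level, with no real effects at all. The numbers of comparisons are hypothetical and are not taken from the green tea paper.

```python
def familywise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive in n_tests independent tests
    when every null hypothesis is true and each test uses threshold alpha."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_threshold(n_tests: int, alpha: float = 0.05) -> float:
    """Per-test threshold that keeps the family-wise rate at or below alpha."""
    return alpha / n_tests


# Hypothetical numbers of comparisons, for illustration only.
for n in (1, 5, 10, 20):
    print(n, round(familywise_error_rate(n), 3), bonferroni_threshold(n))
```

Under these assumptions, 20 uncorrected comparisons give about a 64% chance of at least one "significant" result when nothing is going on. Declining to correct does reduce type II errors, but only by letting false positives through.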

It’s been known for decades now that “antioxidant” is no more than a marketing term. There is no evidence of benefit and large doses can be harmful. This obviously doesn’t worry the College of Medicine.

Margaret Rayman was the next speaker. She’s a real nutritionist. Mixing the real with the crackpots is a standard bait and switch tactic.

Eleni Tsiompanou came next. She runs yet another private "wellness" clinic, which makes all the usual exaggerated claims. She seems to have an obsession with Hippocrates (hint: medicine has moved on since then). Dr Eleni’s Joy Biscuits may or may not taste good, but their health-giving properties are make-believe.

Andrew Weil, from the University of Arizona, gave the keynote address. He’s described as "one of the world’s leading authorities on Nutrition and Health". That description alone is sufficient to show the fantasy land in which the College of Medicine exists. He’s a typical supplement salesman, presumably very rich. There is no excuse for not knowing about him. It was 1988 when Arnold Relman (who was editor of the New England Journal of Medicine) wrote A Trip to Stonesville: Some Notes on Andrew Weil, M.D.

“Like so many of the other gurus of alternative medicine, Weil is not bothered by logical contradictions in his argument, or encumbered by a need to search for objective evidence.”

This blog has mentioned his more recent activities, many times.

Alex Richardson, of Oxford Food and Behaviour Research (a charity, not part of the university) is an enthusiast for omega-3, a favourite of the supplement industry. She has published several papers that show little evidence of effectiveness. That looks entirely honest. On the other hand, their News section contains many links to the notorious supplement industry lobby site, Nutraingredients, one of the least reliable sources of information on the web (I get their newsletter, a constant source of hilarity and raised eyebrows). I find this worrying for someone who claims to be evidence-based. I’m told that her charity is funded largely by the supplement industry (though I can’t find any mention of that on the web site).

Stephen Devries was a new name to me. You can infer what he’s like from the fact that he has been endorsed by Andrew Weil, and that his address is "Institute for Integrative Cardiology" ("Integrative" is the latest euphemism for quackery). Never trust any talk with a title that contains "The truth about". His was called "The scientific truth about fats and sugars". In a video, he claims that diet has been shown to reduce heart disease by 70%, which gives you a good idea of his ability to assess evidence. But the claim doubtless helps to sell his books.

Prof Tim Spector, of King’s College London, was next. As far as I know he’s a perfectly respectable scientist, albeit one with books to sell. But his talk is now online, and he came across as a bit of a born-again microbiome enthusiast. He seemed to be too impressed by the PREDIMED study, despite its statistical unsoundness, which was pointed out by Ioannidis. Little evidence was presented, though at least he was more sensible than the audience about the uselessness of multivitamin tablets.

Simon Mills talked on “Herbs and spices. Using Mother Nature’s pharmacy to maintain health and cure illness”. He’s a herbalist who has featured here many times. I can recommend especially his video about Hot and Cold herbs as a superb example of fantasy science.

Annie Anderson is Professor of Public Health Nutrition and Founder of the Scottish Cancer Prevention Network. She’s a respectable nutritionist and public health person, albeit with their customary disregard of problems of causality.

Patrick Holden is chair of the Sustainable Food Trust. He promotes "organic farming". Much though I dislike the cruelty of factory farms, the "organic" industry is largely a way of making food more expensive with no health benefits.

The Michael Pittilo 2016 Student Essay Prize was awarded after lunch. Pittilo has featured frequently on this blog as a result of his execrable promotion of quackery: see, in particular, A very bad report: gamma minus for the vice-chancellor.

Nutritional advice for patients with cancer. This discussion involved three people.

Professor Robert Thomas, Consultant Oncologist, Addenbrookes and Bedford Hospitals, Dr Clare Shaw, Consultant Dietitian, Royal Marsden Hospital and Dr Catherine Zollman, GP and Clinical Lead, Penny Brohn UK.

Robert Thomas came to my attention when I noticed that he, a regular cancer consultant, had spoken at a meeting of the quack charity, “YestoLife”. Then I saw he was scheduled to speak at another quack conference. After I’d written to him to point out the track records of some of the people at the meeting, he withdrew from one of them. See The exploitation of cancer patients is wicked. Carrot juice for lunch, then die destitute. The influence seems to have been temporary though. He continues to lend respectability to many dodgy meetings. He edits the Cancernet web site. This site lends credence to bizarre treatments like homeopathy and crystal healing. It used to sell hair mineral analysis, a well-known phony diagnostic method the main purpose of which is to sell you expensive “supplements”. They still sell the “Cancer Risk Nutritional Profile” for £295.00, despite the fact that it provides no proven benefits.

Robert Thomas designed a food "supplement", Pomi-T: capsules that contain Pomegranate, Green tea, Broccoli and Curcumin. Oddly, he seems still to subscribe to the antioxidant myth. Even the supplement industry admits that that’s a lost cause, but that doesn’t stop its use in marketing. The one randomised trial of these pills for prostate cancer was inconclusive. Prostate Cancer UK says "We would not encourage any man with prostate cancer to start taking Pomi-T food supplements on the basis of this research". Nevertheless it’s promoted on Cancernet.co.uk and widely sold. The Pomi-T site boasts about the (inconclusive) trial, but says "Pomi-T® is not a medicinal product".

There was a cookery demonstration by Dale Pinnock, "The medicinal chef". The programme does not tell us whether he made his signature dish "the Famous Flu Fighting Soup". Needless to say, there isn’t the slightest reason to believe that his soup has the slightest effect on flu.

In summary, the whole meeting was devoted to exaggerating vastly the effect of particular foods. It also acted as advertising for people with something to sell. Much of it was outright quackery, with a leavening of more respectable people, a standard part of the bait-and-switch methods used by all quacks in their attempts to make themselves sound respectable. I find it impossible to tell how much the participants actually believe what they say, and how much it’s a simple commercial drive.

The thing that really worries me is why someone like Phil Hammond supports this sort of thing by chairing their meetings (as he did for the "College of Medicine’s" direct predecessor, the Prince’s Foundation for Integrated Health). His defence of the NHS has made him something of a hero to me. He assured me that he’d asked people to stick to evidence. In that he clearly failed. I guess they must pay well.

Follow-up

“Statistical regression to the mean predicts that patients selected for abnormalcy will, on the average, tend to improve. We argue that most improvements attributed to the placebo effect are actually instances of statistical regression.”

“Thus, we urge caution in interpreting patient improvements as causal effects of our actions and should avoid the conceit of assuming that our personal presence has strong healing powers.”

In 1955, Henry Beecher published "The Powerful Placebo". I was in my second undergraduate year when it appeared. And for many decades after that I took it literally. He looked at 15 studies and found that, on average, 35% of patients got "satisfactory relief" when given a placebo. This number got embedded in pharmacological folk-lore. He also mentioned that the relief provided by placebo was greatest in patients who were most ill.

Consider the common experiment in which a new treatment is compared with a placebo, in a double-blind randomised controlled trial (RCT). It’s common to call the responses measured in the placebo group the placebo response. But that is very misleading, and here’s why.

The responses seen in the group of patients that are treated with placebo arise from two quite different processes. One is the genuine psychosomatic placebo effect. This effect gives genuine (though small) benefit to the patient. The other contribution comes from the get-better-anyway effect. This is a statistical artefact and it provides no benefit whatsoever to patients. There is now increasing evidence that the latter effect is much bigger than the former.

How can you distinguish between real placebo effects and the get-better-anyway effect?

The only way to measure the size of genuine placebo effects is to compare in an RCT the effect of a dummy treatment with the effect of no treatment at all. Most trials don’t have a no-treatment arm, but enough do that estimates can be made. For example, a Cochrane review by Hróbjartsson & Gøtzsche (2010) looked at a wide variety of clinical conditions. Their conclusion was:

“We did not find that placebo interventions have important clinical effects in general. However, in certain settings placebo interventions can influence patient-reported outcomes, especially pain and nausea, though it is difficult to distinguish patient-reported effects of placebo from biased reporting.”

In some cases, the placebo effect is barely there at all. In a non-blind comparison of acupuncture and no acupuncture, the responses were essentially indistinguishable (despite what the authors and the journal said). See "Acupuncturists show that acupuncture doesn’t work, but conclude the opposite".

So the placebo effect, though a real phenomenon, seems to be quite small. In most cases it is so small that it would be barely perceptible to most patients. The main reason why so many people think that medicines work when they don’t isn’t the placebo response: it’s a statistical artefact.

Regression to the mean is a potent source of deception

The get-better-anyway effect has a technical name, regression to the mean. It has been understood since Francis Galton described it in 1886 (see Senn, 2011 for the history). It is a statistical phenomenon, and it can be treated mathematically (see references, below). But when you think about it, it’s simply common sense.

You tend to go for treatment when your condition is bad, and when you are at your worst, then a bit later you’re likely to be better. The great biologist Peter Medawar commented thus.

"If a person is (a) poorly, (b) receives treatment intended to make him better, and (c) gets better, then no power of reasoning known to medical science can convince him that it may not have been the treatment that restored his health"

(Medawar, P.B. (1969:19). The Art of the Soluble: Creativity and originality in science. Penguin Books: Harmondsworth).

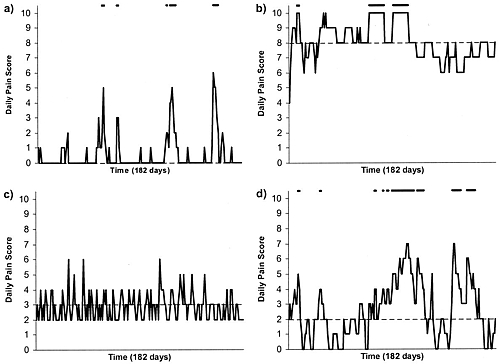

This is illustrated beautifully by measurements made by McGorry et al. (2000). Patients with low back pain recorded their pain (on a 10 point scale) every day for 5 months (they were allowed to take analgesics ad lib).

The results for four patients are shown in their Figure 2. On average they stay fairly constant over five months, but they fluctuate enormously, with different patterns for each patient. Painful episodes that last for 2 to 9 days are interspersed with periods of lower pain or none at all. It is very obvious that if these patients had gone for treatment at the peak of their pain, then a while later they would feel better, even if they were not actually treated. And if they had been treated, the treatment would have been declared a success, despite the fact that the patient derived no benefit whatsoever from it. This entirely artefactual benefit would be the biggest for the patients that fluctuate the most (e.g. those in panels a and d of the figure).

Figure 2 from McGorry et al, 2000. Examples of daily pain scores over a 6-month period for four participants. Note: Dashes of different lengths at the top of a figure designate an episode and its duration.
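The same thing can be seen in a toy simulation. This is only a sketch under stated assumptions: every patient's pain fluctuates around a constant long-run level, nobody is treated, and each patient "seeks treatment" on the first day that pain reaches a threshold. The function names and all the numbers (mean pain, persistence, noise, threshold, follow-up time) are hypothetical and are not fitted to the McGorry data.

```python
import random
from statistics import mean


def simulate_patient(rng, days=150, mean_pain=4.0, persistence=0.8, noise_sd=1.2):
    """Daily pain scores that fluctuate around a constant long-run mean.
    Each day's pain drifts back towards mean_pain and gets a random kick."""
    pain = mean_pain
    scores = []
    for _ in range(days):
        pain = mean_pain + persistence * (pain - mean_pain) + rng.gauss(0.0, noise_sd)
        scores.append(min(10.0, max(0.0, pain)))  # keep scores on the 0-10 scale
    return scores


def apparent_improvement(rng, n_patients=2000, threshold=7.0, follow_up=14):
    """Untreated patients 'consult' on the first day that pain >= threshold.
    Returns mean pain at consultation and mean pain follow_up days later."""
    at_visit, later = [], []
    for _ in range(n_patients):
        scores = simulate_patient(rng)
        for day, score in enumerate(scores[:-follow_up]):
            if score >= threshold:
                at_visit.append(score)
                later.append(scores[day + follow_up])
                break
    return mean(at_visit), mean(later)


rng = random.Random(2015)  # fixed seed so that the run is repeatable
at_visit, two_weeks_later = apparent_improvement(rng)
print(f"mean pain when seeking treatment: {at_visit:.1f}")
print(f"mean pain 14 days later, untreated: {two_weeks_later:.1f}")
```

In this model the pain two weeks after the "consultation" is, on average, well below the pain at consultation, although nothing was done and the long-run level never changed. Any treatment given at the consultation would have got the credit.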

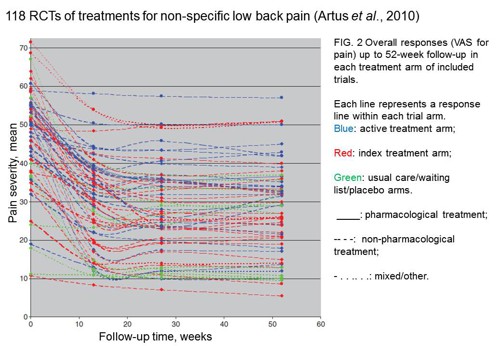

The effect is illustrated well by an analysis of 118 trials of treatments for non-specific low back pain (NSLBP), by Artus et al., (2010). The time course of pain (rated on a 100 point visual analogue pain scale) is shown in their Figure 2. There is a modest improvement in pain over a few weeks, but this happens regardless of what treatment is given, including no treatment whatsoever.

FIG. 2 Overall responses (VAS for pain) up to 52-week follow-up in each treatment arm of included trials. Each line represents a response line within each trial arm. Red: index treatment arm; Blue: active treatment arm; Green: usual care/waiting list/placebo arms. Solid line: pharmacological treatment; dashed line: non-pharmacological treatment; dotted line: mixed/other.

The authors comment

"symptoms seem to improve in a similar pattern in clinical trials following a wide variety of active as well as inactive treatments.", and "The common pattern of responses could, for a large part, be explained by the natural history of NSLBP".

In other words, none of the treatments work.

This paper was brought to my attention through the blog run by the excellent physiotherapist, Neil O’Connell. He comments

"If this finding is supported by future studies it might suggest that we can’t even claim victory through the non-specific effects of our interventions such as care, attention and placebo. People enrolled in trials for back pain may improve whatever you do. This is probably explained by the fact that patients enrol in a trial when their pain is at its worst which raises the murky spectre of regression to the mean and the beautiful phenomenon of natural recovery."

O’Connell has discussed the matter in a recent paper, O’Connell (2015), from the point of view of manipulative therapies. That’s an area where there has been resistance to doing proper RCTs, with many people saying that it’s better to look at “real world” outcomes. This usually means that you look at how a patient changes after treatment. The hazards of this procedure are obvious from Artus et al., Fig 2, above. It maximises the risk of being deceived by regression to the mean. As O’Connell commented

"Within-patient change in outcome might tell us how much an individual’s condition improved, but it does not tell us how much of this improvement was due to treatment."

In order to eliminate this effect it’s essential to do a proper RCT with control and treatment groups tested in parallel. When that’s done the control group shows the same regression to the mean as the treatment group, and any additional response in the latter can confidently be attributed to the treatment. Anything short of that is whistling in the wind.
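Here is a rough sketch of why the parallel control group does the job. It assumes a deliberately simple model: each patient has a stable usual pain level, any single measurement is that level plus random day-to-day variation, and only patients with a high baseline score are enrolled. The function `run_trial` and all its numbers are hypothetical, chosen for illustration only.

```python
import random
from statistics import mean


def run_trial(rng, true_effect, n_screened=20000, threshold=7.0):
    """Enrol only patients whose baseline score is >= threshold, randomise
    them to two parallel arms, and return the mean change from baseline
    (follow-up minus baseline) in the control arm and in the treated arm."""
    control_change, treated_change = [], []
    for _ in range(n_screened):
        usual_level = rng.gauss(5.0, 1.0)             # patient's long-run pain level
        baseline = usual_level + rng.gauss(0.0, 1.5)  # a good day or a bad day
        if baseline < threshold:
            continue                                  # not bad enough to be enrolled
        follow_up = usual_level + rng.gauss(0.0, 1.5)
        if rng.random() < 0.5:                        # random allocation
            treated_change.append(follow_up - true_effect - baseline)
        else:
            control_change.append(follow_up - baseline)
    return mean(control_change), mean(treated_change)


rng = random.Random(2015)  # fixed seed so that the run is repeatable
for effect in (0.0, 1.0):
    control, treated = run_trial(rng, true_effect=effect)
    print(f"true effect {effect}: control change {control:.2f}, "
          f"treated change {treated:.2f}, difference {treated - control:.2f}")
```

With a true effect of zero, both arms still "improve" by a couple of points, which is pure regression to the mean. Only the difference between the arms estimates what the treatment did, and that is exactly the quantity that within-patient change cannot give you.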

Needless to say, the suboptimal methods are most popular in areas where real effectiveness is small or non-existent. This, sad to say, includes low back pain. It also includes just about every treatment that comes under the heading of alternative medicine. Although these problems have been understood for over a century, it remains true that

"It is difficult to get a man to understand something, when his salary depends upon his not understanding it."

Upton Sinclair (1935)

Responders and non-responders?

One excuse that’s commonly used when a treatment shows only a small effect in proper RCTs is to assert that the treatment actually has a good effect, but only in a subgroup of patients ("responders") while others don’t respond at all ("non-responders"). For example, this argument is often used in studies of anti-depressants and of manipulative therapies. And it’s universal in alternative medicine.

There’s a striking similarity between the narrative used by homeopaths and those who are struggling to treat depression. The pill may not work for many weeks. If the first sort of pill doesn’t work try another sort. You may get worse before you get better. One is reminded, inexorably, of Voltaire’s aphorism "The art of medicine consists in amusing the patient while nature cures the disease".

There is only a handful of cases in which a clear distinction can be made between responders and non-responders. Most often what’s observed is a smear of different responses to the same treatment, and the greater the variability, the greater is the chance of being deceived by regression to the mean.

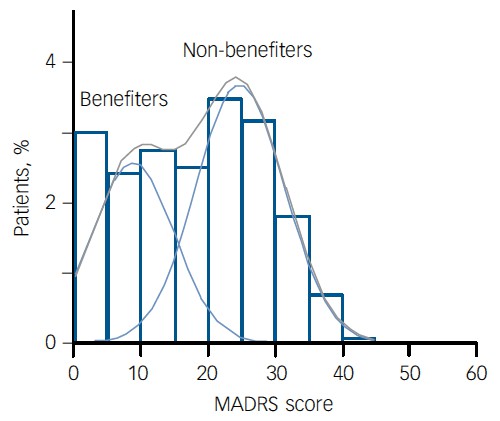

For example, Thase et al., (2011) looked at responses to escitalopram, an SSRI antidepressant. They attempted to divide patients into responders and non-responders. An example (Fig 1a in their paper) is shown.

The evidence for such a bimodal distribution is certainly very far from obvious. The observations are just smeared out. Nonetheless, the authors conclude

"Our findings indicate that what appears to be a modest effect in the grouped data – on the boundary of clinical significance, as suggested above – is actually a very large effect for a subset of patients who benefited more from escitalopram than from placebo treatment. "

I guess that interpretation could be right, but it seems more likely to be a marketing tool. Before you read the paper, check the authors’ conflicts of interest.

The bottom line is that analyses that divide patients into responders and non-responders are reliable only if that can be done before the trial starts. Retrospective analyses are unreliable and unconvincing.

Some more reading

Senn, 2011 provides an excellent introduction (and some interesting history). The subtitle is

"Here Stephen Senn examines one of Galton’s most important statistical legacies – one that is at once so trivial that it is blindingly obvious, and so deep that many scientists spend their whole career being fooled by it."

The examples in this paper are extended in Senn (2009), “Three things that every medical writer should know about statistics”. The three things are regression to the mean, the error of the transposed conditional and individual response.

You can read slightly more technical accounts of regression to the mean in McDonald & Mazzuca (1983) "How much of the placebo effect is statistical regression" (two quotations from this paper opened this post), and in Stephen Senn (2015) "Mastering variation: variance components and personalised medicine". In 1988 Senn published some corrections to the maths in McDonald (1983).

The trials that were used by Hróbjartsson & Gøtzsche (2010) to investigate the comparison between placebo and no treatment were looked at again by Howick et al., (2013), who found that in many of them the difference between treatment and placebo was also small. Most of the treatments did not work very well.

Regression to the mean is not just a medical deceiver: it’s everywhere

Although this post has concentrated on deception in medicine, it’s worth noting that the phenomenon of regression to the mean can cause wrong inferences in almost any area where you look at change from baseline. A classic example concerns the effectiveness of speed cameras. They tend to be installed after a spate of accidents, and if the accident rate is particularly high in one year it is likely to be lower the next year, regardless of whether a camera had been installed or not. To find the true reduction in accidents caused by installation of speed cameras, you would need to choose several similar sites and allocate them at random to have a camera or no camera. As in clinical trials, looking at the change from baseline can be very deceptive.
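The speed camera case is easy to mimic with random numbers. The sketch below assumes that every site has exactly the same underlying accident rate, that yearly counts are Poisson distributed, and that cameras do nothing at all. The rate, the number of sites and the trigger for "installing" a camera are all made up for the illustration.

```python
import math
import random
from statistics import mean


def poisson(rng, rate):
    """Knuth's method for a Poisson random number (fine for small rates)."""
    limit = math.exp(-rate)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def camera_illusion(rng, n_sites=5000, rate=5.0, trigger=9):
    """'Install' cameras at sites with at least `trigger` accidents in year 1.
    Year 2 counts are drawn from the same rate, so the cameras have no effect."""
    year1 = [poisson(rng, rate) for _ in range(n_sites)]
    year2 = [poisson(rng, rate) for _ in range(n_sites)]
    chosen = [i for i, count in enumerate(year1) if count >= trigger]
    before = mean(year1[i] for i in chosen)
    after = mean(year2[i] for i in chosen)
    return before, after


rng = random.Random(2015)  # fixed seed so that the run is repeatable
before, after = camera_illusion(rng)
print(f"accidents per site before cameras: {before:.1f}, after: {after:.1f}")
```

The chosen sites show a big fall in accidents in the second year, and in this model none of it is caused by the cameras. Random allocation of cameras among similar sites would show the same fall at the sites without them.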

Statistical postscript

Lastly, remember that if you avoid all of these hazards of interpretation, and your test of significance gives P = 0.047, that does not mean you have discovered something. There is still a risk of at least 30% that your ‘positive’ result is a false positive. This is explained in Colquhoun (2014), "An investigation of the false discovery rate and the misinterpretation of p-values". I’ve suggested that one way to solve this problem is to use different words to describe P values: something like this.

- P > 0.05 very weak evidence

- P = 0.05 weak evidence: worth another look

- P = 0.01 moderate evidence for a real effect

- P = 0.001 strong evidence for real effect

But notice that if your hypothesis is implausible, even these criteria are too weak. For example, if the treatment and placebo are identical (as would be the case if the treatment were a homeopathic pill) then it follows that 100% of positive tests are false positives.
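The dependence on plausibility can be sketched with a few lines of arithmetic. This is the simplest version of the calculation, which counts every test with P ≤ 0.05 as "positive". It is not claimed to reproduce the calculation for an observed P = 0.047 in Colquhoun (2014). The prior probabilities and powers used below are assumed values, chosen only for illustration.

```python
def false_positive_risk(prior: float, power: float, alpha: float = 0.05) -> float:
    """Fraction of 'significant' results (P <= alpha) that are false positives,
    given the prior probability that a real effect exists and the test's power."""
    false_positives = alpha * (1.0 - prior)  # no real effect, but test is positive
    true_positives = power * prior           # real effect, and test detects it
    return false_positives / (false_positives + true_positives)


# (prior, power) pairs are assumptions for illustration only.
for prior, power in [(0.5, 0.8), (0.1, 0.8), (0.1, 0.2), (0.0, 0.8)]:
    risk = false_positive_risk(prior, power)
    print(f"prior {prior}, power {power}: false positive risk {risk:.2f}")
```

When only 10% of the hypotheses tested are true, even a well-powered test gives 36% false positives among its "significant" results, and low power makes it worse. When the prior is zero, as for a homeopathic pill, every positive is a false positive.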

Follow-up

12 December 2015

It’s worth mentioning that the question of responders versus non-responders is closely related to the classical topic of bioassays that use quantal responses. In that field it was assumed that each participant had an individual effective dose (IED). That’s reasonable for the old-fashioned LD50 toxicity test: every animal will die after a sufficiently big dose. It’s less obviously right for ED50 (effective dose in 50% of individuals). The distribution of IEDs is critical, but it has very rarely been determined. The cumulative form of this distribution is what determines the shape of the dose-response curve for fraction of responders as a function of dose. Linearisation of this curve, by means of the probit transformation, used to be a staple of biological assay. This topic is discussed in Chapter 10 of Lectures on Biostatistics. And you can read some of the history on my blog about Some pharmacological history: an exam from 1959.
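For anyone who hasn’t met probits, here is a minimal sketch of the idea. It assumes that the logarithms of the IEDs are normally distributed, which is the classical assumption but is rarely checked. The ED50 and the spread are hypothetical values, and the function names are mine.

```python
from math import log10
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def fraction_responding(dose, ed50=10.0, sd_log10=0.3):
    """Fraction of individuals whose IED is at or below the given dose,
    assuming log10(IED) is normally distributed (hypothetical parameters)."""
    return NormalDist(mu=log10(ed50), sigma=sd_log10).cdf(log10(dose))


def probit(fraction):
    """Inverse of the standard normal cumulative distribution.
    (The historical convention added 5 to avoid negative values.)"""
    return STANDARD_NORMAL.inv_cdf(fraction)


for dose in (2.5, 5.0, 10.0, 20.0, 40.0):
    p = fraction_responding(dose)
    print(f"dose {dose:5.1f}  fraction responding {p:.3f}  probit {probit(p):+.2f}")
```

The fraction responding follows a sigmoid curve against log dose, but the probit is a straight line against log dose, with slope equal to the reciprocal of the standard deviation of the log IEDs. If the IEDs are not distributed like that, the line will not be straight.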

In the course of thinking about metrics, I keep coming across cases of over-promoted research. An early case was “Why honey isn’t a wonder cough cure: more academic spin”. More recently, I noticed these examples.

“Effect of Vitamin E and Memantine on Functional Decline in Alzheimer Disease” (spoiler: very little), published in the Journal of the American Medical Association

and “Primary Prevention of Cardiovascular Disease with a Mediterranean Diet”, in the New England Journal of Medicine (which had second highest altmetric score in 2013)

and "Sleep Drives Metabolite Clearance from the Adult Brain", published in Science.

In all these cases, misleading press releases were issued by the journals themselves and by the universities. These were copied out by hard-pressed journalists and made headlines that were certainly not merited by the work. In the last three cases, hyped up tweets came from the journals. The responsibility for this hype must eventually rest with the authors. The last two papers came second and fourth in the list of highest altmetric scores for 2013.

Here are two more very recent examples. It seems that every time I check a highly tweeted paper, it turns out that it is very second rate. Both papers involve fMRI imaging, and since the infamous dead salmon paper, I’ve been a bit sceptical about them. But that is irrelevant to what follows.

Boost your memory with electricity

That was a popular headline at the end of August. It referred to a paper in Science magazine:

“Targeted enhancement of cortical-hippocampal brain networks and associative memory” (Wang, JX et al, Science, 29 August, 2014)

This study was promoted by Northwestern University under the headline "Electric current to brain boosts memory". And Science tweeted along the same lines.

Science’s link did not lead to the paper, but rather to a puff piece, "Rebooting memory with magnets". Again all the emphasis was on memory, with the usual entirely speculative stuff about helping Alzheimer’s disease. But the paper itself was behind Science’s paywall. You couldn’t read it unless your employer subscribed to Science.

All the publicity led to much retweeting and a big altmetrics score. Given that the paper was not open access, it’s likely that most of the retweeters had not actually read the paper.

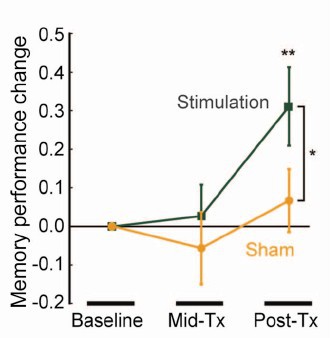

When you read the paper, you found that it is mostly not about memory at all. It was mostly about fMRI. In fact the only reference to memory was in a subsection of Figure 4. This is the evidence.

That looks desperately unconvincing to me. The test of significance gives P = 0.043. In an underpowered study like this, the chance of this being a false discovery is probably at least 50%. A result like this means, at most, "worth another look". It does not begin to justify all the hype that surrounded the paper. The journal, the university’s PR department, and ultimately the authors, must bear the responsibility for the unjustified claims.

Science does not allow online comments following the paper, but there are now plenty of sites that do. NHS Choices did a fairly good job of putting the paper into perspective, though they failed to notice the statistical weakness. A commenter on PubPeer noted that Science had recently announced that it would tighten statistical standards. In this case, they failed. The age of post-publication peer review is already reaching maturity.

Boost your memory with cocoa

Another glamour journal, Nature Neuroscience, hit the headlines on October 26, 2014, with a paper that was publicised in a Nature podcast and a rather uninformative press release.

"Enhancing dentate gyrus function with dietary flavanols improves cognition in older adults", Brickman et al., Nat Neurosci. 2014. doi: 10.1038/nn.3850.

The journal helpfully lists no fewer than 89 news items related to this study. Mostly they were something like “Drinking cocoa could improve your memory” (Kat Lay, in The Times). Only a handful of the 89 reports spotted the many problems.

A puff piece from Columbia University’s PR department quoted the senior author, Dr Small, making the dramatic claim that

“If a participant had the memory of a typical 60-year-old at the beginning of the study, after three months that person on average had the memory of a typical 30- or 40-year-old.”

Like anything to do with diet, the paper immediately got circulated on Twitter. No doubt most of the people who retweeted the message had not read the (paywalled) paper. The links almost all led to inaccurate press accounts, not to the paper itself.

But some people actually read the paywalled paper and post-publication review soon kicked in. Pubmed Commons is a good site for that, because Pubmed is where a lot of people go for references. Hilda Bastian kicked off the comments there (her comment was picked out by Retraction Watch). Her conclusion was this.

"It’s good to see claims about dietary supplements tested. However, the results here rely on a chain of yet-to-be-validated assumptions that are still weakly supported at each point. In my opinion, the immodest title of this paper is not supported by its contents."

(Hilda Bastian runs the Statistically Funny blog, “The comedic possibilities of clinical epidemiology are known to be limitless”, and also a Scientific American blog about risk, Absolutely Maybe.)

NHS Choices spotted most of the problems too, in "A mug of cocoa is not a cure for memory problems". And so did Ian Musgrave of the University of Adelaide who wrote "Most Disappointing Headline Ever (No, Chocolate Will Not Improve Your Memory)".

Here are some of the many problems.

- The paper was not about cocoa. Drinks containing 900 mg cocoa flavanols (as much as in about 25 chocolate bars) and 138 mg of (−)-epicatechin were compared with much lower amounts of these compounds.

- The abstract, all that most people could read, said that subjects were given "high or low cocoa–containing diet for 3 months". But it wasn’t a test of cocoa: it was a test of a dietary "supplement".

- The sample was small (37 people altogether, split between four groups), and therefore under-powered for detection of the small effect that was expected (and observed).

- The authors declared the result to be "significant" but you had to hunt through the paper to discover that this meant P = 0.04 (hint: it’s 6 lines above Table 1). That means that there is around a 50% chance that it’s a false discovery.

- The test was short: only three months.

- The test didn’t measure memory anyway. It measured reaction speed. They did test memory retention too, and there was no detectable improvement. This was not mentioned in the abstract. Neither was the fact that exercise had no detectable effect.

- The study was funded by the Mars bar company. They, like many others, are clearly looking for a niche in the huge "supplement" market.

The claims by the senior author, in a Columbia promotional video that the drink produced "an improvement in memory" and "an improvement in memory performance by two or three decades" seem to have a very thin basis indeed. As has the statement that "we don’t need a pharmaceutical agent" to ameliorate a natural process (aging). High doses of supplements are pharmaceutical agents.

To be fair, the senior author did say, in the Columbia press release, that "the findings need to be replicated in a larger study—which he and his team plan to do". But there is no hint of this in the paper itself, or in the title of the press release "Dietary Flavanols Reverse Age-Related Memory Decline". The time for all the publicity is surely after a well-powered study, not before it.

The high altmetrics score for this paper is yet another blow to the reputation of altmetrics.

One may well ask why Nature Neuroscience and the Columbia press office allowed such extravagant claims to be made on such a flimsy basis.

What’s going wrong?

These two papers have much in common. Elaborate imaging studies are accompanied by poor functional tests. All the hype focusses on the latter. These led me to the speculation (in PubMed Commons) that what actually happens is as follows.

- Authors do big imaging (fMRI) study.

- Glamour journal says coloured blobs are no longer enough and refuses to publish without functional information.

- Authors tag on a small human study.

- Paper gets published.

- Hyped up press releases issued that refer mostly to the add on.

- Journal and authors are happy.

- But science is not advanced.

It’s no wonder that Dorothy Bishop wrote "High-impact journals: where newsworthiness trumps methodology".

It’s time we forgot glamour journals. Publish open access on the web with open comments. Post-publication peer review is working.

But boycott commercial publishers who charge large amounts for open access. It shouldn’t cost more than about £200, and more and more are essentially free (my latest will appear shortly in Royal Society Open Science).

Follow-up

Hilda Bastian has an excellent post about the dangers of reading only the abstract "Science in the Abstract: Don’t Judge a Study by its Cover"

4 November 2014

I was upbraided on Twitter by Euan Adie, founder of Altmetric.com, because I didn’t click through the altmetric symbol to look at the citations "shouldn’t have to tell you to look at the underlying data David" and "you could have saved a lot of Google time". But when I did do that, all I found was a list of media reports and blogs -pretty much the same as Nature Neuroscience provides itself.

More interesting, I found that my blog wasn’t listed and neither was PubMed Commons. When I asked why, I was told "needs to regularly cite primary research. PubMed, PMC or repository links". But this paper is behind a paywall. So I provide (possibly illegally) a copy of it, so anyone can verify my comments. The result is that altmetric’s dumb algorithms ignore it. In order to get counted you have to provide links that lead nowhere.

So here’s a link to the abstract (only) in Pubmed for the Science paper http://www.ncbi.nlm.nih.gov/pubmed/25170153 and here’s the link for the Nature Neuroscience paper http://www.ncbi.nlm.nih.gov/pubmed/25344629

It seems that altmetrics doesn’t even do the job that it claims to do very efficiently.

It worked. By later in the day, this blog was listed in both Nature’s metrics section and by altmetric.com. But comments on PubMed Commons were still missing. That’s bad because it’s an excellent place for post-publication peer review.

One of my scientific heroes is Bernard Katz. The closing words of his inaugural lecture, as professor of biophysics at UCL, hang on the wall of my office as a salutary reminder to refrain from talking about ‘how the brain works’. After speaking about his discoveries about synaptic transmission, he ended thus.

"My time is up and very glad I am, because I have been leading myself right up to a domain on which I should not dare to trespass, not even in an Inaugural Lecture. This domain contains the awkward problems of mind and matter about which so much has been talked and so little can be said, and having told you of my pedestrian disposition, I hope you will give me leave to stop at this point and not to hazard any further guesses."

The question of what to eat for good health is truly a topic about "which so much has been talked and so little can be said".

That was emphasized yet again by an editorial in the British Medical Journal written by my favourite epidemiologist, John Ioannidis. He has been at the forefront of debunking hype. Its title is “Implausible results in human nutrition research” (BMJ, 2013;347:f6698. Get pdf).

The gist is given by the memorable statement

"Almost every single nutrient imaginable has peer reviewed publications associating it with almost any outcome."

and the subtitle

“Definitive solutions won’t come from another million observational papers or small randomized trials”.

Since I am a bit obsessive about causality, this paper is music to my ears. The problem of causality was understood perfectly by Samuel Johnson, in 1756, and he was a lexicographer, not a scientist. Yet it’s widely ignored by epidemiologists.

The problem of causality is often mentioned in the introduction to papers that describe survey data, yet by the end of the paper, it’s usually forgotten, and public health advice is issued.

Ioannidis’ editorial vindicates my own views, as an amateur epidemiologist, on the results of the endless surveys of diet and health.

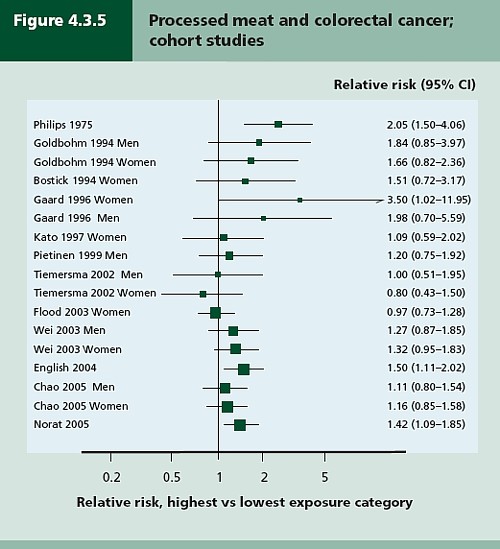

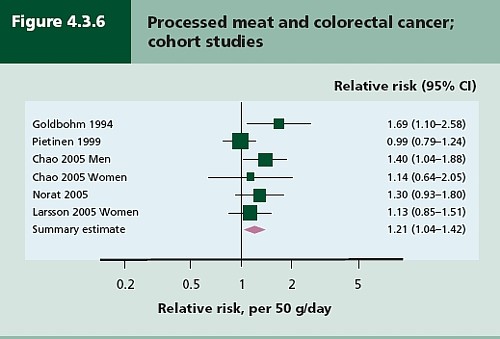

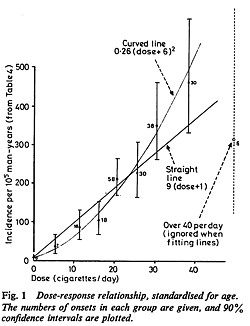

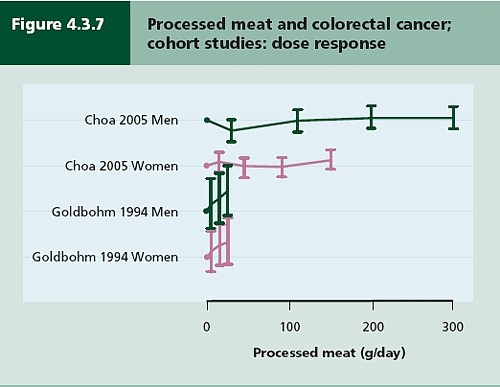

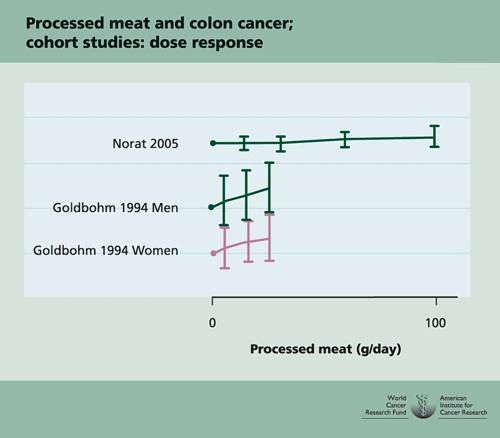

- Diet and health. What can you believe: or does bacon kill you (2009) in which I look at the World Cancer Research Fund’s evidence for causality (next to none in my opinion). Through this I got to know Gary Taubes, whose explanation of causality in the New York Times is the best popular account I’ve ever seen.

- How big is the risk from eating red meat now: an update (2012) This was based on the WCRF update – the risk was roughly halved though it didn’t say that in the press release.

- Another update. Red meat doesn’t kill you, but the spin is fascinating (2013). Update after the EPIC results in which the risk essentially vanished: good news which you could find only by digging into Table 3.

There is nothing new about the problem. It’s been written about many times. Young & Karr (Significance, 8, 116 – 120, 2011: get pdf) said "Any claim coming from an observational study is most likely to be wrong". Out of 52 claims made in 12 observational studies, not a single one was confirmed when tested by randomised controlled trials.

Another article cited by Ioannidis, "Myths, Presumptions, and Facts about Obesity" (Casazza et al., NEJM, 2013), debunks many myths, but the list of conflicts of interests declared by the authors is truly horrendous (and at least one of their conclusions has been challenged, albeit by people with funding from Kellogg’s). The frequent conflicts of interest in nutrition research make a bad situation even worse.

The quotation in bold type continues thus.

"On 25 October 2013, PubMed listed 291 papers with the keywords “coffee OR caffeine” and 741 with “soy,” many of which referred to associations. In this literature of epidemic proportions, how many results are correct? Many findings are entirely implausible. Relative risks that suggest we can halve the burden of cancer with just a couple of servings a day of a single nutrient still circulate widely in peer reviewed journals.

However, on the basis of dozens of randomized trials, single nutrients are unlikely to have relative risks less than 0.90 for major clinical outcomes when extreme tertiles of population intake are compared—most are greater than 0.95. For overall mortality, relative risks are typically greater than 0.995, if not entirely null. The respective absolute risk differences would be trivial. Observational studies and even randomized trials of single nutrients seem hopeless, with rare exceptions. Even minimal confounding or other biases create noise that exceeds any genuine effect. Big datasets just confer spurious precision status to noise."

And, later,

"According to the latest burden of disease study, 26% of deaths and 14% of disability adjusted life years in the United States are attributed to dietary risk factors, even without counting the impact of obesity. No other risk factor comes anywhere close to diet in these calculations (not even tobacco and physical inactivity). I suspect this is yet another implausible result. It builds on risk estimates from the same data of largely implausible nutritional studies discussed above. Moreover, socioeconomic factors are not considered at all, although they may be at the root of health problems. Poor diet may partly be a correlate or one of several paths through which social factors operate on health."

Another field that is notorious for producing false positives, with false attribution of causality, is the detection of biomarkers. A critical discussion can be found in the paper by Broadhurst & Kell (2006), "False discoveries in metabolomics and related experiments".

"Since the early days of transcriptome analysis (Golub et al., 1999), many workers have looked to detect different gene expression in cancerous versus normal tissues. Partly because of the expense of transcriptomics (and the inherent noise in such data (Schena, 2000; Tu et al., 2002; Cui and Churchill, 2003; Liang and Kelemen, 2006)), the numbers of samples and their replicates is often small while the number of candidate genes is typically in the thousands. Given the above, there is clearly a great danger that most of these will not in practice withstand scrutiny on deeper analysis (despite the ease with which one can create beautiful heat maps and any number of ‘just-so’ stories to explain the biological relevance of anything that is found in preliminary studies!). This turns out to be the case, and we review a recent analysis (Ein-Dor et al., 2006) of a variety of such studies."

The fields of metabolomics, proteomics and transcriptomics are plagued by statistical problems (as well as being saddled with ghastly pretentious names).

What’s to be done?

Barker Bausell, in his demolition of research on acupuncture, said:

[Page 39] “But why should nonscientists care one iota about something as esoteric as causal inference? I believe that the answer to this question is because the making of causal inferences is part of our job description as Homo Sapiens.”

The problem, of course, is that humans are very good at attributing causality when it does not exist. That has led to confusion between correlation and cause on an industrial scale, not least in attempts to work out the effects of diet on health.

More than in any other field it is hard to do the RCTs that could, in principle, sort out the problem. It’s hard to allocate people at random to different diets, and even harder to make people stick to those diets for the many years that are needed.

We can probably say by now that no individual food carries a large risk, or affords very much protection. The fact that we are looking for quite small effects means that even when RCTs are possible huge samples will be needed to get clear answers. Most RCTs are too short, and too small (under-powered) and that leads to overestimation of the size of effects.
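To give a feel for how huge "huge samples" are, here is a rough sketch using the standard normal-approximation formula for comparing two proportions. The baseline risk of 10%, the 5% two-sided significance level and the 80% power are assumptions chosen for illustration, and the function name is my own; the relative risk of 0.90 is the sort of size mentioned in the Ioannidis quotation above.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(baseline_risk: float, relative_risk: float,
                alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate number of people needed in EACH arm of an RCT
    to detect a given relative risk (normal approximation)."""
    p1 = baseline_risk                    # risk in the control arm
    p2 = baseline_risk * relative_risk    # risk in the treated arm
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p1 - p2) ** 2)


# Assumed 10% baseline risk of the outcome over the trial period.
for rr in (0.5, 0.8, 0.9, 0.95):
    print(f"relative risk {rr}: about {n_per_group(0.10, rr)} per group")
```

Under these assumptions a halving of risk needs a few hundred people per group, whereas a relative risk of 0.90 needs well over ten thousand per group, all of whom must stick to their allocated diet for the whole trial.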

That’s a problem that plagues experimental psychology too, and has led to a much-discussed crisis in reproducibility.

"Supplements" of one sort and another are ubiquitous in sports. Nobody knows whether they work, and the margin between winning and losing is so tiny that it’s very doubtful whether we ever will know. We can expect irresponsible claims to continue unabated.

The best thing that can be done in the short term is to stop doing large observational studies altogether. It’s now clear that inferences made from them are likely to be wrong. And, sad to say, we need to view with great skepticism anything that is funded by the food industry. And make a start on large RCTs whenever that is possible. Perhaps the hardest goal of all is to end the "publish or perish" culture which does so much to prevent the sort of long term experiments which would give the information we want.

Ioannidis’ article ends with the statement

"I am co-investigator in a randomized trial of a low carbohydrate versus low fat diet that is funded by the US National Institutes of Health and the non-profit Nutrition Science Initiative."

It seems he is putting his money where his mouth is.

Until we have the results, we shall continue to be bombarded with conflicting claims made by people who are doing their best with flawed methods, as well as by those trying to sell fad diets. Don’t believe them. The famous "5-a-day" advice that we are constantly bombarded with does no harm, but it has no sound basis.

As far as I can guess, the only sound advice about healthy eating for most people is

- don’t eat too much

- don’t eat all the same thing

You can’t make much money out of that advice.

No doubt that is why you don’t hear it very often.

Follow-up

Two relevant papers that show the unreliability of observational studies,

"Nearly 80,000 observational studies were published in the decade 1990–2000 (Naik 2012). In the following decade, the number of studies grew to more than 260,000". Madigan et al. (2014)

“. . . the majority of observational studies would declare statistical significance when no effect is present” Schuemie et al. (2012)

20 March 2014

On 20 March 2014, I gave a talk on this topic at the Cambridge Science Festival (more here). After the event my host, Yvonne Noblis, sent me some (doubtless cherry-picked) feedback she’d had about the talk.

I have, in the past, taken an occasional interest in the philosophy of science. But in a lifetime doing science, I have hardly ever heard a scientist mention the subject. It is, on the whole, a subject that is of interest only to philosophers.

It’s true that some philosophers have had interesting things to say about the nature of inductive inference, but during the 20th century the real advances in that area came from statisticians, not from philosophers. So I long since decided that it would be more profitable to spend my time trying to understand R.A. Fisher, rather than read even Karl Popper. It is harder work to do that, but it seemed the way to go.

This post is based on the last part of a chapter titled “In Praise of Randomisation. The importance of causality in medicine and its subversion by philosophers of science”. A talk was given at the meeting at the British Academy in December 2007, and the book will be launched on November 28th 2011 (good job it wasn’t essential for my CV with delays like that). The book is published by OUP for the British Academy, under the title Evidence, Inference and Enquiry (edited by Philip Dawid, William Twining, and Mimi Vasilaki, 504 pages, £85.00). The bulk of my contribution has already appeared here, in May 2009, under the heading Diet and health. What can you believe: or does bacon kill you?. It is one of the posts that has given me the most satisfaction, if only because Ben Goldacre seemed to like it, and he has done more than anyone to explain the critical importance of randomisation for assessing treatments and for assessing social interventions.

Having long since decided that it was Fisher, rather than philosophers, who had the answers to my questions, why bother to write about philosophers at all? It was precipitated by joining the London Evidence Group. Through that group I became aware that there is a group of philosophers of science who could, if anyone took any notice of them, do real harm to research. It seems surprising that the value of randomisation should still be disputed at this stage, and of course it is not disputed by anybody in the business. It was thoroughly established after the start of small sample statistics at the beginning of the 20th century. Fisher’s work on randomisation and the likelihood principle put inference on a firm footing by the mid-1930s. His popular book, The Design of Experiments, made the importance of randomisation clear to a wide audience, partly via his famous example of the lady tasting tea. The development of randomisation tests made it transparently clear (perhaps I should do a blog post on their beauty). By the 1950s, the message got through to medicine, in large part through Austin Bradford Hill.
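For readers who have not met a randomisation test, here is a minimal sketch of the idea. If the treatment did nothing, the labels "treated" and "control" are arbitrary, so we can reshuffle them many times and ask how often chance alone gives a difference at least as big as the one observed. The function name and the made-up numbers are mine, and the test samples random reallocations rather than enumerating all of them. The last lines do the arithmetic for the lady tasting tea: with 8 cups, 4 of each kind, there are 70 ways to pick 4 cups, so a perfect score by pure guessing has probability 1/70.

```python
import random
from math import comb
from statistics import fmean


def randomisation_test(treated, control, n_shuffles=10_000, seed=0):
    """Two-sided randomisation test for a difference between means.

    Returns (observed difference, estimated P value)."""
    rng = random.Random(seed)          # fixed seed so the run is repeatable
    observed = fmean(treated) - fmean(control)
    pooled = list(treated) + list(control)
    n_treated = len(treated)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)            # reallocate the labels at random
        diff = fmean(pooled[:n_treated]) - fmean(pooled[n_treated:])
        if abs(diff) >= abs(observed):
            at_least_as_extreme += 1
    return observed, at_least_as_extreme / n_shuffles


# Made-up observations, purely for illustration.
treated = [10, 11, 12, 13, 14]
control = [1, 2, 3, 4, 5]
difference, p_value = randomisation_test(treated, control)
print(f"observed difference = {difference}, P = {p_value:.4f}")

# Lady tasting tea: 8 cups, 4 with milk poured first.
p_all_correct_by_chance = 1 / comb(8, 4)
print(f"P(all 8 cups right by guessing) = {p_all_correct_by_chance:.4f}")
```

No assumption about the distribution of the observations is needed: the only thing that justifies the calculation is that the allocation to groups really was random.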

Despite this, there is a body of philosophers who dispute it. And of course it is disputed by almost all practitioners of alternative medicine (because their treatments usually fail the tests). Here are some examples.

“Why there’s no cause to randomise” is the rather surprising title of a report by Worrall (2004; see also Worrall, 2010), from the London School of Economics. The conclusion of this paper is

“don’t believe the bad press that ‘observational studies’ or ‘historically controlled trials’ get – so long as they are properly done (that is, serious thought has gone in to the possibility of alternative explanations of the outcome), then there is no reason to think of them as any less compelling than an RCT.”

In my view this conclusion is seriously, and dangerously, wrong – it ignores the enormous difficulty of getting evidence for causality in real life, and it ignores the fact that historically controlled trials have very often given misleading results in the past, as illustrated by the diet problem. Worrall’s fellow philosopher, Nancy Cartwright (Are RCTs the Gold Standard?, 2007), has made arguments that in some ways resemble those of Worrall.

Many words are spent on defining causality but, at least in the clinical setting, the meaning is perfectly simple. If the association between eating bacon and colorectal cancer is causal then if you stop eating bacon you’ll reduce the risk of cancer. If the relationship is not causal then if you stop eating bacon it won’t help at all. No amount of Worrall’s “serious thought” will substitute for the real evidence for causality that can come only from an RCT: Worrall seems to claim that sufficient brain power can fill in missing bits of information. It can’t. I’m reminded inexorably of the definition of “Clinical experience. Making the same mistakes with increasing confidence over an impressive number of years.” in Michael O’Donnell’s A Sceptic’s Medical Dictionary.
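The difference between an association and a cause can be illustrated by a toy simulation. In the sketch below the "treatment" has no effect whatsoever on health, but a hidden factor (called wealth here, purely as an assumption for the sake of the example) influences both who chooses the treatment and how healthy they are. All names and numbers are invented. When people choose for themselves, the treatment looks beneficial; when a coin is tossed, the spurious benefit disappears on average, though any single trial can still be off by chance.

```python
import random
from statistics import fmean


def one_study(n, randomised, rng):
    """Difference in mean health (treated minus control) in one study.
    The treatment has NO real effect on health."""
    treated, control = [], []
    for _ in range(n):
        wealth = rng.gauss(0, 1)                    # hidden confounder
        if randomised:
            takes_treatment = rng.random() < 0.5    # coin toss
        else:
            # Self-selection: wealthier people choose it more often.
            takes_treatment = wealth + rng.gauss(0, 1) > 0
        health = wealth + rng.gauss(0, 1)           # depends on wealth only
        (treated if takes_treatment else control).append(health)
    return fmean(treated) - fmean(control)


def average_difference(randomised, studies=200, n=200, seed=1):
    """Mean apparent 'effect' over many simulated studies."""
    rng = random.Random(seed)
    return fmean(one_study(n, randomised, rng) for _ in range(studies))


print("observational studies:", round(average_difference(False), 2))
print("randomised trials:    ", round(average_difference(True), 2))
```

In this made-up world no amount of thought about the observational data would reveal that the treatment is useless unless you already knew about, and had measured, the confounder. Random allocation deals with confounders that nobody has thought of.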

At the other philosophical extreme, there are still a few remnants of post-modernist rhetoric to be found in obscure corners of the literature. Two extreme examples are the papers by Holmes et al. and by Christine Barry. Apart from the fact that they weren’t spoofs, both of these papers bear a close resemblance to Alan Sokal’s famous spoof paper, Transgressing the boundaries: towards a transformative hermeneutics of quantum gravity (Sokal, 1996). The acceptance of this spoof by a journal, Social Text, and the subsequent book, Intellectual Impostures, by Sokal & Bricmont (Sokal & Bricmont, 1998), exposed the astonishing intellectual fraud of postmodernism (for those for whom it was not already obvious). A couple of quotations will serve to give a taste of the amazing material that can appear in peer-reviewed journals. Barry (2006) wrote

“I wish to problematise the call from within biomedicine for more evidence of alternative medicine’s effectiveness via the medium of the randomised clinical trial (RCT).”

“Ethnographic research in alternative medicine is coming to be used politically as a challenge to the hegemony of a scientific biomedical construction of evidence.”

“The science of biomedicine was perceived as old fashioned and rejected in favour of the quantum and chaos theories of modern physics.”

“In this paper, I have deconstructed the powerful notion of evidence within biomedicine, . . .”

The aim of this paper, in my view, is not to obtain some subtle insight into the process of inference but to try to give some credibility to snake-oil salesmen who peddle quack cures. The latter at least make their unjustified claims in plain English.

The similar paper by Holmes, Murray, Perron & Rail (Holmes et al., 2006) is even more bizarre.

“Objective The philosophical work of Deleuze and Guattari proves to be useful in showing how health sciences are colonised (territorialised) by an all-encompassing scientific research paradigm – that of post-positivism – but also and foremost in showing the process by which a dominant ideology comes to exclude alternative forms of knowledge, therefore acting as a fascist structure.”

It uses the word fascism, or some derivative thereof, 26 times. And Holmes, Perron & Rail (Murray et al., 2007) end a similar tirade with

“We shall continue to transgress the diktats of State Science.”

It may be asked why it is even worth spending time on these remnants of the utterly discredited postmodernist movement. One reason is that rather less extreme examples of similar thinking still exist in some philosophical circles.

Take, for example, the views expressed in papers such as Miles, Polychronis and Grey (2006), Miles & Loughlin (2006), Miles, Loughlin & Polychronis (Miles et al., 2007) and Loughlin (2007). These papers form part of the authors’ campaign against evidence-based medicine, which they seem to regard as some sort of ideological crusade, or government conspiracy. Bizarrely they seem to think that evidence-based medicine has something in common with the managerial culture that has been the bane of not only medicine but of almost every occupation (and which is noted particularly for its disregard for evidence). Although couched in the sort of pretentious language favoured by postmodernists, in fact it ends up defending the most simple-minded forms of quackery. Unlike Barry (2006), they don’t mention alternative medicine explicitly, but the agenda is clear from their attacks on Ben Goldacre. For example, Miles, Loughlin & Polychronis (Miles et al., 2007) say this.

“Loughlin identifies Goldacre [2006] as a particularly luminous example of a commentator who is able not only to combine audacity with outrage, but who in a very real way succeeds in manufacturing a sense of having been personally offended by the article in question. Such moralistic posturing acts as a defence mechanism to protect cherished assumptions from rational scrutiny and indeed to enable adherents to appropriate the ‘moral high ground’, as well as the language of ‘reason’ and ‘science’ as the exclusive property of their own favoured approaches. Loughlin brings out the Orwellian nature of this manoeuvre and identifies a significant implication.”

“If Goldacre and others really are engaged in posturing then their primary offence, at least according to the Sartrean perspective adopted by Murray et al. is not primarily intellectual, but rather it is moral. Far from there being a moral requirement to ‘bend a knee’ at the EBM altar, to do so is to violate one’s primary duty as an autonomous being.”

This ferocious attack seems to have been triggered because Goldacre has explained in simple words what constitutes evidence and what doesn’t. He has explained in a simple way how to do a proper randomised controlled trial of homeopathy. And he dismantled a fraudulent Qlink pendant, purported to shield you from electromagnetic radiation but which turned out to have no functional components (Goldacre, 2007). This is described as being “Orwellian”, a description that seems to me to be downright bizarre.

In fact, when faced with real-life examples of what happens when you ignore evidence, those who write theoretical papers that are critical about evidence-based medicine may behave perfectly sensibly. Andrew Miles edits a journal (Journal of Evaluation in Clinical Practice) that has been critical of EBM for years. Yet when faced with a course in alternative medicine run by people who can only be described as quacks, he rapidly shut down the course (a full account has appeared on this blog).

It is hard to decide whether the language used in these papers is Marxist or neoconservative libertarian. Whatever it is, it clearly isn’t science. It may seem odd that postmodernists (who believe nothing) end up as allies of quacks (who’ll believe anything). The relationship has been explained with customary clarity by Alan Sokal, in his essay Pseudoscience and Postmodernism: Antagonists or Fellow-Travelers? (Sokal, 2006).

Conclusions

Of course RCTs are not the only way to get knowledge. Often they have not been done, and sometimes it is hard to imagine how they could be done (though not nearly as often as some people would like to say).

It is true that RCTs tell you only about an average effect in a large population. But the same is true of observational epidemiology. That limitation is nothing to do with randomisation: it is a result of the crude and inadequate way in which diseases are classified (as discussed above). It is also true that randomisation doesn’t guarantee lack of bias in an individual case, but only in the long run. But it is the best that can be done. The fact remains that randomisation is the only way to be sure of causality, and making mistakes about causality can harm patients, as it did in the case of HRT.

Raymond Tallis (1999), in his review of Sokal & Bricmont, summed it up nicely

“Academics intending to continue as postmodern theorists in the interdisciplinary humanities after S & B should first read Intellectual Impostures and ask themselves whether adding to the quantity of confusion and untruth in the world is a good use of the gift of life or an ethical way to earn a living. After S & B, they may feel less comfortable with the glamorous life that can be forged in the wake of the founding charlatans of postmodern Theory. Alternatively, they might follow my friend Roger into estate agency — though they should check out in advance that they are up to the moral rigours of such a profession.”

The conclusions that I have drawn were obvious to people in the business half a century ago. Doll & Peto (1980) said

“If we are to recognize those important yet moderate real advances in therapy which can save thousands of lives, then we need more large randomised trials than at present, not fewer. Until we have them treatment of future patients will continue to be determined by unreliable evidence.”

The towering figures are R.A. Fisher and his followers, who developed the ideas of randomisation and maximum likelihood estimation. In the medical area, Bradford Hill, Archie Cochrane and Iain Chalmers had the important ideas worked out a long time ago.

In contrast, philosophers like Worrall, Cartwright, Holmes, Barry, Loughlin and Polychronis seem to me to make no contribution to the accumulation of useful knowledge, and in some cases to hinder it. It’s true that the harm they do is limited, but that is because they talk largely to each other. Very few working scientists are even aware of their existence. Perhaps that is just as well.

References

Cartwright N (2007). Are RCTs the Gold Standard? Biosocieties 2, 11-20.

Colquhoun, D (2010) University of Buckingham does the right thing. The Faculty of Integrated Medicine has been fired. https://www.dcscience.net/?p=2881

Miles A & Loughlin M (2006). Continuing the evidence-based health care debate in 2006. The progress and price of EBM. J Eval Clin Pract 12, 385-398.

Miles A, Loughlin M, & Polychronis A (2007). Medicine and evidence: knowledge and action in clinical practice. J Eval Clin Pract 13, 481-503.

Miles A, Polychronis A, & Grey JE (2006). The evidence-based health care debate – 2006. Where are we now? J Eval Clin Pract 12, 239-247.

Murray SJ, Holmes D, Perron A, & Rail G (2007).

Deconstructing the evidence-based discourse in health sciences: truth, power and fascism. Int J Evid Based Healthc 2006; 4: 180–186.

Sokal AD (1996). Transgressing the Boundaries: Towards a Transformative Hermeneutics of Quantum Gravity. Social Text 46/47, Science Wars, 217-252.

Sokal AD (2006). Pseudoscience and Postmodernism: Antagonists or Fellow-Travelers? In Archaeological Fantasies, ed. Fagan GG, Routledge, an imprint of Taylor & Francis Books Ltd.

Sokal AD & Bricmont J (1998). Intellectual Impostures, New edition, 2003, Economist Books ed. Profile Books.

Tallis R. (1999) Sokal and Bricmont: Is this the beginning of the end of the dark ages in the humanities?

Worrall J. (2004) Why There’s No Cause to Randomize. Causality: Metaphysics and Methods. Technical Report 24/04.

Worrall J (2010). Evidence: philosophy of science meets medicine. J Eval Clin Pract 16, 356-362.

Follow-up

Iain Chalmers has drawn my attention to some really interesting papers in the James Lind Library.

An account of early trials is given by Chalmers I, Dukan E, Podolsky S, Davey Smith G (2011). The adoption of unbiased treatment allocation schedules in clinical trials during the 19th and early 20th centuries. Fisher was not the first person to propose randomised trials, but he is the person who put it on a sound mathematical basis.

Another fascinating paper is Chalmers I (2010). Why the 1948 MRC trial of streptomycin used treatment allocation based on random numbers.

The distinguished statistician David Cox contributed Cox DR (2009). Randomization for concealment.

Incidentally, if anyone still thinks there are ethical objections to random allocation, they should read the account of the retrolental fibroplasia outbreak in the 1950s, Silverman WA (2003). Personal reflections on lessons learned from randomized trials involving newborn infants, 1951 to 1967.

Chalmers also pointed out that Antony Eagle of Exeter College Oxford had written about Goldacre’s epistemology. He describes himself as a “formal epistemologist”. I fear that his criticisms seem to me to be carping and trivial. Once again, a philosopher has failed to make a contribution to the progress of knowledge.

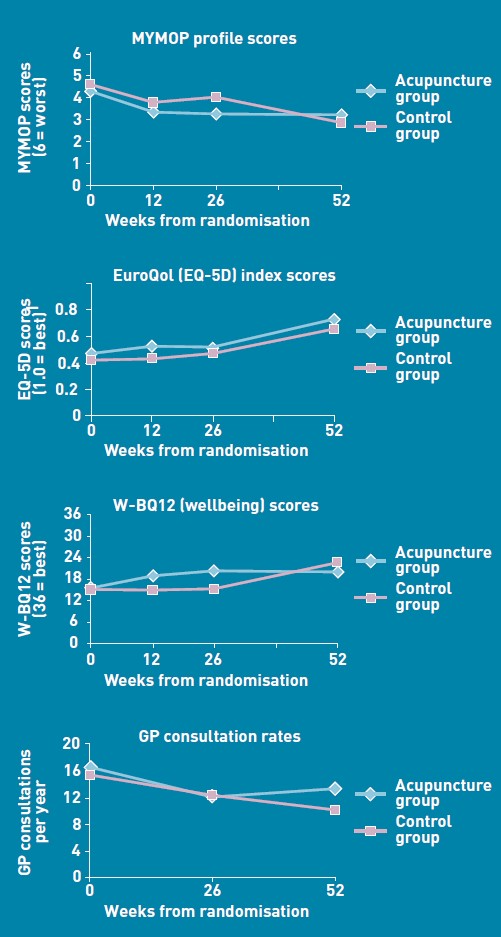

One wonders about the standards of peer review at the British Journal of General Practice. The June issue has a paper, "Acupuncture for ‘frequent attenders’ with medically unexplained symptoms: a randomised controlled trial (CACTUS study)". It has lots of numbers, but the result is very easy to see. Just look at their Figure.

There is no need to wade through all the statistics; it’s perfectly obvious at a glance that acupuncture has at best a tiny and erratic effect on any of the outcomes that were measured.

But this is not what the paper said. On the contrary, the conclusions of the paper said

Conclusion The addition of 12 sessions of five-element acupuncture to usual care resulted in improved health status and wellbeing that was sustained for 12 months.

How on earth did the authors manage to reach a conclusion like that?

The first thing to note is that many of the authors are people who make their living largely from sticking needles in people, or advocating alternative medicine. The authors are Charlotte Paterson, Rod S Taylor, Peter Griffiths, Nicky Britten, Sue Rugg, Jackie Bridges, Bruce McCallum and Gerad Kite, on behalf of the CACTUS study team. The senior author, Gerad Kite MAc, is principal of the London Institute of Five-Element Acupuncture. The first author, Charlotte Paterson, is a well known advocate of acupuncture, as is Nicky Britten.

The conflicts of interest are obvious, but nonetheless one should welcome a “randomised controlled trial” done by advocates of alternative medicine. In fact the results shown in the Figure are both interesting and useful. They show that acupuncture does not even produce any substantial placebo effect. It’s the authors’ conclusions that are bizarre and partisan. Peer review is indeed a broken process.

That’s really all that needs to be said, but for nerds, here are some more details.

How was the trial done?

The description "randomised" is fair enough, but there were no proper controls and the trial was not blinded. It was what has come to be called a "pragmatic" trial, which means a trial done without proper controls. They are, of course, much loved by alternative therapists because their therapies usually fail in proper trials. It’s much easier to get an (apparently) positive result if you omit the controls. But the fascinating thing about this study is that, despite the deficiencies in design, the result is essentially negative.

The authors themselves spell out the problems.

“Group allocation was known by trial researchers, practitioners, and patients”

So everybody (apart from the statistician) knew what treatment a patient was getting. This is an arrangement that is guaranteed to maximise bias and placebo effects.

"Patients were randomised on a 1:1 basis to receive 12 sessions of acupuncture starting immediately (acupuncture group) or starting in 6 months’ time (control group), with both groups continuing to receive usual care."

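But the control group started acupuncture at 6 months, so by 12 months both groups had been treated and no untreated comparison group remained. It is therefore impossible to compare acupuncture and control groups at 12 months, contrary to what’s stated in the Conclusions.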

"Twelve sessions, on average 60 minutes in length, were provided over a 6-month period at approximately weekly, then fortnightly and monthly intervals"

That sounds like a pretty expensive way of getting next to no effect.

"All aspects of treatment, including discussion and advice, were individualised as per normal five-element acupuncture practice. In this approach, the acupuncturist takes an in-depth account of the patient’s current symptoms and medical history, as well as general health and lifestyle issues. The patient’s condition is explained in terms of an imbalance in one of the five elements, which then causes an imbalance in the whole person. Based on this elemental diagnosis, appropriate points are used to rebalance this element and address not only the presenting conditions, but the person as a whole".

Does this mean that the patients were told a lot of mumbo jumbo about “five elements” (fire, earth, metal, water, wood)? If so, anyone with any sense would probably have run a mile from the trial.

"Hypotheses directed at the effect of the needling component of acupuncture consultations require sham-acupuncture controls which while appropriate for formulaic needling for single well-defined conditions, have been shown to be problematic when dealing with multiple or complex conditions, because they interfere with the participative patient–therapist interaction on which the individualised treatment plan is developed. 37–39 Pragmatic trials, on the other hand, are appropriate for testing hypotheses that are directed at the effect of the complex intervention as a whole, while providing no information about the relative effect of different components."

Put simply, that means: we don’t use sham acupuncture controls, so we can’t distinguish an effect of the needles from placebo effects or get-better-anyway effects.

"Strengths and limitations: The ‘black box’ study design precludes assigning the benefits of this complex intervention to any one component of the acupuncture consultations, such as the needling or the amount of time spent with a healthcare professional."

"This design was chosen because, without a promise of accessing the acupuncture treatment, major practical and ethical problems with recruitment and retention of participants were anticipated. This is because these patients have very poor self-reported health (Table 3), have not been helped by conventional treatment, and are particularly desperate for alternative treatment options."

It’s interesting that the patients were “desperate for alternative treatment”. Again it seems that every opportunity has been given to maximise non-specific placebo, and get-well-anyway effects.

There is a lot of statistical analysis and, unsurprisingly, many of the differences don’t reach statistical significance. Some do (just) but that is really quite irrelevant. Even if some of the differences are real (not a result of random variability), a glance at the figures shows that their size is trivial.
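For nerds who like to see the arithmetic, here is a minimal sketch of why "statistically significant" and "big enough to matter" are different questions. The numbers are entirely hypothetical (they are not taken from the paper), and the function `two_sample_z` is an illustrative helper that uses a simple normal approximation, not the analysis the authors used. It holds a small difference between two group means fixed and shows how the P value shrinks as the groups get bigger, while the size of the effect stays just as trivial.

```python
import math


def two_sample_z(mean_a, mean_b, sd, n_per_group):
    """Compare two equal-sized groups that share a common standard deviation.

    Returns (difference in means, standardised effect size, two-sided P value),
    using a normal approximation to the sampling distribution of the difference.
    """
    diff = mean_a - mean_b
    # Standard error of the difference between two independent means
    se = sd * math.sqrt(2.0 / n_per_group)
    z = diff / se
    # Two-sided tail probability of a standard normal: P(|Z| > |z|)
    p = math.erfc(abs(z) / math.sqrt(2.0))
    # Standardised effect size (difference in units of the standard deviation)
    d = diff / sd
    return diff, d, p


# Hypothetical scores, NOT data from the paper: a difference of 0.1 on a
# scale whose standard deviation is 1.0, with three different group sizes.
for n in (40, 400, 4000):
    diff, d, p = two_sample_z(mean_a=3.1, mean_b=3.0, sd=1.0, n_per_group=n)
    print(f"n per group = {n:5d}  difference = {diff:.2f}  "
          f"effect size = {d:.2f}  P = {p:.3g}")
```

The effect size is the same in every row. Only the P value changes with the number of patients, which is why a difference that scrapes past P = 0.05 says nothing about whether it is big enough to be useful to a patient.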

My conclusions

(1) This paper, though designed to be susceptible to almost every form of bias, shows staggeringly small effects. It is the best evidence I’ve ever seen that not only are needles ineffective, but that placebo effects, if they are there at all, are trivial in size and have no useful benefit to the patient in this case.

(2) The fact that this paper was published with conclusions that appear to contradict directly what the data show is as good an illustration as any I’ve seen that peer review is utterly ineffective as a method of guaranteeing quality. Of course the editor should have spotted this. It appears that quality control failed on all fronts.

Follow-up

In the first four days of this post, it got over 10,000 hits (almost 6,000 unique visitors).

Margaret McCartney has written about this too, in The British Journal of General Practice does acupuncture badly.

The Daily Mail exceeds itself in an article by Jenny Hope which says “Millions of patients with ‘unexplained symptoms’ could benefit from acupuncture on the NHS, it is claimed”. I presume she didn’t read the paper.

The Daily Telegraph scarcely did better in Acupuncture has significant impact on mystery illnesses. The author of this, very sensibly, remains anonymous.

Many “medical information” sites churn out the press release without engaging the brain, but most of the other newspapers appear, very sensibly, to have ignored the hyped-up press release. Among the worst was Pulse, an online magazine for GPs. At least they’ve published the comments that show their report was nonsense.

The Daily Mash has given this paper a well-deserved spoofing in Made-up medicine works on made-up illnesses.

“Professor Henry Brubaker, of the Institute for Studies, said: “To truly assess the efficacy of acupuncture a widespread double-blind test needs to be conducted over a series of years but to be honest it’s the equivalent of mapping the DNA of pixies or conducting a geological study of Narnia.” ”

There is no truth whatsoever in the rumour being spread on Twitter that I’m Professor Brubaker.

Euan Lawson, also known as Northern Doctor, has done another excellent job on the Paterson paper: BJGP and acupuncture – tabloid medical journalism. Most tellingly, he reproduces the press release from the editor of the BJGP, Professor Roger Jones DM, FRCP, FRCGP, FMedSci.

"Although there are countless reports of the benefits of acupuncture for a range of medical problems, there have been very few well-conducted, randomised controlled trials. Charlotte Paterson’s work considerably strengthens the evidence base for using acupuncture to help patients who are troubled by symptoms that we find difficult both to diagnose and to treat."

Oooh dear. The journal may have a new look, but it would be better if the editor read the papers before writing press releases. Tabloid journalism seems an appropriate description.

Andy Lewis, at Quackometer, has written about this paper too, and put it into historical context, in Of the Imagination, as a Cause and as a Cure of Disorders of the Body: “In 1800, John Haygarth warned doctors how we may succumb to belief in illusory cures. Some modern doctors have still not learnt that lesson”. It’s sad that, in 2011, a medical journal should fall into a trap that was pointed out so clearly in 1800. He also points out the disgracefully inaccurate press release issued by the Peninsula medical school.

Some tweets

Twitter info: 426 clicks on http://bit.ly/mgIQ6e alone at 15.30 on 1 June (and that’s only the hits via Twitter). By 8 July this had risen to 1,655 hits via Twitter, from 62 different countries.

@followthelemur Selina

MASSIVE peer review fail by the British Journal of General Practice http://bit.ly/mgIQ6e (via @david_colquhoun)

@david_colquhoun David Colquhoun

Appalling paper in Brit J Gen Practice: Acupuncturists show that acupuncture doesn’t work, but conclude the opposite http://bit.ly/mgIQ6e

Retweeted by gentley1300 and 36 others

@david_colquhoun David Colquhoun

I deny the Twitter rumour that I’m Professor Henry Brubaker as in Daily Mash http://bit.ly/mt1xhX (just because of http://bit.ly/mgIQ6e )

@brunopichler Bruno Pichler

http://tinyurl.com/3hmvan4 Made-up medicine works on made-up illnesses (me thinks Henry Brubaker is actually @david_colquhoun)

@david_colquhoun David Colquhoun

HEHE RT @brunopichler: http://tinyurl.com/3hmvan4 Made-up medicine works on made-up illnesses

@psweetman Pauline Sweetman

Read @david_colquhoun’s take on the recent ‘acupuncture effective for unexplained symptoms’ nonsense: bit.ly/mgIQ6e

@bodyinmind Body In Mind

RT @david_colquhoun: ‘Margaret McCartney (GP) also blogged acupuncture nonsense http://bit.ly/j6yP4j My take http://bit.ly/mgIQ6e’

@abritosa ABS

Br J Gen Practice mete a pata na poça: RT @david_colquhoun […] appalling acupuncture nonsense http://bit.ly/j6yP4j http://bit.ly/mgIQ6e

@jodiemadden Jodie Madden

amusing!RT @david_colquhoun: paper in Brit J Gen Practice shows that acupuncture doesn’t work,but conclude the opposite http://bit.ly/mgIQ6e

@kashfarooq Kash Farooq

Unbelievable: acupuncturists show that acupuncture doesn’t work, but conclude the opposite. http://j.mp/ilUALC by @david_colquhoun

@NeilOConnell Neil O’Connell

Gobsmacking spin RT @david_colquhoun: Acupuncturists show that acupuncture doesn’t work, but conclude the opposite http://bit.ly/mgIQ6e

@euan_lawson Euan Lawson (aka Northern Doctor)

Aye too right RT @david_colquhoun @iansample @BenGoldacre Guardian should cover dreadful acupuncture paper http://bit.ly/mgIQ6e

@noahWG Noah Gray

Acupuncturists show that acupuncture doesn’t work, but conclude the opposite, from @david_colquhoun: http://bit.ly/l9KHLv

8 June 2011. I drew the attention of the editor of BJGP to the many comments that have been made on this paper. He assured me that the matter would be discussed at a meeting of the editorial board of the journal. Tonight he sent me the result of this meeting.

Subject: BJGP

Dear Prof Colquhoun

We discussed your emails at yesterday’s meeting of the BJGP Editorial Board, attended by 12 Board members and the Deputy Editor

The Board was unanimous in its support for the integrity of the Journal’s peer review process for the Paterson et al paper – which was accepted after revisions were made in response to two separate rounds of comments from two reviewers and myself – and could find no reason either to retract the paper or to release the reviewers’ comments

Some Board members thought that the results were presented in an overly positive way; because the study raises questions about research methodology and the interpretation of data in pragmatic trials attempting to measure the effects of complex interventions, we will be commissioning a Debate and Analysis article on the topic. In the meantime we would encourage you to contribute to this debate through the usual Journal channels

Roger Jones

Professor Roger Jones MA DM FRCP FRCGP FMedSci FHEA FRSA

It is one thing to make a mistake. It is quite another thing to refuse to admit it. This reply seems to me to be quite disgraceful.

20 July 2011. The proper version of the story got wider publicity when Margaret McCartney wrote about it in the BMJ. The first rapid response to this article was a lengthy denial by the authors of the obvious conclusion to be drawn from the paper. They merely dig themselves deeper into a hole. The second response was much shorter (and more accurate).

Thank you Dr McCartney

Richard Watson, General Practitioner

The fact that none of the authors of the paper or the editor of BJGP have bothered to try and defend themselves speaks volumes. Like many people I glanced at the report before throwing it away with an incredulous guffaw. You bothered to look into it and refute it – in a real journal. That last comment shows part of the problem with them publishing, and promoting, such drivel. It makes you wonder whether anything they publish is any good, and that should be a worry for all GPs.

30 July 2011. The British Journal of General Practice has published nine letters that object to this study. Some of them concentrate on problems with the methods. Others point out what I believe to be the main point: there is essentially no effect there to be explained. In the public interest, I am posting the responses here [download pdf file]

There is also a response from the editor and from the authors. Both are unapologetic. It seems that the editor sees nothing wrong with the peer review process.

I don’t recall ever having come across such incompetence in a journal’s editorial process.

Here’s all he has to say.

"The BJGP Editorial Board considered this correspondence recently. The Board endorsed the Journal’s peer review process and did not consider that there was a case for retraction of the paper or for releasing the peer reviews. The Board did, however, think that the results of the study were highlighted by the Journal in an overly-positive manner. However, many of the criticisms published above are addressed by the authors themselves in the full paper."

If you subscribe to the views of Paterson et al, you may want to buy a T-shirt that has a revised version of the periodic table.

5 August 2011. A meeting with the editor of BJGP

Yesterday I met a member of the editorial board of BJGP. We agreed that the data are fine and should not be retracted. It’s the conclusions that should be retracted. I was also told that the referees’ reports were "bland". In the circumstances that merely confirmed my feeling that the referees failed to do a good job.

Today I met the editor, Roger Jones, himself. He was clearly upset by my comment and I have now changed it to refer to the whole editorial process rather than to him personally. I was told, much to my surprise, that the referees were not acupuncturists but “statisticians”. That I find baffling. It soon became clear that my differences with Professor Jones turned on interpretations of statistics.