regression to the mean

‘We know little about the effect of diet on health. That’s why so much is written about it’. That is the title of a post in which I advocate the view put by John Ioannidis that remarkably little is known about the health effects of individual nutrients. That ignorance has given rise to a vast industry selling advice that has little evidence to support it.

The 2016 Conference of the so-called "College of Medicine" had the title "Food, the Forgotten Medicine". This post gives some background information about some of the speakers at this event. I’m sorry it appears to be too ad hominem, but the only way to judge the meeting is via the track record of the speakers.

Quite a lot has been written here about the "College of Medicine". It is the direct successor of the Prince of Wales’ late, unlamented, Foundation for Integrated Health. But unlike the latter, its name disguises its promotion of quackery. Originally it was going to be called the “College of Integrated Health”, but that wasn’t sufficiently deceptive so the name was dropped.

For the history of the organisation, see

Don’t be deceived. The new “College of Medicine” is a fraud and delusion

The College of Medicine is in the pocket of Crapita Capita. Is Graeme Catto selling out?

The conference programme (download pdf) is a masterpiece of bait and switch. It is a mixture of very respectable people, and outright quacks. The former are invited to give legitimacy to the latter. The names may not be familiar to those who don’t follow the antics of the magic medicine community, so here is a bit of information about some of them.

The introduction to the meeting was by Michael Dixon and Catherine Zollman, both veterans of the Prince of Wales Foundation, and both devoted enthusiasts for magic medicine. Zollman even believes in the battiest of all forms of magic medicine, homeopathy (download pdf), for which she totally misrepresents the evidence. Zollman works now at the Penny Brohn centre in Bristol. She’s also linked to the "Portland Centre for integrative medicine" which is run by Elizabeth Thompson, another advocate of homeopathy. It came into being after NHS Bristol shut down the Bristol Homeopathic Hospital, on the very good grounds that it doesn’t work.

Now, like most magic medicine, it is privatised. The Penny Brohn shop will sell you a wide range of expensive and useless "supplements". For example, Biocare Antioxidant capsules at £37 for 90. Biocare make several unjustified claims for their benefits. Among other unnecessary ingredients, they contain a very small amount of green tea. That’s a favourite of "health food addicts", and it was the subject of a recent paper that contains one of the daftest statistical solecisms I’ve ever encountered:

"To protect against type II errors, no corrections were applied for multiple comparisons".

If you don’t understand that, try this paper.

The results are almost certainly false positives, despite the fact that it appeared in Lancet Neurology. It’s yet another example of broken peer review.
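To see why skipping the correction is so daft, here is a minimal sketch of the arithmetic. It assumes the tests are independent and that no real effects exist at all; the function names and the numbers of tests are made up for illustration and are not taken from the paper in question.

```python
def familywise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive among n_tests independent
    tests when every null hypothesis is true."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_threshold(n_tests: int, alpha: float = 0.05) -> float:
    """Per-test threshold that keeps the overall false positive rate near alpha."""
    return alpha / n_tests


# Hypothetical numbers of comparisons, chosen only for illustration
for n in (1, 5, 10, 20):
    print(n, round(familywise_error_rate(n), 3), bonferroni_threshold(n))
```

Under these assumptions the chance of at least one spurious "significant" result climbs quickly as comparisons are added, which is exactly the risk that an uncorrected analysis accepts.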

It’s been known for decades now that “antioxidant” is no more than a marketing term. There is no evidence of benefit and large doses can be harmful. This obviously doesn’t worry the College of Medicine.

Margaret Rayman was the next speaker. She’s a real nutritionist. Mixing the real with the crackpots is a standard bait and switch tactic.

Eleni Tsiompanou came next. She runs yet another private "wellness" clinic, which makes all the usual exaggerated claims. She seems to have an obsession with Hippocrates (hint: medicine has moved on since then). Dr Eleni’s Joy Biscuits may or may not taste good, but their health-giving properties are make-believe.

Andrew Weil, from the University of Arizona, gave the keynote address. He’s described as "one of the world’s leading authorities on Nutrition and Health". That description alone is sufficient to show the fantasy land in which the College of Medicine exists. He’s a typical supplement salesman, presumably very rich. There is no excuse for not knowing about him. It was 1988 when Arnold Relman (who was editor of the New England Journal of Medicine) wrote A Trip to Stonesville: Some Notes on Andrew Weil, M.D.

“Like so many of the other gurus of alternative medicine, Weil is not bothered by logical contradictions in his argument, or encumbered by a need to search for objective evidence.”

This blog has mentioned his more recent activities, many times.

Alex Richardson, of Oxford Food and Behaviour Research (a charity, not part of the university), is an enthusiast for omega-3, a favourite of the supplement industry. She has published several papers that show little evidence of effectiveness. That looks entirely honest. On the other hand, their News section contains many links to the notorious supplement industry lobby site, Nutraingredients, one of the least reliable sources of information on the web (I get their newsletter, a constant source of hilarity and raised eyebrows). I find this worrying for someone who claims to be evidence-based. I’m told that her charity is funded largely by the supplement industry (though I can’t find any mention of that on the web site).

Stephen Devries was a new name to me. You can infer what he’s like from the fact that he has been endorsed by Andrew Weil, and that his address is "Institute for Integrative Cardiology" ("Integrative" is the latest euphemism for quackery). Never trust any talk with a title that contains "The truth about". His was called "The scientific truth about fats and sugars". In a video, he claims that diet has been shown to reduce heart disease by 70%, which gives you a good idea of his ability to assess evidence. But the claim doubtless helps to sell his books.

Prof Tim Spector, of King’s College London, was next. As far as I know he’s a perfectly respectable scientist, albeit one with books to sell. But his talk is now online, and it was a bit like a born-again microbiome enthusiast. He seemed to be too impressed by the PREDIMED study, despite its statistical unsoundness, which was pointed out by Ioannidis. Little evidence was presented, though at least he was more sensible than the audience about the uselessness of multivitamin tablets.

Simon Mills talked on “Herbs and spices. Using Mother Nature’s pharmacy to maintain health and cure illness”. He’s a herbalist who has featured here many times. I can recommend especially his video about Hot and Cold herbs as a superb example of fantasy science.

Annie Anderson is Professor of Public Health Nutrition and Founder of the Scottish Cancer Prevention Network. She’s a respectable nutritionist and public health person, albeit with their customary disregard of problems of causality.

Patrick Holden is chair of the Sustainable Food Trust. He promotes "organic farming". Much though I dislike the cruelty of factory farms, the "organic" industry is largely a way of making food more expensive with no health benefits.

The Michael Pittilo 2016 Student Essay Prize was awarded after lunch. Pittilo has featured frequently on this blog as a result of his execrable promotion of quackery: see, in particular, A very bad report: gamma minus for the vice-chancellor.

Nutritional advice for patients with cancer. This discussion involved three people.

Professor Robert Thomas, Consultant Oncologist, Addenbrookes and Bedford Hospitals, Dr Clare Shaw, Consultant Dietitian, Royal Marsden Hospital and Dr Catherine Zollman, GP and Clinical Lead, Penny Brohn UK.

Robert Thomas came to my attention when I noticed that he, as a regular cancer consultant, had spoken at a meeting of the quack charity, “YestoLife”. Then I saw he was scheduled to speak at another quack conference. After I’d written to him to point out the track records of some of the people at the meeting, he withdrew from one of them. See The exploitation of cancer patients is wicked. Carrot juice for lunch, then die destitute. The influence seems to have been temporary though. He continues to lend respectability to many dodgy meetings. He edits the Cancernet web site. This site lends credence to bizarre treatments like homeopathy and crystal healing. It used to sell hair mineral analysis, a well-known phony diagnostic method, the main purpose of which is to sell you expensive “supplements”. They still sell the “Cancer Risk Nutritional Profile” for £295.00, despite the fact that it provides no proven benefits.

Robert Thomas designed a food "supplement", Pomi-T: capsules that contain Pomegranate, Green tea, Broccoli and Curcumin. Oddly, he seems still to subscribe to the antioxidant myth. Even the supplement industry admits that that’s a lost cause, but that doesn’t stop its use in marketing. The one randomised trial of these pills for prostate cancer was inconclusive. Prostate Cancer UK says "We would not encourage any man with prostate cancer to start taking Pomi-T food supplements on the basis of this research". Nevertheless it’s promoted on Cancernet.co.uk and widely sold. The Pomi-T site boasts about the (inconclusive) trial, but says "Pomi-T® is not a medicinal product".

There was a cookery demonstration by Dale Pinnock, "The medicinal chef". The programme does not tell us whether he made his signature dish "the Famous Flu Fighting Soup". Needless to say, there isn’t the slightest reason to believe that his soup has the slightest effect on flu.

In summary, the whole meeting was devoted to exaggerating vastly the effect of particular foods. It also acted as advertising for people with something to sell. Much of it was outright quackery, with a leavening of more respectable people, a standard part of the bait-and-switch methods used by all quacks in their attempts to make themselves sound respectable. I find it impossible to tell how much the participants actually believe what they say, and how much it’s a simple commercial drive.

The thing that really worries me is why someone like Phil Hammond supports this sort of thing by chairing their meetings (as he did for the "College of Medicine’s" direct predecessor, the Prince’s Foundation for Integrated Health). His defence of the NHS has made him something of a hero to me. He assured me that he’d asked people to stick to evidence. In that he clearly failed. I guess they must pay well.

Follow-up

“Statistical regression to the mean predicts that patients selected for abnormalcy will, on the average, tend to improve. We argue that most improvements attributed to the placebo effect are actually instances of statistical regression.”

“Thus, we urge caution in interpreting patient improvements as causal effects of our actions and should avoid the conceit of assuming that our personal presence has strong healing powers.”

In 1955, Henry Beecher published "The Powerful Placebo". I was in my second undergraduate year when it appeared. And for many decades after that I took it literally. He looked at 15 studies and found that an average of 35% of patients got "satisfactory relief" when given a placebo. This number got embedded in pharmacological folk-lore. He also mentioned that the relief provided by placebo was greatest in patients who were most ill.

Consider the common experiment in which a new treatment is compared with a placebo, in a double-blind randomised controlled trial (RCT). It’s common to call the responses measured in the placebo group the placebo response. But that is very misleading, and here’s why.

The responses seen in the group of patients that are treated with placebo arise from two quite different processes. One is the genuine psychosomatic placebo effect. This effect gives genuine (though small) benefit to the patient. The other contribution comes from the get-better-anyway effect. This is a statistical artefact and it provides no benefit whatsoever to patients. There is now increasing evidence that the latter effect is much bigger than the former.

How can you distinguish between real placebo effects and the get-better-anyway effect?

The only way to measure the size of genuine placebo effects is to compare in an RCT the effect of a dummy treatment with the effect of no treatment at all. Most trials don’t have a no-treatment arm, but enough do that estimates can be made. For example, a Cochrane review by Hróbjartsson & Gøtzsche (2010) looked at a wide variety of clinical conditions. Their conclusion was:

“We did not find that placebo interventions have important clinical effects in general. However, in certain settings placebo interventions can influence patient-reported outcomes, especially pain and nausea, though it is difficult to distinguish patient-reported effects of placebo from biased reporting.”

In some cases, the placebo effect is barely there at all. In a non-blind comparison of acupuncture and no acupuncture, the responses were essentially indistinguishable (despite what the authors and the journal said). See "Acupuncturists show that acupuncture doesn’t work, but conclude the opposite"

So the placebo effect, though a real phenomenon, seems to be quite small. In most cases it is so small that it would be barely perceptible to most patients. The main reason why so many people think that medicines work when they don’t is not the placebo response, but a statistical artefact.
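The logic of comparing a dummy treatment with no treatment can be written as simple bookkeeping. This is only a sketch: it assumes that the contributions simply add, and the symbols are labels introduced here for illustration (R for the get-better-anyway change, P for the genuine placebo effect, D for the specific effect of the treatment), not notation from the papers cited.

```latex
\begin{aligned}
\Delta_{\text{no treatment}} &= R \\
\Delta_{\text{placebo}} &= R + P \\
\Delta_{\text{treatment}} &= R + P + D \\
\Delta_{\text{placebo}} - \Delta_{\text{no treatment}} &= P
\end{aligned}
```

On this accounting, the change seen in a placebo arm on its own is R + P, so calling all of it "the placebo response" credits the placebo with R as well.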

Regression to the mean is a potent source of deception

The get-better-anyway effect has a technical name, regression to the mean. It has been understood since Francis Galton described it in 1886 (see Senn, 2011 for the history). It is a statistical phenomenon, and it can be treated mathematically (see references, below). But when you think about it, it’s simply common sense.

You tend to go for treatment when your condition is bad, and when you are at your worst, then a bit later you’re likely to be better. The great biologist, Peter Medawar, comments thus.

"If a person is (a) poorly, (b) receives treatment intended to make him better, and (c) gets better, then no power of reasoning known to medical science can convince him that it may not have been the treatment that restored his health"

(Medawar, P.B. (1969:19). The Art of the Soluble: Creativity and originality in science. Penguin Books: Harmondsworth).

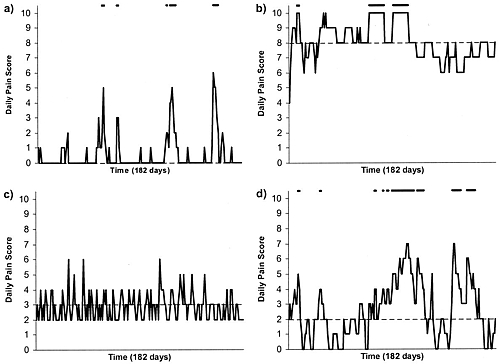

This is illustrated beautifully by measurements made by McGorry et al., (2000). Patients with low back pain recorded their pain (on a 10-point scale) every day for 5 months (they were allowed to take analgesics ad lib).

The results for four patients are shown in their Figure 2. On average they stay fairly constant over five months, but they fluctuate enormously, with different patterns for each patient. Painful episodes that last for 2 to 9 days are interspersed with periods of lower pain or none at all. It is very obvious that if these patients had gone for treatment at the peak of their pain, then a while later they would feel better, even if they were not actually treated. And if they had been treated, the treatment would have been declared a success, despite the fact that the patient derived no benefit whatsoever from it. This entirely artefactual benefit would be the biggest for the patients that fluctuate the most (e.g. those in panels a and d of the Figure).

Figure 2 from McGorry et al, 2000. Examples of daily pain scores over a 6-month period for four participants. Note: Dashes of different lengths at the top of a figure designate an episode and its duration.
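A toy simulation makes the same point. It is a sketch, not a model of the McGorry data: it assumes that each patient's daily score is an independent random draw around a constant mean (real pain comes in episodes lasting days), and the function names and parameter values are invented for illustration. Nobody is treated, yet pain measured two weeks after each patient's worst day is, on average, much lower than on that day.

```python
import random
import statistics


def simulate_patient(rng, n_days=150, mean_pain=4.0, sd=2.0):
    """Daily pain scores (0-10) fluctuating around a constant mean.

    There is no trend and no treatment: any 'improvement' is pure chance.
    """
    return [min(10.0, max(0.0, rng.gauss(mean_pain, sd))) for _ in range(n_days)]


def apparent_improvement(scores, followup_days=14):
    """Pain on the worst day minus pain followup_days later."""
    window = scores[:-followup_days]  # leave room for the follow-up day
    worst_day = max(range(len(window)), key=window.__getitem__)
    return scores[worst_day] - scores[worst_day + followup_days]


rng = random.Random(1)  # fixed seed so the run is repeatable
improvements = [apparent_improvement(simulate_patient(rng)) for _ in range(1000)]
print("mean apparent improvement:", round(statistics.mean(improvements), 2))
```

Any treatment given on the worst day would get the credit for this drop, which is the artefact described above.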

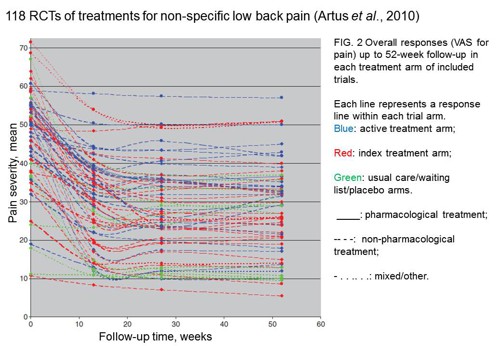

The effect is illustrated well by an analysis of 118 trials of treatments for non-specific low back pain (NSLBP), by Artus et al., (2010). The time course of pain (rated on a 100 point visual analogue pain scale) is shown in their Figure 2. There is a modest improvement in pain over a few weeks, but this happens regardless of what treatment is given, including no treatment whatsoever.

FIG. 2 Overall responses (VAS for pain) up to 52-week follow-up in each treatment arm of included trials. Each line represents a response line within each trial arm. Red: index treatment arm; Blue: active treatment arm; Green: usual care/waiting list/placebo arms. ____: pharmacological treatment; – – – -: non-pharmacological treatment; . . .. . .: mixed/other.

The authors comment

"symptoms seem to improve in a similar pattern in clinical trials following a wide variety of active as well as inactive treatments.", and "The common pattern of responses could, for a large part, be explained by the natural history of NSLBP".

In other words, none of the treatments work.

This paper was brought to my attention through the blog run by the excellent physiotherapist, Neil O’Connell. He comments

"If this finding is supported by future studies it might suggest that we can’t even claim victory through the non-specific effects of our interventions such as care, attention and placebo. People enrolled in trials for back pain may improve whatever you do. This is probably explained by the fact that patients enrol in a trial when their pain is at its worst which raises the murky spectre of regression to the mean and the beautiful phenomenon of natural recovery."

O’Connell has discussed the matter in a recent paper, O’Connell (2015), from the point of view of manipulative therapies. That’s an area where there has been resistance to doing proper RCTs, with many people saying that it’s better to look at “real world” outcomes. This usually means that you look at how a patient changes after treatment. The hazards of this procedure are obvious from Artus et al., Fig 2, above. It maximises the risk of being deceived by regression to the mean. As O’Connell commented

"Within-patient change in outcome might tell us how much an individual’s condition improved, but it does not tell us how much of this improvement was due to treatment."

In order to eliminate this effect it’s essential to do a proper RCT with control and treatment groups tested in parallel. When that’s done the control group shows the same regression to the mean as the treatment group, and any additional response in the latter can confidently be attributed to the treatment. Anything short of that is whistling in the wind.
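Here is a minimal sketch of why the parallel control group does the job. Everything in it is an assumption made for illustration: scores are a stable personal average plus day-to-day noise, patients are enrolled only if their score on the screening day is above a threshold, and `run_trial` and its parameters are hypothetical names.

```python
import random
import statistics


def run_trial(true_effect, n_screened=20000, threshold=7.0, seed=0):
    """Return (within-group change in treated arm, treated minus control change)."""
    rng = random.Random(seed)
    changes = {"treatment": [], "control": []}
    for _ in range(n_screened):
        usual = rng.gauss(5.0, 1.0)             # patient's long-run average score
        baseline = usual + rng.gauss(0.0, 1.5)  # score on the screening day
        if baseline < threshold:
            continue                            # only patients who are bad today enrol
        arm = rng.choice(["treatment", "control"])  # random allocation
        followup = usual + rng.gauss(0.0, 1.5)
        if arm == "treatment":
            followup -= true_effect
        changes[arm].append(baseline - followup)
    within = statistics.mean(changes["treatment"])
    between = within - statistics.mean(changes["control"])
    return within, between


within, between = run_trial(true_effect=0.0)  # a treatment that does nothing
print("change from baseline in treated group:", round(within, 2))
print("treated minus control:", round(between, 2))
```

With a useless treatment the treated group still shows a sizeable change from baseline, but the control group shows the same change, so the difference between the groups is close to zero. Setting `true_effect` to a non-zero value shifts the between-group difference by about that amount.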

Needless to say, the suboptimal methods are most popular in areas where real effectiveness is small or non-existent. This, sad to say, includes low back pain. It also includes just about every treatment that comes under the heading of alternative medicine. Although these problems have been understood for over a century, it remains true that

"It is difficult to get a man to understand something, when his salary depends upon his not understanding it."

Upton Sinclair (1935)

Responders and non-responders?

One excuse that’s commonly used when a treatment shows only a small effect in proper RCTs is to assert that the treatment actually has a good effect, but only in a subgroup of patients ("responders") while others don’t respond at all ("non-responders"). For example, this argument is often used in studies of anti-depressants and of manipulative therapies. And it’s universal in alternative medicine.

There’s a striking similarity between the narrative used by homeopaths and those who are struggling to treat depression. The pill may not work for many weeks. If the first sort of pill doesn’t work try another sort. You may get worse before you get better. One is reminded, inexorably, of Voltaire’s aphorism "The art of medicine consists in amusing the patient while nature cures the disease".

There is only a handful of cases in which a clear distinction can be made between responders and non-responders. Most often what’s observed is a smear of different responses to the same treatment, and the greater the variability, the greater is the chance of being deceived by regression to the mean.

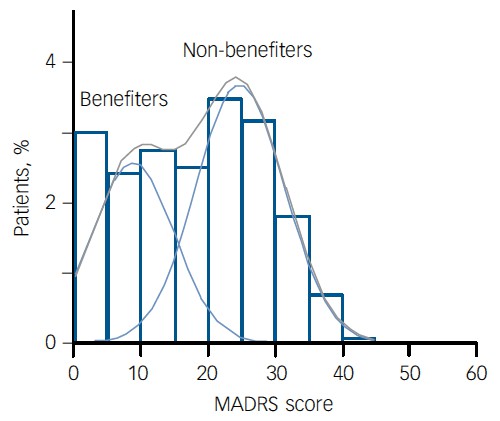

For example, Thase et al., (2011) looked at responses to escitalopram, an SSRI antidepressant. They attempted to divide patients into responders and non-responders. An example (Fig 1a in their paper) is shown.

The evidence for such a bimodal distribution is certainly very far from obvious. The observations are just smeared out. Nonetheless, the authors conclude

"Our findings indicate that what appears to be a modest effect in the grouped data – on the boundary of clinical significance, as suggested above – is actually a very large effect for a subset of patients who benefited more from escitalopram than from placebo treatment. "

I guess that interpretation could be right, but it seems more likely to be a marketing tool. Before you read the paper, check the authors’ conflicts of interest.

The bottom line is that analyses that divide patients into responders and non-responders are reliable only if the division can be made before the trial starts. Retrospective analyses are unreliable and unconvincing.
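A small sketch shows how easily "responders" can be manufactured after the event. It assumes, purely for illustration, that every patient in an arm gets exactly the same true benefit and that the observed change is that benefit plus random variation; the cutoff, the noise level and the name `responder_fraction` are all invented, and none of the numbers come from Thase et al.

```python
import random


def responder_fraction(true_benefit, noise_sd=6.0, cutoff=5.0, n=10000, seed=0):
    """Fraction of patients whose observed change reaches the cutoff.

    Every patient has the SAME true benefit; only the noise differs.
    """
    rng = random.Random(seed)
    observed = [true_benefit + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return sum(change >= cutoff for change in observed) / n


# Hypothetical values: placebo arm improves by 3 points (get-better-anyway),
# drug arm by 5 points, identically for every patient in each arm.
placebo = responder_fraction(true_benefit=3.0, seed=1)
drug = responder_fraction(true_benefit=5.0, seed=2)
print("'responders' on placebo:", placebo)
print("'responders' on drug:   ", drug)
```

Under these assumptions a sizeable minority of patients in each arm count as "responders", and more on the drug than on placebo, although by construction no patient responds any differently from any other. A retrospective split cannot distinguish this from a genuine subgroup.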

Some more reading

Senn, 2011 provides an excellent introduction (and some interesting history). The subtitle is

"Here Stephen Senn examines one of Galton’s most important statistical legacies – one that is at once so trivial that it is blindingly obvious, and so deep that many scientists spend their whole career being fooled by it."

The examples in this paper are extended in Senn (2009), “Three things that every medical writer should know about statistics”. The three things are regression to the mean, the error of the transposed conditional and individual response.

You can read slightly more technical accounts of regression to the mean in McDonald & Mazzuca (1983) "How much of the placebo effect is statistical regression" (two quotations from this paper opened this post), and in Stephen Senn (2015) "Mastering variation: variance components and personalised medicine". In 1988 Senn published some corrections to the maths in McDonald (1983).

The trials that were used by Hróbjartsson & Gøtzsche (2010) to investigate the comparison between placebo and no treatment were looked at again by Howick et al., (2013), who found that in many of them the difference between treatment and placebo was also small. Most of the treatments did not work very well.

Regression to the mean is not just a medical deceiver: it’s everywhere

Although this post has concentrated on deception in medicine, it’s worth noting that the phenomenon of regression to the mean can cause wrong inferences in almost any area where you look at change from baseline. A classical example concerns the effectiveness of speed cameras. They tend to be installed after a spate of accidents, and if the accident rate is particularly high in one year it is likely to be lower the next year, regardless of whether a camera had been installed or not. To find the true reduction in accidents caused by installation of speed cameras, you would need to choose several similar sites and allocate them at random to have a camera or no camera. As in clinical trials, looking at the change from baseline can be very deceptive.
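The speed camera case is easy to mimic. In this sketch every site has the same true accident rate, yearly counts are Poisson random numbers, and no camera is ever installed; the number of sites, the rate and the 10% selection rule are hypothetical values chosen only for illustration.

```python
import math
import random
import statistics


def poisson(rng, lam):
    """Draw a Poisson-distributed count using Knuth's multiplication method."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


rng = random.Random(42)
n_sites, rate = 2000, 5.0  # hypothetical: identical true rate at every site
year1 = [poisson(rng, rate) for _ in range(n_sites)]
year2 = [poisson(rng, rate) for _ in range(n_sites)]  # nothing changes between years

# Pick the 10% of sites with the most accidents in year 1, as if for cameras
worst = sorted(range(n_sites), key=lambda i: year1[i], reverse=True)[: n_sites // 10]
before = statistics.mean(year1[i] for i in worst)
after = statistics.mean(year2[i] for i in worst)
print("selected sites, year 1:", round(before, 2))
print("selected sites, year 2:", round(after, 2))
```

The selected sites look much safer in the second year although nothing whatsoever was done to them. A before-and-after comparison at camera sites would count that drop as a benefit of the cameras.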

Statistical postscript

Lastly, remember that if you avoid all of these hazards of interpretation, and your test of significance gives P = 0.047, that does not mean you have discovered something. There is still a risk of at least 30% that your ‘positive’ result is a false positive. This is explained in Colquhoun (2014), "An investigation of the false discovery rate and the misinterpretation of p-values". I’ve suggested that one way to solve this problem is to use different words to describe P values: something like this.

| P value | Description |
| --- | --- |
| P > 0.05 | very weak evidence |
| P = 0.05 | weak evidence: worth another look |
| P = 0.01 | moderate evidence for a real effect |
| P = 0.001 | strong evidence for real effect |

But notice that if your hypothesis is implausible, even these criteria are too weak. For example, if the treatment and placebo are identical (as would be the case if the treatment were a homeopathic pill) then it follows that 100% of positive tests are false positives.
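The dependence on plausibility can be sketched with the sort of calculation used for screening tests. Note the assumptions: this simple version counts every result with P at or below the threshold, so it is not the calculation for a result close to P = 0.047 and it does not reproduce the 30% figure quoted above; the power of 0.8 and the prior probabilities are hypothetical inputs, and the function name is made up.

```python
def false_positive_risk(prior, power=0.8, alpha=0.05):
    """Fraction of 'significant' results (P <= alpha) that are false positives.

    prior: assumed proportion of tested hypotheses in which a real effect exists.
    power: assumed probability of detecting a real effect when there is one.
    """
    false_positives = alpha * (1.0 - prior)
    true_positives = power * prior
    return false_positives / (false_positives + true_positives)


# Plausible, implausible, and impossible (treatment identical to placebo)
for prior in (0.5, 0.1, 0.0):
    print(prior, round(false_positive_risk(prior), 3))
```

When the prior is zero there are no true positives to find, so every positive result is a false one, which is the homeopathic case described above.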

Follow-up

12 December 2015

It’s worth mentioning that the question of responders versus non-responders is closely related to the classical topic of bioassays that use quantal responses. In that field it was assumed that each participant had an individual effective dose (IED). That’s reasonable for the old-fashioned LD50 toxicity test: every animal will die after a sufficiently big dose. It’s less obviously right for ED50 (effective dose in 50% of individuals). The distribution of IEDs is critical, but it has very rarely been determined. The cumulative form of this distribution is what determines the shape of the dose-response curve for fraction of responders as a function of dose. Linearisation of this curve, by means of the probit transformation, used to be a staple of biological assay. This topic is discussed in Chapter 10 of Lectures on Biostatistics. And you can read some of the history on my blog about Some pharmacological history: an exam from 1959.
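As a minimal sketch of that relationship, suppose (and this is an assumption, since the distribution has rarely been measured) that the logarithm of the IED is normally distributed across individuals. The median and spread used below are hypothetical. The fraction responding at a given dose is then the cumulative distribution evaluated at the log dose, and the probit (the inverse of the standard normal cumulative distribution) turns the sigmoid curve into a straight line against log dose.

```python
import math
from statistics import NormalDist

# Hypothetical assumption: log10(IED) ~ Normal(mean 1.0, sd 0.3),
# i.e. a median individual effective dose of 10 dose units.
log_ied = NormalDist(mu=1.0, sigma=0.3)
standard_normal = NormalDist()


def fraction_responding(dose):
    """Fraction of individuals whose IED is at or below this dose."""
    return log_ied.cdf(math.log10(dose))


def probit(p):
    """Inverse of the standard normal cumulative distribution."""
    return standard_normal.inv_cdf(p)


for dose in (3, 5, 10, 20, 30):
    p = fraction_responding(dose)
    print(dose, round(p, 3), round(probit(p), 3))
```

Under this assumption the probit of the fraction responding equals (log10 dose - 1.0) / 0.3, a straight line in log dose. If the IEDs followed some other distribution, the probit plot would not be straight.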