Research Funding

The Higher Education Funding Council for England (HEFCE) gives money to universities. The allocation that a university gets depends strongly on periodic assessments of the quality of its research. Enormous amounts of time, energy and money go into preparing submissions for these assessments, and the assessment procedure distorts the behaviour of universities in undesirable ways. In the last assessment, each principal investigator submitted four papers, and the papers were read.

In an effort to reduce the cost of the operation, HEFCE has been asked to reconsider the use of metrics to measure the performance of academics. The committee that is doing this job has asked for submissions from any interested person, by June 20th.

This post is a draft for my submission. I’m publishing it here for comments before producing a final version for submission.

Draft submission to HEFCE concerning the use of metrics.

I’ll consider a number of different metrics that have been proposed for the assessment of the quality of an academic’s work.

Impact factors

The first thing to note is that HEFCE is one of the original signatories of DORA (http://am.ascb.org/dora/). The first recommendation of that document is:

“Do not use journal-based metrics, such as Journal Impact Factors, as a surrogate measure of the quality of individual research articles, to assess an individual scientist’s contributions, or in hiring, promotion, or funding decisions.”

Impact factors have been found, time after time, to be utterly inadequate as a way of assessing individuals, e.g. [1], [2]. Even their inventor, Eugene Garfield, has said so. There should be no need to rehearse the details yet again. If HEFCE were to allow their use, it would have to withdraw from the DORA agreement, and I presume it would not wish to do this.

Article citations

Citation counting has several problems. Most of them apply equally to the H-index.

- Citations may be high because a paper is good and useful. They may equally be high because the paper is bad. No commercial supplier makes any distinction between these possibilities. It would not be in their commercial interests to spend time on that, but it’s critical for the person who is being judged. For example, Andrew Wakefield’s notorious 1998 paper, which gave a huge boost to the anti-vaccine movement, had had 758 citations by 2012 (it was subsequently shown to be fraudulent).

- Citations take far too long to appear to be a useful way to judge recent work, as is needed for judging grant applications or promotions. This is especially damaging to young researchers, and to people (particularly women) who have taken a career break. The counts also don’t take into account citation half-life. A paper that’s still being cited 20 years after it was written clearly had influence, but that takes 20 years to discover.

- The citation rate is very field-dependent. Very mathematical papers are much less likely to be cited, especially by biologists, than more qualitative papers. For example, the solution of the missed event problem in single ion channel analysis [3,4] was the sine qua non for all our subsequent experimental work, but the two papers have only about a tenth of the number of citations of subsequent work that depended on them.

- Most suppliers of citation statistics don’t count citations of books or book chapters. This is bad for me because my only work with over 1000 citations is my 105-page chapter on methods for the analysis of single ion channels [5], which contained quite a lot of original work. It has had 1273 citations according to Google Scholar but doesn’t appear at all in Scopus or Web of Science. Neither do the 954 citations of my statistics textbook [6].

- There are often big differences between the numbers of citations reported by different commercial suppliers. Even for papers (as opposed to book chapters) there can be a two-fold difference between the number of citations reported by Scopus, Web of Science and Google Scholar. The raw data are unreliable, and commercial suppliers of metrics are apparently not willing to put in the work to ensure that their products are consistent or complete.

- Citation counts can be (and already are being) manipulated. The easiest way to get a large number of citations is to do no original research at all, but to write reviews in popular areas. Another good way to have ‘impact’ is to write indecisive papers about nutritional epidemiology. That is not behaviour that should command respect.

- Some branches of science are already facing something of a crisis in reproducibility [7]. One reason for this is the perverse incentives which are imposed on scientists. These perverse incentives include the assessment of their work by crude numerical indices.

- “Gaming” of citations is easy. (If students do it, it’s called cheating; if academics do it, it’s called gaming.) If HEFCE makes money dependent on citations, then this sort of cheating is likely to take place on an industrial scale. Of course that should not happen, but it would (disguised, no doubt, by some ingenious bureaucratic euphemisms).

- For example, SCIgen is a program that generates spoof papers in computer science by stringing together plausible phrases. Over 100 such papers have been accepted for publication. By submitting many such papers, the authors managed to fool Google Scholar into awarding the fictitious author an H-index greater than that of Albert Einstein (http://en.wikipedia.org/wiki/SCIgen).

- The use of citation counts has already encouraged guest authorships and similar marginally honest behaviour. There is no way to tell whether an author on a paper has actually made any substantial contribution to the work, despite the fact that some journals ask for a statement about contribution.

- It has been known for 17 years that citation counts for individual papers are not detectably correlated with the impact factor of the journal in which the paper appears [1]. That doesn’t seem to have deterred metrics enthusiasts from using both. It should have done.

Given all these problems, it’s hard to see how citation counts could be useful to the REF, except perhaps in really extreme cases such as papers that get next to no citations over 5 or 10 years.

The H-index

This has all the disadvantages of citation counting, but in addition it is strongly biased against young scientists, and against women. This makes it not worth consideration by HEFCE.
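For readers unfamiliar with it, the h-index is the largest number h such that h of a person’s papers have each been cited at least h times. Because it can never exceed the number of papers someone has published, it is capped for anyone early in their career, however good their work. The minimal Python sketch below illustrates that; the function name `h_index` and both citation records are hypothetical, made up for illustration.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Sort citation counts from most to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            # Counts only fall from here on, while ranks rise, so stop.
            break
    return h


# Hypothetical records, for illustration only.
early_career = [120, 45, 30]                      # three papers, all well cited
established = [12, 11, 10, 10, 9, 9, 8, 8, 8, 7]  # ten modestly cited papers

print(h_index(early_career), sum(early_career))
print(h_index(established), sum(established))
```

In this made-up example the early-career record has more than twice as many citations in total (195 against 92), but its h-index is 3 against 8, because it is limited by the number of papers.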

Altmetrics

Given the role assigned to “impact” in the REF, the fact that altmetrics claim to measure impact might make them seem worthy of consideration at first sight. One problem is that the REF failed to make a clear distinction between impact on other scientists in the field and impact on the public.

Altmetrics measure an undefined mixture of both sorts of impact, with totally arbitrary weightings for tweets, Facebook mentions and so on. But the score seems to be related primarily to the trendiness of the title of the paper. Any paper about diet and health, however poor, is guaranteed to feature well on Twitter, as will any paper that has ‘penis’ in the title.

It’s very clear from the examples that I’ve looked at that few people who tweet about a paper have read more than the title. See Why you should ignore altmetrics and other bibliometric nightmares [8].

In most cases, papers were promoted by retweeting the press release or tweet from the journal itself. Only too often the press release is hyped-up. Metrics not only corrupt the behaviour of academics, but also the behaviour of journals. In the cases I’ve examined, reading the papers revealed that they were particularly poor (despite being in glamour journals): they just had trendy titles [8].

There could even be a negative correlation between the number of tweets and the quality of the work. Those who sell altmetrics have never examined this critical question because they ignore the contents of the papers. It would not be in their commercial interests to test their claims if the result was to show a negative correlation. Perhaps the reason why they have never tested their claims is the fear that to do so would reduce their income.

Furthermore, you can buy 1000 retweets for $8.00 (http://followers-and-likes.com/twitter/buy-twitter-retweets/). That’s outright cheating, of course, and not many people would go that far. But authors, and journals, can do a lot of self-promotion on Twitter that is totally unrelated to the quality of the work.

It’s worth noting that much good engagement with the public now appears on blogs that are written by scientists themselves, but the 3.6 million views of my blog do not feature in altmetrics scores, never mind Scopus or Web of Science. Altmetrics don’t even measure public engagement very well, never mind academic merit.

Evidence that metrics measure quality

Any metric would be acceptable only if it measured the quality of a person’s work. How could that proposition be tested? In order to judge this, one would have to take a random sample of papers, and look at their metrics 10 or 20 years after publication. The scores would have to be compared with the consensus view of experts in the field. Even then one would have to be careful about the choice of experts (in fields like alternative medicine for example, it would be important to exclude people whose living depended on believing in it). I don’t believe that proper tests have ever been done (and it isn’t in the interests of those who sell metrics to do it).
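The test described above amounts to asking whether a metric puts papers in the same order as expert judgement does. One way to quantify that, which is an assumption here rather than something the text specifies, is Spearman’s rank correlation between each paper’s metric score 10 or 20 years after publication and the experts’ consensus rating. The plain-Python sketch below computes it; the function names and all the numbers are invented for illustration, and only the method is of interest.

```python
from statistics import mean


def ranks(values):
    """Rank values from 1 upwards, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: the Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical data for a random sample of six papers, 20 years on.
citations_after_20y = [850, 40, 310, 12, 95, 600]
expert_rating = [2, 5, 4, 1, 3, 4]  # consensus score, 1 (poor) to 5 (excellent)

print(round(spearman(citations_after_20y, expert_rating), 2))
```

A value near +1 would mean the metric ranks papers much as the experts do; a value near 0, or negative, would mean it does not. A real test would, of course, need a much larger sample than this.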

The great mistake made by almost all bibliometricians is that they ignore what matters most, the contents of papers. They try to make inferences from correlations of metric scores with other, equally dubious, measures of merit. They can’t afford the time to do the right experiment if only because it would harm their own “productivity”.

The evidence that metrics do what’s claimed for them is almost non-existent. For example, in six of the ten years leading up to the 1991 Nobel prize, Bert Sakmann failed to meet the metrics-based publication target set by Imperial College London, and these failures included the years in which the original single channel paper was published [9] and also the year, 1985, when he published a paper [10] that was subsequently named as a classic in the field [11]. In two of these ten years he had no publications whatsoever. See also [12].

Applying metrics in the way it has been done at Imperial and at Queen Mary College London would result in the firing of the most original minds.

Gaming and the public perception of science

Every form of metric alters behaviour, in such a way that it becomes useless for its stated purpose. This is already well known in economics, where it’s known as Goodhart’s law (http://en.wikipedia.org/wiki/Goodhart’s_law): “When a measure becomes a target, it ceases to be a good measure.” That alone is a sufficient reason not to extend metrics to science. Metrics have already become one of several perverse incentives that control scientists’ behaviour. They have encouraged gaming, hype, guest authorships and, increasingly, outright fraud [13].

The general public has become aware of this behaviour, and it is starting to do serious harm to perceptions of all science. As long ago as 1999, Haerlin & Parr [14] wrote in Nature, under the title ‘How to restore public trust in science’:

“Scientists are no longer perceived exclusively as guardians of objective truth, but also as smart promoters of their own interests in a media-driven marketplace.”

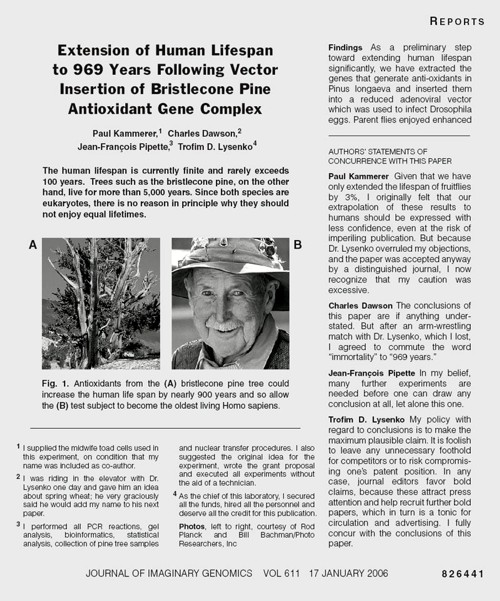

And on January 17, 2006, a vicious spoof on a Science paper appeared, not in a scientific journal, but in the New York Times. See https://www.dcscience.net/?p=156

The use of metrics would provide a direct incentive to this sort of behaviour. It would be a tragedy not only for people who are misjudged by crude numerical indices, but also a tragedy for the reputation of science as a whole.

Conclusion

There is no good evidence that any metric measures quality, at least over the short time span that’s needed for them to be useful for giving grants or deciding on promotions. On the other hand, there is good evidence that the use of metrics provides a strong incentive to bad behaviour, both by scientists and by journals. They have already started to damage the public perception of the honesty of science.

The conclusion is obvious. Metrics should not be used to judge academic performance.

What should be done?

If metrics aren’t used, how should assessment be done? Roderick Floud was president of Universities UK from 2001 to 2003. He is nothing if not an establishment person. He said recently:

“Each assessment costs somewhere between £20 million and £100 million, yet 75 per cent of the funding goes every time to the top 25 universities. Moreover, the share that each receives has hardly changed during the past 20 years.

It is an expensive charade. Far better to distribute all of the money through the research councils in a properly competitive system.”

The obvious danger of giving all the money to the Research Councils is that people might be fired solely because they didn’t have big enough grants. That’s serious: it has already happened at King’s College London, Queen Mary London and Imperial College. This problem might be ameliorated if there were a maximum on the size of grants and/or on the number of papers a person could publish, as I suggested at the open data debate. And it would help if universities appointed vice-chancellors with a better long-term view than most seem to have at the moment.

Aggregate metrics? It’s been suggested that the problems are smaller if one looks at aggregated metrics for a whole department, rather than the metrics for individual people. Clearly, looking at departments would average out anomalies. The snag is that it wouldn’t circumvent Goodhart’s law. If the money depended on the aggregate score, it would still put great pressure on universities to recruit people with high citations, regardless of the quality of their work, just as it would if individuals were being assessed. That would weigh against thoughtful people (and not least women).

The best solution would be to abolish the REF and give the money to research councils, with precautions to prevent people being fired because their research wasn’t expensive enough. If politicians insist that the "expensive charade" is to be repeated, then I see no option but to continue with a system that’s similar to the present one: that would waste money and distract us from our job.

1. Seglen PO (1997) Why the impact factor of journals should not be used for evaluating research. British Medical Journal 314: 498-502.

2. Colquhoun D (2003) Challenging the tyranny of impact factors. Nature 423: 479.

3. Hawkes AG, Jalali A, Colquhoun D (1990) The distributions of the apparent open times and shut times in a single channel record when brief events can not be detected. Philosophical Transactions of the Royal Society London A 332: 511-538.

4. Hawkes AG, Jalali A, Colquhoun D (1992) Asymptotic distributions of apparent open times and shut times in a single channel record allowing for the omission of brief events. Philosophical Transactions of the Royal Society London B 337: 383-404.

5. Colquhoun D, Sigworth FJ (1995) Fitting and statistical analysis of single-channel records. In: Sakmann B, Neher E, editors. Single Channel Recording. New York: Plenum Press. pp. 483-587.

6. David Colquhoun on Google Scholar. Available: http://scholar.google.co.uk/citations?user=JXQ2kXoAAAAJ&hl=en (accessed 17 June 2014).

7. Ioannidis JP (2005) Why most published research findings are false. PLoS Med 2: e124.

8. Colquhoun D, Plested AJ. Why you should ignore altmetrics and other bibliometric nightmares. Available: https://www.dcscience.net/?p=6369

9. Neher E, Sakmann B (1976) Single channel currents recorded from membrane of denervated frog muscle fibres. Nature 260: 799-802.

10. Colquhoun D, Sakmann B (1985) Fast events in single-channel currents activated by acetylcholine and its analogues at the frog muscle end-plate. J Physiol (Lond) 369: 501-557.

11. Colquhoun D (2007) What have we learned from single ion channels? J Physiol 581: 425-427.

12. Colquhoun D (2007) How to get good science. Physiology News 69: 12-14. See also https://www.dcscience.net/?p=182

13. Oransky I. Retraction Watch. Available: http://retractionwatch.com/ (accessed 18 June 2014).

14. Haerlin B, Parr D (1999) How to restore public trust in science. Nature 400: 499. doi:10.1038/22867.

Follow-up

Some other posts on this topic

Why Metrics Cannot Measure Research Quality: A Response to the HEFCE Consultation

Gaming Google Scholar Citations, Made Simple and Easy

Manipulating Google Scholar Citations and Google Scholar Metrics: simple, easy and tempting

Driving Altmetrics Performance Through Marketing

Death by Metrics (October 30, 2013)

Not everything that counts can be counted

Using metrics to assess research quality By David Spiegelhalter “I am strongly against the suggestion that peer–review can in any way be replaced by bibliometrics”

1 July 2014

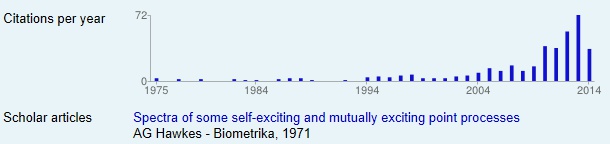

My brilliant statistical colleague, Alan Hawkes, laid the foundations for single molecule analysis (and made a career for me). But before he got into that, he wrote a paper, Spectra of some self-exciting and mutually exciting point processes (Biometrika 1971). In that paper he described a sort of stochastic process now known as a Hawkes process. In the simplest sort of stochastic process, the Poisson process, events are independent of each other. In a Hawkes process, the occurrence of an event affects the probability of another event occurring, so, for example, events may occur in clusters. Such processes were used for many years to describe the occurrence of earthquakes. More recently, it’s been noticed that such models are useful in finance, marketing, terrorism, burglary, social media, DNA analysis, and to describe invasive banana trees. The 1971 paper languished in relative obscurity for 30 years. Now the citation rate has shot through the roof.
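The difference between the two kinds of process shows up clearly in a short simulation. The sketch below assumes the exponential kernel that is commonly used for Hawkes processes: each event adds `alpha` to the rate of further events, and that extra rate then decays at rate `beta`. It is simulated by Ogata’s thinning method, a standard technique that postdates Hawkes’s paper. The function names and parameter values are hypothetical choices for illustration, and the Poisson process is given the same long-run average rate, mu / (1 - alpha/beta).

```python
import math
import random


def simulate_hawkes(mu, alpha, beta, t_max, rng):
    """Event times of a Hawkes process on [0, t_max], by Ogata's thinning method.

    Intensity: lambda(t) = mu + alpha * sum(exp(-beta * (t - t_i))) over past events t_i.
    Needs alpha < beta, so that each event triggers fewer than one extra event on average.
    """
    events = []
    t = 0.0
    excitation = 0.0  # the alpha * sum(...) term, evaluated at the current time t
    while True:
        # Between events the intensity can only decay, so its present value bounds it above.
        lam_bar = mu + excitation
        wait = rng.expovariate(lam_bar)
        t += wait
        if t > t_max:
            return events
        excitation *= math.exp(-beta * wait)  # decay the self-excitation to the candidate time
        if rng.random() * lam_bar <= mu + excitation:  # accept with prob. lambda(t) / lam_bar
            events.append(t)
            excitation += alpha  # each event raises the intensity by alpha


def simulate_poisson(rate, t_max, rng):
    """Homogeneous Poisson process: independent events at a constant rate."""
    events, t = [], 0.0
    while True:
        t += rng.expovariate(rate)
        if t > t_max:
            return events
        events.append(t)


def dispersion(events, t_max, width):
    """Variance-to-mean ratio of event counts in windows of the given width (1 for Poisson)."""
    n_bins = int(t_max // width)
    counts = [0] * n_bins
    for t in events:
        i = int(t // width)
        if i < n_bins:
            counts[i] += 1
    m = sum(counts) / n_bins
    var = sum((c - m) ** 2 for c in counts) / n_bins
    return var / m


rng = random.Random(1)
mu, alpha, beta, t_max = 0.5, 0.8, 1.0, 5000.0  # arbitrary illustrative parameters
hawkes = simulate_hawkes(mu, alpha, beta, t_max, rng)
poisson = simulate_poisson(mu / (1 - alpha / beta), t_max, rng)  # same long-run rate

print("events per unit time:", len(hawkes) / t_max, len(poisson) / t_max)
print("variance/mean of counts in windows of width 10:",
      dispersion(hawkes, t_max, 10.0), dispersion(poisson, t_max, 10.0))
```

With these parameters the two processes produce events at much the same average rate, but the Hawkes events arrive in bursts, so the variance-to-mean ratio of the counts comes out well above 1, whereas for the Poisson process it stays close to 1.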

The papers about Hawkes processes are mostly highly mathematical. They are not the sort of thing that features on Twitter. They are serious science, not just another ghastly epidemiological survey of diet and health. Anybody who cites papers of this sort is likely to be a real scientist. The surge in citations suggests to me that the 1971 paper was indeed an important bit of work (because the citations will be made by serious people). How does this affect my views about the use of citations? It shows that even highly mathematical work can achieve respectable citation rates, but it may take a long time before its importance is realised. If Hawkes had been judged by citation counting while he was applying for jobs and promotions, he’d probably have been fired. If his department had been judged by citations of this paper, it would not have scored well. It takes a long time to judge the importance of a paper, and that makes citation counting almost useless for decisions about funding and promotion.

The proposals made here are intended to improve postgraduate education with little harm to undergraduate education and no extra cost. They are not intended to get the government off the hook when it comes to funding of either teaching or research. The recent Royal Society report, The Scientific Century: securing our future prosperity, makes it very clear that research funding in the UK is already low.


There is a good summary of the financial case at Science is Vital. Even before the cuts, the UK invested only 1.8% of its GDP in R&D in 2007. This is short of the UK’s own target of 2.5%, and further behind the EU target of 3.8%. If you haven’t already, sign their petition.

The article reproduced here is the original 800-word version of proposals made already on this blog.

It was published today in The Times, in a 500-word version that was skilfully shortened by Times journalist Robbie Millen. It made the Thunderer column (page 22). It was written before I had seen the Browne report on university finance; comments on that will be added in the follow-up.

Honours degrees have had their day

Universities have problems. The competition for research money is already intense in the extreme, and many excellent research applications get turned down. Vice-chancellors want students to pay huge fees. A financial crisis looms. It is time to rethink the entire university system.

The traditional honours degree has had its day

The UK’s honours degree system is a relic left over from the time when a tiny fraction of the population went to university. The aim is now for half the population to get some sort of higher education, and the old system doesn’t work. It tries to get children from school to the level where they can start research in only three years. Even in its heyday it often failed to do that. Now teachers in vastly bigger third year classes try to teach quite advanced stuff to students most of whom have long since decided that they don’t want to do research. It’s just as well they decided that, because academia doesn’t have jobs for half the population.

The research funding system is strained to breaking point

Vince Cable’s cockup over the amount of money spent on mediocre science has long since been corrected. But despite the intense competition for research funds, anyone who listens to Radio 4’s Today Programme (I do), or reads the Daily Mail (I don’t), might get the impression that some pretty trivial research gets published. One reason for this is that science reporters always prefer the simple and trivial to basic research. But another reason is that the system puts enormous pressure on people to publish vast amounts. Quantity matters more than quality. The Research Assessment Exercise determines the funds that a university gets from government, and although it was started with the best of intentions, it has done more to reduce the quality of research than any other single change in the last 20 years.

Promotion in universities is dependent on publication, and so is university funding. Since 1992, when John Major’s government converted polytechnics into universities at a stroke of the pen, their staff too have been expected to publish to be promoted. We need a lot of teachers to cope with 50 percent of the population, but there just aren’t enough good researchers to go round. It is a truth universally acknowledged that advanced teaching should be done by people who are themselves doing research, but the numbers don’t add up. So what can be done?

Another way to organise higher education

The first essential is to abolish the honours degree (cue howls of outrage from the deeply conservative vice-chancellors). It is simply too specialist for an age of mass education. Rather, there should be more general first degrees. They should still, by and large, aim to produce critical thinking rather than being vocational, but cover a wider range of subjects to a lower level.

If this were done, the necessity to have the first degrees taught by active researchers would decrease. Many of them could be taught in ‘teaching only’ institutions. They could do it more cheaply too, if their staff were not under pressure to publish papers constantly. It would take fewer people and less space. It isn’t ideal, but I see no other way to increase the numbers in higher education without spending much more than we do now.

After the first degree, that modest fraction of students who had the ability and desire to get more specialist knowledge would go to graduate school. There they could be taught at a rather higher level than the present third year of an honours degree, and be prepared for research, if that is what they wanted to do.

Hang on, though: isn’t it the case that UK universities already have graduate schools? Yes, but they are largely offshoots of HR that provide courses in advanced PowerPoint and lifestyle psychobabble. Vast amounts of money have been wasted on the “Roberts Agenda”. What we need is real graduate schools that teach advanced stuff. Education, not training.

There is another problem. It is very hard now for anyone in research to find time to think about their subject. Most of their time is occupied writing grant applications (with a 15% chance of success), churning out trivial papers and teaching. If much of the lower level undergraduate teaching were to be done, more cheaply, in places that did little or no research, the saving would, with luck, fund the extra year for the minority who go on to graduate school. The research-intensive universities would do less undergraduate teaching. Their staff would have more time to do research and teach the graduate school. They would turn into something more like Institutes of Advanced Studies.

A lot of details would have to be worked out, and it isn’t ideal, just the least bad solution I can think of. It has not escaped my attention that this system has some resemblance to that in the USA. The USA does rather well in science. Perhaps we should try it.

What we should not copy is the high fees charged in the USA. Education is a public good, and the costs should be met by people paying according to their means. I think that is called income tax.

Follow-up

The Browne report is a retrogressive disaster

As I understand it, the recommendations not only remove the cap on fees but also make it more expensive for most people to repay loans. It is the most retrogressive thing that has happened in education in my lifetime. According to an analysis cited in the Guardian:

“Graduates earning between £35,000 and £60,000 a year are likely to have to pay back more in fees and interest than those earning more than £100,000”

That is far to the right of anything that Mrs Thatcher contemplated. If it were to be adopted, it would be a national disgrace.

The Lib Dems are our only hope to stop the recommendations being implemented. They must hold the line.

15 October 2010.

It is getting clearer now. Numbers from the Social Market Foundation (SMF) were quoted in the Financial Times as showing that the rich pay less than the poor for their degrees. At first the Institute for Fiscal Studies (IFS) seemed to disagree. Now the IFS has rethought its analysis, and there is little difference between the liberal (SMF) and conservative predictions. There is, unsurprisingly, some difference in the spin. The IFS says that you pay less only in the top two deciles of lifetime income, i.e. the top 20 percent. That’s not quite the point, though.

Both analyses agree that anyone above the median income pays back much the same (until it decreases for the top 20 percent). In other words, unless you earn less than around £22k, there is little or no progressive element whatsoever.
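Why can middle earners end up paying more than the highest earners? In a toy model the mechanism is interest: a high earner clears the loan within a few years and so accrues little interest, a middle earner repays for much longer and pays more interest in total, and a low earner never clears the debt and has the remainder written off. The sketch below assumes a constant real income, a fixed real interest rate and a 30-year write-off. The function name `total_repaid` and every default (a £30,000 debt, 2.2% interest, repayment of 9% of income above £21,000) are hypothetical values chosen for illustration, not figures taken from the Browne report or from the SMF or IFS analyses.

```python
def total_repaid(income, debt=30_000.0, rate=0.022, share=0.09,
                 threshold=21_000.0, years=30):
    """Toy model: total repaid on a student loan by someone with a constant real income.

    Each year interest is added, then `share` of income above `threshold` is repaid;
    whatever is left after `years` is written off. All defaults are hypothetical.
    """
    balance, paid = debt, 0.0
    for _ in range(years):
        balance *= 1 + rate
        payment = min(balance, share * max(0.0, income - threshold))
        balance -= payment
        paid += payment
        if balance <= 0:
            break
    return paid


for income in (20_000, 30_000, 45_000, 60_000, 100_000, 150_000):
    print(f"£{income:>7,}: repays £{total_repaid(income):>8,.0f}")
```

With these assumptions, total repayments rise with income up to a middle band and then fall again for the highest earners, which is the qualitative pattern described above; the actual numbers depend entirely on the assumed parameters.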

It is an ill thought-out disaster.

This classic was published in issue 1692 of New Scientist magazine, 25 November 1989. Frank Watt was head of the Scanning Proton Microprobe Unit in the Department of Physics at Oxford University. He wrote for the New Scientist an article on Microscopes with proton power.

In the light of yesterday’s fuss about research funding, Modest revolt to save research from red tape, this seemed like a good time to revive Watt’s article. It was written near the end of the reign of Margaret Thatcher, about a year before she was deposed by her own party. It shows how little has changed.

It is now the fashion for grant-awarding agencies to ask what percentage of your time will be spent on the project. I recently reviewed a grant in which the applicants had specified this to four significant figures. They’d pretended that, a year before starting the work, they could say how much time they’d spend with an accuracy better than three hours. Stupid questions evoke stupid answers.

The article gave rise to some political follow-up in New Scientist, here and here.

Playing the game – The art of getting money for research

‘Well, that’s it,’ thought the Captain. ‘We have the best ship in the world, the most experienced crew, and navigators par excellence. All we need now is a couple of tons of ship’s biscuits and it’s off to the ends of the world.’

NRC (Nautical Research Council) grant application no MOD2154, September 1761. Applicant: J. Cook. Aim: To explore the world. Requirements: Supplies for a three-year voyage. Total cost: Pounds sterling 21 7s 6d.

January 1762: Application MOD2154 rejected by the Tall Ships subcommittee. Reasons for rejection:

‘Damn,’ thought the Captain, ‘that’s nailed our vitals to the plank good and

NRC grant application no MOD2279, April 1762. Applicant: J. Cook. Aim: To explore the world beyond Africa in a clockwise direction and discover a large continent positioned between New Guinea and the South Pole. Requirements: Supplies for a three-year voyage. Total cost: Pounds sterling 21 7s 7d (adjusted for inflation).

September 1762: Application MOD2279 rejected by the Castle and Moats subcommittee. Reasons for rejection: (a) Why discover a large continent when there are hundreds of castles in Britain? (b) It is highly unlikely that Cook’s ship will fit into the average moat.

‘Damn,’ thought the Captain, ‘shiver me timbers, the application has gone to the wrong committee.’ ‘Come back in six months,’ he told his sturdy crew.

Dear NRC, Why did our application MOD2279 go to the Castle and Moats subcommittee instead of the Tall Ships committee? Yours sincerely, James Cook.

Dear Mr Cock, The Tall Ships subcommittee has been rationalised, and has been replaced by the Castle and Moats subcommittee and the Law and Order subcommittee. Yours sincerely, NRC.

‘Damn,’ thought the Captain, ‘rummage me topsail, we’ll never get off the ground like this. I had better read up on this new chaos theory.’ In fact the Captain did not do this, but instead took advice from an old sea dog who had just been awarded a grant of Pounds sterling 2 million from the emergency drawbridge fund to research the theory of gravity.

NRC Application no MOD2391, January 1763 (to be considered by the Law and Order subcommittee). Applicant: J. Cook. Aim: Feasibility study for the transportation of rascals, rogues and vagabonds to a remote continent on the other side of the world. Initial pilot studies involve a three-year return journey to a yet undiscovered land mass called Australia. Requirements: Supplies for a three-year voyage. Total cost: Pounds sterling 21 7s 8d (again adjusted for inflation).

Dear Mr Coko, We are pleased to inform you that your application MOD2391 has been successful. Unfortunately, due to the financial crisis at the moment, the subcommittee has recommended that funding for your three-year round trip to Australia be cut to 18 months. Yours sincerely, NRC.

Follow-up

A letter to Mr Darwin

A correspondent draws my attention to another lovely piece, from EMBO Reports (Vol 10, 2009), by Frank Gannon.

Dear Dr Darwin

“. . . In a further comment, referee three decries your descriptive approach, which leaves the task of explaining the ‘how’ to others. His/her view is that any publications resulting from your work will inevitably be acceptable only to low-impact specialist journals; even worse, they might be publishable only as a monograph. As our agency is judged by the quality of the work that we support – measured by the average impact factor of the papers that result from our funding – this is a strongly negative comment.”

Rather sadly, this excellent editorial had to be accompanied by a pusillanimous disclaimer:

This Editorial represents the personal views of Frank Gannon and not those of Science Foundation Ireland or the European Molecular Biology Organization.