HEFCE

This is a very quick synopsis of the 500 pages of a report on the use of metrics in the assessment of research. It’s by far the most thorough bit of work I’ve seen on the topic. It was written by a group, chaired by James Wilsdon, to investigate the possible role of metrics in the assessment of research.

The report starts with a bang. The foreword says

"Too often, poorly designed evaluation criteria are “dominating minds, distorting behaviour and determining careers.” At their worst, metrics can contribute to what Rowan Williams, the former Archbishop of Canterbury, calls a “new barbarity” in our universities."

"The tragic case of Stefan Grimm, whose suicide in September 2014 led Imperial College to launch a review of its use of performance metrics, is a jolting reminder that what’s at stake in these debates is more than just the design of effective management systems."

"Metrics hold real power: they are constitutive of values, identities and livelihoods"

And the conclusions (page 12 and Chapter 9.5) are clear that metrics alone can measure neither the quality of research, nor its impact.

"no set of numbers, however broad, is likely to be able to capture the multifaceted and nuanced judgements on the quality of research outputs that the REF process currently provides"

"Similarly, for the impact component of the REF, it is not currently feasible to use quantitative indicators in place of narrative impact case studies, or the impact template"

These conclusions are justified in great detail in 179 pages of the main report, 200 pages of the literature review, and 87 pages of Correlation analysis of REF2014 scores and metrics.

The correlation analysis shows clearly that, contrary to some earlier reports, all of the many metrics that are considered predict the outcome of the 2014 REF far too poorly to be used as a substitute for reading the papers.

There is the inevitable bit of talk about the "judicious" use of metrics to support peer review (with no guidance about what judicious use means in real life) but this doesn’t detract much from an excellent and thorough job.

Needless to say, I like these conclusions since they are quite similar to those recommended in my submission to the report committee, over a year ago.

Of course peer review is itself fallible. Every year about 8 million researchers publish 2.5 million articles in 28,000 peer-reviewed English language journals (STM report 2015 and graphic, here). It’s pretty obvious that there are not nearly enough people to review carefully such vast outputs. That’s why I’ve said that any paper, however bad, can now be printed in a journal that claims to be peer-reviewed. Nonetheless, nobody has come up with a better system, so we are stuck with it.

It’s certainly possible to judge that some papers are bad. It’s possible, if you have enough expertise, to guess whether or not the conclusions are justified. But no method exists that can judge what the importance of a paper will be in 10 or 20 years’ time. I’d like to have seen a frank admission of that.

If the purpose of research assessment is to single out papers that will be considered important in the future, that job is essentially impossible. From that point of view, the cost of research assessment could be reduced to zero by trusting people to appoint the best people they can find, and just give the same amount of money to each of them. I’m willing to bet that the outcome would be little different. Departments have every incentive to pick good people, and scientists’ vanity is quite sufficient motive for them to do their best.

Such a radical proposal wasn’t even considered in the report, which is a pity. Perhaps they were just being realistic about what’s possible in the present climate of managerialism.

Other recommendations include

"HEIs should consider signing up to the San Francisco Declaration on Research Assessment (DORA)"

4. "Journal-level metrics, such as the Journal Impact Factor (JIF), should not be used."

It’s astonishing that it should be still necessary to deplore the JIF almost 20 years after it was totally discredited. Yet it still mesmerizes many scientists. I guess that shows just how stupid scientists can be outside their own specialist fields.

DORA has over 570 organisational and 12,300 individual signatories, BUT only three universities in the UK have signed (Sussex, UCL and Manchester). That’s a shocking indictment of the way (all the other) universities are run.

One of the signatories of DORA is the Royal Society.

"The RS makes limited use of research metrics in its work. In its publishing activities, ever since it signed DORA, the RS has removed the JIF from its journal home pages and marketing materials, and no longer uses them as part of its publishing strategy. As authors still frequently ask about JIFs, however, the RS does provide them, but only as one of a number of metrics".

That’s a start. I’ve advocated making it a condition of getting any grant or fellowship that the university should have signed up to DORA and Athena Swan (with checks to make sure they are actually obeyed).

And that leads on naturally to one of the most novel and appealing recommendations in the report.

"A blog will be set up at http://www.ResponsibleMetrics.org" "every year we will award a “Bad Metric” prize to the most

This should be really interesting. Perhaps I should open a book on which university is the first to win the "Bad Metric" prize.

The report covers just about every aspect of research assessment: perverse incentives, whether to include author self-citations, normalisation of citation impact indicators across fields and what to do about the order of authors on multi-author papers.

It’s concluded that there are no satisfactory ways of doing any of these things. Those conclusions are sometimes couched in diplomatic language which may, uh, reduce their impact, but they are clear enough.

The perverse incentives that are imposed by university rankings are considered too. They are commercial products and if universities simply ignored them, they’d vanish. One important problem with rankings is that they never come with any assessment of their errors. It’s been known how to do this at least since Goldstein & Spiegelhalter (1996, League Tables and Their Limitations: Statistical Issues in Comparisons of Institutional Performance). Commercial producers of rankings don’t do it, because to do so would reduce the totally spurious impression of precision in the numbers they sell. Vice-chancellors might bully staff less if they knew that the changes they produce are mere random errors.
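To see why a league table without error bars can mislead, here is a minimal simulation sketch. It is not the method of Goldstein & Spiegelhalter, just a crude Monte Carlo illustration. The 20 institutions, their scores, and the size of the measurement error are all invented for the purpose, and the function name `simulate_rank_intervals` is hypothetical.

```python
import random


def simulate_rank_intervals(true_scores, noise_sd, n_sims=2000, seed=0):
    """Return an approximate 95% interval (low, high) for each institution's rank.

    true_scores: made-up underlying scores (higher is better).
    noise_sd: assumed standard deviation of the measurement error.
    """
    rng = random.Random(seed)
    n = len(true_scores)
    ranks = [[] for _ in range(n)]
    for _ in range(n_sims):
        # Each simulated "year" adds independent Gaussian noise to every score.
        observed = [s + rng.gauss(0, noise_sd) for s in true_scores]
        # Rank 1 goes to the highest observed score.
        order = sorted(range(n), key=lambda i: observed[i], reverse=True)
        for rank, i in enumerate(order, start=1):
            ranks[i].append(rank)
    intervals = []
    for r in ranks:
        r.sort()
        low = r[int(0.025 * n_sims)]
        high = r[int(0.975 * n_sims) - 1]
        intervals.append((low, high))
    return intervals


# Twenty hypothetical institutions whose true scores differ only slightly.
true_scores = [50 + 0.5 * i for i in range(20)]
intervals = simulate_rank_intervals(true_scores, noise_sd=3.0)
for i, (low, high) in enumerate(intervals):
    print(f"institution {i:2d}: rank between {low} and {high}")
```

When the assumed error is comparable to the differences between institutions, the intervals for mid-table institutions cover much of the table, so a move of a few places from one year to the next tells you next to nothing.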

Metrics, and still more altmetrics, are far too crude to measure the quality of science. To hope to do that without reading the paper is pie in the sky (even reading it, it’s often impossible to tell).

The only bit of the report that I’m not entirely happy about is the recommendation to spend more money investigating the metrics that the report has just debunked. It seems to me that there will never be a way of measuring the quality of work without reading it. To spend money on a futile search for new metrics would take money away from science itself. I’m not convinced that it would be money well spent.

Follow-up

This is a slightly modified version of the article that appeared in BMJ blogs yesterday, but with more links to original sources, and a picture. There are already some comments in the BMJ.

The original article, diplomatically, did not link directly to UCL’s Grand Challenge of Human Wellbeing, a well-meaning initiative which, I suspect, will not prove to be value for money when it comes to practical action.

Neither, when referring to the bad effects of disempowerment on human wellbeing (as elucidated by, among others, UCL’s Michael Marmot), did I mention the several ways in which staff have been disempowered and rendered voiceless at UCL during the last five years. Although these actions have undoubtedly had a bad effect on the wellbeing of UCL’s staff, it seemed a little unfair to single out UCL since similar things are happening in most universities. Indeed the fact that it has been far worse at Imperial College (at least in medicine) has probably saved UCL from being denuded. One must be thankful for small mercies.

There is, I think, a lesson to be learned from the fact that formal initiatives in wellbeing are springing up at a time when university managers are set on taking actions that have exactly the opposite effect. A ‘change manager’ is not an adequate substitute for a vote. Who do they imagine is being fooled?

The A to Z of the wellbeing industry

From angelic reiki to patient-centred care

Nobody could possibly be against wellbeing. It would be like opposing motherhood and apple pie. There is a whole spectrum of activities under the wellbeing banner, from the undoubtedly well-meaning patient-centred care at one end, to downright barmy new-age claptrap at the other end. The only question that really matters is, how much of it works?

Let’s start at the fruitcake end of the spectrum.

One thing is obvious. Wellbeing is big business. And if it is no more than a branch of the multi-billion-dollar positive-thinking industry, save your money and get on with your life.

In June 2010, Northamptonshire NHS Foundation Trust sponsored a “Festival of Wellbeing” that included a complementary therapy taster day. In a BBC interview one practitioner used the advertising opportunity, paid for by the NHS, to say “I’m an angelic reiki master teacher and also an angel therapist.” “Angels are just flying spirits, 100 percent just pure light from heaven. They are all around us. Everybody has a guardian angel.” Another said “I am a member of the British Society of Dowsers and use a crystal pendulum to dowse in treatment sessions. Sessions may include a combination of meditation, colour breathing, crystals, colour scarves, and use of a light box.” You couldn’t make it up.

The enormous positive-thinking industry is no better. Barbara Ehrenreich’s book, Smile Or Die: How Positive Thinking Fooled America and the World, explains how dangerous the industry is, because, as much as guardian angels, it is based on myth and delusion. It simply doesn’t work (except for those who make fortunes by promoting it). She argues that it fosters the sort of delusion that gave us the financial crisis (and pessimistic bankers were fired for being right). Her interest in the industry started when she was diagnosed with cancer. She says

“When I was diagnosed, what I found was constant exhortations to be positive, to be cheerful, to even embrace the disease as if it were a gift. If that’s a gift, take me off your Christmas list,”

It is quite clear that positive thinking does nothing whatsoever to prolong your life (Schofield et al 2004; Coyne et al 2007), any more than it will cure tuberculosis or cholera. “Encouraging patients to “be positive” only may add to the burden of having cancer while providing little benefit” (Schofield et al 2004). Far from being helpful, it can be rather cruel.

Just about every government department, the NHS, BIS, HEFCE, and NICE, has produced long reports on wellbeing and stress at work. It’s well known that income is correlated strongly with health (Marmot, M., 2004). For every tube stop you go east of Westminster you lose a year of life expectancy (London Health Observatory). It’s been proposed that what matters is inequality of income (Wilkinson & Pickett, 2009). The nature of the evidence doesn’t allow such a firm conclusion (Lynch et al. 2004), but that isn’t really the point. The real problem is that nobody has come up with good solutions. Sadly the recommendations at the ends of all these reports don’t amount to a hill of beans. Nobody knows what to do, partly because pilot studies are rarely randomised so causality is always dubious, and partly because the obvious steps are either managerially inconvenient, ideologically unacceptable, or too expensive.

Take two examples:

Sir Michael Marmot’s famous Whitehall study (Marmot, M., 2004) has shown that a major correlate of illness is lack of control over one’s own fate: disempowerment. What has been done about it?

In universities it has proved useful to managers to increase centralisation and to disempower academics, precisely the opposite of what Marmot recommends.

|

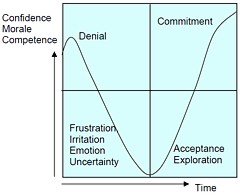

As long as it’s convenient to managers they are not going to change policy. Rather, they hand the job to the HR department which appoints highly paid “change managers,” who add to the stress by sending you stupid graphs that show you emerging from the slough of despond into eternal light once you realise that you really wanted to be disempowered after all. Or they send you on some silly “resilience” course. |

|

A second example comes from debt. According to a BIS report (Mental Capital and Wellbeing), debt is an even stronger risk factor for mental disorder than low income. So what is the government’s response to that? To treble tuition fees to ensure that almost all graduates will stay in debt for most of their lifetime. And this was done despite the fact that the £9k fees will save nothing for the taxpayer: in fact they’ll cost more than the £3k fees. The rise has happened, presumably, because the ideological reasons overrode the government’s own ideas on how to make people happy.

Nothing illustrates better the futility of the wellbeing industry than the response that is reported to have been given to a reporter who posed as an applicant for a “health, safety, and wellbeing adviser” with a local council. When he asked what “wellbeing” advice would involve, a member of the council’s human resources team said: “We are not really sure yet as we have only just added that to the role. We’ll want someone to make sure that staff take breaks, go for walks — that kind of stuff.”

The latest wellbeing notion to re-emerge is the happiness survey. Jeremy Bentham advocated “the greatest happiness for the greatest number,” but neglected to say how you measure it. A YouGov poll asks, “what about your general well-being right now, on a scale from 1 to 10.” I have not the slightest idea about how to answer such a question. As always some things are good, some are bad, and anyway wellbeing relative to whom? Writing this is fun. Trying to solve an algebraic problem is fun. Constant battling with university management in order to be able to do these things is not fun. The whole exercise smacks of the sort of intellectual arrogance that led psychologists in the 1930s to claim that they could sum up a person’s intelligence in a single number. That claim was wrong and it did great social harm.

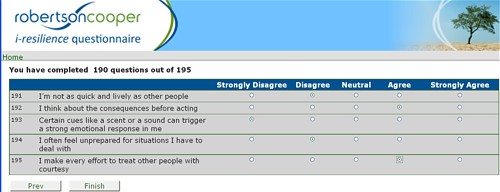

HEFCE has spent a large amount of money setting up “pilot studies” of wellbeing in nine universities. Only one is randomised, so there will be no evidence for causality. The design of the pilots is contracted to a private company, Robertson Cooper, which declines to give full details, but it seems likely that the results will be about as useless as the notorious Durham fish oil “trials” (Goldacre, 2008).

Lastly we get to the sensible end of the spectrum: patient-centred care. Again this has turned into an industry with endless meetings and reports and very few conclusions. Epstein & Street (2011) say

“Helping patients to be more active in consultations changes centuries of physician-dominated dialogues to those that engage patients as active participants. Training physicians to be more mindful, informative, and empathic transforms their role from one characterized by authority to one that has the goals of partnership, solidarity, empathy, and collaboration.”

That’s fine, but the question that is constantly avoided is what happens when a patient with metastatic breast cancer expresses a strong preference for Vitamin C or Gerson therapy, as advocated by the YesToLife charity. The fact of the matter is that the relationship can’t be equal when one party, usually (but not invariably) the doctor, knows a lot more about the problem than the other.

What really matters above all to patients is getting better. Anyone in their right mind would prefer a grumpy condescending doctor who correctly diagnoses their tumour, to an empathetic doctor who misses it. It’s fine for medical students to learn social skills but there is a real danger of so much time being spent on it that they can no longer make a correct diagnosis. Put another way, there is confusion between caring and curing. It is curing that matters most to patients. It is this confusion that forms the basis of the bait and switch tactics (see also here) used by magic medicine advocates to gain the respectability that they crave but rarely deserve.

If, as is only too often the case, the patient can’t be cured, then certainly they should be cared for. That’s a moral obligation when medicine fails in its primary aim. There is a lot of talk about individualised care. It is a buzzword of quacks and also of the libertarian wing which says NICE is too prescriptive. It sounds great, but it helps only if the individualised treatment actually works.

Nobody knows how often medicine fails to be “patient-centred.” Even less does anyone know whether patient-centred care can improve the actual health of patients. There is a strong tendency to do small pilot trials that are as likely to mislead as inform. One properly randomised trial (Kinmonth et al., 1998) concluded

“those committed to achieving the benefits of patient centred consulting should not lose the focus on disease management.”

Non-randomised studies may produce more optimistic conclusions (e.g. Hojat et al, 2011), but there is no way to tell if this is simply because doctors find it easy to be empathetic with patients who have better outcomes.

Obviously I’m in favour of doctors being nice to patients and listening to their wishes. But there is a real danger that it will be seen as more important than curing. There is also a real danger that it will open the doors to all sorts of quacks who claim to provide individualised empathic treatment, but end up recommending Gerson therapy for metastatic breast cancer. The new College of Medicine, which in reality is simply a reincarnation of the late unlamented Prince’s Foundation for Integrated Health, lists as its founder Capita, the private healthcare provider that will, no doubt, be happy to back the herbalists and homeopaths in the College of Medicine, and, no doubt, to make a profit from selling their wares to the NHS.

In my own experience as a patient, there is not nearly as much of a problem with patient-centred care as the industry makes out. Others have been less lucky, as shown by the mid-Staffordshire disaster (Delamothe, 2010). That seems to have resulted from PR being given priority over patients. Perhaps all that’s needed is to save money on all the endless reports and meetings (“the best substitute for work”), ban the use of PR agencies (paid lying) and spend the money on more doctors and nurses so they can give time to people who need it. This is a job that will be hindered considerably by the government’s proposals to sell off NHS work to private providers who will be happy to make money from junk medicine.

Reference

Wilkinson, R. & Pickett, K. 2009, The Spirit Level, ISBN 978 1 84614 039 6

A footnote on Robertson Cooper and "resilience"

I took up the offer of Robertson Cooper, the company to which HEFCE has paid an undisclosed amount of money, to do their free "resilience" assessment.

The first problem arose when it asked about your job. There was no option for scientist, mathematician, university or research, so I was forced to choose "education and training" (a funny juxtaposition since training is arguably the antithesis of education). It had 195 questions, mostly as unanswerable as those in the YouGov happiness survey. I particularly liked question 124 "I see little point in many of the theoretical models I come across". The theoretical models that I come across most are Markov models for the intramolecular changes in a receptor molecule when it binds a ligand (try, for example, Joint distributions of apparent open and shut times of single-ion channels and maximum likelihood fitting of mechanisms). I doubt the person who wrote the question has ever heard of a model of that sort. The answers to that question (and most of the others) would not be worth the paper they are written on.

The whole exercise struck me as the worst sort of vacuous HR psychobabble. It is worrying that HEFCE thinks it is worth spending money on it.