screening

The two posts on this blog about the hazards of significance testing have proved quite popular. See Part 1: the screening problem, and Part 2: the false discovery rate. They’ve had over 20,000 hits already (though I still have to find a journal that will print the paper based on them).

Yet another Alzheimer’s screening story hit the headlines recently and the facts got sorted out in the follow-up section of the screening post. If you haven’t read that already, it might be helpful to do so before going on to this post.

This post has already appeared on the Sense about Science web site. They asked me to explain exactly what was meant by the claim that the screening test had an "accuracy of 87%". That was mentioned in all the media reports, no doubt because it was the only specification of the quality of the test in the press release. Here is my attempt to explain what it means.

The "accuracy" of screening tests

Anything about Alzheimer’s disease is front line news in the media. No doubt that had not escaped the notice of King’s College London when they issued a press release about a recent study of a test for development of dementia based on blood tests. It was widely hailed in the media as a breakthrough in dementia research (the BBC report, for example, is far from accurate). The main reason for the inaccurate reports is, as so often, the press release. It said

"They identified a combination of 10 proteins capable of predicting whether individuals with MCI would develop Alzheimer’s disease within a year, with an accuracy of 87 percent"

The original paper says

"Sixteen proteins correlated with disease severity and cognitive decline. Strongest associations were in the MCI group with a panel of 10 proteins predicting progression to AD (accuracy 87%, sensitivity 85% and specificity 88%)."

What matters to the patient is the probability that, if they come out positive when tested, they will actually get dementia. The Guardian quoted Dr James Pickett, head of research at the Alzheimer’s Society, as saying

"These 10 proteins can predict conversion to dementia with less than 90% accuracy, meaning one in 10 people would get an incorrect result."

That statement simply isn’t right (or, at least, it’s very misleading). The proper way to work out the relevant number has been explained in many places: I did it recently on my blog.

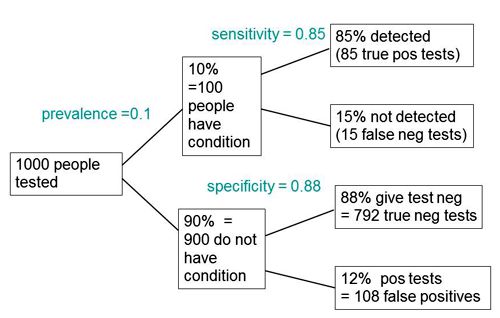

The easiest way to work it out is to make a tree diagram. The diagram is like that previously discussed here, but with a sensitivity of 85% and a specificity of 88%, as specified in the paper.

In order to work out the number we need, we have to specify the true prevalence of people who will develop dementia, in the population being tested. In the tree diagram, this has been taken as 10%. The diagram shows that, out of 1000 people tested, there are 85 + 108 = 193 with a positive test result. Out of this 193, rather more than half (108) are false positives, so if you test positive there is a 56% chance that it’s a false alarm (108/193 = 0.56). A false discovery rate of 56% is far too high for a good test.

This figure of 56% seems to be the basis for a rather good post by NHS Choices with the title “Blood test for Alzheimer’s ‘no better than coin toss’”.

If the prevalence were taken as 5% (a value that’s been given for the over-60 age group) that fraction of false alarms would rise to a disastrous 73%.
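The tree-diagram arithmetic can be checked in a few lines of Python. This is only a sketch: the function names `tree_counts` and `false_discovery_rate` are my own labels, not anything from the paper, and the inputs are the sensitivity, specificity and prevalence values quoted above.

```python
def tree_counts(n_tested, sensitivity, specificity, prevalence):
    """Return (true_pos, false_pos, true_neg, false_neg) for the tree diagram."""
    have_condition = n_tested * prevalence
    no_condition = n_tested - have_condition
    true_pos = have_condition * sensitivity
    false_neg = have_condition - true_pos
    true_neg = no_condition * specificity
    false_pos = no_condition - true_neg
    return true_pos, false_pos, true_neg, false_neg


def false_discovery_rate(sensitivity, specificity, prevalence, n_tested=1000):
    """Fraction of positive tests that are false alarms."""
    true_pos, false_pos, _, _ = tree_counts(
        n_tested, sensitivity, specificity, prevalence
    )
    return false_pos / (true_pos + false_pos)


# Sensitivity 85% and specificity 88%, at the two prevalences discussed above.
for prevalence in (0.10, 0.05):
    fdr = false_discovery_rate(0.85, 0.88, prevalence)
    print(f"prevalence {prevalence:.0%}: {fdr:.0%} of positive tests are false alarms")
```

With these inputs the two lines printed should show 56% and 73%, the same figures as in the text.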

How are these numbers related to the claim that the test is "87% accurate"? That claim was parroted in most of the media reports, and it is why Dr Pickett said "one in 10 people would get an incorrect result".

The paper itself didn’t define "accuracy" anywhere, and I wasn’t familiar with the term in this context (though Stephen Senn pointed out that it is mentioned briefly in the Wikipedia entry for Sensitivity and Specificity). The senior author confirmed that "accuracy" means the total fraction of tests, positive or negative, that give the right result. We see from the tree diagram that, out of 1000 tests, there are 85 correct positive tests and 792 correct negative tests, so the accuracy (with a prevalence of 0.1) is (85 + 792)/1000 = 88%, close to the value that’s cited in the paper.

Accuracy, defined in this way, seems to me not to be a useful measure at all. It conflates positive and negative results and they need to be kept separate to understand the problem. Inspection of the tree diagram shows that it can be expressed algebraically as

accuracy = (sensitivity × prevalence) + (specificity × (1 − prevalence))

It is therefore merely a weighted mean of sensitivity and specificity (weighted by the prevalence). With the numbers in this case, it varies from 0.88 (when prevalence = 0) to 0.85 (when prevalence = 1). Thus it will inevitably give a much more flattering view of the test than the false discovery rate.
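To see how differently the two measures behave, here is a minimal sketch that evaluates both over a few prevalences. The function names are mine, and the prevalences of 1% and 50% are illustrative choices that don’t come from the paper; only 5% and 10% are discussed above.

```python
def accuracy(sensitivity, specificity, prevalence):
    # Weighted mean of sensitivity and specificity, weighted by prevalence.
    return sensitivity * prevalence + specificity * (1 - prevalence)


def false_discovery_rate(sensitivity, specificity, prevalence):
    # Fraction of all positive tests that are false positives.
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return false_pos / (true_pos + false_pos)


SENS, SPEC = 0.85, 0.88  # values quoted in the paper

for prevalence in (0.01, 0.05, 0.10, 0.50):
    acc = accuracy(SENS, SPEC, prevalence)
    fdr = false_discovery_rate(SENS, SPEC, prevalence)
    print(
        f"prevalence {prevalence:4.0%}  "
        f"accuracy {acc:.1%}  false discovery rate {fdr:.1%}"
    )
```

The accuracy can never leave the narrow band between 85% and 88%, whatever the prevalence, while the false discovery rate changes enormously. That is why the accuracy says so little about what a positive result means for the patient.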

No doubt, it is too much to expect that a hard-pressed journalist would have time to figure this out, though it isn’t clear that they wouldn’t have time to contact someone who understands it. But it is clear that it should have been explained in the press release. It wasn’t.

In fact, reading the paper shows that the test was not being proposed as a screening test for dementia at all. It was proposed as a way to select patients for entry into clinical trials. The population that was being tested was very different from the general population of old people, being patients who come to memory clinics in trials centres (the potential trials population).

How best to select patients for entry into clinical trials is a matter of great interest to people who are running trials. It is of very little interest to the public. So all this confusion could have been avoided if King’s had refrained from issuing a press release at all, for a paper like this.

I guess universities think that PR is more important than accuracy.

That’s a bad mistake in an age when pretensions get quickly punctured on the web.

This post first appeared on the Sense about Science web site.

This post is about why screening healthy people is generally a bad idea. It is the first in a series of posts on the hazards of statistics.

There is nothing new about it: Graeme Archer recently wrote a similar piece in his Telegraph blog. But the problems are consistently ignored by people who suggest screening tests, and by journals that promote their work. It seems that it can’t be said often enough.

The reason is that most screening tests give a large number of false positives. If your test comes out positive, your chance of actually having the disease is almost always quite small. False positive tests cause alarm, and they may do real harm, when they lead to unnecessary surgery or other treatments.

Tests for Alzheimer’s disease have been in the news a lot recently. They make a good example, if only because it’s hard to see what good comes of being told early on that you might get Alzheimer’s later when there are no good treatments that can help with that news. But worse still, the news you are given is usually wrong anyway.

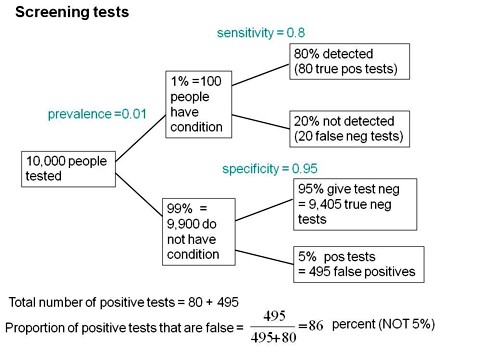

Consider a recent paper that described a test for "mild cognitive impairment" (MCI), a condition that may be, but often isn’t, a precursor of Alzheimer’s disease. The 15-minute test was published in the Journal of Neuropsychiatry and Clinical Neurosciences by Scharre et al (2014). The test sounded pretty good. It had a specificity of 95% and a sensitivity of 80%.

Specificity (95%) means that 95% of people who are healthy will get the correct diagnosis: the test will be negative.

Sensitivity (80%) means that 80% of people who have MCI will get the correct diagnosis: the test will be positive.

To understand the implication of these numbers we need to know also the prevalence of MCI in the population that’s being tested. That was estimated as 1% of the whole population or, for over-60s only, 5%. Now the calculation is easy. Suppose 10,000 people are tested. 1% (100 people) will have MCI, of which 80% (80 people) will be diagnosed correctly. And 9,900 do not have MCI, of which 95% (9,405 people) will test negative (correctly), leaving 495 false positives. The numbers can be laid out in a tree diagram.

The total number of positive tests is 80 + 495 = 575, of which 495 are false positives. The fraction of tests that are false positives is 495/575 = 86%.

Thus there is a 14% chance that if you test positive, you actually have MCI. 86% of people will be alarmed unnecessarily.

Even for people over 60, among whom 5% of the population have MCI, the test gives the wrong result (54%) more often than it gives the right result (46%).
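For anyone who would rather check the tree than draw it, the counts can be reproduced with a short Python sketch. The function name `positive_test_breakdown` is my own invention; the inputs are the sensitivity, specificity and prevalences given above, with 10,000 people tested.

```python
def positive_test_breakdown(n_tested, sensitivity, specificity, prevalence):
    """Count true and false positives among n_tested people."""
    with_mci = n_tested * prevalence
    without_mci = n_tested - with_mci
    true_pos = with_mci * sensitivity
    false_pos = without_mci * (1 - specificity)
    return true_pos, false_pos


for label, prevalence in (("whole population", 0.01), ("over-60s", 0.05)):
    true_pos, false_pos = positive_test_breakdown(10_000, 0.80, 0.95, prevalence)
    total_pos = true_pos + false_pos
    print(
        f"{label}: {true_pos:.0f} true + {false_pos:.0f} false "
        f"= {total_pos:.0f} positives; "
        f"{false_pos / total_pos:.0%} are false alarms"
    )
```

The first line printed should reproduce the 80 + 495 = 575 of the tree diagram (86% false alarms), and the second the 54% quoted for the over-60s.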

The test is clearly worse than useless. That was not made clear by the authors, or by the journal. It was not even made clear by NHS Choices.

It should have been.

It’s easy to put the tree diagram in the form of an equation. Denote sensitivity as sens, specificity as spec and prevalence as prev.

The probability that a positive test means that you actually have the condition is given by

\[\frac{sens \times prev}{sens \times prev + \left(1-spec\right)\left(1-prev\right) }\; \]

In the example above, sens = 0.8, spec = 0.95 and prev = 0.01, so the fraction of positive tests that give the right result is

\[\frac{0.8 \times 0.01}{0.8 \times 0.01 + \left(1 - 0.95 \right)\left(1 - 0.01\right) }\; = 0.139 \]

So 13.9% of positive tests are right, and 86% are wrong, as found in the tree diagram.

The lipid test for Alzheimer’s

Another Alzheimer’s test has been in the headlines very recently. It performs even worse than the 15-minute test, but nobody seems to have noticed. It was published in Nature Medicine, by Mapstone et al. (2014). According to the paper, the sensitivity is 90% and the specificity is 90%, so, by constructing a tree, or by using the equation, the probability that you are ill, given that you test positive, is a mere 8% (for a prevalence of 1%). And even for over-60s (prevalence 5%), the value is only 32%, so two-thirds of positive tests are still wrong. Again this was not pointed out by the authors. Nor was it mentioned by Nature Medicine in its commentary on the paper. And once again, NHS Choices missed the point.
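The equation given earlier is easy to turn into a function, which makes it simple to compare the two tests. This is a minimal sketch: the function name `prob_ill_given_positive` and the dictionary labels are mine, and the inputs are the sensitivities, specificities and prevalences quoted in this post.

```python
def prob_ill_given_positive(sens, spec, prev):
    """Fraction of positive tests that are correct."""
    return sens * prev / (sens * prev + (1 - spec) * (1 - prev))


# (sensitivity, specificity) as reported for each test
tests = {
    "15-minute test (Scharre et al.)": (0.80, 0.95),
    "lipid test (Mapstone et al.)": (0.90, 0.90),
}

for name, (sens, spec) in tests.items():
    for prev in (0.01, 0.05):
        p = prob_ill_given_positive(sens, spec, prev)
        print(f"{name}, prevalence {prev:.0%}: {p:.1%} of positive tests are right")
```

For the lipid test this gives about 8% at a prevalence of 1% and about 32% at 5%, as stated above.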

Why does there seem to be a conspiracy of silence about the deficiencies of screening tests? It has been explained very clearly by people like Margaret McCartney who understand the problems very well. Is it that people are incapable of doing the calculations? Surely not. Is it that it’s better for funding to pretend you’ve invented a good test, when you haven’t? Do journals know that anything to do with Alzheimer’s will get into the headlines, and don’t want to pour cold water on a good story?

Whatever the explanation, it’s bad science that can harm people.

Follow-up

March 12 2014. This post was quickly picked up by the ampp3d blog, run by the Daily Mirror. Conrad Quilty-Harper showed some nice animations under the heading How a “90% accurate” Alzheimer’s test can be wrong 92% of the time.

March 12 2014.

As so often, the journal promoted the paper in a way that wasn’t totally accurate. Hype is more important than accuracy, I guess.

June 12 2014.

The empirical evidence shows that “general health checks” (a euphemism for mass screening of the healthy) simply don’t help. See review by Gøtzsche, Jørgensen & Krogsbøll (2014) in BMJ. They conclude

“Doctors should not offer general health checks to their patients, and governments should abstain from introducing health check programmes, as the Danish minister of health did when she learnt about the results of the Cochrane review and the Inter99 trial. Current programmes, like the one in the United Kingdom, should be abandoned.”

8 July 2014

Yet another over-hyped screening test for Alzheimer’s in the media. And once again, the hype originated in the press release, from King’s College London this time. The press release says

"They identified a combination of 10 proteins capable of predicting whether individuals with MCI would develop Alzheimer’s disease within a year, with an accuracy of 87 percent"

The term “accuracy” is not defined in the press release. And it isn’t defined in the original paper either. I’ve written to the senior author, Simon Lovestone, to try to find out what it means. The original paper says

"Sixteen proteins correlated with disease severity and cognitive decline. Strongest associations were in the MCI group with a panel of 10 proteins predicting progression to AD (accuracy 87%, sensitivity 85% and specificity 88%)."

A simple calculation, as shown above, tells us that with sensitivity 85% and specificity 88%, the fraction of people who have a positive test who are diagnosed correctly is 44%. So 56% of positive results are false alarms. These numbers assume that the prevalence of the condition in the population being tested is 10%, a higher value than assumed in other studies. If the prevalence were only 5% the results would be still worse: 73% of positive tests would be wrong. Either way, that’s not good enough to be useful as a diagnostic method.

In one of the other recent cases of Alzheimer’s tests, six months ago, NHS Choices fell into the same trap. They changed it a bit after I pointed out the problem in the comments. They seem to have learned their lesson because their post on this study was titled “Blood test for Alzheimer’s ‘no better than coin toss’”. That’s based on the 56% of false alarms mentioned above.

The reports on BBC News and other media totally missed the point. But, as so often, their misleading reports were based on a misleading press release. That means that the university, and ultimately the authors, are to blame.

I do hope that the hype has no connection with the fact that the Conflicts of Interest section of the paper says

"SL has patents filed jointly with Proteome Sciences plc related to these findings"

What it doesn’t mention is that, according to Google patents, King’s College London is also a patent holder, and so has a vested interest in promoting the product.

Is it really too much to expect that hard-pressed journalists might do a simple calculation, or phone someone who can do it for them? Until that happens, misleading reports will persist.

9 July 2014

It was disappointing to see that the usually excellent Sarah Boseley in the Guardian didn’t spot the problem either. And still more worrying that she quotes Dr James Pickett, head of research at the Alzheimer’s Society, as saying

These 10 proteins can predict conversion to dementia with less than 90% accuracy, meaning one in 10 people would get an incorrect result.

That number is quite wrong. It isn’t 1 in 10, it’s rather more than 1 in 2.

A resolution

After corresponding with the author, I now see what is going on more clearly.

The word "accuracy" was not defined in the paper, but was used in the press release and widely cited in the media. What it means is the ratio of the total number of true results (true positives + true negatives) to the total number of all results. This doesn’t seem to me to be a useful number to give at all, because it conflates false negatives and false positives into a single number. If a condition is rare, the number of true negatives will be large (as shown above), but this does not make it a good test. What matters most to patients is not accuracy, defined in this way, but the false discovery rate.

The author makes it clear that the results are not intended to be a screening test for Alzheimer’s. It’s obvious from what’s been said that it would be a lousy test. Rather, the paper was intended to identify patients who would eventually (well, within only 18 months) get dementia. The denominator (always the key to statistical problems) in this case is the highly atypical patients who come to memory clinics in trials centres (the potential trials population). The prevalence in this very restricted population may indeed be higher than the 10 percent that I used above.

Reading between the lines of the press release, you might have been able to infer some of this (though not the meaning of “accuracy”). The fact that the media almost universally wrote up the story as a “breakthrough” in Alzheimer’s detection is a consequence of the press release and of not reading the original paper.

I wonder whether it is proper for press releases to be issued at all for papers like this, which address a narrow technical question (selection of patients for trials). That is not a topic of great public interest. It’s asking for misinterpretation and that’s what it got.

I don’t suppose that it escaped the attention of the PR people at King’s that anything that refers to dementia is front page news, whether it’s of public interest or not. When we had an article in Nature in 2008, I remember long discussions about a press release with the arts graduate who wrote it (not at our request). In the end we decided that the topic was not of sufficient public interest to merit a press release and insisted that none was issued. Perhaps that’s what should have happened in this case too.

This discussion has certainly illustrated the value of post-publication peer review. See, especially, the perceptive comments, below, from Humphrey Rang and from Dr Aston and from Dr Kline.

14 July 2014. Sense about Science asked me to write a guest blog to explain more fully the meaning of "accuracy", as used in the paper and press release. It’s appeared on their site and will be reposted on this blog soon.

It must be admitted that the human genome has yet to live up to its potential. The hype that greeted the first complete genome sequence has, ten years on, proved to be a bit exaggerated. It’s going to take longer to make sense of it than was thought at first. That’s pretty normal in science. Commerce, though, can’t wait. Big business has taken over and is trying to sell you all sorts of sequencing, with vastly exaggerated claims about what you can infer from the results.

There are two main areas that are being exploited commercially, health and ancestry. Let’s look at an example of each of them.

Private health screening is wildly oversold

There has been a long-running controversy about the value of screening for things like breast cancer. For a superb account, read Dr Margaret McCartney’s book, "The Patient Paradox: Why sexed-up medicine is bad for your health", “Our obsession with screening swallows up the time of NHS staff and the money of healthy people who pay thousands to private companies for tests they don’t need. Meanwhile, the truly sick are left to wrestle with disjointed services and confusing options”.

Many companies now offer DNA sequencing. It’s become so bad that a website, http://privatehealthscreen.org/ has been set up to monitor the dubious claims made by these companies. It’s run by Dr Peter Deveson, Dr Margaret McCartney, Dr Jon Tomlinson and others. They are on twitter as @PeteDeveson, @MgtMcCartney, @mellojonny. They explain clearly what’s wrong. They show examples of advertisements and explain the problems.

Genetic screening for ancestry is wildly oversold

Many companies are now offering to tell you about your ancestors on the basis of your DNA. I’ll deal with only one example here because it is a case where legal threats were used to try to suppress legitimate criticism. The problem arose initially in an interview on BBC Radio 4’s morning news programme, Today. Play the interview.

I should make it clear that I’m a huge fan of Today. I listen every morning. I’m especially enthusiastic when the presenters include John Humphrys and James Naughtie. The quality of the interviews with politicians is generally superb. Humphrys’ interview with his own boss, George Entwistle, was widely credited with sealing Entwistle’s resignation. But I have often thought that it is not always as good on science as it is on politics. It has suffered from the "false balance" problem, and from the fact that you don’t get to debate directly with your opponent. Everything goes through the presenter who, only too often, doesn’t ask the right questions.

These problems featured in Steve Jones’ report on the quality of BBC Science reporting, which was commissioned by the BBC Trust, and reported in August 2011. The programmes produced by science departments are generally superb. Just think of the Natural History Unit and David Attenborough’s programmes. But the news departments are more variable. One of Jones’ recommendations was that there should be an overall science editor. In January 2012, David Shukman was appointed to this job. But it seems that neither Shukman, nor Today’s own science editor, Tom Feilden, was consulted about the offending interview.

My knowledge of genetics is not good enough to provide a critical commentary. But UCL has world-class experts in the area. The account that follows is based mainly on a draft written by two colleagues, Mark Thomas and David Balding.

Vincent Plagnol has posted on Genomes Unzipped a blog that explains in more detail the abuse of science, and the resort to legal threats by a public figure to try to cover up his errors and exaggerations.

The interview was with the Rector of St Andrews University, Alastair Moffat, who also runs several businesses, including Britains DNA, Scotlands DNA, Irelands DNA, and Yorkshires DNA.

“Inside all of us lies a hidden history, the story of an immense journey told by our DNA.”

All four sites are essentially identical (including lack of apostrophes). These companies will, for a fee, type some genetic markers in either the maternally-inherited mtDNA (mitochondrial DNA), or the male-only Y chromosome, and provide the customer with a report on their ancestry.

We’ll come back to the accuracy of these reports below, but as a preliminary guide, consider some of the claims made by Moffat on the BBC Radio 4 Today programme on July 9, 2012:

- there is scientific evidence for the existence of Adam and Eve

- nine people in the UK have the same DNA as the Queen of Sheba

- there is a man in Caithness who is “Eve’s grandson” because he differs by only two mutations from her DNA

- 33% of all men are extremely closely associated with the founding lineages of Britain

- we found people that have got Berber and Tuareg ancestry from the Saharan nomads

There was a persistent theme in the interview that DNA testing by Moffat’s company was “bringing the Bible to life” (of course, their activities neither support nor detract from anything in the Bible). If you want to either laugh or cry at the shocking range of errors and exaggerations in the interview, listen to it yourself and then read the Genomes Unzipped blog. For example, Plagnol points out that:

"A bit of clarification on chromosome Y and mtDNA: these data represent only a small portion of the human genome and only provide information about the male (fathers of fathers of fathers…) and female (mother of mothers of mothers…) lineages. As an illustration, going back 12 generations (so 300 years approximately) we each have around 4,000 ancestors. mtDNA and chromosome Y DNA only provide information about 2 of them. So these markers provide a very limited window into our ancient ancestry."

Of most concern is that Moffat’s for-profit business was presented as a scientific study in which listeners were twice invited to participate. He admitted that they have to pay but “we subsidise it massively”. This phrase is important because it suggests that this is a genetic history project of such importance and public interest that it has been subsidised by the government or a charitable body. This doesn’t seem plausible – Britains DNA charges £170 for either mtDNA or Y typing, which is comparable with their competitors. There is nothing on the Britains DNA website to indicate that it is subsidised by anybody.

Instead of producing evidence, Moffat paid a lawyer to suppress criticism

When challenged by my two academic colleagues, Mark Thomas and David Balding, on this and other problems arising from the interview, Moffat failed to either clarify or withdraw the "massively subsidised" claim. Instead, his two challengers received letters from solicitors threatening legal action for defamation unless they fulfilled conditions such as agreeing not to state that Mr Moffat’s science is wrong or untrue. In the face of so many obviously dubious claims in the Today interview, it would be a dereliction of the duty of an academic not to point out what is wrong.

The interview certainly sounded exaggerated to me. Luckily, a colleague of Thomas and Balding, Vincent Plagnol (lecturer in statistical genetics), has written a detailed refutation of many of Moffat’s claims on his post Exaggerations and errors in the promotion of genetic ancestry testing, on the Genomes Unzipped blog.

Thomas and Balding maintain that oversimplified and incorrect statements appear also on the web site of Britains DNA.

The interview sounded more like advertising than science

It is clearly unacceptable for a person in high public office to make a claim on national radio that appears to be untrue and intended to support his business interests, yet to refuse to withdraw or clarify it when challenged.

Complaints were made by both Thomas and Balding to the BBC, but they met with the usual defensive reply. The BBC seemed to be more interested in entertainment value than in science, in this case.

A few more aspects of this story are interesting. One of them is the role of Moffat’s business partners, Dr Jim Wilson of Edinburgh University and Dr Gianpiero Cavalleri of the Royal College of Surgeons in Ireland. It was these two academics to whom both Thomas and Balding sent emails about their concerns. And it was these emails that elicited the legal threat. They refused to clarify the “massively subsidised” claim, and they have not publicly disassociated themselves from the many misleading statements made by Moffat, from which as business partners they stand to benefit financially (they are both listed as directors and shareholders of The Moffat Partnership Ltd at Companies House). They have both responded to Thomas and Balding, misrepresenting their statements in a way that would support legal action.

Did James Naughtie think his interview was entertainment, not science?

Another interesting but depressing aspect to the story is the role of the BBC’s interviewer Jim Naughtie. In the face of the most outrageous claims about Eve, the Queen of Sheba and "bringing the Bible to life", which a moment’s thought would have suggested can’t be true, Naughtie asked no sceptical, challenging or probing question. He even commented twice on individuals having "pure" DNA, which is appalling: nobody’s DNA is any purer than another’s; has he not heard of eugenics? Naughtie gave Moffat two opportunities to promote his business, even with details of the web address.

It turns out that Naughtie and Moffat are old friends: as Chancellor of the University of Stirling, Naughtie invited Moffat to sit on its Court, and he posted on YouTube a short video of him supporting Moffat’s campaign for Rector of St Andrews. No such connection was mentioned during the interview.

This interview is not the first. Naughtie also interviewed Moffat (broadcast on 1st June 2011) about a rather silly exercise in claiming – on the basis of Y-chromosome data – that Jim Naughtie is an Englishman. Again Naughtie gave Moffat ample opportunity to promote his business and, as far as I am aware, none of Moffat’s commercial rivals has been given any comparable opportunity for free business promotion on the BBC.

No suggestion is being made that there is anything corrupt about this. Naughtie is not a scientist, and couldn’t be expected to challenge scientific claims. The most likely interpretation of events is that Naughtie thought the subject was interesting (it is) and invited a friend to talk about it. But it was a mistake to do this without involving Today’s science editor, Tom Feilden, or the new BBC science editor, David Shukman, and especially to fail to invite a real expert in the area to challenge the claims. There are lots of such experts close to the BBC in London.

Many other companies cash in on the ancestry industry

Britains DNA is only one of many genetic ancestry companies that make scientifically unsupported claims about what can be inferred from Y chromosome or from mtDNA variants about an individual’s ancestry. This is a big industry, and arouses much public interest, yet in truth what can reliably be inferred from such tests is limited.

Our number of ancestors roughly doubles every generation in the past, so the maternal-only and paternal-only lineages rapidly become negligible among the large numbers of ancestors that each of us had even just 10 or 20 generations ago. Because migration is ubiquitous in human history, those ancestors are likely to have had diverse origins and the origins of just two of them (paternal-only and maternal-only lineages) may not give a good guide to your overall ancestry. Moreover if you have a DNA type that is today common in a particular part of the world, it doesn’t follow that your ancestors came from that location: such genetic tests can say little about where your ancestors were at different times in the past.
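The doubling argument is easy to make concrete. The sketch below is mine, not from Thomas and Balding’s draft: it simply counts 2 to the power n ancestor "slots" n generations back, and works out what share of them the maternal-only and paternal-only lineages represent. It assumes every slot is a different person, which overstates the true number because distant ancestors are often shared, but the conclusion doesn’t depend on that.

```python
def lineage_share(generations):
    """Ancestor slots n generations back, and the share traced by mtDNA plus Y."""
    # Assumption: every slot is a distinct person (ignores shared ancestors).
    ancestors = 2 ** generations
    traced = 2  # one maternal-only and one paternal-only ancestor
    return ancestors, traced / ancestors


for generations in (5, 10, 12, 20):
    ancestors, share = lineage_share(generations)
    print(
        f"{generations:2d} generations: {ancestors:>9,} ancestor slots, "
        f"mtDNA + Y cover {share:.4%}"
    )
```

At 12 generations there are 4,096 slots, which is the "around 4,000 ancestors" in the quotation from Plagnol, and the two lineages that the tests follow account for less than a twentieth of one percent of them.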

Faced with these limitations, but desiring to sell genetic tests, many companies succumb to the temptation to exaggerate and mislead potential clients about the implications of their tests, in some cases leading to disappointed clients who feel betrayed by the scientists in whom they placed trust.

If you have a few hundred pounds to spare, by all means get yourself sequenced for fun. But don’t imagine that the results will tell you much.

Follow-up

25 February 2013. Mark Thomas followed up this post in the Guardian

“To claim someone has ‘Viking ancestors’ is no better than astrology. Exaggerated claims from genetic ancestry testing companies undermine serious research into human genetic history”

10 March 2014

After more than a year of struggling, the BBC did eventually uphold a complaint about this affair. Full accounts can be found on the web site of UCL’s Molecular and Cultural Evolution Lab and on Debbie Kennett’s site.