The Higher Education Funding Council England (HEFCE) gives money to universities. The allocation that a university gets depends strongly on the periodical assessments of the quality of their research. Enormous amounts if time, energy and money go into preparing submissions for these assessments, and the assessment procedure distorts the behaviour of universities in ways that are undesirable. In the last assessment, four papers were submitted by each principal investigator, and the papers were read.

In an effort to reduce the cost of the operation, HEFCE has been asked to reconsider the use of metrics to measure the performance of academics. The committee that is doing this job has asked for submissions from any interested person, by June 20th.

This post is a draft for my submission. I’m publishing it here for comments before producing a final version for submission.

Draft submission to HEFCE concerning the use of metrics.

I’ll consider a number of different metrics that have been proposed for the assessment of the quality of an academic’s work.

Impact factors

The first thing to note is that HEFCE is one of the original signatories of DORA (http://am.ascb.org/dora/ ). The first recommendation of that document is

:"Do not use journal-based metrics, such as Journal Impact Factors, as a surrogate measure of the quality of individual research articles, to assess an individual scientist’s contributions, or in hiring, promotion, or funding decisions"

.Impact factors have been found, time after time, to be utterly inadequate as a way of assessing individuals, e.g. [1], [2]. Even their inventor, Eugene Garfield, says that. There should be no need to rehearse yet again the details. If HEFCE were to allow their use, they would have to withdraw from the DORA agreement, and I presume they would not wish to do this.

Article citations

Citation counting has several problems. Most of them apply equally to the H-index.

- Citations may be high because a paper is good and useful. They equally may be high because the paper is bad. No commercial supplier makes any distinction between these possibilities. It would not be in their commercial interests to spend time on that, but it’s critical for the person who is being judged. For example, Andrew Wakefield’s notorious 1998 paper, which gave a huge boost to the anti-vaccine movement had had 758 citations by 2012 (it was subsequently shown to be fraudulent).

- Citations take far too long to appear to be a useful way to judge recent work, as is needed for judging grant applications or promotions. This is especially damaging to young researchers, and to people (particularly women) who have taken a career break. The counts also don’t take into account citation half-life. A paper that’s still being cited 20 years after it was written clearly had influence, but that takes 20 years to discover,

- The citation rate is very field-dependent. Very mathematical papers are much less likely to be cited, especially by biologists, than more qualitative papers. For example, the solution of the missed event problem in single ion channel analysis [3,4] was the sine qua non for all our subsequent experimental work, but the two papers have only about a tenth of the number of citations of subsequent work that depended on them.

- Most suppliers of citation statistics don’t count citations of books or book chapters. This is bad for me because my only work with over 1000 citations is my 105 page chapter on methods for the analysis of single ion channels [5], which contained quite a lot of original work. It has had 1273 citations according to Google scholar but doesn’t appear at all in Scopus or Web of Science. Neither do the 954 citations of my statistics text book [6]

- There are often big differences between the numbers of citations reported by different commercial suppliers. Even for papers (as opposed to book articles) there can be a two-fold difference between the number of citations reported by Scopus, Web of Science and Google Scholar. The raw data are unreliable and commercial suppliers of metrics are apparently not willing to put in the work to ensure that their products are consistent or complete.

- Citation counts can be (and already are being) manipulated. The easiest way to get a large number of citations is to do no original research at all, but to write reviews in popular areas. Another good way to have ‘impact’ is to write indecisive papers about nutritional epidemiology. That is not behaviour that should command respect.

- Some branches of science are already facing something of a crisis in reproducibility [7]. One reason for this is the perverse incentives which are imposed on scientists. These perverse incentives include the assessment of their work by crude numerical indices.

- “Gaming” of citations is easy. (If students do it it’s called cheating: if academics do it is called gaming.) If HEFCE makes money dependent on citations, then this sort of cheating is likely to take place on an industrial scale. Of course that should not happen, but it would (disguised, no doubt, by some ingenious bureaucratic euphemisms).

- For example, Scigen is a program that generates spoof papers in computer science, by stringing together plausible phases. Over 100 such papers have been accepted for publication. By submitting many such papers, the authors managed to fool Google Scholar in to awarding the fictitious author an H-index greater than that of Albert Einstein http://en.wikipedia.org/wiki/SCIgen

- The use of citation counts has already encouraged guest authorships and such like marginally honest behaviour. There is no way to tell with an author on a paper has actually made any substantial contribution to the work, despite the fact that some journals ask for a statement about contribution.

- It has been known for 17 years that citation counts for individual papers are not detectably correlated with the impact factor of the journal in which the paper appears [1]. That doesn’t seem to have deterred metrics enthusiasts from using both. It should have done.

Given all these problems, it’s hard to see how citation counts could be useful to the REF, except perhaps in really extreme cases such as papers that get next to no citations over 5 or 10 years.

The H-index

This has all the disadvantages of citation counting, but in addition it is strongly biased against young scientists, and against women. This makes it not worth consideration by HEFCE.

Altmetrics

Given the role given to “impact” in the REF, the fact that altmetrics claim to measure impact might make them seem worthy of consideration at first sight. One problem is that the REF failed to make a clear distinction between impact on other scientists is the field and impact on the public.

Altmetrics measures an undefined mixture of both sorts if impact, with totally arbitrary weighting for tweets, Facebook mentions and so on. But the score seems to be related primarily to the trendiness of the title of the paper. Any paper about diet and health, however poor, is guaranteed to feature well on Twitter, as will any paper that has ‘penis’ in the title.

It’s very clear from the examples that I’ve looked at that few people who tweet about a paper have read more than the title. See Why you should ignore altmetrics and other bibliometric nightmares [8].

In most cases, papers were promoted by retweeting the press release or tweet from the journal itself. Only too often the press release is hyped-up. Metrics not only corrupt the behaviour of academics, but also the behaviour of journals. In the cases I’ve examined, reading the papers revealed that they were particularly poor (despite being in glamour journals): they just had trendy titles [8].

There could even be a negative correlation between the number of tweets and the quality of the work. Those who sell altmetrics have never examined this critical question because they ignore the contents of the papers. It would not be in their commercial interests to test their claims if the result was to show a negative correlation. Perhaps the reason why they have never tested their claims is the fear that to do so would reduce their income.

Furthermore you can buy 1000 retweets for $8.00 http://followers-and-likes.com/twitter/buy-twitter-retweets/ That’s outright cheating of course, and not many people would go that far. But authors, and journals, can do a lot of self-promotion on twitter that is totally unrelated to the quality of the work.

It’s worth noting that much good engagement with the public now appears on blogs that are written by scientists themselves, but the 3.6 million views of my blog do not feature in altmetrics scores, never mind Scopus or Web of Science. Altmetrics don’t even measure public engagement very well, never mind academic merit.

Evidence that metrics measure quality

Any metric would be acceptable only if it measured the quality of a person’s work. How could that proposition be tested? In order to judge this, one would have to take a random sample of papers, and look at their metrics 10 or 20 years after publication. The scores would have to be compared with the consensus view of experts in the field. Even then one would have to be careful about the choice of experts (in fields like alternative medicine for example, it would be important to exclude people whose living depended on believing in it). I don’t believe that proper tests have ever been done (and it isn’t in the interests of those who sell metrics to do it).

The great mistake made by almost all bibliometricians is that they ignore what matters most, the contents of papers. They try to make inferences from correlations of metric scores with other, equally dubious, measures of merit. They can’t afford the time to do the right experiment if only because it would harm their own “productivity”.

The evidence that metrics do what’s claimed for them is almost non-existent. For example, in six of the ten years leading up to the 1991 Nobel prize, Bert Sakmann failed to meet the metrics-based publication target set by Imperial College London, and these failures included the years in which the original single channel paper was published [9] and also the year, 1985, when he published a paper [10] that was subsequently named as a classic in the field [11]. In two of these ten years he had no publications whatsoever. See also [12].

Application of metrics in the way that it’s been done at Imperial and also at Queen Mary College London, would result in firing of the most original minds.

Gaming and the public perception of science

Every form of metric alters behaviour, in such a way that it becomes useless for its stated purpose. This is already well-known in economics, where it’s know as Goodharts’s law http://en.wikipedia.org/wiki/Goodhart’s_law “"When a measure becomes a target, it ceases to be a good measure”. That alone is a sufficient reason not to extend metrics to science. Metrics have already become one of several perverse incentives that control scientists’ behaviour. They have encouraged gaming, hype, guest authorships and, increasingly, outright fraud [13].

The general public has become aware of this behaviour and it is starting to do serious harm to perceptions of all science. As long ago as 1999, Haerlin & Parr [14] wrote in Nature, under the title How to restore Public Trust in Science,

“Scientists are no longer perceived exclusively as guardians of objective truth, but also as smart promoters of their own interests in a media-driven marketplace.”

And in January 17, 2006, a vicious spoof on a Science paper appeared, not in a scientific journal, but in the New York Times. See https://www.dcscience.net/?p=156

The use of metrics would provide a direct incentive to this sort of behaviour. It would be a tragedy not only for people who are misjudged by crude numerical indices, but also a tragedy for the reputation of science as a whole.

Conclusion

There is no good evidence that any metric measures quality, at least over the short time span that’s needed for them to be useful for giving grants or deciding on promotions). On the other hand there is good evidence that use of metrics provides a strong incentive to bad behaviour, both by scientists and by journals. They have already started to damage the public perception of science of the honesty of science.

The conclusion is obvious. Metrics should not be used to judge academic performance.

What should be done?

If metrics aren’t used, how should assessment be done? Roderick Floud was president of Universities UK from 2001 to 2003. He’s is nothing if not an establishment person. He said recently:

“Each assessment costs somewhere between £20 million and £100 million, yet 75 per cent of the funding goes every time to the top 25 universities. Moreover, the share that each receives has hardly changed during the past 20 years.

It is an expensive charade. Far better to distribute all of the money through the research councils in a properly competitive system.”

The obvious danger of giving all the money to the Research Councils is that people might be fired solely because they didn’t have big enough grants. That’s serious -it’s already happened at Kings College London, Queen Mary London and at Imperial College. This problem might be ameliorated if there were a maximum on the size of grants and/or on the number of papers a person could publish, as I suggested at the open data debate. And it would help if univerities appointed vice-chancellors with a better long term view than most seem to have at the moment.

Aggregate metrics? It’s been suggested that the problems are smaller if one looks at aggregated metrics for a whole department. rather than the metrics for individual people. Clearly looking at departments would average out anomalies. The snag is that it wouldn’t circumvent Goodhart’s law. If the money depended on the aggregate score, it would still put great pressure on universities to recruit people with high citations, regardless of the quality of their work, just as it would if individuals were being assessed. That would weigh against thoughtful people (and not least women).

The best solution would be to abolish the REF and give the money to research councils, with precautions to prevent people being fired because their research wasn’t expensive enough. If politicians insist that the "expensive charade" is to be repeated, then I see no option but to continue with a system that’s similar to the present one: that would waste money and distract us from our job.

1. Seglen PO (1997) Why the impact factor of journals should not be used for evaluating research. British Medical Journal 314: 498-502. [Download pdf]

2. Colquhoun D (2003) Challenging the tyranny of impact factors. Nature 423: 479. [Download pdf]

3. Hawkes AG, Jalali A, Colquhoun D (1990) The distributions of the apparent open times and shut times in a single channel record when brief events can not be detected. Philosophical Transactions of the Royal Society London A 332: 511-538. [Get pdf]

4. Hawkes AG, Jalali A, Colquhoun D (1992) Asymptotic distributions of apparent open times and shut times in a single channel record allowing for the omission of brief events. Philosophical Transactions of the Royal Society London B 337: 383-404. [Get pdf]

5. Colquhoun D, Sigworth FJ (1995) Fitting and statistical analysis of single-channel records. In: Sakmann B, Neher E, editors. Single Channel Recording. New York: Plenum Press. pp. 483-587.

6. David Colquhoun on Google Scholar. Available: http://scholar.google.co.uk/citations?user=JXQ2kXoAAAAJ&hl=en17-6-2014

7. Ioannidis JP (2005) Why most published research findings are false. PLoS Med 2: e124.[full text]

8. Colquhoun D, Plested AJ Why you should ignore altmetrics and other bibliometric nightmares. Available: https://www.dcscience.net/?p=6369

9. Neher E, Sakmann B (1976) Single channel currents recorded from membrane of denervated frog muscle fibres. Nature 260: 799-802.

10. Colquhoun D, Sakmann B (1985) Fast events in single-channel currents activated by acetylcholine and its analogues at the frog muscle end-plate. J Physiol (Lond) 369: 501-557. [Download pdf]

11. Colquhoun D (2007) What have we learned from single ion channels? J Physiol 581: 425-427.[Download pdf]

12. Colquhoun D (2007) How to get good science. Physiology News 69: 12-14. [Download pdf] See also https://www.dcscience.net/?p=182

13. Oransky, I. Retraction Watch. Available: http://retractionwatch.com/18-6-2014

14. Haerlin B, Parr D (1999) How to restore public trust in science. Nature 400: 499. 10.1038/22867 [doi].[Get pdf]

Follow-up

Some other posts on this topic

Why Metrics Cannot Measure Research Quality: A Response to the HEFCE Consultation

Gaming Google Scholar Citations, Made Simple and Easy

Manipulating Google Scholar Citations and Google Scholar Metrics: simple, easy and tempting

Driving Altmetrics Performance Through Marketing

Death by Metrics (October 30, 2013)

Not everything that counts can be counted

Using metrics to assess research quality By David Spiegelhalter “I am strongly against the suggestion that peer–review can in any way be replaced by bibliometrics”

1 July 2014

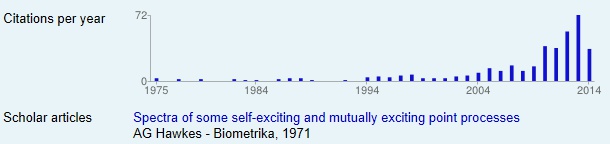

My brilliant statistical colleague, Alan Hawkes, not only laid the foundations for single molecule analysis (and made a career for me) . Before he got into that, he wrote a paper, Spectra of some self-exciting and mutually exciting point processes, (Biometrika 1971). In that paper he described a sort of stochastic process now known as a Hawkes process. In the simplest sort of stochastic process, the Poisson process, events are independent of each other. In a Hawkes process, the occurrence of an event affects the probability of another event occurring, so, for example, events may occur in clusters. Such processes were used for many years to describe the occurrence of earthquakes. More recently, it’s been noticed that such models are useful in finance, marketing, terrorism, burglary, social media, DNA analysis, and to describe invasive banana trees. The 1971 paper languished in relative obscurity for 30 years. Now the citation rate has shot threw the roof.

The papers about Hawkes processes are mostly highly mathematical. They are not the sort of thing that features on twitter. They are serious science, not just another ghastly epidemiological survey of diet and health. Anybody who cites papers of this sort is likely to be a real scientist. The surge in citations suggests to me that the 1971 paper was indeed an important bit of work (because the citations will be made by serious people). How does this affect my views about the use of citations? It shows that even highly mathematical work can achieve respectable citation rates, but it may take a long time before their importance is realised. If Hawkes had been judged by citation counting while he was applying for jobs and promotions, he’d probably have been fired. If his department had been judged by citations of this paper, it would not have scored well. It takes a long time to judge the importance of a paper and that makes citation counting almost useless for decisions about funding and promotion.

David, thank you for working on this important submission. I was somewhat amused by the “Science paper”. There is some similarity to a very telling story from my own field of research. I will provide it here, in case you wish to use it, as it makes one of the points against metrics very pointedly. The story stems from the laboratory of two prominent scientists – Bill Orr and Rajindar Sohal. They published in Science in 1994 a paper entitled “EXTENSION OF LIFE-SPAN BY OVEREXPRESSION OF SUPEROXIDE-DISMUTASE AND CATALASE IN DROSOPHILA-MELANOGASTER”. The paper received attention and citations, it was questioned by a number of follow-up studies. The authors honourably responded and repeated a lot of their experiments applying more vigorous controls – they published ten years later a paper in Experimental Gerontology entitled “Does overexpression of Cu,Zn-SOD extend life span in Drosophila melanogaster?”. Their answer was no.

Let us now look at the metrics of these articles. To be stringent, let us only look at the metrics for the decade following the publication of the second paper, that is the citations accumulated for these publications between 2004-2013, when they were both available and when the authors were saying in their latter paper that the former conclusions were mistaken. Good solid science vs splashy results from a poorly controlled (as it emerged from a lot of subsequent work and related improvements in the way questions were asked in the field) study. The data are from Web of Science

Science paper: 351 citations

Experimental Gerontology paper: 62 citations

*

David, this is excellent stuff. Three additional points/examples occur to me

1. Ed B Lewis won the Nobel Prize in 1995 for his fantastically original work on Drosophila developmental genetics. It was the opposite of fashion-led research and was not much appreciated until very late. One day I should tell you the inside story of how his Nature paper got published, its a bit long to write now. His publication record would place him perhaps at a lower level than Sakmann. His work was published very intermittently his H-factor is only 28 (WOS) from a very long life in Science.

2. Citation inconsistency, An example: my paper that touches on psychology and social science as well as autism and hormones “Men Women and Ghosts in Science” Plos Biology, viewed ca 70,000 times Web of Science citations 23, Google Scholar 93

3. Cambridge University cannot hire more than a few teachers, there seems to be no money. Yet to staff the offices that work out ways to optimise the University’s complex preparation of the RAE, there appears to have been plenty of money. After all, clever manipulation of the RAE brings financial rewards while taking on a new lecturer just costs money. Its a no-brainer!

you would have to find out more about these offices as i cannot work out who does what but there are plenty of them:

http://www.admin.cam.ac.uk/offices/research/contact/list.aspx

http://www.admin.cam.ac.uk/offices/rso/

@pal

Thanks Peter. They are all excellent points. They only problem is to prevent it from becoming so long that nobody reads it. Your first two points both depend on looking at particular papers. That’s what bibliometricians never do, and why their evidence is so poor,

David, this is great. Unfortunately I don’t have anything to add, but I do work for a journal so I’ll try and spread your ideas about metrics around the office. Goodhart’s law offers a particularly valuable lesson to those of us on this side of the publishing divide.

Ironically, our inhouse metrics people largely agree with you, but I suspect those further up the foodchain want a simple score by which to judge our “productivity”.

As an impressionable young scientist everything seems like a giant mess!

On one hand you have esteemed scientists telling you the H-index, and all bibliometrics for that matter, are useless allied with Nature, Science and Cell distorting ‘good science’. Then the work place is driving you to strive to publish in these journals while all the time keeping an eye on your citations.

While everything that’s said makes perfect sense isn’t it easier for older scientists to have these views rather than younger ones. If I suddenly stop caring about citations my career would be over before it’s begun. If I suddenly said I’m not going to attempt to publish in Nature, Science or Cell I would be equally doomed. The reason being that regardless of how utopian we would like things to be people will judge me as an individual everyday on the impact factor of the journals I publish in and the number of citations I accrue.

Therefore, isn’t it a more fruitful use of our time to discuss what should be done rather than why bibliometrics need to go the way of the dodo?!

To be frank, as someone who left healthcare to pursue a PhD (in electrophysiology I may add) and condemn myself (tongue in cheek) to a life of relative poverty as a scientist it all leads me to the rather unfortunate conclusion of: ‘what was the point!’

@Impressionable_scientist

I can only agree when you say “As an impressionable young scientist everything seems like a giant mess!”

You are undoubtedly right to say that it’s much easier for old scientists to be critical than young. That’s why I now spend quite a lot of time going on about the problems. My aim is to speak out for those who are terrified to do so themselves.

The problem lies in the fact that the publish-or-perish culture was itself imposed by senior scientists (like young ones, some senior scientists are more sensible than others: they tend to lose touch with reality when they become deans). There are still too many who are entranced by impact factors, but the light is beginning to dawn. More and more realise the sheer stupidity of impact factors.

I have made some suggestions about what can be done to help at the end of my post at http://www.dcscience.net/?p=4873 I’m perfectly serious about limiting the number of publications and grant sizes.

One problem is that publishing is changing fast now, and nobody is sure yet where it’s going. The fact that open access is mandated by research councils is a big step forward. More and more places are emerging where you can publish cheaply, or even free, with open access. How much longer will people be willing to pay £5000 to publish in Nature Communications, when they can get the same result much cheaper elsewhere. It’s hard to see how Nature and Elsevier can survive that sort of competition in the long run. And it’s not clear how much longer universities will be willing to pay extortionate amounts to the old publishers.

The question of how well a candidate understood the problem was always important, for me at least. It’s one of the iniquitous aspects of the publish-or-perish culture that young scientists are not given the time to learn the tools of their trade. In my particular business, I had to learn a lot about statistics, matrix algebra, stochastic processes and computer programming. That resulted in several years during which I published little. These days, I might well have been fired before I’d got started properly.

On the bright side, I also suspect that the perception of young scientists is a bit worse than the reality. Certainly it was always the policy in my department to ask job candidates to nominate their best papers (up to four, but fewer for young candidates). Then we read the papers and asked questions about them, not least about the methods section. That quickly revealed whether they were guest authors or not (a few were). We certainly tried to judge the contents of the paper and to ignore where it was published. In fact the brevity of Nature papers put them at something of a disadvantage in this process. You did better with a small number of longish, thorough, papers, and without too many co-authors so the candidates contribution and understanding was clear.

I think a system like this is used in many good labs. I suspect that the better the university you are applying to. the less impact factors matter, and the more depth and understanding matter. But I haven’t got hard data to back up these views, so I’m certain about how widespread the sensible practices are.

I really believe that, in the end, a few thorough long papers will be better for your career than dozens of slim and dubious results. Of course there is always an element of pure luck in whether you come up with something impressive. But as Louis Pasteur said “Chance favors only the prepared mind.” All I can say is. Good Luck!

*There are metrics of rigour available – we have scored >1000 publications from top 5 Russel Group institutions, and its not happy reading. See the detail, and other bits (apologies for self promotion) in my Inaugural at https://www.youtube.com/watch?v=H34rIz_yMIU

@Maclomaclee

Thanks very much for sending that link to your inaugural lecture. Everyone should listen to it, and act on it. It would be fascinating to compare the number of citations etc for the best papers and the worst.

The inaugural lecture shows one good approach to evaluating the quality of publications. They looked at whether the article mentions particular features that determine the statistical rigour of an experiment.

It is possible, but hard, to generalise that approach through computer-assisted content analysis. Think of a combination of the techniques used by humanities scholars to compare Shakespeare’s plays to Marlow’s, and the sentiment analysis fims do on Twitter feeds to estimate emotional reactions to particular products.

It would work something like this. You get experienced reviewers to mark in several articles the phrases they think indicvate something important about the quality of the article from their disciplinary perspective. Create large sets of positive and negative phrases connected to particular constructs. Then use machine learning algorithms on a large sample of articles to find which combinations of phrases are useful in distinguishing articles on these human-defined multiple criteria. After some rounds of refinement, algorithmically producing profile of articles checked by multiple reviewers, it could be possible to develop a good content analysis test set. Then use this to generate profile visualisations of the strength and weakness of an article, and build it in to Google Scholar, so that every article has a quality profile.

@davenewman

I suspect that what you suggest is easier said than done. But by all means try it: then wait 10 years so that the importance of the paper can be assessed realistically. It amazes me that people who invent metrics for scientific merit seem to feel no obligation to test their products. But I guess that might reduce sales.

[…] absurd. For those interested in why they are absurd, see Colquhoun’s discussion of them here and here. It would seem obvious to most people that in order to assess the quality of research it […]

[…] impact factors and citation numbers; these would certainly be cheaper although are really a no better way to assess the quality of research. Where the original RAE was used to apportion grant money, […]

[…] by journals, university PR people and authors themselves. These result in no small part from the culture of metrics and the mismeasurement of science. The REF has added to the […]