Alzheimer’s

In the course of thinking about metrics, I keep coming across cases of over-promoted research. An early case was “Why honey isn’t a wonder cough cure: more academic spin”. More recently, I noticed these examples.

“Effect of Vitamin E and Memantine on Functional Decline in Alzheimer Disease” (spoiler: very little), published in the Journal of the American Medical Association,

and “Primary Prevention of Cardiovascular Disease with a Mediterranean Diet”, in the New England Journal of Medicine (which had the second highest altmetric score in 2013)

and "Sleep Drives Metabolite Clearance from the Adult Brain", published in Science

In all these cases, misleading press releases were issued by the journals themselves and by the universities. These were copied out by hard-pressed journalists and made headlines that were certainly not merited by the work. In the last three cases, hyped up tweets came from the journals. The responsibility for this hype must eventually rest with the authors. The last two papers came second and fourth in the list of highest altmetric scores for 2013.

Here are two more very recent examples. It seems that every time I check a highly tweeted paper, it turns out to be very second rate. Both papers involve fMRI imaging, and since the infamous dead salmon paper, I’ve been a bit sceptical about such studies. But that is irrelevant to what follows.

Boost your memory with electricity

That was a popular headline at the end of August. It referred to a paper in Science magazine:

“Targeted enhancement of cortical-hippocampal brain networks and associative memory” (Wang, JX et al, Science, 29 August, 2014)

This study was promoted by Northwestern University as "Electric current to brain boosts memory". And Science tweeted along the same lines.


Science’s link did not lead to the paper, but rather to a puff piece, "Rebooting memory with magnets". Again all the emphasis was on memory, with the usual entirely speculative stuff about helping Alzheimer’s disease. But the paper itself was behind Science’s paywall. You couldn’t read it unless your employer subscribed to Science.


All the publicity led to much retweeting and a big altmetrics score. Given that the paper was not open access, it’s likely that most of the retweeters had not actually read the paper.

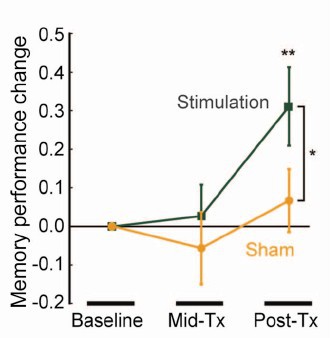

When you read the paper, you found that it is mostly not about memory at all. It was mostly about fMRI. In fact the only reference to memory was in a subsection of Figure 4. This is the evidence.

That looks desperately unconvincing to me. The test of significance gives P = 0.043. In an underpowered study like this, the chance of this being a false discovery is probably at least 50%. A result like this means, at most, "worth another look". It does not begin to justify all the hype that surrounded the paper. The journal, the university’s PR department, and ultimately the authors, must bear the responsibility for the unjustified claims.
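A rough sketch of the arithmetic behind a figure like that, using the same tree-diagram logic that is applied to screening tests. The prevalence of real effects (10%) and the power values are illustrative assumptions, not numbers taken from the paper, and the calculation treats "significant" as P ≤ 0.05 rather than using the observed P = 0.043.

```python
def false_discovery_rate(prior_real, power, alpha=0.05):
    """Fraction of 'significant' results that are false positives.

    prior_real: assumed fraction of tested hypotheses where a real effect exists
    power:      assumed probability of detecting a real effect
    alpha:      significance threshold
    """
    false_pos = alpha * (1 - prior_real)   # no real effect, but test is 'significant'
    true_pos = power * prior_real          # real effect, correctly detected
    return false_pos / (false_pos + true_pos)


# Illustrative inputs only: 10% of tested effects are real, varying power.
for power in (0.8, 0.5, 0.2):
    fdr = false_discovery_rate(prior_real=0.1, power=power)
    print(f"power = {power:.1f}: false discovery rate = {fdr:.0%}")
```

Under these assumed inputs the false discovery rate runs from about 36% for a well-powered study (power 0.8) to about 69% when the power is only 0.2. The lower the power, the worse it gets.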

Science does not allow online comments following the paper, but there are now plenty of sites that do. NHS Choices did a fairly good job of putting the paper into perspective, though they failed to notice the statistical weakness. A commenter on PubPeer noted that Science had recently announced that it would tighten statistical standards. In this case, they failed. The age of post-publication peer review is already reaching maturity.

Boost your memory with cocoa

Another glamour journal, Nature Neuroscience, hit the headlines on October 26, 2014, in a paper that was publicised in a Nature podcast and a rather uninformative press release.

"Enhancing dentate gyrus function with dietary flavanols improves cognition in older adults", Brickman et al., Nat Neurosci. 2014. doi: 10.1038/nn.3850.

The journal helpfully lists no fewer than 89 news items related to this study. Mostly they were something like “Drinking cocoa could improve your memory” (Kat Lay, in The Times). Only a handful of the 89 reports spotted the many problems.

A puff piece from Columbia University’s PR department quoted the senior author, Dr Small, making the dramatic claim that

“If a participant had the memory of a typical 60-year-old at the beginning of the study, after three months that person on average had the memory of a typical 30- or 40-year-old.”


Like anything to do with diet, the paper immediately got circulated on Twitter. No doubt most of the people who retweeted the message had not read the (paywalled) paper. The links almost all led to inaccurate press accounts, not to the paper itself.

But some people actually read the paywalled paper and post-publication review soon kicked in. Pubmed Commons is a good site for that, because Pubmed is where a lot of people go for references. Hilda Bastian kicked off the comments there (her comment was picked out by Retraction Watch). Her conclusion was this.

"It’s good to see claims about dietary supplements tested. However, the results here rely on a chain of yet-to-be-validated assumptions that are still weakly supported at each point. In my opinion, the immodest title of this paper is not supported by its contents."

(Hilda Bastian runs the Statistically Funny blog -“The comedic possibilities of clinical epidemiology are known to be limitless”, and also a Scientific American blog about risk, Absolutely Maybe.)

NHS Choices spotted most of the problems too, in "A mug of cocoa is not a cure for memory problems". And so did Ian Musgrave of the University of Adelaide, who wrote "Most Disappointing Headline Ever (No, Chocolate Will Not Improve Your Memory)".

Here are some of the many problems.

- The paper was not about cocoa. Drinks containing 900 mg cocoa flavanols (as much as in about 25 chocolate bars) and 138 mg of (−)-epicatechin were compared with much lower amounts of these compounds

- The abstract, all that most people could read, said that subjects were given "high or low cocoa–containing diet for 3 months". But it wasn’t a test of cocoa: it was a test of a dietary "supplement".

- The sample was small (37 people altogether, split between four groups), and therefore under-powered for detection of the small effect that was expected (and observed).

- The authors declared the result to be "significant" but you had to hunt through the paper to discover that this meant P = 0.04 (hint -it’s 6 lines above Table 1). That means that there is around a 50% chance that it’s a false discovery.

- The test was short -only three months

- The test didn’t measure memory anyway. It measured reaction speed. They did test memory retention too, and there was no detectable improvement. This was not mentioned in the abstract. Neither was the fact that exercise had no detectable effect.

- The study was funded by the Mars bar company. They, like many others, are clearly looking for a niche in the huge "supplement" market.

The claims by the senior author, in a Columbia promotional video that the drink produced "an improvement in memory" and "an improvement in memory performance by two or three decades" seem to have a very thin basis indeed. As has the statement that "we don’t need a pharmaceutical agent" to ameliorate a natural process (aging). High doses of supplements are pharmaceutical agents.

To be fair, the senior author did say, in the Columbia press release, that "the findings need to be replicated in a larger study—which he and his team plan to do". But there is no hint of this in the paper itself, or in the title of the press release "Dietary Flavanols Reverse Age-Related Memory Decline". The time for all the publicity is surely after a well-powered study, not before it.

The high altmetrics score for this paper is yet another blow to the reputation of altmetrics.

One may well ask why Nature Neuroscience and the Columbia press office allowed such extravagant claims to be made on such a flimsy basis.

What’s going wrong?

These two papers have much in common. Elaborate imaging studies are accompanied by poor functional tests. All the hype focusses on the latter. This led me to the speculation (in Pubmed Commons) that what actually happens is as follows.

- Authors do big imaging (fMRI) study.

- Glamour journal says coloured blobs are no longer enough and refuses to publish without functional information.

- Authors tag on a small human study.

- Paper gets published.

- Hyped up press releases issued that refer mostly to the add on.

- Journal and authors are happy.

- But science is not advanced.

It’s no wonder that Dorothy Bishop wrote "High-impact journals: where newsworthiness trumps methodology".

It’s time we forgot glamour journals. Publish open access on the web with open comments. Post-publication peer review is working.

But boycott commercial publishers who charge large amounts for open access. It shouldn’t cost more than about £200, and more and more are essentially free (my latest will appear shortly in Royal Society Open Science).

Follow-up

Hilda Bastian has an excellent post about the dangers of reading only the abstract "Science in the Abstract: Don’t Judge a Study by its Cover"

4 November 2014

I was upbraided on Twitter by Euan Adie, founder of Altmetric.com, because I didn’t click through the altmetric symbol to look at the citations: "shouldn’t have to tell you to look at the underlying data David" and "you could have saved a lot of Google time". But when I did do that, all I found was a list of media reports and blogs, pretty much the same as Nature Neuroscience provides itself.

More interesting, I found that my blog wasn’t listed and neither was PubMed Commons. When I asked why, I was told "needs to regularly cite primary research. PubMed, PMC or repository links". But this paper is behind a paywall. So I provide (possibly illegally) a copy of it, so anyone can verify my comments. The result is that altmetric’s dumb algorithms ignore it. In order to get counted you have to provide links that lead nowhere.

So here’s a link to the abstract (only) in Pubmed for the Science paper http://www.ncbi.nlm.nih.gov/pubmed/25170153 and here’s the link for the Nature Neuroscience paper http://www.ncbi.nlm.nih.gov/pubmed/25344629

It seems that altmetrics doesn’t even do the job that it claims to do very efficiently.

It worked. By later in the day, this blog was listed both in Nature’s metrics section and by altmetric.com. But comments on Pubmed Commons were still missing. That’s bad because it’s an excellent place for post-publication peer review.

The two posts on this blog about the hazards of significance testing have proved quite popular. See Part 1: the screening problem, and Part 2: the false discovery rate. They’ve had over 20,000 hits already (though I still have to find a journal that will print the paper based on them).

Yet another Alzheimer’s screening story hit the headlines recently and the facts got sorted out in the follow up section of the screening post. If you haven’t read that already, it might be helpful to do so before going on to this post.

This post has already appeared on the Sense about Science web site. They asked me to explain exactly what was meant by the claim that the screening test had an "accuracy of 87%". That was mentioned in all the media reports, no doubt because it was the only specification of the quality of the test in the press release. Here is my attempt to explain what it means.

The "accuracy" of screening tests

Anything about Alzheimer’s disease is front line news in the media. No doubt that had not escaped the notice of King’s College London when they issued a press release about a recent study of a blood test for the development of dementia. It was widely hailed in the media as a breakthrough in dementia research (the BBC report, for example, is far from accurate). The main reason for the inaccurate reports is, as so often, the press release. It said

"They identified a combination of 10 proteins capable of predicting whether individuals with MCI would develop Alzheimer’s disease within a year, with an accuracy of 87 percent"

The original paper says

"Sixteen proteins correlated with disease severity and cognitive decline. Strongest associations were in the MCI group with a panel of 10 proteins predicting progression to AD (accuracy 87%, sensitivity 85% and specificity 88%)."

What matters to the patient is the probability that, if they come out positive when tested, they will actually get dementia. The Guardian quoted Dr James Pickett, head of research at the Alzheimer’s Society, as saying

"These 10 proteins can predict conversion to dementia with less than 90% accuracy, meaning one in 10 people would get an incorrect result."

That statement simply isn’t right (or, at least, it’s very misleading). The proper way to work out the relevant number has been explained in many places -I did it recently on my blog.

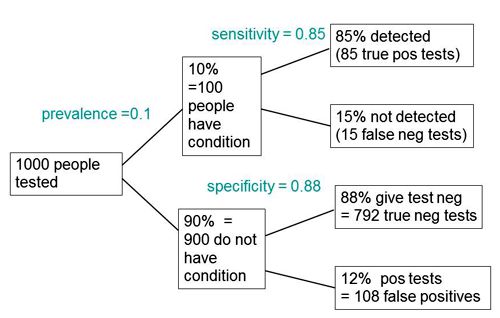

The easiest way to work it out is to make a tree diagram. The diagram is like that previously discussed here, but with a sensitivity of 85% and a specificity of 88%, as specified in the paper.

In order to work out the number we need, we have to specify the true prevalence of people who will develop dementia, in the population being tested. In the tree diagram, this has been taken as 10%. The diagram shows that, out of 1000 people tested, there are 85 + 108 = 193 with a positive test result. Out of these 193, rather more than half (108) are false positives, so if you test positive there is a 56% chance that it’s a false alarm (108/193 = 0.56). A false discovery rate of 56% is far too high for a good test.

This figure of 56% seems to be the basis for a rather good post by NHS Choices with the title “Blood test for Alzheimer’s ‘no better than coin toss’”.

If the prevalence were taken as 5% (a value that’s been given for the over-60 age group) that fraction of false alarms would rise to a disastrous 73%.
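For anyone who wants to check the tree-diagram arithmetic, here is a minimal sketch. The sensitivity (85%) and specificity (88%) are those given in the paper, and the two prevalences are the ones used above. The function name and layout are just for illustration.

```python
def screening_tree(n, prevalence, sensitivity, specificity):
    """Split n tested people into the four branches of the tree diagram."""
    will_develop = n * prevalence            # people who will get dementia
    will_not = n - will_develop              # people who will not
    true_pos = will_develop * sensitivity    # correctly test positive
    false_neg = will_develop - true_pos      # missed cases
    true_neg = will_not * specificity        # correctly test negative
    false_pos = will_not - true_neg          # false alarms
    return true_pos, false_neg, true_neg, false_pos


for prevalence in (0.10, 0.05):
    tp, fn, tn, fp = screening_tree(1000, prevalence, 0.85, 0.88)
    false_alarm_fraction = fp / (tp + fp)    # the false discovery rate
    print(f"prevalence {prevalence:.0%}: {tp:.1f} true positives, "
          f"{fp:.1f} false positives, "
          f"false discovery rate = {false_alarm_fraction:.0%}")
```

With a prevalence of 10% this gives 85 true positives and 108 false positives, so 56% of positive tests are false alarms. With a prevalence of 5% the fraction rises to 73%.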

How are these numbers related to the claim that the test is "87% accurate"? That claim was parroted in most of the media reports, and it is why Dr Pickett said "one in 10 people would get an incorrect result".

The paper itself didn’t define "accuracy" anywhere, and I wasn’t familiar with the term in this context (though Stephen Senn pointed out that it is mentioned briefly in the Wikipedia entry for Sensitivity and Specificity). The senior author confirmed that "accuracy" means the total fraction of tests, positive or negative, that give the right result. We see from the tree diagram that, out of 1000 tests, there are 85 correct positive tests and 792 correct negative tests, so the accuracy (with a prevalence of 0.1) is (85 + 792)/1000 = 88%, close to the value that’s cited in the paper.

Accuracy, defined in this way, seems to me not to be a useful measure at all. It conflates positive and negative results and they need to be kept separate to understand the problem. Inspection of the tree diagram shows that it can be expressed algebraically as

accuracy = (sensitivity × prevalence) + (specificity × (1 − prevalence))

It is therefore merely a weighted mean of sensitivity and specificity (weighted by the prevalence). With the numbers in this case, it varies from 0.88 (when prevalence = 0) to 0.85 (when prevalence = 1). Thus it will inevitably give a much more flattering view of the test than the false discovery rate.
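The contrast between the two measures is easy to see numerically. This sketch uses the sensitivity and specificity from the paper. The list of prevalences is an arbitrary choice for illustration, and the function names are mine.

```python
SENSITIVITY = 0.85   # from the paper
SPECIFICITY = 0.88   # from the paper


def accuracy(prevalence):
    """Fraction of all tests, positive or negative, that give the right result."""
    return SENSITIVITY * prevalence + SPECIFICITY * (1 - prevalence)


def fraction_false_alarms(prevalence):
    """Fraction of positive tests that are false positives (false discovery rate)."""
    true_pos = SENSITIVITY * prevalence
    false_pos = (1 - SPECIFICITY) * (1 - prevalence)
    return false_pos / (true_pos + false_pos)


# Illustrative prevalences: accuracy barely moves, the false alarm rate does.
for prevalence in (0.01, 0.05, 0.10, 0.50):
    print(f"prevalence {prevalence:.0%}: "
          f"accuracy = {accuracy(prevalence):.1%}, "
          f"false discovery rate = {fraction_false_alarms(prevalence):.0%}")
```

Whatever prevalence is chosen, the accuracy stays between 85% and 88%, while the fraction of false alarms changes enormously. That is why "accuracy" tells the patient almost nothing.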

No doubt, it is too much to expect that a hard-pressed journalist would have time to figure this out, though it isn’t clear that they wouldn’t have time to contact someone who understands it. But it is clear that it should have been explained in the press release. It wasn’t.

In fact, reading the paper shows that the test was not being proposed as a screening test for dementia at all. It was proposed as a way to select patients for entry into clinical trials. The population that was being tested was very different from the general population of old people, being patients who come to memory clinics in trials centres (the potential trials population).

How best to select patients for entry into clinical trials is a matter of great interest to people who are running trials. It is of very little interest to the public. So all this confusion could have been avoided if King’s had refrained from issuing a press release at all, for a paper like this.

I guess universities think that PR is more important than accuracy.

That’s a bad mistake in an age when pretensions get quickly punctured on the web.

This post first appeared on the Sense about Science web site.

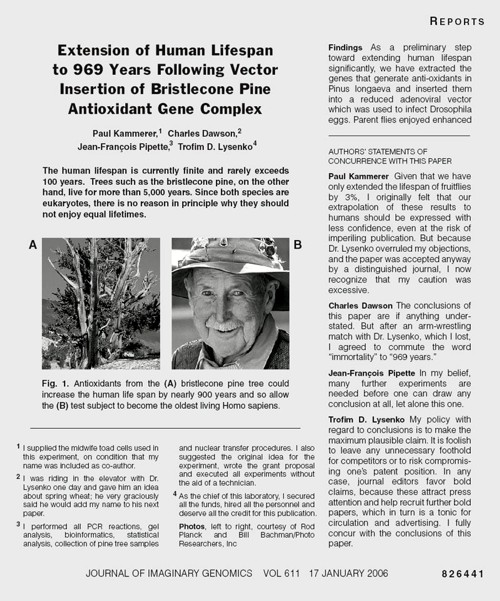

[This is an update of a 2006 post on my old blog]

The New York Times (17 January 2006) published a beautiful spoof that illustrates only too clearly some of the bad practices that have developed in real science (as well as in quackery). It shows that competition, when taken to excess, leads to dishonesty.

More to the point, it shows that the public is well aware of the dishonesty that has resulted from the publish or perish culture, which has been inflicted on science by numbskull senior administrators (many of them scientists, or at least ex-scientists). Part of the blame must attach to "bibliometricians" who have armed administrators with simple-minded tools, the usefulness of which is entirely unverified. Bibliometricians are truly the quacks of academia. They care little about evidence as long as they can sell the product.

The spoof also illustrates the folly of allowing the hegemony of a handful of glamour journals to hold scientists in thrall. This self-inflicted wound adds to the pressure to produce trendy novelties rather than solid long term work.

It also shows the only-too-frequent failure of peer review to detect problems.

The future lies in publication on the web, with post-publication peer review. It has been shown by sites like PubPeer that anonymous post-publication review can work very well indeed. This would be far cheaper, and a good deal better, than the present extortion practised on universities by publishers. All it needs is for a few more eminent people like mathematician Tim Gowers to speak out (see Elsevier – my part in its downfall).

Recent Nobel-prizewinner Randy Schekman has helped with his declaration that "his lab will no longer send papers to Nature, Cell and Science as they distort scientific process".

The spoof is based on the fraudulent papers by Korean cloner, Woo Suk Hwang, which were published in Science, in 2005. As well as the original fraud, this sad episode exposed the practice of ‘guest authorship’, putting your name on a paper when you have done little or no work, and cannot vouch for the results. The last (‘senior’) author on the 2005 paper was Gerald Schatten, Director of the Pittsburgh Development Center. It turns out that Schatten had not seen any of the original data and had contributed very little to the paper, beyond lobbying Science to accept it. A University of Pittsburgh panel declared Schatten guilty of “research misbehavior”, though he was, amazingly, exonerated of “research misconduct”. He still has his job. Click here for an interesting commentary.

The New York Times carried a mock editorial to introduce the spoof.

One Last Question: Who Did the Work?

By NICHOLAS WADE

In the wake of the two fraudulent articles on embryonic stem cells published in Science by the South Korean researcher Hwang Woo Suk, Donald Kennedy, the journal’s editor, said last week that he would consider adding new requirements that authors “detail their specific contributions to the research submitted,” and sign statements that they agree with the conclusions of their article. A statement of authors’ contributions has long been championed by Drummond Rennie, deputy editor of The Journal of the American Medical Association. Explicit statements about the conclusions could bring to light many reservations that individual authors would not otherwise think worth mentioning. The article shown [below] from a future issue of the Journal of imaginary Genomics, annotated in the manner required by Science’s proposed reforms, has been released ahead of its embargo date.

The old-fashioned typography makes it obvious that the spoof is intended to mock a paper in Science.

The problem with this spoof is its only too accurate description of what can happen at the worst end of science.

Something must be done if we are to justify the money we get and retain the confidence of the public.

My suggestions are as follows

- Nature, Science and Cell should become news magazines only. Their glamour value distorts science and encourages dishonesty.

- All print journals are outdated. We need cheap publishing on the web, with open access and post-publication peer review. The old publishers would go the same way as the handloom weavers. Their time has passed.

- Publish or perish has proved counterproductive. You’d get better science if you didn’t have any performance management at all. All that’s needed is peer review of grant applications.

- It’s better to have many small grants than fewer big ones. The ‘celebrity scientist’, running a huge group funded by many grants, has not worked well. It’s led to poor mentoring and exploitation of junior scientists.

- There is a good case for limiting the number of original papers that an individual can publish per year, and/or total grant funding. Fewer but more complete papers would benefit everyone.

- Everyone should read, learn and inwardly digest Peter Lawrence’s The Mismeasurement of Science.

Follow-up

3 January 2014.

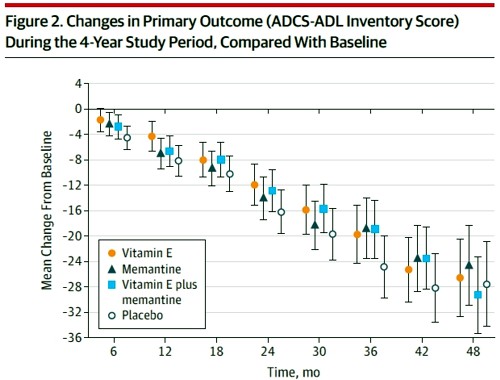

Yet another good example of hype was in the news: “Effect of Vitamin E and Memantine on Functional Decline in Alzheimer Disease”. It was published in the Journal of the American Medical Association. The study hit the newspapers on January 1st with headlines like Vitamin E may slow Alzheimer’s Disease (see the excellent analysis by Gary Schwitzer). The supplement industry was ecstatic. But the paper was behind a paywall. It’s unlikely that many of the tweeters (or journalists) had actually read it.

The trial was a well-designed randomised controlled trial that compared four treatments: placebo, vitamin E, memantine and Vitamin E + memantine.

Reading the paper gives a rather different impression from the press release. Look at the pre-specified primary outcome of the trial.

The primary outcome measure was

" . . the Alzheimer’s Disease Cooperative Study/Activities of Daily Living (ADCS-ADL) Inventory.12 The ADCS-ADL Inventory is designed to assess functional abilities to perform activities of daily living in Alzheimer patients with a broad range of dementia severity. The total score ranges from 0 to 78 with lower scores indicating worse function."

It looks as though any difference that might exist between the four treatments is trivial in size. In fact the mean difference between Vitamin E and placebo was only 3.15 (on a 78 point scale) with 95% confidence limits from 0.9 to 5.4. This gave a modest P = 0.03 (when properly corrected for multiple comparisons), a result that will impress only those people who regard P = 0.05 as a sort of magic number. The mean effect is so trivial in size that it doesn’t really matter whether the effect is real anyway.

It is not mentioned in the coverage that none of the four secondary outcomes achieved even a modest P = 0.05. There was no detectable effect of Vitamin E on

- Mean annual rate of cognitive decline (Alzheimer Disease Assessment Scale–Cognitive Subscale)

- Mean annual rate of cognitive decline (Mini-Mental State Examination)

- Mean annual rate of increased symptoms

- Mean annual rate of increased caregiver time

The only graph that appeared to show much effect was The Dependence Scale. This scale

“assesses 6 levels of functional dependence. Time to event is the time to loss of 1 dependence level (increase in dependence). We used an interval-censored model assuming a Weibull distribution because the time of the event was known only at the end of a discrete interval of time (every 6 months).”

It’s presented as a survival (Kaplan-Meier) plot. And it is this somewhat obscure secondary outcome that was used by the Journal of the American Medical Association for its publicity.

Note also that memantine + Vitamin E was indistinguishable from placebo. There are two ways to explain this: either Vitamin E has no effect, or memantine is an antagonist of Vitamin E. There are no data on the latter, but it’s certainly implausible.

The trial used a high dose of Vitamin E (2000 IU/day). No toxic effects of Vitamin E were reported, though a 2005 meta-analysis concluded that doses greater than 400 IU/d "may increase all-cause mortality and should be avoided".

In my opinion, the outcome of this trial should have been something like “Vitamin E has, at most, trivial effects on the progress of Alzheimer’s disease”.

Both the journal and the authors are guilty of disgraceful hype. This continual raising of false hopes does nothing to help patients. But it does damage the reputation of the journal and of the authors.


This paper constitutes yet another failure of altmetrics (see more examples on this blog). Not surprisingly, given the title, it was retweeted widely, but utterly uncritically. Bad science was promoted. And JAMA must take much of the blame for publishing it and promoting it.

The last few weeks have produced yet another example of how selective reporting can give a very misleading impression.

As usual, the reluctance of the media to report important negative results is, in part, to blame.

The B vitamins are a favourite of the fraudulent supplements industry. One of their pet propositions is that they will prevent dementia. The likes of Patrick Holford were, no doubt, delighted when a study from Oxford University, published on September 8th, seemed to confirm their ideas. The paper was Homocysteine-Lowering by B Vitamins Slows the Rate of Accelerated Brain Atrophy in Mild Cognitive Impairment: A Randomized Controlled Trial. (Smith AD, Smith SM, de Jager CA, Whitbread P, Johnston C, et al. (2010)).

The main problem with this paper was that it did not measure dementia at all, but a surrogate outcome, brain shrinkage. There are other problems too. They were quickly pointed out in blogs, particularly by the excellent Carl Heneghan of the Oxford Centre for Evidence Based Medicine, at Vitamin B and slowing the rate of Brain Atrophy: the numbers don’t add up. Some detailed comments on this post were posted at Evidence Matters, David Smith, B vitamins and Alzheimer’s Disease.

This paper was reported very widely indeed. A Google search for ‘Vitamin B Alzheimer’s Smith 2010’ gives over 90,000 hits at the time of posting. Most of those I’ve checked report this paper uncritically. The Daily Mail headline was 10p pill to beat Alzheimer’s disease: Vitamin B halts memory loss in breakthrough British trial, though in fairness to Fiona MacRae, she did include at the end

“The Alzheimer’s Society gave the research a cautious welcome. Professor Clive Ballard said: ‘This could change the lives of thousands of people at risk of dementia. However, previous studies looking at B vitamins have been very disappointing and we wouldn’t want to raise people’s expectations yet.’ “

That caution was justified because a mere two weeks later, on September 22nd, another paper appeared, in the journal Neurology. The paper is Vitamins B12, B6, and folic acid for cognition in older men, by Ford et al. It appears to contradict Smith et al. directly, but it didn’t measure the same thing. This one measured what actually matters.

“The primary outcome of interest was the change in the cognitive subscale of the Alzheimer’s Disease Assessment Scale (ADAS-cog). A secondary aim of the study was to determine if supplementation with vitamins decreased the risk of cognitive impairment and dementia over 8 years.”

The conclusion was negative.

“Conclusions: The daily supplementation of vitamins B12, B6, and folic acid does not benefit cognitive function in older men, nor does it reduce the risk of cognitive impairment or dementia.”

Disgracefully, this paper has hardly been reported at all.

It is an excellent example of how the public is misled because of the reluctance of the media to publish negative results. Sadly that reluctance is sometimes also shown by academic journals, but not in this case.

Two things went wrong. The first was near-universal failure to evaluate critically the Smith et al. paper. The second was to ignore the paper that measured what actually matters.

It isn’t as though there wasn’t a bit of relevant history. Prof Smith was one of the scientific advisors for Patrick Holford’s Food for the Brain survey. This survey was, quite rightly, criticised for being uninterpretable. When asked about this, Smith admitted as much, as recounted in Food for the Brain: Child Survey. A proper job?.

Plenty has been written about Patrick Holford, here and elsewhere. There is even a web site that is largely devoted to dispelling his myths, Holford Watch. He merited an entire chapter in Ben Goldacre’s book, Bad Science. He is an archetypal pill salesman and the sciencey talk seems to be largely used as a sales tool.

It might have been relevant too, to notice that the Smith et al. paper stated

Competing interests: Dr. A. D. Smith is named as an inventor on two patents held by the University of Oxford on the use of folic acid to treat Alzheimer’s disease (US6008221; US6127370); under the University’s rules he could benefit financially if the patent is exploited.

There is, of course, no reason to think that the interpretation of the data was influenced by the fact that the first author had a financial interest in the outcome. In fact university managers encourage that sort of thing strongly.

Personally, I’m more in sympathy with the view expressed by Strohman (1997)

“academic biologists and corporate researchers have become indistinguishable, and special awards are given for collaborations between these two sectors for behaviour that used to be cited as a conflict of interest”.

When it comes to vitamin pills, caveat emptor.