[This is an update of a 2006 post on my old blog]

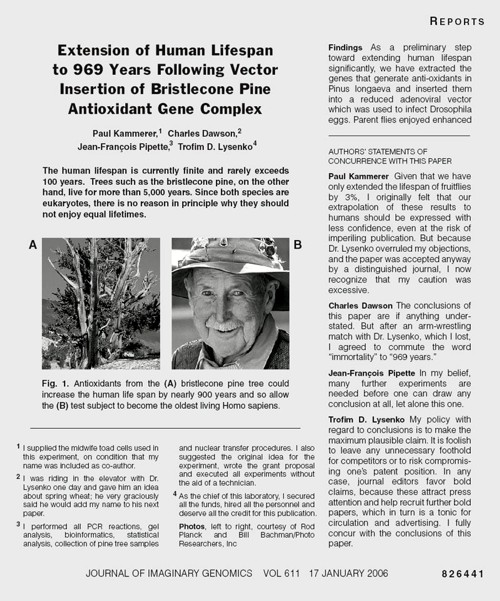

The New York Times (17 January 2006) published a beautiful spoof that illustrates only too clearly some of the bad practices that have developed in real science (as well as in quackery). It shows that competition, when taken to excess, leads to dishonesty.

More to the point, it shows that the public is well aware of the dishonesty that has resulted from the publish-or-perish culture, which has been inflicted on science by numbskull senior administrators (many of them scientists, or at least ex-scientists). Part of the blame must attach to "bibliometricians" who have armed administrators with simple-minded tools, the usefulness of which is entirely unverified. Bibliometricians are truly the quacks of academia. They care little about evidence as long as they can sell the product.

The spoof also illustrates the folly of allowing the hegemony of a handful of glamour journals to hold scientists in thrall. This self-inflicted wound adds to the pressure to produce trendy novelties rather than solid long-term work.

It also shows the only-too-frequent failure of peer review to detect problems.

The future lies in publication on the web, with post-publication peer review. It has been shown by sites like PubPeer that anonymous post-publication review can work very well indeed. This would be far cheaper, and a good deal better, than the present extortion practised on universities by publishers. All it needs is for a few more eminent people like mathematician Tim Gowers to speak out (see Elsevier – my part in its downfall).

Nobel prizewinner Randy Schekman has helped with his recent declaration that "his lab will no longer send papers to Nature, Cell and Science as they distort scientific process".

The spoof is based on the fraudulent papers by Korean cloner, Woo Suk Hwang, which were published in Science, in 2005. As well as the original fraud, this sad episode exposed the practice of ‘guest authorship’: putting your name on a paper when you have done little or no work, and cannot vouch for the results. The last (‘senior’) author on the 2005 paper was Gerald Schatten, Director of the Pittsburgh Development Center. It turns out that Schatten had not seen any of the original data and had contributed very little to the paper, beyond lobbying Science to accept it. A University of Pittsburgh panel declared Schatten guilty of “research misbehavior”, though he was, amazingly, exonerated of “research misconduct”. He still has his job. Click here for an interesting commentary.

The New York Times carried a mock editorial to introduce the spoof.

One Last Question: Who Did the Work?

By NICHOLAS WADE

In the wake of the two fraudulent articles on embryonic stem cells published in Science by the South Korean researcher Hwang Woo Suk, Donald Kennedy, the journal’s editor, said last week that he would consider adding new requirements that authors “detail their specific contributions to the research submitted,” and sign statements that they agree with the conclusions of their article.

A statement of authors’ contributions has long been championed by Drummond Rennie, deputy editor of The Journal of the American Medical Association. Explicit statements about the conclusions could bring to light many reservations that individual authors would not otherwise think worth mentioning.

The article shown [below] from a future issue of the Journal of imaginary Genomics, annotated in the manner required by Science’s proposed reforms, has been released ahead of its embargo date.

The old-fashioned typography makes it obvious that the spoof is intended to mock a paper in Science.

The problem with this spoof is its only too accurate description of what can happen at the worst end of science.

Something must be done if we are to justify the money we get and to retain the confidence of the public.

My suggestions are as follows.

- Nature, Science and Cell should become news magazines only. Their glamour value distorts science and encourages dishonesty.

- All print journals are outdated. We need cheap publishing on the web, with open access and post-publication peer review. The old publishers would go the same way as the handloom weavers. Their time has passed.

- Publish or perish has proved counterproductive. You’d get better science if you didn’t have any performance management at all. All that’s needed is peer review of grant applications.

- It’s better to have many small grants than fewer big ones. The ‘celebrity scientist’, running a huge group funded by many grants, has not worked well. It’s led to poor mentoring and exploitation of junior scientists.

- There is a good case for limiting the number of original papers that an individual can publish per year, and/or total grant funding. Fewer but more complete papers would benefit everyone.

- Everyone should read, learn and inwardly digest Peter Lawrence’s The Mismeasurement of Science.

Follow-up

3 January 2014.

Yet another good example of hype was in the news: “Effect of Vitamin E and Memantine on Functional Decline in Alzheimer Disease”. It was published in the Journal of the American Medical Association. The study hit the newspapers on January 1st with headlines like Vitamin E may slow Alzheimer’s Disease (see the excellent analysis by Gary Schwitzer). The supplement industry was ecstatic. But the paper was behind a paywall. It’s unlikely that many of the tweeters (or journalists) had actually read it.

The trial was a well-designed randomised controlled trial that compared four treatments: placebo, vitamin E, memantine and Vitamin E + memantine.

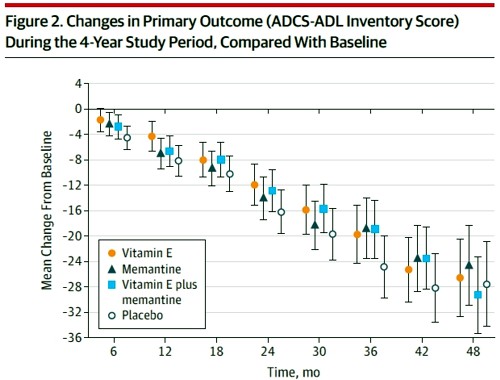

Reading the paper gives a rather different impression from the press release. Look at the pre-specified primary outcome of the trial.

The primary outcome measure was

" . . the Alzheimer’s Disease Cooperative Study/Activities of Daily Living (ADCSADL) Inventory.12 The ADCS-ADL Inventory is designed to assess functional abilities to perform activities of daily living in Alzheimer patients with a broad range of dementia severity. The total score ranges from 0 to 78 with lower scores indicating worse function."

It looks as though any difference that might exist between the four treatments is trivial in size. In fact the mean difference between Vitamin E and placebo was only 3.15 (on a 78 point scale) with 95% confidence limits from 0.9 to 5.4. This gave a modest P = 0.03 (when properly corrected for multiple comparisons), a result that will impress only those people who regard P = 0.05 as a sort of magic number. The mean effect is so trivial in size that it doesn’t really matter whether the effect is real anyway.

It is not mentioned in the coverage that none of the four secondary outcomes achieved even a modest P = 0.05. There was no detectable effect of Vitamin E on
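To put the size of that effect in perspective, here is a minimal sketch that expresses the quoted figures as a percentage of the full 78-point scale. The numbers are those quoted above; the function and constant names are my own, chosen only for illustration, and this is not a calculation taken from the paper.

```python
# Figures as quoted in the text above (ADCS-ADL Inventory, Vitamin E vs placebo).
SCALE_MAX = 78.0   # total score ranges from 0 to 78
MEAN_DIFF = 3.15   # mean difference, in scale points
CI_LOW = 0.9       # lower 95% confidence limit, in scale points
CI_HIGH = 5.4      # upper 95% confidence limit, in scale points


def percent_of_scale(points, scale_max=SCALE_MAX):
    """Express a difference in scale points as a percentage of the full scale."""
    return 100.0 * points / scale_max


# Hypothetical helper output: mean difference and its limits as percentages.
for label, value in [("mean difference", MEAN_DIFF),
                     ("lower limit", CI_LOW),
                     ("upper limit", CI_HIGH)]:
    print(f"{label}: {value} points = {percent_of_scale(value):.1f}% of the scale")
```

On these figures the mean difference is about 4% of the scale, and even the upper confidence limit is under 7% of it. Whether a change of that size matters to patients is a clinical judgement, not something the arithmetic can settle.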

- Mean annual rate of cognitive decline (Alzheimer Disease Assessment Scale–Cognitive Subscale)

- Mean annual rate of cognitive decline (Mini-Mental State Examination)

- Mean annual rate of increased symptoms

- Mean annual rate of increased caregiver time

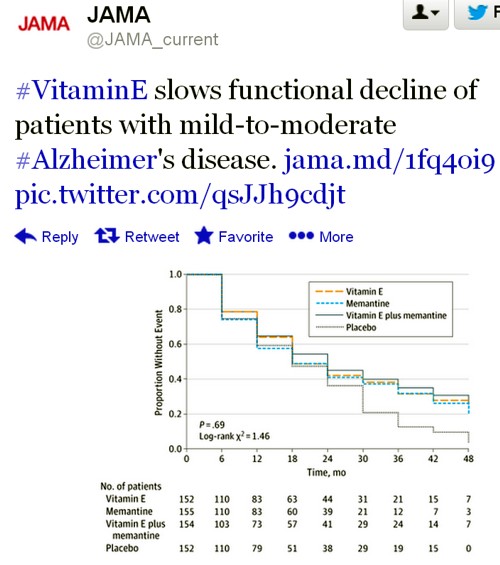

The only graph that appeared to show much effect was the Dependence Scale. This scale

“assesses 6 levels of functional dependence. Time to event is the time to loss of 1 dependence level (increase in dependence). We used an interval-censored model assuming a Weibull distribution because the time of the event was known only at the end of a discrete interval of time (every 6 months).”

It’s presented as a survival (Kaplan-Meier) plot. And it is this somewhat obscure secondary outcome that was used by the Journal of the American Medical Association for its publicity.

Note also that memantine + Vitamin E was indistinguishable from placebo. There are two ways to explain this: either Vitamin E has no effect, or memantine is an antagonist of Vitamin E. There are no data on the latter, but it’s certainly implausible.

The trial used a high dose of Vitamin E (2000 IU/day). No toxic effects of Vitamin E were reported, though a 2005 meta-analysis concluded that doses greater than 400 IU/d "may increase all-cause mortality and should be avoided".

In my opinion, the outcome of this trial should have been something like “Vitamin E has, at most, trivial effects on the progress of Alzheimer’s disease”.

Both the journal and the authors are guilty of disgraceful hype. This continual raising of false hopes does nothing to help patients. But it does damage the reputation of the journal and of the authors.

This paper constitutes yet another failure of altmetrics (see more examples on this blog). Not surprisingly, given the title, it was retweeted widely, but utterly uncritically. Bad science was promoted. And JAMA must take much of the blame for publishing it and promoting it.

David

Also, on the Kaplan-Meier plot, look at the subject drop-off. Started with about 150 in each group, then about half after one year, and by 3 years only 20 subjects left… not a great experimental design. How do they get data at month 48 in placebo with zero subjects?

@robbo

Thanks for that. You are right: the huge dropout rate is yet another reason why the study is unreliable. What was JAMA thinking about?

Here is another example of excessive hype: http://biofundamentalist.blogspot.com/2014/01/autism-mice-and-dangers-of-scientific.html

@Klymkowsky

Thanks for that link. This time it was Cell. With JAMA here, and the New England Journal of Medicine and Science in the next post, the roll call of misbehaving glamour journals is almost complete.

Just wondering, on the topic of Vitamin E: was a correction used to account for all the secondary aims not found to be significant? If a conservative measure like Bonferroni was used, then the .03, if replicable, still indicates a very small chance (less than 3%) that Vitamin E does not have at least some effect. So, assuming no other methodological limitations, the implications of this for treatment are large, because increasing Vitamin E treatment is likely to have no adverse side effects nor interfere with other treatment, making the small effect size partially irrelevant. Further, from my limited understanding of how vitamins work, the small effect may be due to the small percentage of the population suffering from deficiency. If so, it is important for people with the condition to consider Vitamin E just in case. Perhaps it is not ground-breaking, not as amazing as it is reported, but it still seems to me valuable research and at least in line with the reports, even if it is minor. A much better example of media misreporting is a recent paper on life after death, which investigated people’s perceptions during their time “officially dead” before being resuscitated medically. Obviously the colloquial use of the term “life after death” created a huge unneeded stir in people who skimmed the abstract, read the title, and went wild.

[…] in the sort of scientific malpractice that was recently pilloried viciously, but accurately, in the New York Times, and a further fall in the public’s trust in science. That trust is already disastrously low, and […]

[…] Another is scientists’ own vanity, which leads to the PR department issuing disgracefully hyped up press releases. […]