acupuncture

This piece is almost identical with today’s Spectator Health article.

This week there has been enormously wide coverage in the press for one of the worst papers on acupuncture that I’ve come across. As so often, the paper showed the opposite of what its title and press release claimed. (For another stunning example of this sleight of hand, try Acupuncturists show that acupuncture doesn’t work, but conclude the opposite: journal fails, published in the British Journal of General Practice.)

Presumably the wide coverage was a result of the hyped-up press release issued by the journal, BMJ Acupuncture in Medicine. That is not the British Medical Journal of course, but it is, bafflingly, published by the BMJ Press group, and if you subscribe to press releases from the real BMJ, you also get them from Acupuncture in Medicine. The BMJ group should not be mixing up press releases about real medicine with press releases about quackery. There seems to be something about quackery that’s clickbait for the mainstream media.

As so often, the press release was shockingly misleading. It said:

Acupuncture may alleviate babies’ excessive crying

Needling twice weekly for 2 weeks reduced crying time significantly

This is totally untrue. Here’s why.

|

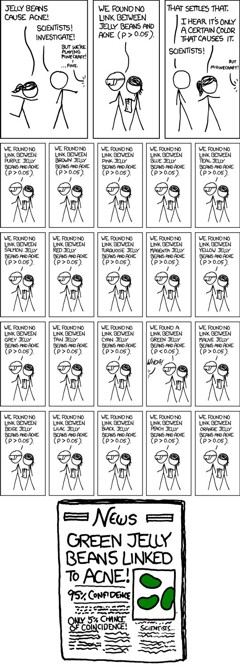

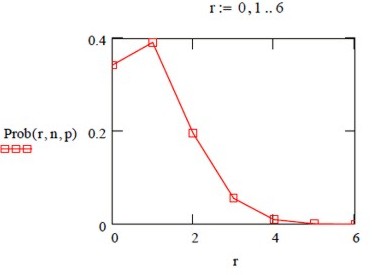

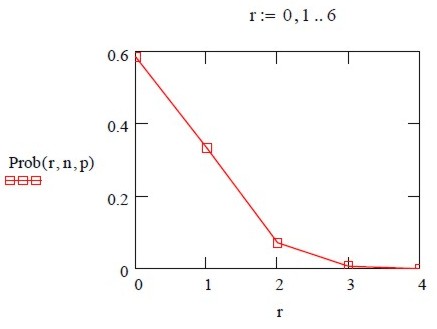

Luckily the Science Media Centre was on the case quickly: read their assessment. The paper made the most elementary of all statistical mistakes. It failed to make allowance for the jelly bean problem. The paper lists 24 different tests of statistical significance and focusses attention on three that happen to give a P value (just) less than 0.05, and so were declared to be "statistically significant". If you do enough tests, some are bound to come out “statistically significant” by chance. They are false positives, and the conclusions are as meaningless as “green jelly beans cause acne” in the cartoon. This is called P-hacking and it’s a well known cause of problems. It was evidently beyond the wit of the referees to notice this naive mistake. It’s very doubtful whether there is anything happening but random variability. And that’s before you even get to the problem of the weakness of the evidence provided by P values close to 0.05. There’s at least a 30% chance of such values being false positives, even if it were not for the jelly bean problem, and a lot more than 30% if the hypothesis being tested is implausible. I leave it to the reader to assess the plausibility of the hypothesis that a good way to stop a baby crying is to stick needles into the poor baby. If you want to know more about P values try YouTube or here, or here. |
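The arithmetic behind the jelly bean problem can be sketched in a few lines of Python. This is only an illustration: it assumes that the 24 tests are independent and that there is no real effect in any of them, and the function names are invented here, not taken from the paper.

```python
def familywise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Chance of at least one 'significant' result among n_tests
    independent tests when there is no real effect in any of them."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_threshold(n_tests: int, alpha: float = 0.05) -> float:
    """Per-test P value threshold that keeps the overall chance of a
    false positive at or below alpha (Bonferroni correction)."""
    return alpha / n_tests


if __name__ == "__main__":
    n = 24  # number of significance tests listed in the paper
    print(f"P(at least one false positive) = {familywise_error_rate(n):.2f}")
    print(f"Expected number of false positives = {n * 0.05:.1f}")
    print(f"Bonferroni threshold per test = {bonferroni_threshold(n):.4f}")
```

Under these assumptions the chance of at least one spurious "significant" result is about 70%, and a simple Bonferroni correction would demand P values of roughly 0.002, far below values that are (just) less than 0.05.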

One of the people asked for an opinion on the paper was George Lewith, the well-known apologist for all things quackish. He described the work as being a "good sized fastidious well conducted study ….. The outcome is clear", thus showing an ignorance of statistics that would shame an undergraduate.

On the Today Programme, I was interviewed by the formidable John Humphrys, along with the mandatory member of the flat-earth society whom the BBC seems to feel obliged to invite along for "balance". In this case it was the professional acupuncturist Mike Cummings, who is an associate editor of the journal in which the paper appeared. Perhaps he’d read the Science Media Centre’s assessment before he came on, because he said, quite rightly, that

"in technical terms the study is negative" "the primary outcome did not turn out to be statistically significant"

to which Humphrys retorted, reasonably enough, “So it doesn’t work”. Cummings’ response to this was a lot of bluster about how unfair it was for NICE to expect a treatment to perform better than placebo. It was fascinating to hear Cummings admit that the press release by his own journal was simply wrong.

Listen to the interview here

Another obvious flaw of the study is the nature of the control group. It is not stated very clearly but it seems that the baby was left alone with the acupuncturist for 10 minutes. A far better control would have been to have the baby cuddled by its mother, or by a nurse. That’s what was used by Olafsdottir et al (2001) in a study that showed cuddling worked just as well as another form of quackery, chiropractic, to stop babies crying.

Manufactured doubt is a potent weapon of the alternative medicine industry. It’s the same tactic as was used by the tobacco industry. You scrape together a few lousy papers like this one and use them to pretend that there’s a controversy. For years the tobacco industry used this tactic to try to persuade people that cigarettes didn’t give you cancer, and that nicotine wasn’t addictive. The mainstream media obligingly invite the representatives of the industry who convey to the reader/listener that there is a controversy, when there isn’t.

Acupuncture is no longer controversial. It just doesn’t work -see Acupuncture is a theatrical placebo: the end of a myth. Try to imagine a pill that had been subjected to well over 3000 trials without anyone producing convincing evidence for a clinically useful effect. It would have been abandoned years ago. But by manufacturing doubt, the acupuncture industry has managed to keep its product in the news. Every paper on the subject ends with the words "more research is needed". No it isn’t.

Acupuncture is a pre-scientific idea that was moribund everywhere, even in China, until it was revived by Mao Zedong as part of the appalling Great Proletarian Cultural Revolution. Now it is big business in China, and 100 percent of the clinical trials that come from China are positive.

If you believe them, you’ll truly believe anything.

Follow-up

29 January 2017

Soon after the Today programme in which we both appeared, the acupuncturist, Mike Cummings, posted his reaction to the programme. I thought it worth posting the original version in full. Its petulance and abusiveness are quite remarkable.

I thank Cummings for giving publicity to the video of our appearance, and for referring to my Wikipedia page. I leave it to the reader to judge my competence, and his, in the statistics of clinical trials. And it’s odd to be described as a "professional blogger" when the 400+ posts on dcscience.net don’t make a penny -in fact they cost me money. In contrast, he is the salaried medical director of the British Medical Acupuncture Society.

It’s very clear that he has no understanding of the error of the transposed conditional, nor even the multiple comparison problem (and neither, it seems, does he know the meaning of the word ‘protagonist’).

I ignored his piece, but several friends complained to the BMJ for allowing such abusive material on their blog site. As a result a few changes were made. The “baying mob” is still there, but the Wikipedia link has gone. I thought that readers might be interested to read the original unexpurgated version. It shows, better than I ever could, the weakness of the arguments of the alternative medicine community. To quote Upton Sinclair:

“It is difficult to get a man to understand something, when his salary depends upon his not understanding it.”

It also shows that the BBC still hasn’t learned the lessons in Steve Jones’ excellent “Review of impartiality and accuracy of the BBC’s coverage of science“. Every time I appear in such a programme, they feel obliged to invite a member of the flat earth society to propagate their make-believe.

Acupuncture for infantile colic – misdirection in the media or over-reaction from a sceptic blogger?

26 Jan, 17 | by Dr Mike Cummings

So there has been a big response to this paper press released by BMJ on behalf of the journal Acupuncture in Medicine. The response has been influenced by the usual characters – retired professors who are professional bloggers and vocal critics of anything in the realm of complementary medicine. They thrive on oiling up and flexing their EBM muscles for a baying mob of fellow sceptics (see my ‘stereotypical mental image’ here). Their target in this instant is a relatively small trial on acupuncture for infantile colic.[1] Deserving of being press released by virtue of being the largest to date in the field, but by no means because it gave a definitive answer to the question of the efficacy of acupuncture in the condition. We need to wait for an SR where the data from the 4 trials to date can be combined.

So what about the research itself? I have already said that the trial was not definitive, but it was not a bad trial. It suffered from under-recruiting, which meant that it was underpowered in terms of the statistical analysis. But it was prospectively registered, had ethical approval and the protocol was published. Primary and secondary outcomes were clearly defined, and the only change from the published protocol was to combine the two acupuncture groups in an attempt to improve the statistical power because of under recruitment. The fact that this decision was made after the trial had begun means that the results would have to be considered speculative. For this reason the editors of Acupuncture in Medicine insisted on alteration of the language in which the conclusions were framed to reflect this level of uncertainty.

DC has focussed on multiple statistical testing and p values. These are important considerations, and we could have insisted on more clarity in the paper. 
P values are a guide and the 0.05 level commonly adopted must be interpreted appropriately in the circumstances. In this paper there are no definitive conclusions, so the p values recorded are there to guide future hypothesis generation and trial design. There were over 50 p values reported in this paper, so by chance alone you must expect some to be below 0.05. If one is to claim statistical significance of an outcome at the 0.05 level, ie a 1:20 likelihood of the event happening by chance alone, you can only perform the test once. If you perform the test twice you must reduce the p value to 0.025 if you want to claim statistical significance of one or other of the tests. So now we must come to the predefined outcomes. They were clearly stated, and the results of these are the only ones relevant to the conclusions of the paper. The primary outcome was the relative reduction in total crying time (TC) at 2 weeks. There were two significance tests at this point for relative TC. For a statistically significant result, the p values would need to be less than or equal to 0.025 – neither was this low, hence my comment on the Radio 4 Today programme that this was technically a negative trial (more correctly ‘not a positive trial’ – it failed to disprove the null hypothesis ie that the samples were drawn from the same population and the acupuncture intervention did not change the population treated). Finally to the secondary outcome – this was the number of infants in each group who continued to fulfil the criteria for colic at the end of each intervention week. There were four tests of significance so we need to divide 0.05 by 4 to maintain the 1:20 chance of a random event ie only draw conclusions regarding statistical significance if any of the tests resulted in a p value at or below 0.0125. Two of the 4 tests were below this figure, so we say that the result is unlikely to have been chance alone in this case. 
With hindsight it might have been good to include this explanation in the paper itself, but as editors we must constantly balance how much we push authors to adjust their papers, and in this case the editor focussed on reducing the conclusions to being speculative rather than definitive. A significant result in a secondary outcome leads to a speculative conclusion that acupuncture ‘may’ be an effective treatment option… but further research will be needed etc… Now a final word on the 3000 plus acupuncture trials that DC loves to mention. His point is that there is no consistent evidence for acupuncture after over 3000 RCTs, so it clearly doesn’t work. He first quoted this figure in an editorial after discussing the largest, most statistically reliable meta-analysis to date – the Vickers et al IPDM.[2] DC admits that there is a small effect of acupuncture over sham, but follows the standard EBM mantra that it is too small to be clinically meaningful without ever considering the possibility that sham (gentle acupuncture plus context of acupuncture) can have clinically relevant effects when compared with conventional treatments. Perhaps now the best example of this is a network meta-analysis (NMA) using individual patient data (IPD), which clearly demonstrates benefits of sham acupuncture over usual care (a variety of best standard or usual care) in terms of health-related quality of life (HRQoL).[3] |

30 January 2017

I got an email from the BMJ asking me to take part in a BMJ Head-to-Head debate about acupuncture. I did one of these before, in 2007, but it generated more heat than light (the only good thing to come out of it was the joke about leprechauns). So here is my polite refusal.

|

Hello. Thanks for the invitation. Perhaps you should read the piece that I wrote after the Today programme. Why don’t you do these Head to Heads about genuine controversies? To do them about homeopathy or acupuncture is to fall for the “manufactured doubt” stratagem that was used so effectively by the tobacco industry to promote smoking. It’s the favourite tool of snake oil salesmen too, and the BMJ should see that and not fall for their tricks. Such pieces might be good clickbait, but they are bad medicine and bad ethics. All the best, David |

Of all types of alternative medicine, acupuncture is the one that has received the most approval from regular medicine. The benefit of that is that it’s been tested more thoroughly than most others. The result is now clear. It doesn’t work. See the evidence in Acupuncture is a theatrical placebo.

This blog has documented many cases of misreported tests of acupuncture, often from people who have a financial interest in selling it. Perhaps the most egregious spin came from the University of Exeter. It was published in a normal journal, and endorsed by the journal’s editor, despite showing clearly that acupuncture didn’t even have much placebo effect.

Acupuncture got a boost in 2009 from, of all unlikely sources, the National Institute for Health and Care Excellence (NICE). The judgements of NICE on the benefit/cost ratio of treatments are usually very good. But the guidance group that they assembled to judge treatments for low back pain was atypically incompetent when it came to assessment of evidence. They recommended acupuncture as one option. At the time I posted “NICE falls for Bait and Switch by acupuncturists and chiropractors: it has let down the public and itself”. That was soon followed by two more posts:

“NICE fiasco, part 2. Rawlins should withdraw guidance and start again”,

and

“The NICE fiasco, Part 3. Too many vested interests, not enough honesty”.

At the time, NICE was being run by Michael Rawlins, an old friend. No doubt he was unaware of the bad guidance until it was too late and he felt obliged to defend it.

Although the 2009 guidance referred only to low back pain, it gave an opening for acupuncturists to penetrate the NHS. Like all quacks, they are experts at bait and switch. The penetration of quackery was exacerbated by the privatisation of physiotherapy services to organisations like Connect Physical Health which have little regard for evidence, but a good eye for sales. If you think that’s an exaggeration, read "Connect Physical Health sells quackery to NHS".

When David Haslam took over the reins at NICE, I was optimistic that the question would be revisited (it turned out that he was aware of this blog). I was not disappointed. This time the guidance group had much more critical members.

The new draft guidance on low back pain was released on 24 March 2016. The final guidance will not appear until September 2016, but last time the final version didn’t differ much from the draft.

Despite modern imaging methods, it still isn’t possible to pinpoint the precise cause of low back pain (LBP) so diagnoses are lumped together as non-specific low back pain (NSLBP).

The summary guidance is explicit.

“1.2.8 Do not offer acupuncture for managing non-specific low back pain with or without sciatica.”

The evidence is summarised in section 13.6 of the main report (page 493). There is a long list of other proposed treatments that are not recommended.

Because low back pain is so common, and so difficult to treat, many treatments have been proposed. Many of them, including acupuncture, have proved to be clutching at straws. It’s to the great credit of the new guidance group that they have resisted that temptation.

Among the other "do not offer" treatments are

- imaging (except in specialist setting)

- belts or corsets

- foot orthotics

- acupuncture

- ultrasound

- TENS or PENS

- opioids (for acute or chronic LBP)

- antidepressants (SSRI and others)

- anticonvulsants

- spinal injections

- spinal fusion for NSLBP (except as part of a randomised controlled trial)

- disc replacement

At first sight, the new guidance looks like an excellent clear-out of the myths that surround the treatment of low back pain.

The positive recommendations that are made are all for things that have modest effects (at best). For example “Consider a group exercise programme”, and “Consider manipulation, mobilisation”. The use of the word “consider”, rather than “offer”, seems to be NICE-speak: an implicit suggestion that it doesn’t work very well. My only criticism of the report is that it doesn’t say sufficiently bluntly that non-specific low back pain is largely an unsolved problem. Most of what’s seen is probably a result of that most deceptive phenomenon, regression to the mean.

One pain specialist put it to me thus. “Think of the billions spent on back pain research over the years in order to reach the conclusion that nothing much works – shameful really.” Well perhaps not shameful: it isn’t for want of trying. It’s just a very difficult problem. But pretending that there are solutions doesn’t help anyone.

Follow-up

|

“Statistical regression to the mean predicts that patients selected for abnormalcy will, on the average, tend to improve. We argue that most improvements attributed to the placebo effect are actually instances of statistical regression.”

“Thus, we urge caution in interpreting patient improvements as causal effects of our actions and should avoid the conceit of assuming that our personal presence has strong healing powers.” |

In 1955, Henry Beecher published "The Powerful Placebo". I was in my second undergraduate year when it appeared, and for many decades after that I took it literally. He looked at 15 studies and found that an average of 35% of patients got "satisfactory relief" when given a placebo. This number got embedded in pharmacological folk-lore. He also mentioned that the relief provided by placebo was greatest in patients who were most ill.

Consider the common experiment in which a new treatment is compared with a placebo, in a double-blind randomised controlled trial (RCT). It’s common to call the responses measured in the placebo group the placebo response. But that is very misleading, and here’s why.

The responses seen in the group of patients that are treated with placebo arise from two quite different processes. One is the genuine psychosomatic placebo effect. This effect gives genuine (though small) benefit to the patient. The other contribution comes from the get-better-anyway effect. This is a statistical artefact and it provides no benefit whatsoever to patients. There is now increasing evidence that the latter effect is much bigger than the former.

How can you distinguish between real placebo effects and the get-better-anyway effect?

The only way to measure the size of genuine placebo effects is to compare in an RCT the effect of a dummy treatment with the effect of no treatment at all. Most trials don’t have a no-treatment arm, but enough do that estimates can be made. For example, a Cochrane review by Hróbjartsson & Gøtzsche (2010) looked at a wide variety of clinical conditions. Their conclusion was:

“We did not find that placebo interventions have important clinical effects in general. However, in certain settings placebo interventions can influence patient-reported outcomes, especially pain and nausea, though it is difficult to distinguish patient-reported effects of placebo from biased reporting.”

In some cases, the placebo effect is barely there at all. In a non-blind comparison of acupuncture and no acupuncture, the responses were essentially indistinguishable (despite what the authors and the journal said). See "Acupuncturists show that acupuncture doesn’t work, but conclude the opposite"

So the placebo effect, though a real phenomenon, seems to be quite small. In most cases it is so small that it would be barely perceptible to most patients. The main reason why so many people think that medicines work when they don’t is not the placebo response, but a statistical artefact.

Regression to the mean is a potent source of deception

The get-better-anyway effect has a technical name, regression to the mean. It has been understood since Francis Galton described it in 1886 (see Senn, 2011 for the history). It is a statistical phenomenon, and it can be treated mathematically (see references, below). But when you think about it, it’s simply common sense.

You tend to go for treatment when your condition is bad, and when you are at your worst, a bit later you’re likely to be better. The great biologist Peter Medawar comments thus.

|

"If a person is (a) poorly, (b) receives treatment intended to make him better, and (c) gets better, then no power of reasoning known to medical science can convince him that it may not have been the treatment that restored his health"

(Medawar, P.B. (1969:19). The Art of the Soluble: Creativity and originality in science. Penguin Books: Harmondsworth). |

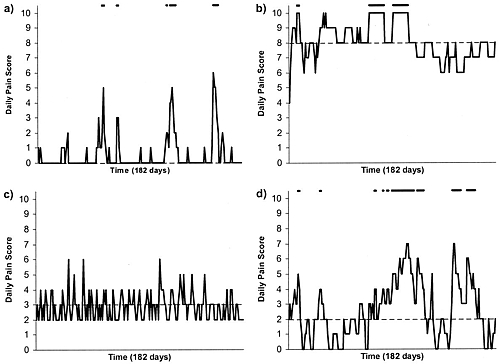

This is illustrated beautifully by measurements made by McGorry et al., (2000). Patients with low back pain recorded their pain (on a 10 point scale) every day for 5 months (they were allowed to take analgesics ad lib).

The results for four patients are shown in their Figure 2. On average they stay fairly constant over five months, but they fluctuate enormously, with different patterns for each patient. Painful episodes that last for 2 to 9 days are interspersed with periods of lower pain or none at all. It is very obvious that if these patients had gone for treatment at the peak of their pain, then a while later they would feel better, even if they were not actually treated. And if they had been treated, the treatment would have been declared a success, despite the fact that the patient derived no benefit whatsoever from it. This entirely artefactual benefit would be the biggest for the patients that fluctuate the most (e.g. those in panels a and d of the figure).

Figure 2 from McGorry et al, 2000. Examples of daily pain scores over a 6-month period for four participants. Note: Dashes of different lengths at the top of a figure designate an episode and its duration.

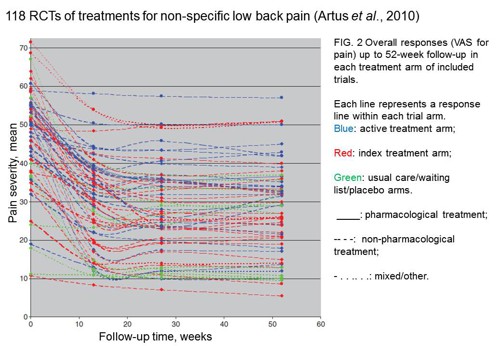

The effect is illustrated well by an analysis of 118 trials of treatments for non-specific low back pain (NSLBP), by Artus et al., (2010). The time course of pain (rated on a 100 point visual analogue pain scale) is shown in their Figure 2. There is a modest improvement in pain over a few weeks, but this happens regardless of what treatment is given, including no treatment whatsoever.

FIG. 2 Overall responses (VAS for pain) up to 52-week follow-up in each treatment arm of included trials. Each line represents a response line within each trial arm. Red: index treatment arm; Blue: active treatment arm; Green: usual care/waiting list/placebo arms. Solid line: pharmacological treatment; dashed line: non-pharmacological treatment; dotted line: mixed/other.

The authors comment

"symptoms seem to improve in a similar pattern in clinical trials following a wide variety of active as well as inactive treatments.", and "The common pattern of responses could, for a large part, be explained by the natural history of NSLBP".

In other words, none of the treatments work.

This paper was brought to my attention through the blog run by the excellent physiotherapist, Neil O’Connell. He comments

"If this finding is supported by future studies it might suggest that we can’t even claim victory through the non-specific effects of our interventions such as care, attention and placebo. People enrolled in trials for back pain may improve whatever you do. This is probably explained by the fact that patients enrol in a trial when their pain is at its worst which raises the murky spectre of regression to the mean and the beautiful phenomenon of natural recovery."

O’Connell has discussed the matter in a recent paper, O’Connell (2015), from the point of view of manipulative therapies. That’s an area where there has been resistance to doing proper RCTs, with many people saying that it’s better to look at “real world” outcomes. This usually means that you look at how a patient changes after treatment. The hazards of this procedure are obvious from Artus et al., Fig 2, above. It maximises the risk of being deceived by regression to the mean. As O’Connell commented

"Within-patient change in outcome might tell us how much an individual’s condition improved, but it does not tell us how much of this improvement was due to treatment."

In order to eliminate this effect it’s essential to do a proper RCT with control and treatment groups tested in parallel. When that’s done the control group shows the same regression to the mean as the treatment group, and any additional response in the latter can confidently be attributed to the treatment. Anything short of that is whistling in the wind.
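A small simulation makes the point. It is a sketch under invented assumptions: the pain scale, the enrolment threshold and the size of the day-to-day fluctuations are all hypothetical, and are not taken from any of the trials discussed above. Patients enrol only on a bad day, are randomised to two arms, and by default the treatment does nothing at all.

```python
import random
import statistics


def simulate_trial(n_screened=20000, threshold=7.0, true_effect=0.0, seed=1):
    """Mean improvement (baseline minus follow-up pain) in each arm.

    All numbers are illustrative assumptions, not data from any real trial.
    Scores are not clipped to a 0-10 range, to keep the sketch short.
    """
    rng = random.Random(seed)
    improvement = {"control": [], "treated": []}
    for _ in range(n_screened):
        usual = rng.gauss(5.0, 1.0)             # patient's long-run average pain
        baseline = usual + rng.gauss(0.0, 2.0)  # pain on the day they seek help
        if baseline < threshold:
            continue                            # only people having a bad day enrol
        arm = rng.choice(["control", "treated"])
        followup = usual + rng.gauss(0.0, 2.0)  # an ordinary day, some weeks later
        if arm == "treated":
            followup -= true_effect             # zero by default: a useless treatment
        improvement[arm].append(baseline - followup)
    return {arm: statistics.mean(values) for arm, values in improvement.items()}


if __name__ == "__main__":
    result = simulate_trial()
    for arm, mean_change in result.items():
        print(f"{arm:8s} mean improvement = {mean_change:.2f}")
    print(f"difference between arms = {result['treated'] - result['control']:.2f}")
```

Both arms "improve" substantially, purely because patients were selected when their pain was high. Only the difference between the arms says anything about the treatment, and with a useless treatment that difference hovers around zero.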

Needless to say, the suboptimal methods are most popular in areas where real effectiveness is small or non-existent. This, sad to say, includes low back pain. It also includes just about every treatment that comes under the heading of alternative medicine. Although these problems have been understood for over a century, it remains true that

|

"It is difficult to get a man to understand something, when his salary depends upon his not understanding it."

Upton Sinclair (1935) |

Responders and non-responders?

One excuse that’s commonly used when a treatment shows only a small effect in proper RCTs is to assert that the treatment actually has a good effect, but only in a subgroup of patients ("responders") while others don’t respond at all ("non-responders"). For example, this argument is often used in studies of anti-depressants and of manipulative therapies. And it’s universal in alternative medicine.

There’s a striking similarity between the narrative used by homeopaths and those who are struggling to treat depression. The pill may not work for many weeks. If the first sort of pill doesn’t work try another sort. You may get worse before you get better. One is reminded, inexorably, of Voltaire’s aphorism "The art of medicine consists in amusing the patient while nature cures the disease".

There is only a handful of cases in which a clear distinction can be made between responders and non-responders. Most often what’s observed is a smear of different responses to the same treatment -and the greater the variability, the greater is the chance of being deceived by regression to the mean.

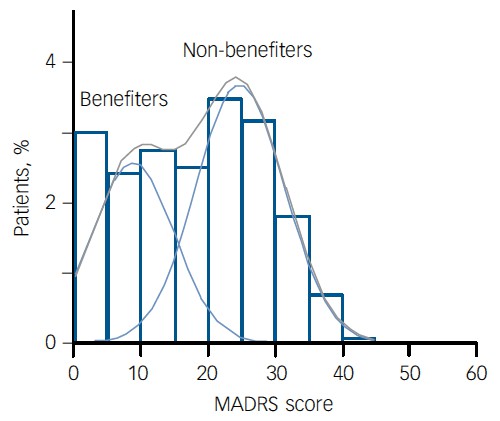

For example, Thase et al., (2011) looked at responses to escitalopram, an SSRI antidepressant. They attempted to divide patients into responders and non-responders. An example (Fig 1a in their paper) is shown.

The evidence for such a bimodal distribution is certainly very far from obvious. The observations are just smeared out. Nonetheless, the authors conclude

"Our findings indicate that what appears to be a modest effect in the grouped data – on the boundary of clinical significance, as suggested above – is actually a very large effect for a subset of patients who benefited more from escitalopram than from placebo treatment. "

I guess that interpretation could be right, but it seems more likely to be a marketing tool. Before you read the paper, check the authors’ conflicts of interest.

The bottom line is that analyses that divide patients into responders and non-responders are reliable only if that can be done before the trial starts. Retrospective analyses are unreliable and unconvincing.

Some more reading

Senn, 2011 provides an excellent introduction (and some interesting history). The subtitle is

"Here Stephen Senn examines one of Galton’s most important statistical legacies – one that is at once so trivial that it is blindingly obvious, and so deep that many scientists spend their whole career being fooled by it."

The examples in this paper are extended in Senn (2009), “Three things that every medical writer should know about statistics”. The three things are regression to the mean, the error of the transposed conditional and individual response.

You can read slightly more technical accounts of regression to the mean in McDonald & Mazzuca (1983) "How much of the placebo effect is statistical regression" (two quotations from this paper opened this post), and in Stephen Senn (2015) "Mastering variation: variance components and personalised medicine". In 1988 Senn published some corrections to the maths in McDonald (1983).

The trials that were used by Hróbjartsson & Gøtzsche (2010) to investigate the comparison between placebo and no treatment were looked at again by Howick et al., (2013), who found that in many of them the difference between treatment and placebo was also small. Most of the treatments did not work very well.

Regression to the mean is not just a medical deceiver: it’s everywhere

Although this post has concentrated on deception in medicine, it’s worth noting that the phenomenon of regression to the mean can cause wrong inferences in almost any area where you look at change from baseline. A classical example concerns the effectiveness of speed cameras. They tend to be installed after a spate of accidents, and if the accident rate is particularly high in one year it is likely to be lower the next year, regardless of whether a camera had been installed or not. To find the true reduction in accidents caused by installation of speed cameras, you would need to choose several similar sites and allocate them at random to have a camera or no camera. As in clinical trials, looking at the change from baseline can be very deceptive.

Statistical postscript

Lastly, remember that even if you avoid all of these hazards of interpretation, and your test of significance gives P = 0.047, that does not mean you have discovered something. There is still a risk of at least 30% that your ‘positive’ result is a false positive. This is explained in Colquhoun (2014), "An investigation of the false discovery rate and the misinterpretation of p-values". I’ve suggested that one way to solve this problem is to use different words to describe P values: something like this.

|

P > 0.05 very weak evidence

P = 0.05 weak evidence: worth another look

P = 0.01 moderate evidence for a real effect

P = 0.001 strong evidence for real effect |

But notice that if your hypothesis is implausible, even these criteria are too weak. For example, if the treatment and placebo are identical (as would be the case if the treatment were a homeopathic pill) then it follows that 100% of positive tests are false positives.
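The dependence on plausibility can be illustrated with the simplest version of the calculation, which treats the significance test like a screening test. This is only a sketch: the function name is made up, the prior probabilities and the power of 80% are illustrative assumptions, and it counts every result with P ≤ 0.05 rather than only those close to 0.047, so it is not the calculation behind the 30% figure quoted above.

```python
def false_discovery_rate(prior: float, power: float = 0.8, alpha: float = 0.05) -> float:
    """Fraction of 'significant' results that are false positives.

    prior: fraction of tested hypotheses for which there is a real effect
    power: chance of getting a significant result when the effect is real
    alpha: chance of getting a significant result when there is no effect
    """
    false_positives = alpha * (1.0 - prior)
    true_positives = power * prior
    return false_positives / (false_positives + true_positives)


if __name__ == "__main__":
    # 0.5: a coin-toss hypothesis; 0.1: an implausible one; 0.0: an impossible one
    for prior in (0.5, 0.1, 0.0):
        rate = false_discovery_rate(prior)
        print(f"prior = {prior:.1f}: false discovery rate = {rate:.0%}")
```

With these assumed numbers, a prior of 0.1 gives a false discovery rate of 36%, and a prior of zero (treatment identical to placebo) gives 100%: every "positive" result is a false positive.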

Follow-up

12 December 2015

It’s worth mentioning that the question of responders versus non-responders is closely related to the classical topic of bioassays that use quantal responses. In that field it was assumed that each participant had an individual effective dose (IED). That’s reasonable for the old-fashioned LD50 toxicity test: every animal will die after a sufficiently big dose. It’s less obviously right for ED50 (effective dose in 50% of individuals). The distribution of IEDs is critical, but it has very rarely been determined. The cumulative form of this distribution is what determines the shape of the dose-response curve for fraction of responders as a function of dose. Linearisation of this curve, by means of the probit transformation, used to be a staple of biological assay. This topic is discussed in Chapter 10 of Lectures on Biostatistics. And you can read some of the history on my blog about Some pharmacological history: an exam from 1959.
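How the distribution of IEDs fixes the dose-response curve, and how the probit transformation straightens it, can be sketched as follows. The sketch assumes that the logarithms of the IEDs are normally distributed; that is an assumption made here for illustration (as noted above, the real distribution has rarely been determined), and the median IED and spread are hypothetical numbers.

```python
from math import log10
from statistics import NormalDist


def fraction_responding(dose: float, median_ied: float = 10.0, sd_log10: float = 0.3) -> float:
    """Fraction of individuals whose IED is at or below the given dose,
    assuming log10(IED) is normally distributed (an illustrative assumption)."""
    return NormalDist(mu=log10(median_ied), sigma=sd_log10).cdf(log10(dose))


def probit(fraction: float) -> float:
    """Inverse of the standard normal cumulative distribution function."""
    return NormalDist().inv_cdf(fraction)


if __name__ == "__main__":
    for dose in (2.5, 5.0, 10.0, 20.0, 40.0):
        f = fraction_responding(dose)
        print(f"dose {dose:5.1f}  log10(dose) {log10(dose):5.2f}  "
              f"fraction responding {f:.3f}  probit {probit(f):6.2f}")
```

The fraction responding follows a sigmoid curve against log dose, while the probit values rise by equal steps for each doubling of the dose: a straight line. The line is straight only if the assumed distribution of IEDs is right.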

This discussion seemed to be of sufficient general interest that we submitted it as a feature to eLife, because this journal is one of the best steps into the future of scientific publishing. Sadly the features editor thought that "too much of the article is taken up with detailed criticisms of research papers from NEJM and Science that appeared in the altmetrics top 100 for 2013; while many of these criticisms seems valid, the Features section of eLife is not the venue where they should be published". That’s pretty typical of what most journals would say. It is that sort of attitude that stifles criticism, and that is part of the problem. We should be encouraging post-publication peer review, not suppressing it. Luckily, thanks to the web, we are now much less constrained by journal editors than we used to be.

Here it is.

Scientists don’t count: why you should ignore altmetrics and other bibliometric nightmares

David Colquhoun1 and Andrew Plested2

1 University College London, Gower Street, London WC1E 6BT

2 Leibniz-Institut für Molekulare Pharmakologie (FMP) & Cluster of Excellence NeuroCure, Charité Universitätsmedizin, Timoféeff-Ressowsky-Haus, Robert-Rössle-Str. 10, 13125 Berlin, Germany.

Jeffrey Beall is librarian at Auraria Library, University of Colorado Denver. Although not a scientist himself, he, more than anyone, has done science a great service by listing the predatory journals that have sprung up in the wake of pressure for open access. In August 2012 he published “Article-Level Metrics: An Ill-Conceived and Meretricious Idea”. At first reading that criticism seemed a bit strong. On mature consideration, it understates the potential that bibliometrics, altmetrics especially, have to undermine both science and scientists.

Altmetrics is the latest buzzword in the vocabulary of bibliometricians. It attempts to measure the “impact” of a piece of research by counting the number of times that it’s mentioned in tweets, Facebook pages, blogs, YouTube and news media. That sounds childish, and it is. Twitter is an excellent tool for journalism. It’s good for debunking bad science, and for spreading links, but too brief for serious discussions. It’s rarely useful for real science.

Surveys suggest that only a small minority of scientists use Twitter (7 to 13%). Scientific works get tweeted about mostly because they have titles that contain buzzwords, not because they represent great science.

What and who is Altmetrics for?

The aims of altmetrics are ambiguous to the point of dishonesty; they depend on whether the salesperson is talking to a scientist or to a potential buyer of their wares.

At a meeting in London, an employee of altmetric.com said “we measure online attention surrounding journal articles” “we are not measuring quality …” “this whole altmetrics data service was born as a service for publishers”, “it doesn’t matter if you got 1000 tweets . . .all you need is one blog post that indicates that someone got some value from that paper”.

These ideas sound fairly harmless, but in stark contrast, Jason Priem (an author of the altmetrics manifesto) said one advantage of altmetrics is that it’s fast “Speed: months or weeks, not years: faster evaluations for tenure/hiring”. Although conceivably useful for disseminating preliminary results, such speed isn’t important for serious science (the kind that ought to be considered for tenure) which operates on the timescale of years. Priem also says “researchers must ask if altmetrics really reflect impact”. Even he doesn’t know, yet altmetrics services are being sold to universities, before any evaluation of their usefulness has been done, and universities are buying them. The idea that altmetrics scores could be used for hiring is nothing short of terrifying.

The problem with bibliometrics

The mistake made by all bibliometricians is that they fail to consider the content of papers, because they have no desire to understand research. Bibliometrics are for people who aren’t prepared to take the time (or lack the mental capacity) to evaluate research by reading about it, or in the case of software or databases, by using them. The use of surrogate outcomes in clinical trials is rightly condemned. Bibliometrics are all about surrogate outcomes.

If instead we consider the work described in particular papers that most people agree to be important (or that everyone agrees to be bad), it’s immediately obvious that no publication metrics can measure quality. There are some examples in How to get good science (Colquhoun, 2007). It is shown there that at least one Nobel prize winner failed dismally to fulfil arbitrary bibliometric productivity criteria of the sort imposed in some universities (another example is in Is Queen Mary University of London trying to commit scientific suicide?).

Schekman (2013) has said that science

“is disfigured by inappropriate incentives. The prevailing structures of personal reputation and career advancement mean the biggest rewards often follow the flashiest work, not the best.”

Bibliometrics reinforce those inappropriate incentives. A few examples will show that altmetrics are one of the silliest metrics so far proposed.

The altmetrics top 100 for 2013

The superficiality of altmetrics is demonstrated beautifully by the list of the 100 papers with the highest altmetric scores in 2013. For a start, 58 of the 100 were behind paywalls, and so unlikely to have been read except (perhaps) by academics.

The second most popular paper (with the enormous altmetric score of 2230) was published in the New England Journal of Medicine. The title was Primary Prevention of Cardiovascular Disease with a Mediterranean Diet. It was promoted (inaccurately) by the journal with the following tweet:

Many of the 2092 tweets related to this article simply gave the title, but inevitably the theme appealed to diet faddists, with plenty of tweets like the following:

The interpretations of the paper promoted by these tweets were mostly desperately inaccurate. Diet studies are anyway notoriously unreliable. As John Ioannidis has said

"Almost every single nutrient imaginable has peer reviewed publications associating it with almost any outcome."

This sad situation comes about partly because most of the data comes from non-randomised cohort studies that tell you nothing about causality, and also because the effects of diet on health seem to be quite small.

The study in question was a randomized controlled trial, so it should be free of the problems of cohort studies. But very few tweeters showed any sign of having read the paper. When you read it you find that the story isn’t so simple. Many of the problems are pointed out in the online comments that follow the paper. Post-publication peer review really can work, but you have to read the paper. The conclusions are pretty conclusively demolished in the comments, such as:

“I’m surrounded by olive groves here in Australia and love the hand-pressed EVOO [extra virgin olive oil], which I can buy at a local produce market BUT this study shows that I won’t live a minute longer, and it won’t prevent a heart attack.”

We found no tweets that mentioned the finding from the paper that the diets had no detectable effect on myocardial infarction, death from cardiovascular causes, or death from any cause. The only difference was in the number of people who had strokes, and that showed a very unimpressive P = 0.04.

Neither did we see any tweets that mentioned the truly impressive list of conflicts of interest of the authors, which ran to an astonishing 419 words.

“Dr. Estruch reports serving on the board of and receiving lecture fees from the Research Foundation on Wine and Nutrition (FIVIN); serving on the boards of the Beer and Health Foundation and the European Foundation for Alcohol Research (ERAB); receiving lecture fees from Cerveceros de España and Sanofi-Aventis; and receiving grant support through his institution from Novartis. Dr. Ros reports serving on the board of and receiving travel support, as well as grant support through his institution, from the California Walnut Commission; serving on the board of the Flora Foundation (Unilever). . . “

And so on, for another 328 words.

The interesting question is how such a paper came to be published in the hugely prestigious New England Journal of Medicine. That it happened is yet another reason to distrust impact factors. It seems to be another sign that glamour journals are more concerned with trendiness than quality.

One sign of that is the fact that the journal’s own tweet misrepresented the work. The irresponsible spin in this initial tweet from the journal started the ball rolling, and after this point, the content of the paper itself became irrelevant. The altmetrics score is utterly disconnected from the science reported in the paper: it more closely reflects wishful thinking and confirmation bias.

The fourth paper in the altmetrics top 100 is an equally instructive example.

|

This work was also published in a glamour journal, Science. The paper claimed that a function of sleep was to “clear metabolic waste from the brain”. It was initially promoted (inaccurately) on Twitter by the publisher of Science. After that, the paper was retweeted many times, presumably because everybody sleeps, and perhaps because the title hinted at the trendy, but fraudulent, idea of “detox”. Many tweets were variants of “The garbage truck that clears metabolic waste from the brain works best when you’re asleep”. |

But this paper was hidden behind Science’s paywall. It’s bordering on irresponsible for journals to promote on social media papers that can’t be read freely. It’s unlikely that anyone outside academia had read it, and therefore few of the tweeters had any idea of the actual content, or the way the research was done. Nevertheless it got “1,479 tweets from 1,355 accounts with an upper bound of 1,110,974 combined followers”. It had the huge Altmetrics score of 1848, the highest altmetric score in October 2013.

Within a couple of days, the story fell out of the news cycle. It was not a bad paper, but neither was it a huge breakthrough. It didn’t show that naturally-produced metabolites were cleared more quickly, just that injected substances were cleared faster when the mice were asleep or anaesthetised. This finding might or might not have physiological consequences for mice.

Worse, the paper also claimed that “Administration of adrenergic antagonists induced an increase in CSF tracer influx, resulting in rates of CSF tracer influx that were more comparable with influx observed during sleep or anesthesia than in the awake state”. Simply put, giving awake mice these drugs raised the tracer influx to something like the levels seen during sleep. But nobody seemed to notice the absurd concentrations of antagonists that were used in these experiments: “adrenergic receptor antagonists (prazosin, atipamezole, and propranolol, each 2 mM) were then slowly infused via the cisterna magna cannula for 15 min”. The binding constant (concentration to occupy half the receptors) for prazosin is less than 1 nM, so infusing 2 mM is working at more than a million times the concentration that should be effective. That’s asking for non-specific effects. Most drugs at this sort of concentration have local anaesthetic effects, so perhaps it isn’t surprising that the effects resembled those of ketamine.
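The arithmetic behind the "million times" remark is worth writing out. The sketch below takes the binding constant for prazosin as exactly 1 nM (the text says only that it is less than that, so the ratio is a lower bound) and uses the simple Hill-Langmuir expression for equilibrium occupancy at a single class of sites. The concentration that actually reaches the receptors after dilution in CSF is not known from the paper's description, so the infused concentration is used as a stand-in.

```python
def occupancy(conc_molar, k_molar):
    """Hill-Langmuir equilibrium occupancy for simple one-site binding."""
    return conc_molar / (conc_molar + k_molar)

K_PRAZOSIN = 1e-9   # 1 nM, taken as an upper bound on the binding constant
INFUSED = 2e-3      # 2 mM, the concentration quoted from the paper

ratio = INFUSED / K_PRAZOSIN
print(f"infused concentration / binding constant = {ratio:.1e}")
print(f"occupancy at 1 nM: {occupancy(1e-9, K_PRAZOSIN):.3f}")
print(f"occupancy at 1 uM: {occupancy(1e-6, K_PRAZOSIN):.4f}")
print(f"occupancy at 2 mM: {occupancy(INFUSED, K_PRAZOSIN):.7f}")
```

On these assumptions the specific receptors are already about 99.9% occupied at 1 µM. The extra factor of two thousand up to 2 mM can add almost nothing to specific binding, and what remains is scope for non-specific effects.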

The altmetrics editor hadn’t noticed the problems and none of them featured in the online buzz. That’s partly because to find them you had to read the paper (the antagonist concentrations were hidden in the legend of Figure 4), and partly because you needed to know the binding constant for prazosin to see this warning sign.

The lesson, as usual, is that if you want to know about the quality of a paper, you have to read it. Commenting on a paper without knowing anything of its content is liable to make you look like a jackass.

A tale of two papers

Another approach that looks at individual papers is to compare some of one’s own papers. Sadly, UCL shows altmetric scores on each of your own papers. Mostly they are question marks, because nothing published before 2011 is scored. But two recent papers make an interesting contrast. One is from DC’s side interest in quackery, one was real science. The former has an altmetric score of 169, the latter has an altmetric score of 2.

|

The first paper was “Acupuncture is a theatrical placebo”, which was published as an invited editorial in Anesthesia and Analgesia [download pdf]. The paper was scientifically trivial. It took perhaps a week to write. Nevertheless, it got promoted on twitter, because anything to do with alternative medicine is interesting to the public. It got quite a lot of retweets. And the resulting altmetric score of 169 put it in the top 1% of all articles altmetric have tracked, and the second highest ever for Anesthesia and Analgesia. As well as the journal’s own website, the article was also posted on the DCScience.net blog (May 30, 2013) where it soon became the most viewed page ever (24,468 views as of 23 November 2013), something that altmetrics does not seem to take into account. |

|

Compare this with the fate of some real, but rather technical, science.

|

My [DC] best scientific papers are too old (i.e. before 2011) to have an altmetrics score, but my best score for any scientific paper is 2. This score was for Colquhoun & Lape (2012) “Allosteric coupling in ligand-gated ion channels”. It was a commentary with some original material. The altmetric score was based on two tweets and 15 readers on Mendeley. The two tweets consisted of one from me (“Real science; The meaning of allosteric conformation changes http://t.co/zZeNtLdU ”) and an abusive one from a cyberstalker who was upset at having been refused a job years ago. Incredibly, this modest achievement got it rated “Good compared to other articles of the same age (71st percentile)”. |

|

Conclusions about bibliometrics

Bibliometricians spend much time correlating one surrogate outcome with another, from which they learn little. What they don’t do is take the time to examine individual papers. Doing that makes it obvious that most metrics, and especially altmetrics, are indeed an ill-conceived and meretricious idea. Universities should know better than to subscribe to them.

Although altmetrics may be the silliest bibliometric idea yet, much of this criticism applies equally to all such metrics. Even the most plausible metric, counting citations, is easily shown to be nonsense by simply considering individual papers. All you have to do is choose some papers that are universally agreed to be good, and some that are bad, and see how metrics fail to distinguish between them. This is something that bibliometricians fail to do (perhaps because they don’t know enough science to tell which is which). Some examples are given by Colquhoun (2007) (more complete version at dcscience.net).

Eugene Garfield, who started the metrics mania with the journal impact factor (JIF), was clear that it was not suitable as a measure of the worth of individuals. He has been ignored and the JIF has come to dominate the lives of researchers, despite decades of evidence of the harm it does (e.g. Seglen (1997) and Colquhoun (2003)). In the wake of JIF, young, bright people have been encouraged to develop yet more spurious metrics (of which ‘altmetrics’ is the latest). It doesn’t matter much whether these metrics are based on nonsense (like counting hashtags) or rely on counting links or comments on a journal website. They won’t (and can’t) indicate what is important about a piece of research: its quality.

People say – I can’t be a polymath. Well, then don’t try to be. You don’t have to have an opinion on things that you don’t understand. The number of people who really do have to have an overview, of the kind that altmetrics might purport to give, those who have to make funding decisions about work that they are not intimately familiar with, is quite small. Chances are, you are not one of them. We review plenty of papers and grants. But it’s not credible to accept assignments outside of your field, and then rely on metrics to assess the quality of the scientific work or the proposal.

It’s perfectly reasonable to give credit for all forms of research outputs, not only papers. That doesn’t need metrics. It’s nonsense to suggest that altmetrics are needed because research outputs are not already valued in grant and job applications. If you write a grant for almost any agency, you can put your CV. If you have a non-publication based output, you can always include it. Metrics are not needed. If you write software, get the numbers of downloads. Software normally garners citations anyway if it’s of any use to the greater community.

When AP recently wrote a criticism of Heather Piwowar’s altmetrics note in Nature, one correspondent wrote: "I haven’t read the piece [by HP] but I’m sure you are mischaracterising it". This attitude summarizes the too-long-didn’t-read (TLDR) culture that is increasingly becoming accepted amongst scientists, and which the comparisons above show is a central component of altmetrics.

Altmetrics are numbers generated by people who don’t understand research, for people who don’t understand research. People who read papers and understand research just don’t need them and should shun them.

But all bibliometrics give cause for concern, beyond their lack of utility. They do active harm to science. They encourage “gaming” (a euphemism for cheating). They encourage short-term eye-catching research of questionable quality and reproducibility. They encourage guest authorships: that is, they encourage people to claim credit for work which isn’t theirs. At worst, they encourage fraud.

No doubt metrics have played some part in the crisis of irreproducibility that has engulfed some fields, particularly experimental psychology, genomics and cancer research. Underpowered studies with a high false-positive rate may get you promoted, but tend to mislead both other scientists and the public (who in general pay for the work). The waste of public money that must result from following up badly done work that can’t be reproduced but that was published for the sake of “getting something out” has not been quantified, but must be considered to the detriment of bibliometrics, and sadly overcomes any advantages from rapid dissemination. Yet universities continue to pay publishers to provide these measures, which do nothing but harm. And the general public has noticed.

It’s now eight years since the New York Times brought to the attention of the public that some scientists engage in puffery, cheating and even fraud.

Overblown press releases written by journals, with the connivance of university PR wonks and of the authors, sometimes go viral on social media (and so score well on altmetrics). Yet another example, from the Journal of the American Medical Association, involved an overblown press release from the journal about a trial that allegedly showed a benefit of high doses of Vitamin E for Alzheimer’s disease.

This sort of puffery harms patients and harms science itself.

We can’t go on like this.

What should be done?

Post publication peer review is now happening, in comments on published papers and through sites like PubPeer, where it is already clear that anonymous peer review can work really well. New journals like eLife have open comments after each paper, though authors do not seem to have yet got into the habit of using them constructively. They will.

It’s very obvious that too many papers are being published, and that anything, however bad, can be published in a journal that claims to be peer reviewed. To a large extent this is just another example of the harm done to science by metrics: the publish or perish culture.

Attempts to regulate science by setting “productivity targets” are doomed to do as much harm to science as they have in the National Health Service in the UK. This has been known to economists for a long time, under the name of Goodhart’s law.

Here are some ideas about how we could restore the confidence of both scientists and of the public in the integrity of published work.

- Nature, Science, and other vanity journals should become news magazines only. Their glamour value distorts science and encourages dishonesty.

- Print journals are overpriced and outdated. They are no longer needed. Publishing on the web is cheap, and it allows open access and post-publication peer review. Every paper should be followed by an open comments section, with anonymity allowed. The old publishers should go the same way as the handloom weavers. Their time has passed.

- Web publication allows proper explanation of methods, without the page, word and figure limits that distort papers in vanity journals. This would also make it very easy to publish negative work, thus reducing publication bias, a major problem (not least for clinical trials).

- Publish or perish has proved counterproductive. It seems just as likely that better science will result without any performance management at all. All that’s needed is peer review of grant applications.

- Providing more small grants rather than fewer big ones should help to reduce the pressure to publish which distorts the literature. The ‘celebrity scientist’, running a huge group funded by giant grants, has not worked well. It’s led to poor mentoring, and, at worst, fraud. Of course huge groups sometimes produce good work, but too often at the price of exploitation of junior scientists.

- There is a good case for limiting the number of original papers that an individual can publish per year, and/or total funding. Fewer but more complete and considered papers would benefit everyone, and counteract the flood of literature that has led to superficiality.

- Everyone should read, learn and inwardly digest Peter Lawrence’s The Mismeasurement of Science.

A focus on speed and brevity (cited as major advantages of altmetrics) will help no-one in the end. And a focus on creating and curating new metrics will simply skew science in yet another unsatisfactory way, and rob scientists of the time they need to do their real job: generate new knowledge.

It has been said

“Creation is sloppy; discovery is messy; exploration is dangerous. What’s a manager to do?

The answer in general is to encourage curiosity and accept failure. Lots of failure.”

And, one might add, forget metrics. All of them.

Follow-up

17 Jan 2014

This piece was noticed by the Economist. Their ‘Writing worth reading‘ section said

"Why you should ignore altmetrics (David Colquhoun) Altmetrics attempt to rank scientific papers by their popularity on social media. David Colquohoun [sic] argues that they are “for people who aren’t prepared to take the time (or lack the mental capacity) to evaluate research by reading about it.”"

20 January 2014.

Jason Priem, of ImpactStory, has responded to this article on his own blog. In Altmetrics: A Bibliographic Nightmare? he seems to back off a lot from his earlier claim (cited above) that altmetrics are useful for making decisions about hiring or tenure. Our response is on his blog.

23 January 2014

The Scholarly Kitchen blog carried another paean to metrics. A vigorous discussion followed. The general line that I’ve followed in this discussion, and those mentioned below, is that bibliometricians won’t qualify as scientists until they test their methods, i.e. show that they predict something useful. In order to do that, they’ll have to consider individual papers (as we do above). At present, articles by bibliometricians consist largely of hubris, with little emphasis on the potential to cause corruption. They remind me of articles by homeopaths: their aim is to sell a product (sometimes for cash, but mainly to promote the authors’ usefulness).

It’s noticeable that all of the pro-metrics articles cited here have been written by bibliometricians. None have been written by scientists.

28 January 2014.

Dalmeet Singh Chawla, a bibliometrician from Imperial College London, wrote a blog on the topic. (Imperial, at least in its Medicine department, is notorious for abuse of metrics.)

29 January 2014 Arran Frood wrote a sensible article about the metrics row in Euroscientist.

2 February 2014 Paul Groth (a co-author of the Altmetrics Manifesto) posted more hubristic stuff about altmetrics on Slideshare. A vigorous discussion followed.

5 May 2014. Another vigorous discussion on ImpactStory blog, this time with Stacy Konkiel. She’s another non-scientist trying to tell scientists what to do. The evidence that she produced for the usefulness of altmetrics seemed pathetic to me.

7 May 2014 A much-shortened version of this post appeared in the British Medical Journal (BMJ blogs)

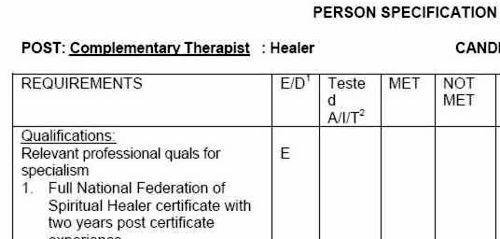

A constant theme of this blog is that the NHS should not pay for useless treatments. By and large, NICE does a good job of preventing that. But NICE has not been allowed by the Department of Health to look at quackery.

I have the impression that privatisation of many NHS services will lead to an increase in the provision of myth-based therapies. That is part of the "bait and switch" tactic that quacks use in the hope of gaining respectability. A prime example is the "College of Medicine", financed by Capita and replete with quacks, as one would expect since it is the reincarnation of the Prince’s Foundation for Integrated Health.

One such treatment is acupuncture. Having very recently reviewed the evidence, we concluded that "Acupuncture is a theatrical placebo: the end of a myth". Any effects it may have are too small to be useful to patients. That’s the background for an interesting case study.

A colleague got a very painful frozen shoulder. His GP referred him to the Camden & Islington NHS Trust physiotherapy service. That service is now provided by a private company, Connect Physical Health.

That proved to be a big mistake. The first two appointments were not too bad, though they resulted in little improvement. But at the third appointment he was offered acupuncture. He hesitated, but agreed, in desperation, to try it. It did no good. At the next appointment the condition was worse. After some very painful manipulation, the physiotherapist offered acupuncture again. This time he refused on the grounds that "I hadn’t noticed any effect the first time, because there is no evidence that it works and that I was concerned by her standards of hygiene". The physiotherapist then became "quite rude" and said that she would put down that the patient had refused treatment.

The lack of response was hardly surprising. NHS Evidence says

"There is no clinical evidence to show that other treatments, such as transcutaneous electrical nerve stimulation (TENS), Shiatsu massage or acupuncture are effective in treating frozen shoulder."

In fact it now seems beyond reasonable doubt that acupuncture is no more than a theatrical placebo.

According to Connect’s own web site “Our services are evidence-based”. That is evidently untrue in this case, so I asked them for the evidence that acupuncture was effective.

I’d noticed that in other places, Connect Physical Health also offers the obviously fraudulent craniosacral therapy (for example, here) and discredited chiropractic quackery. So I asked them about the evidence for their effectiveness too.

This is what they said.

|

Many thanks for your comments via our web site. In response, we thought you might like to access the sources for some of the evidence which underpins our MSK services: Integrating Evidence-Based Acupuncture into Physiotherapy for the Benefit of the Patient – you can obtain the information you require from www.aacp.org.uk The General Chiropractic Council www.gcc-uk.org/page.cfm We have also attached a copy of the NICE Guidelines. |

So, no Cochrane reviews, no NHS Evidence. Instead I was referred to the very quack organisations that promote the treatments in question, the Acupuncture Association of Chartered Physiotherapists, and the totally discredited General Chiropractic Council.

The NICE guidelines that they sent were nothing to do with frozen shoulder. They were the guidelines for low back pain which caused such a furore when they were first issued (and which, in any case, don’t recommend chiropractic explicitly).

When I pointed out these deficiencies I got this.

|

Your email below has been forwarded to me. I am sorry if you feel that that that information we pointed you towards to enable you to make your own investigations into the evidence base for the services provided by Connect Physical Health and your hospital did not meet with your expectations. ‘ ‘ ‘ Please understand that our NHS services in Camden were commissioned by the Primary Care Trust. The fully integrated MSK service model included provision for acupuncture and other manual therapy provided by our experienced Chartered Physiotherapists. If you have any problems with the evidence base for the use of acupuncture or manual therapy within the service, which has been commissioned on behalf of the GPs in Camden Borough, then I would politely recommend that you direct your observations to the clinical commissioning authorities and other professional bodies who do spend time evidencing best practice and representing the academic arguments. I am sure they will be pleased to pick up discussions with you about the relative merits of the interventions being procured by the NHS. Yours sincerely, Mark Mark Philpott BSc BSc MSc MMACP MCSP

Head of Operations, Community MSK Services Connect Physical Health 35 Apex Business Village Cramlington Northumberland NE23 7BF |

So, "don’t blame us, blame the PCT". A second letter asked why they were shirking the little matter of evidence.

|

In response to your last email, I would like to say that Connect does not wish to be drawn into a debate over two therapeutic options (acupuncture and craniosacral therapy) that are widely practiced [sic] within and outside the NHS by very respectable practitioners. You will be as aware, as Connect is, that there are lots of treatments that don’t have a huge evidence base that are practiced in mainstream medicine. Connect has seen many carefully selected patients helped by acupuncture and manual therapy (craniosacral therapy / chiropractic) over many years. Lack of evidence doesn’t mean they don’t work, just that benefit is not proven. Furthermore, nowhere on our website do we state that ALL treatments / services / modalities that Connect offer are ‘Evidence Based’. We do however offer many services that are evidence based, where the evidence exists. We aim to offer ‘choice’ to patients, from a range of services that are safe and delivered by suitably trained professionals and practitioners in line with Codes of Practice and Guidelines from the relevant governing bodies. Connect’s service provision in Camden is meticulously assessed and of a high standard and we are proud of the services provided. |

This response is so wrong, on so many levels, that I gave up on Mr Philpott at this point. At least he admitted implicitly that not all of their treatments are evidence-based. In that case their web site needs to change that claim.

If, by "governing bodies" he means jokes like the GCC or the CNHC then I suppose the behaviour of their employees is not surprising. Mr Philpott is evidently not aware that "craniosacral therapy" has been censured by the Advertising Standards Authority. Well he is now, but evidently doesn’t seem to give a damn.

Next I wrote to the PCT and it took several mails to find out who was responsible for the service. Three mails produced no response so I sent a Freedom of Information Act request. In the end I got some information.

"Connect PHC provide the Community musculoskeletal service for Camden. The specification for the service specifically asks for the provision of evidence based management and treatments see paragraph on Governance page 14 of attached.. Patients are treated with acupuncture as per the NICE Guidelines (May 2009) for the management of low back pain … . .. Chiropractors are not employed in the service and craniosacral therapy is not provided as part of the service either."

Another letter, pointing out that they were using acupuncture for things other than low back pain, got no more information. They did send a copy of the contract with Connect. It makes no mention whatsoever of alternative treatments. It should have done, so part of the responsibility for the failure must lie with the PCT.

The contract does, however, say (page 18)

The service to be led by a lead clinician/manager who can effectively demonstrate ongoing and evidence-based development of clinical guidelines, policies and protocols for effective working practices within the service

In my opinion, Connect Physical Health are in breach of contract.

Another example of Connect ignoring evidence

The Connect Physical Health web site has an article about osteoarthritis of the knee

Physiotherapy can be extremely beneficial to help to reduce the symptoms of OA. Treatments such as mobilizations, rehab exercises, acupuncture and taping can help to reduce pain, increase range of movement, increase muscle strength and aid return to functional activities and sports.

There is little enough evidence that physiotherapy does any of these things, but at least it is free of mystical mumbo-jumbo. Although at one time the claim for acupuncture was thought to have some truth, the 2010 Cochrane review concludes otherwise

Sham-controlled trials show statistically significant benefits; however, these benefits are small, do not meet our pre-defined thresholds for clinical relevance, and are probably due at least partially to placebo effects from incomplete blinding.

This conclusion is much the same as has been reached for acupuncture treatments of almost everything. Two major meta-analyses come to similar conclusions. Madsen, Gøtzsche & Hróbjartsson (2009) and Vickers et al. (2012) both conclude that if there is an effect at all (dubious) then it is too small to be noticeable to the patient. (Be warned that in the case of Vickers et al. you need to read the paper itself because of the spin placed on the results in the abstract.) These papers are discussed in detail in our recent paper.

Why is Connect Physical Health not aware of this?

Their head of operations told me (see above) that

"Connect does not wish to be drawn into a debate [about acupuncture and craniosacral therapy]".

That outlook was confirmed when I left a comment on their osteoarthritis post. This is what it looked like almost a month later.

Guess what? The comment has never appeared.

The attitude of Connect Physical Health to evidence is simply to ignore it if it gets in the way of making money, and to censor any criticism.

What have Camden NHS done about it?

The patient and I both complained to Camden NHS in August 2012. At first, they simply forwarded the complaints to Connect Physical Health with the unsatisfactory results shown above. It took until May 2013 to get any sort of reasonable response. That seems a very long time. In fact by the time the response arrived the PCT had been renamed a Clinical Commissioning Group (CCG) because of the vast top-down reorganisation imposed by Lansley’s Health and Social Care Act.

On 8 May 2013, this response was sent to the patient. Here is part of it.

|

I have received your email of complaint from the NHSNCL complaints department regarding your care. You raise some very clear concerns and I will attempt to address these in order. 1) The fact that you felt pressurised into having acupuncture is a concern as everybody should be given a choice. As part of the informed consent relating to acupuncture you should have been told about the treatment, it’s [sic] benefits and risks and then you sign to confirm you are happy to proceed. I understand that this was the case in your situation but I have reinforced that the consent is important and must be adhered to by the provider Connect Physical Health. There are clear standards of clinical practice that all Chartered Physiotherapists must follow which I will discuss further with the Connect Camden team Manager Nick Downing. I do disagree with you around acupuncture; there is no conclusive evidence for acupuncture in frozen shoulder but I have referenced a systematic review which concludes the studies were too small to draw any conclusions although shoulder function was significantly improved at 4 weeks (Green S et al. Acupuncture for shoulder pain. Cochrane Database Syst Rev 2005; 18: CD005319). There is a growing body of evidence supporting the use of acupuncture and until such time as there is specific evidence against it I don’t think we would be absolutely against the practice of this modality alongside other treatments. .Best wishes Strategy and Planning Directorate |

This response raises more questions than it answers.

For example, what is "informed consent" worth if the therapist is his/herself misinformed about the treatment? It is the eternal dilemma of alternative medicine that it is no use referring to well-trained practitioners, when their training has inculcated myths and untruths.

There is not a "growing body of evidence supporting the use of acupuncture". Precisely the opposite is true.

And the statement "until such time as there is specific evidence against it I don’t think we would be absolutely against the practice of this modality alongside" betrays a basic misunderstanding of the scientific process.

So I sent the writer of this letter a reprint of our paper, "Acupuncture is a theatrical placebo: the end of a myth" (the blog version alone has had over 12,000 page views). A few days later we had an amiable lunch together and we had a constructive discussion about the problems of deciding what should be commissioned and what shouldn’t.

It seems to me to be clear that CCGs should take better advice before boasting that they commission evidence-based treatments.

Postscript

Stories like this are worrying to the majority of physiotherapists who don’t go in for the mystical mumbo-jumbo of acupuncture. One of the best is Neil O’Connell who blogs at BodyInMind. He tweeted

Physio fail, sigh RT@david_colquhoun: Yet more #acupuncture. Sold to the NHS by private contractor @ConnectPHC http://t.co/HylkwMCVTh

— Neil O'Connell (@NeilOConnell) June 10, 2013

It isn’t clear how many physiotherapists embrace nonsense, but the Acupuncture Association of Chartered Physiotherapists has around 6000 members, compared with 47,000 chartered physiotherapists (AACP), so it’s a smallish minority. The AACP claims that it is “Integrating Evidence-Based Acupuncture into Physiotherapy”. As with most politicians, the term “evidence-based” is thrown around with gay abandon. Clearly they don’t understand evidence.

Follow-up

12 June 2013

The Advertising Standards Authority has, once again, upheld complaints against the UCLH Trust, for making false claims in its advertising. This time, appropriately, it’s about acupuncture. Just about everything in their advertising leaflets was held to be unjustifiable. They’ve been in trouble before about false claims for homeopathy, hypnosis and craniosacral "therapy".

Of course all of these embarrassments come from one very small corner of the UCLH Trust, the Royal London Hospital for Integrated Medicine (previously known as the Royal London Homeopathic Hospital).

Why is it tolerated in an otherwise excellent NHS Trust? Well, the patron is the Queen herself (not Charles, aka the Quacktitioner Royal). She seems to exert more power behind the scenes than is desirable in a constitutional monarchy.

29 June 2013

I wrote to Dr Gill Gaskin about the latest ASA judgement against RLHIM. She is the person at the UCLH Trust who has responsibility for the quack hospital. She previously refused to do anything about the craniosacral nonsense that is promoted there. This time the ASA seems to have stung them into action at long last. I was told

|

In response to your question about proposed action: All written information for patients relating to the services offered by the Royal London Hospital for Integrated Medicine are being withdrawn for review in the light of the ASA’s rulings (and the patient leaflets have already been withdrawn). It will be reviewed and modified where necessary item by item, and only reintroduced after sign-off through the Queen Square divisional clinical governance processes and the Trust’s patient information leaflet team. With best wishes Gill Gaskin Dr Gill Gaskin |

It remains to be seen whether the re-written information is accurate or not.

The rules for advertising

The Advertising Standards Authority gives advice for advertisers about what’s permitted and what isn’t.

Acupuncture The CAP advice

Craniosacral therapy The CAP advice

Homeopathy The CAP advice and 2013 update

Chiropractic The CAP advice.

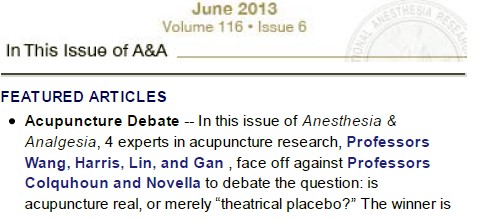

Anesthesia & Analgesia is the official journal of the International Anesthesia Research Society. In 2012 its editor, Steven Shafer, proposed a head-to-head contest between those who believe that acupuncture works and those who don’t. I was asked to write the latter. It has now appeared in the June 2013 edition of the journal [download pdf]. The pro-acupuncture article written by Wang, Harris, Lin and Gan appeared in the same issue [download pdf].

Acupuncture is an interesting case, because it seems to have achieved greater credibility than other forms of alternative medicine, despite its basis being just as bizarre as all the others. As a consequence, a lot more research has been done on acupuncture than on any other form of alternative medicine, and some of it has been of quite high quality. The outcome of all this research is that acupuncture has no effects that are big enough to be of noticeable benefit to patients, and it is, in all probability, just a theatrical placebo.

After more than 3000 trials, there is no need for yet more. Acupuncture is dead.

Acupuncture is a theatrical placebo

David Colquhoun (UCL) and Steven Novella (Yale)

Anesthesia & Analgesia, June 2013 116:1360-1363.

Pain is a big problem. If you read about pain management centres you might think it had been solved. It hasn’t. And when no effective treatment exists for a medical problem, it leads to a tendency to clutch at straws. Research has shown that acupuncture is little more than such a straw.

Although it is commonly claimed that acupuncture has been around for thousands of years, it hasn’t always been popular even in China. For almost 1000 years it was in decline and in 1822 Emperor Dao Guang issued an imperial edict stating that acupuncture and moxibustion should be banned forever from the Imperial Medical Academy.

Acupuncture continued as a minor fringe activity in the 1950s. After the Chinese Civil War, the Chinese Communist Party ridiculed traditional Chinese medicine, including acupuncture, as superstitious. Chairman Mao Zedong later revived traditional Chinese Medicine as part of the Great Proletarian Cultural Revolution of 1966 (Atwood, 2009). The revival was a convenient response to the dearth of medically-trained people in post-war China, and a useful way to increase Chinese nationalism. It is said that Chairman Mao himself preferred Western medicine. His personal physician quotes him as saying “Even though I believe we should promote Chinese medicine, I personally do not believe in it. I don’t take Chinese medicine” (Zhisui Li, Private Life Of Chairman Mao, Random House, 1996).

The political, or perhaps commercial, bias seems to still exist. It has been reported by Vickers et al. (1998) (authors who are sympathetic to alternative medicine) that

"all trials [of acupuncture] originating in China, Japan, Hong Kong, and Taiwan were positive"(4).

Acupuncture was essentially defunct in the West until President Nixon visited China in 1972. Its revival in the West was largely a result of a single anecdote promulgated by journalist James Reston in the New York Times, after he’d had acupuncture in Beijing for post-operative pain in 1971. Despite his eminence as a political journalist, Reston had no scientific background and evidently didn’t appreciate the post hoc ergo propter hoc fallacy, or the idea of regression to the mean.

After Reston’s article, acupuncture quickly became popular in the West. Stories circulated that patients in China had open heart surgery using only acupuncture (Atwood, 2009). The Medical Research Council (UK) sent a delegation, which included Alan Hodgkin, to China in 1972 to investigate these claims, about which they were skeptical. In 2006 the claims were repeated in a BBC TV program, but Simon Singh (author of Fermat’s Last Theorem) discovered that the patient had been given a combination of three very powerful sedatives (midazolam, droperidol, fentanyl) and large volumes of local anaesthetic injected into the chest. The acupuncture needles were purely cosmetic.

Curiously, given that its alleged principles are as bizarre as those of any other sort of pre-scientific medicine, acupuncture seemed to gain somewhat more plausibility than other forms of alternative medicine. The good thing about that is that more research has been done on acupuncture than on just about any other fringe practice.