
This is a transcript of the talk that I gave to the RIOT science club on 1st October 2020. The video of the talk is on YouTube. The transcript was very kindly made by Chris F Carroll, but I have modified it a bit here to increase clarity. Links to the original talk appear throughout.

My title slide is a picture of UCL’s front quad, taken on the day that it was the starting point for the second huge march that attempted to stop the Iraq war. That’s a good example of the folly of believing things that aren’t true.

“Today I speak to you of war. A war that has pitted statistician against statistician for nearly 100 years. A mathematical conflict that has recently come to the attention of the normal people and these normal people look on in fear, in horror, but mostly in confusion because they have no idea why we’re fighting.”

Kristin Lennox (Director of Statistical Consulting, Lawrence Livermore National Laboratory)

That sums up a lot of what’s been going on. The problem is that there is near unanimity among statisticians that p values don’t tell you what you need to know but statisticians themselves haven’t been able to agree on a better way of doing things.

This talk is about the probability that if we claim to have made a discovery we’ll be wrong. This is what people very frequently want to know. And that is not the p value. You want to know the probability that you’ll make a fool of yourself by claiming that an effect is real when in fact it’s nothing but chance.

Just to be clear, what I’m talking about is how you interpret the results of a single unbiased experiment. Unbiased in the sense the experiment is randomized, and all the assumptions made in the analysis are exactly true. Of course in real life false positives can arise in any number of other ways: faults in the randomization and blinding, incorrect assumptions in the analysis, multiple comparisons, p hacking and so on, and all of these things are going to make the risk of false positives even worse. So in a sense what I’m talking about is your minimum risk of making a false positive even if everything else were perfect.

The conclusion of this talk will be:

If you observe a p value close to 0.05 and conclude that you’ve discovered something, then the chance that you’ll be wrong is not 5%, but is somewhere between 20% and 30% depending on the exact assumptions you make. If the hypothesis was an implausible one to start with, the false positive risk will be much higher.

There’s nothing new about this at all. This was written by a psychologist in 1966.

The major point of this paper is that the test of significance does not provide the information concerning phenomena characteristically attributed to it, and that a great deal of mischief has been associated with its use.

Bakan, D. (1966) Psychological Bulletin, 66 (6), 423–437

Bakan went on to say this is already well known, but if so it’s certainly not well known, even today, by many journal editors or indeed many users.

The p value

Let’s start by defining the p value. An awful lot of people can’t do this but even if you can recite it, it’s surprisingly difficult to interpret it.

I’ll consider it in the context of comparing two independent samples to make it a bit more concrete. So the p value is defined thus:

If there were actually no effect (for example, if the true means of the two samples were equal, so the difference was zero), then the probability of observing a value for the difference between means which is equal to or greater than that actually observed is called the p value.

Now there’s at least five things that are dodgy with that, when you think about it. It sounds very plausible but it’s not.

- “If there were actually no effect …”: first of all this implies that the denominator for the probability is the number of cases in which there is no effect and this is not known.

- “… or greater than…” : why on earth should we be interested in values that haven’t been observed? We know what the effect size that was observed was, so why should we be interested in values that are greater than that which haven’t been observed?

- It doesn’t compare the hypothesis of no effect with anything else. This is put well by Sellke et al in 2001, “knowing that the data are rare when there is no true difference [that’s what the p value tells you] is of little use unless one determines whether or not they are also rare when there is a true difference”. In order to understand things properly, you’ve got to have not only the null hypothesis but also an alternative hypothesis.

- Since the definition assumes that the null hypothesis is true, it’s obvious that it can’t tell us about the probability that the null hypothesis is true.

- The definition invites users to make the error of the transposed conditional. That sounds a bit fancy but it’s very easy to say what it is.

- The probability that you have four legs given that you’re a cow is high, but the probability that you’re a cow given that you’ve got four legs is quite low: many animals that have four legs aren’t cows.

- Take a legal example. The probability of getting the evidence given that you’re guilty may be known. (It often isn’t of course — but that’s the sort of thing you can hope to get). But it’s not what you want. What you want is the probability that you’re guilty given the evidence.

- The probability you’re Catholic given that you’re the pope is probably very high, but the probability you’re the pope given that you’re a Catholic is very low.

So now to the nub of the matter.

- The probability of the observations given that the null hypothesis is true is the p value. But it’s not what you want. What you want is the probability that the null hypothesis is true given the observations.

The first statement is a deductive process; the second process is inductive and that’s where the problems lie. These probabilities can be hugely different and transposing the conditional simply doesn’t work.

The False Positive Risk

The false positive risk avoids these problems. Define the false positive risk as follows.

If you declare a result to be “significant” based on a p value after doing a single unbiased experiment, the False Positive Risk is the probability that your result is in fact a false positive.

That, I maintain, is what you need to know. The problem is that in order to get it, you need Bayes’ theorem and as soon as that’s mentioned, contention immediately follows.

Bayes’ theorem

Suppose we call the null-hypothesis H0, and the alternative hypothesis H1. For example, H0 can be that the true effect size is zero and H1 can be the hypothesis that there’s a real effect, not just chance. Bayes’ theorem states that the odds on H1 being true, rather than H0, after you’ve done the experiment are equal to the likelihood ratio times the odds on there being a real effect before the experiment, that is, posterior odds = likelihood ratio × prior odds.

In general we would want a Bayes’ factor here, rather than the likelihood ratio, but under my assumptions we can use the likelihood ratio, which is a much simpler thing [explanation here].

The likelihood ratio represents the evidence supplied by the experiment. It’s what converts the prior odds to the posterior odds, in the language of Bayes’ theorem. The likelihood ratio is a purely deductive quantity and therefore uncontentious. It’s the probability of the observations if there’s a real effect divided by the probability of the observations if there’s no effect.

Notice a simplification you can make: if the prior odds equal 1, then the posterior odds are simply equal to the likelihood ratio. “Prior odds of 1” means that it’s equally probable before the experiment that there was an effect or that there’s no effect. Put another way, prior odds of 1 means that the prior probability of H0 and of H1 are equal: both are 0.5. That’s probably the nearest you can get to declaring equipoise.

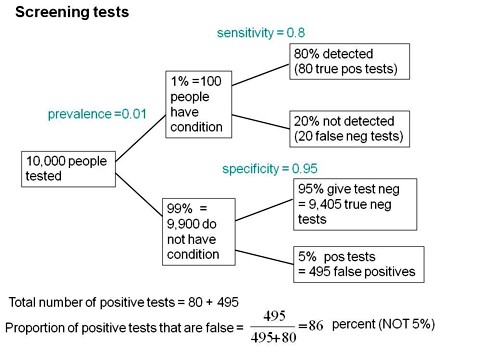

Comparison: Consider Screening Tests

I wrote a statistics textbook in 1971 [download it here] which by and large stood the test of time but the one thing I got completely wrong was the limitations of p values. Like many other people I came to see my errors through thinking about screening tests. These are very much in the news at the moment because of the COVID-19 pandemic. The illustration of the problems they pose which follows is now quite commonplace.

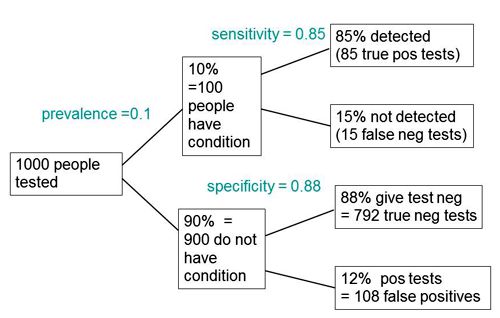

Suppose you test 10,000 people and that 1 in 100 of those people have the condition, e.g. COVID-19, and 99 in 100 don’t have it. The prevalence in the population you’re testing is 1 in 100. So you have 100 people with the condition and 9,900 who don’t. If the specificity of the test is 95%, you get 5% false positives: 5% of 9,900 is 495 false positive tests.

This is very much like a null-hypothesis test of significance. But you can’t get the answer without considering the alternative hypothesis, which null-hypothesis significance tests don’t do. So now add the upper arm to the Figure above.

You’ve got 1% (so that’s 100 people) who have the condition, so if the sensitivity of the test is 80% (that’s like the power of a significance test) then you get 80 true positives and 20 false negatives. The total number of positive tests is 80 plus 495, which is 575, and the proportion of positive tests that are false is 495 false positives divided by the total number of positives, which is 86%. A test that gives 86% false positives is pretty disastrous. It is not 5%! Most people are quite surprised by that when they first come across it.
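The arithmetic of the screening example can be checked in a few lines. This is only a minimal sketch in Python; the function name `screening_tree` and its argument names are made up for illustration and are not taken from any script that accompanies the talk.

```python
def screening_tree(n_people, prevalence, sensitivity, specificity):
    """Walk both arms of the tree diagram for a screening test.

    Returns (true positives, false positives,
             fraction of all positive tests that are false).
    """
    with_condition = n_people * prevalence
    without_condition = n_people - with_condition
    true_positives = with_condition * sensitivity            # upper arm
    false_positives = without_condition * (1 - specificity)  # lower arm
    false_fraction = false_positives / (true_positives + false_positives)
    return true_positives, false_positives, false_fraction


# Numbers from the example: 10,000 people, prevalence 1 in 100,
# sensitivity 80%, specificity 95%.
tp, fp, frac = screening_tree(10_000, 0.01, 0.80, 0.95)
print(f"true positives: {tp:.0f}, false positives: {fp:.0f}")  # 80 and 495
print(f"fraction of positive tests that are false: {frac:.0%}")  # 86%
```

Changing the prevalence argument shows how strongly the answer depends on it, which is the point of the example.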

Now look at significance tests in a similar way

Now we can do something similar for significance tests (though the parallel is not exact, as I’ll explain).

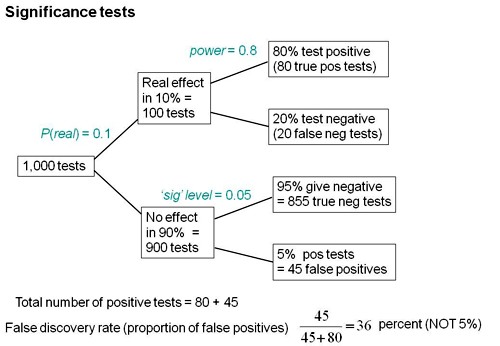

Suppose we do 1,000 tests and in 10% of them there’s a real effect, and in 90% of them there is no effect. If the significance level, so-called, is 0.05 then we get 5% false positive tests among the 900 cases in which there is no effect, which is 45 false positives.

But that’s as far as you can go with a null-hypothesis significance test. You can’t tell what’s going on unless you consider the other arm. If the power is 80% then we get 80 true positive tests and 20 false negative tests, so the total number of positive tests is 80 plus 45 and the false positive risk is the number of false positives divided by the total number of positives which is 36 percent.

So the p value is not the false positive risk. And the type 1 error rate is not the false positive risk.

The difference between them lies not in the numerator, it lies in the denominator. In the example above, of the 900 tests in which the null-hypothesis was true, there were 45 false positives. So looking at it from the classical point of view, the false positive risk would turn out to be 45 over 900 which is 0.05 but that’s not what you want. What you want is the total number of false positives, 45, divided by the total number of positives (45+80), which is 0.36.
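The same tree can be written as a small function of the prior probability of a real effect. This is a sketch of the "p-less-than" tree-diagram calculation only, under the assumptions stated in the example (significance level 0.05, power 80%); the function name `fpr_p_less_than` is hypothetical.

```python
def fpr_p_less_than(prior, alpha=0.05, power=0.80):
    """False positive risk from the tree diagram.

    prior: fraction of tests in which there is a real effect
    alpha: significance level (gives the false positives)
    power: probability of detecting a real effect (gives the true positives)
    """
    false_positives = alpha * (1 - prior)
    true_positives = power * prior
    return false_positives / (false_positives + true_positives)


# 10% of tests have a real effect: 45 / (45 + 80)
print(round(fpr_p_less_than(0.1), 2))   # 0.36

# Prior odds of 1 (half the tests have a real effect)
print(round(fpr_p_less_than(0.5), 3))   # 0.059
```

The "classical" figure, 45 over 900, is just `alpha` itself. The only thing that differs between the two answers is the denominator.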

The p value is NOT the probability that your results occurred by chance. The false positive risk is.

A complication: “p-equals” vs “p-less-than”

But now we have to come to a slightly subtle complication. It’s been around since the 1930s and it was made very explicit by Dennis Lindley in the 1950s. Yet it is unknown to most people which is very weird. The point is that there are two different ways in which we can calculate the likelihood ratio and therefore two different ways of getting the false positive risk.

A lot of writers including Ioannidis and Wacholder and many others use the “p less than” approach. That’s what that tree diagram gives you. But it is not what is appropriate for interpretation of a single experiment. It underestimates the false positive risk.

What we need is the “p equals” approach, and I’ll try and explain that now.

Suppose we do a test and we observe p = 0.047 then all we are interested in is, how tests behave that come out with p = 0.047. We aren’t interested in any other different p value. That p value is now part of the data. The tree diagram approach we’ve just been through gave a false positive risk of only 6%, if you assume that the prevalence of true effects was 0.5 (prior odds of 1). 6% isn’t much different from 5% so it might seem okay.

But the tree diagram approach, although it is very simple, still asks the wrong question. It looks at all tests that give p ≤ 0.05, the “p-less-than” case. If we observe p = 0.047 then we should look only at tests that give p = 0.047 rather than looking at all tests which come out with p ≤ 0.05. If you’re doing it with simulations, as in my 2014 paper, then of course you can’t expect any tests to give exactly 0.047; what you can do is look at all the tests that come out with p in a narrow band around there, say 0.045 ≤ p ≤ 0.05.

This approach gives a different answer from the tree diagram approach. If you look at only tests that give p values between 0.045 and 0.05, the false positive risk turns out to be not 6% but at least 26%.

I say at least, because that assumes a prior probability of there being a real effect of 50:50. If only 10% of the experiments had a real effect (a prior of 0.1 in the tree diagram) this rises to 76% of false positives. That really is pretty disastrous. Now of course the problem is you don’t know this prior probability.

The problem with Bayes’ theorem is that there exists an infinite number of answers. Not everyone agrees with my approach, but it is one of the simplest.

The likelihood-ratio approach to comparing two hypotheses

The likelihood ratio (that is to say, the relative probabilities of observing the data given two different hypotheses) is the natural way to compare two hypotheses. For example, in our case one hypothesis is the zero effect (that’s the null-hypothesis) and the other hypothesis is that there’s a real effect of the observed size. That’s the maximum likelihood estimate of the real effect size. Notice that we are not saying that the effect size is exactly zero; but rather we are asking whether a zero effect explains the observations better than a real effect.

Now this amounts to putting a “lump” of probability on there being a zero effect. If you put a prior probability of 0.5 for there being a zero effect, you’re saying the prior odds are 1. If you are willing to put a lump of probability on the null-hypothesis, then there are several methods of doing that. They all give similar results to mine within a factor of two or so.

Putting a lump of probability on there being a zero effect, for example a prior probability of 0.5 of there being zero effect, is regarded by some people as being over-sceptical (though others might regard 0.5 as high, given that most bright ideas are wrong).

E.J. Wagenmakers summed it up in a tweet:

“at least Bayesians attempt to find an approximate answer to the right question instead of struggling to interpret an exact answer to the wrong question [that’s the p value]”.

Some results

The 2014 paper used simulations, and that’s a good way to see what’s happening in particular cases. But to plot curves of the sort shown in the next three slides we need exact calculations of FPR and how to do this was shown in the 2017 paper (see Appendix for details).

Comparison of p-equals and p-less-than approaches

The slide at 26:05 is designed to show the difference between the “p-equals” and the “p-less-than” cases.

On each diagram the dashed red line is the “line of equality”: that’s where the points would lie if the p value were the same as the false positive risk. You can see that in every case the blue lines (the false positive risk) are above it, so the false positive risk is greater than the p value. And for any given observed p value, the p-equals approach gives a bigger false positive risk than the p-less-than approach. For a prior probability of 0.5, the false positive risk is about 26% when you’ve observed p = 0.05.

So from now on I shall use only the “p-equals” calculation which is clearly what’s relevant to a test of significance.

The false positive risk as function of the observed p value for different sample sizes

Now another set of graphs (slide at 27:46), for the false positive risk as a function of the observed p value, but this time we’ll vary the number in each sample. These are all for comparing two independent samples.

The curves are red for n = 4 ; green for n = 8 ; blue for n = 16.

The top row is for an implausible hypothesis with a prior of 0.1, the bottom row for a plausible hypothesis with a prior of 0.5.

The left column shows arithmetic plots; the right column shows the same curves in log-log plots. The power these lines correspond to is:

- n = 4 (red) has power 22%

- n = 8 (green) has power 46%

- n = 16 (blue) has power 78%

Now you can see these behave in a slightly curious way. For most of the range it’s what you’d expect: n = 4 gives you a higher false positive risk than n = 8, and that is still higher than n = 16 (the blue line).

The curves behave in an odd way around 0.05; they actually begin to cross, so the false positive risk for p values around 0.05 is not strongly dependent on sample size.

But the important point is that in every case they’re above the line of equality, so the false positive risk is much bigger than the p value in any circumstance.

False positive risk as a function of sample size (i.e. of power)

Now the really interesting one (slide at 29:34). When I first did the simulation study I was challenged by the fact that the false positive risk actually becomes 1 if the experiment is a very powerful one. That seemed a bit odd.

The plot here is the false positive risk FPR50, which I define as “the false positive risk for prior odds of 1”, i.e. a 50:50 chance of being a real effect or not a real effect.

Let’s just concentrate on the p = 0.05 curve (blue). Notice that, because the number per sample is changing, the power changes throughout the curve. For example on the p = 0.05 curve for n = 4 (that’s the lowest sample size plotted), power is 0.22, but if we go to the other end of the curve, n = 64 (the biggest sample size plotted), the power is 0.9999. That’s something not achieved very often in practice.

But how is it that p = 0.05 can give you a false positive risk which approaches 100%? Even with p = 0.001 the false positive risk will eventually approach 100% though it does so later and more slowly.

In fact this has been known for donkey’s years. It’s called the Jeffreys-Lindley paradox, though there’s nothing paradoxical about it. In fact it’s exactly what you’d expect. If the power is 99.99% then you expect almost every p value to be very low. Everything is detected if we have a high power like that. So it would be very rare, with that very high power, to get a p value as big as 0.05. Almost every p value will be much less than 0.05, and that’s why observing a p value as big as 0.05 would, in that case, provide strong evidence for the null-hypothesis. Even p = 0.01 would provide strong evidence for the null hypothesis when the power is very high because almost every p value would be much less than 0.01.

This is a direct consequence of using the p-equals definition which I think is what’s relevant for testing hypotheses. So the Jeffreys-Lindley phenomenon makes absolute sense.

In contrast, if you use the p-less-than approach, the false positive risk would decrease continuously with the observed p value. That’s why, if you have a big enough sample (high enough power), even the smallest effect becomes “statistically significant”, despite the fact that the odds may favour strongly the null hypothesis. [Here, ‘the odds’ means the likelihood ratio calculated by the p-equals method.]
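The way FPR50 climbs towards 1 as the sample size grows can be illustrated with a rough sketch. Note the assumptions loudly: this uses a normal (z-test) approximation with a known standard deviation, not the t-test calculation of the 2017 paper, so its numbers will not match the t-test figures quoted in the talk exactly. The function name `fpr50_p_equals_z` is hypothetical, and the effect size of 1 standard deviation is the one used throughout the talk.

```python
from math import sqrt
from statistics import NormalDist


def fpr50_p_equals_z(p_obs, n_per_group, effect_size_sd=1.0):
    """FPR50 by the p-equals method, normal approximation (assumption).

    The likelihood ratio is the ordinate under H1 divided by twice the
    ordinate under H0 (twice because of the two tails), evaluated at the
    z value that corresponds to the observed two-sided p value.
    """
    std_normal = NormalDist()
    z_obs = std_normal.inv_cdf(1 - p_obs / 2)       # observed z for two-sided p
    delta = effect_size_sd * sqrt(n_per_group / 2)  # expected z if H1 is true
    y0 = std_normal.pdf(z_obs)                      # ordinate under H0
    y1 = std_normal.pdf(z_obs - delta)              # ordinate under H1
    likelihood_ratio = y1 / (2 * y0)
    return 1 / (1 + likelihood_ratio)               # prior odds of 1


for n in (4, 16, 64):
    print(f"n = {n:2d}: FPR50 at p = 0.05 is {fpr50_p_equals_z(0.05, n):.2f}, "
          f"at p = 0.001 is {fpr50_p_equals_z(0.001, n):.2f}")
```

With n = 64 the observed z for p = 0.05 lies far out in the lower tail of the distribution expected under H1, so the ordinate under H1 is tiny and the likelihood ratio favours the null hypothesis. For p = 0.001 the same thing happens, but only at larger sample sizes.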

A real life example

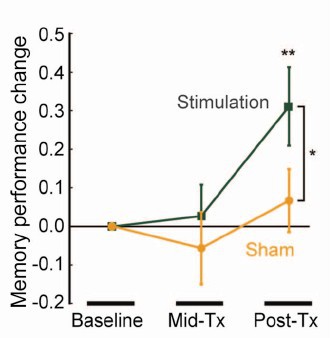

Now let’s consider an actual practical example. The slide shows a study of transcranial electromagnetic stimulation published in Science magazine (so a bit suspect to begin with).

The study concluded (among other things) that improved associative memory performance was produced by transcranial electromagnetic stimulation, p = 0.043. In order to find out how big the sample sizes were I had to dig right into the supplementary material. It was only 8. Nonetheless let’s assume that they had an adequate power and see what we make of it.

In fact it wasn’t done in a proper parallel group way, it was done as ‘before and after’ the stimulation, and sham stimulation, and it produces one lousy asterisk. In fact most of the paper was about functional magnetic resonance imaging, memory was mentioned only as a subsection of Figure 1, but this is what was tweeted out because it sounds more dramatic than other things and it got a vast number of retweets. Now according to my calculations p = 0.043 means there’s at least an 18% chance that it’s false positive.

How better might we express the result of this experiment?

We should say, conventionally, that the increase in memory performance was 1.88 ± 0.85 (SEM) with confidence interval 0.055 to 3.7 (extra words recalled on a baseline of about 10). Thus p = 0.043. But then supplement this conventional statement with

This implies a false positive risk, FPR50, (i.e. the probability that the results occurred by chance only) of at least 18%, so the result is no more than suggestive.

There are several other ways you can put the same idea. I don’t like them as much because they all suggest that it would be helpful to create a new magic threshold at FPR50 = 0.05, and that’s as undesirable as defining a magic threshold at p = 0.05. For example you could say that the increase in performance gave p = 0.043, and in order to reduce the false positive risk to 0.05 it would be necessary to assume that the prior probability of there being a real effect was 81%. In other words, you’d have to be almost certain that there was a real effect before you did the experiment for that result to be convincing. Since there’s no independent evidence that that’s true, the result is no more than suggestive.

Or you could put it this way: the increase in performance gave p = 0.043. In order to reduce the false positive risk to 0.05 it would have been necessary to observe p = 0.0043, so the result is no more than suggestive.

The reason I now prefer the first of these possibilities is because the other two involve an implicit threshold of 0.05 for the false positive risk and that’s just as daft as assuming a threshold of 0.05 for the p value.
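The "prior of 81%" statement can be reproduced by running Bayes' theorem backwards. This is a minimal sketch, assuming that the FPR50 for this experiment is taken to be exactly 18% (the talk says "at least 18%"); the function name `prior_needed` is made up for illustration.

```python
def prior_needed(fpr50, target_fpr=0.05):
    """Prior probability of a real effect needed to reach target_fpr.

    fpr50 is the false positive risk for prior odds of 1, so the
    likelihood ratio in favour of a real effect is (1 - fpr50) / fpr50.
    """
    likelihood_ratio = (1 - fpr50) / fpr50
    posterior_odds = (1 - target_fpr) / target_fpr  # odds on a real effect
    prior_odds = posterior_odds / likelihood_ratio  # Bayes' theorem, rearranged
    return prior_odds / (1 + prior_odds)            # odds back to probability


print(f"{prior_needed(0.18):.0%}")  # 81%
```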

The web calculator

Scripts in R are provided with all my papers. For those who can’t master R Studio, you can do many of the calculations very easily with our web calculator [for latest links please go to http://www.onemol.org.uk/?page_id=456]. There are three options: if you want to calculate the false positive risk for a specified p value and prior, you enter the observed p value (e.g. 0.049), the prior probability that there’s a real effect (e.g. 0.5), the normalized effect size (e.g. 1 standard deviation) and the number in each sample. All the numbers cited here are based on an effect size of 1 standard deviation, but you can enter any value in the calculator. The output panel updates itself automatically.

We see that the false positive risk for the p-equals case is 0.26 and the likelihood ratio is 2.8 (I’ll come back to that in a minute).

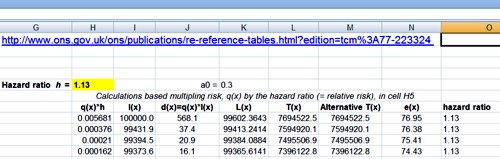

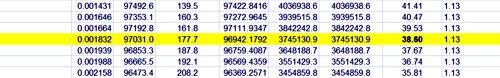

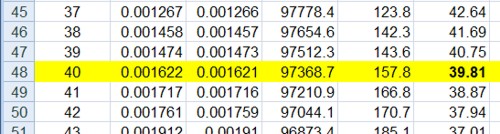

Using the web calculator or using the R programs which are provided with the papers, this sort of table can be very quickly calculated.

The top row shows the results if we observe p = 0.05. The prior probability that you need to postulate to get a 5% false positive risk would be 87%. You’d have to be almost ninety percent sure there was a real effect before the experiment, in order to get a 5% false positive risk. The likelihood ratio comes out to be about 3; what that means is that your observations will be about 3 times more likely if there was a real effect than if there was no effect. 3:1 is very low odds compared with the 19:1 odds which you might incorrectly infer from p = 0.05. The false positive risk for a prior of 0.5 (the default value), which I call the FPR50, would be 27% when you observe p = 0.05.

In fact these are just directly related to each other. Since the likelihood ratio is a purely deductive quantity, we can regard FPR50 as just being a transformation of the likelihood ratio and regard this as also a purely deductive quantity. For example, 1 / (1 + 2.8) = 0.263, the FPR50. But in order to interpret it as a posterior probability then you do have to go into Bayes’ theorem. If the prior probability of a real effect was only 0.1 then that would correspond to a 76% false positive risk when you’ve observed p = 0.05.
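The relation between the likelihood ratio, the prior and the false positive risk is just the odds form of Bayes' theorem, and it takes only a few lines to check the figures in the table. A minimal sketch in Python (the function name `false_positive_risk` is hypothetical and is not taken from the R scripts or the web calculator), using the likelihood ratios of 2.8 for p = 0.05 and 100 for p = 0.001 that are quoted in this talk:

```python
def false_positive_risk(likelihood_ratio, prior):
    """Probability that H0 is true after the experiment.

    likelihood_ratio: evidence in favour of a real effect (H1 over H0)
    prior: probability, before the experiment, that there is a real effect
    """
    prior_odds = prior / (1 - prior)
    posterior_odds = likelihood_ratio * prior_odds  # odds on a real effect
    return 1 / (1 + posterior_odds)


for lr in (2.8, 100):
    for prior in (0.5, 0.1):
        fpr = false_positive_risk(lr, prior)
        print(f"likelihood ratio {lr}, prior {prior}: FPR = {fpr:.0%}")
# likelihood ratio 2.8: 26% (prior 0.5) and 76% (prior 0.1)
# likelihood ratio 100:  1% (prior 0.5) and  8% (prior 0.1)
```

With a prior of 0.5 the prior odds are 1, so the function reduces to 1 / (1 + likelihood ratio), which is the FPR50.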

If we go to the other extreme, when we observe p = 0.001 (bottom row of the table) the likelihood ratio is 100 (notice not 1000, but 100) and the false positive risk, FPR50, would be 1%. That sounds okay but if it was an implausible hypothesis with only a 10% prior chance of being true (last column of the table), then the false positive risk would be 8% even when you observe p = 0.001: even in that case it would still be above 5%. In fact, to get the FPR down to 0.05 you’d have to observe p = 0.00043, and that’s good food for thought.

So what do you do to prevent making a fool of yourself?

- Never use the words significant or non-significant and then don’t use those pesky asterisks please, it makes no sense to have a magic cut off. Just give a p value.

- Don’t use bar graphs. Show the data as a series of dots.

- Always remember, it’s a fundamental assumption of all significance tests that the treatments are randomized. When this isn’t the case, you can still calculate a test but you can’t expect an accurate result. This is well-illustrated by thinking about randomisation tests.

- So I think you should still state the p value and an estimate of the effect size with confidence intervals but be aware that this tells you nothing very direct about the false positive risk. The p value should be accompanied by an indication of the likely false positive risk. It won’t be exact but it doesn’t really need to be; it does answer the right question. You can for example specify the FPR50, the false positive risk based on a prior probability of 0.5. That’s really just a more comprehensible way of specifying the likelihood ratio. You can use other methods, but they all involve an implicit threshold of 0.05 for the false positive risk. That isn’t desirable.

So p = 0.04 doesn’t mean you discovered something, it means it might be worth another look. In fact even p = 0.005 can under some circumstances be more compatible with the null-hypothesis than with there being a real effect.

We must conclude, however reluctantly, that Ronald Fisher didn’t get it right. Matthews (1998) said,

“the plain fact is that 70 years ago Ronald Fisher gave scientists a mathematical machine for turning boloney into breakthroughs and flukes into funding”.

Robert Matthews Sunday Telegraph, 13 September 1998.

But it’s not quite fair to blame R. A. Fisher because he himself described the 5% point as “quite a low standard of significance”.

Questions & Answers

Q: There are lots of competing ideas about how best to deal with the issue of statistical testing. For the non-statistician it is very hard to evaluate them and decide on what is the best approach. Is there any empirical evidence about what works best in practice? For example, training people to do analysis in different ways, and then getting them to analyze data with known characteristics. If not why not? It feels like we wouldn’t rely so heavily on theory in e.g. drug development, so why do we in stats?

A: The gist: why do we rely on theory in statistics? Well, we might as well say, why do we rely on theory in mathematics? That’s what it is! You have concrete theories and concrete postulates. Which you don’t have in drug testing, that’s just empirical.

Q: Is there any empirical evidence about what works best in practice, so for example training people to do analysis in different ways, and then getting them to analyze data with known characteristics? And if not, why not?

A: Why not: because you never actually know unless you’re doing simulations what the answer should be. So no, it’s not known which works best in practice. That being said, simulation is a great way to test out ideas. My 2014 paper used simulation, and it was only in the 2017 paper that the maths behind the 2014 results was worked out. I think you can rely on the fact that a lot of the alternative methods give similar answers. That’s why I felt justified in using rather simple assumptions for mine, because they’re easier to understand and the answers you get don’t differ greatly from much more complicated methods.

In my 2019 paper there’s a comparison of three different methods, all of which assume that it’s reasonable to test a point (or small interval) null-hypothesis (one that says that treatment effect is exactly zero), but given that assumption, all the alternative methods give similar answers within a factor of two or so. A factor of two is all you need: it doesn’t matter if it’s 26% or 52% or 13%, the conclusions in real life are much the same.

So I think you might as well use a simple method. There is an even simpler one than mine actually, proposed by Sellke et al. (2001) that gives a very simple calculation from the p value and that gives a false positive risk of 29 percent when you observe p = 0.05. My method gives 26%, so there’s no essential difference between them. It doesn’t matter which you use really.
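For comparison, here is a sketch of that simpler calculation. It assumes that the calculation referred to is the −e·p·ln(p) calibration from the Sellke et al. (2001) paper, which gives a lower bound on the Bayes factor in favour of the null hypothesis and is valid only for p < 1/e; the function name `sellke_fpr50` is made up for illustration.

```python
from math import e, log


def sellke_fpr50(p):
    """False positive risk for prior odds of 1 from the -e*p*ln(p) bound."""
    if not 0 < p < 1 / e:
        raise ValueError("the calibration applies only for 0 < p < 1/e")
    min_bayes_factor_h0 = -e * p * log(p)  # smallest odds in favour of H0
    # With prior odds of 1, posterior odds on H0 equal the Bayes factor.
    return min_bayes_factor_h0 / (1 + min_bayes_factor_h0)


print(f"{sellke_fpr50(0.05):.0%}")  # 29%
```

Nothing is needed apart from the p value itself, which is why it makes a convenient rough check on the other methods.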

Q: The last question gave an example of training people so maybe he was touching on how do we teach people how to analyze their data and interpret it accurately. Reporting effect sizes and confidence intervals alongside p values has been shown to improve interpretation in teaching contexts. I wonder whether in your own experience that you have found that this helps as well? Or can you suggest any ways to help educators, teachers, lecturers, to help the next generation of researchers properly?

A: Yes I think you should always report the observed effect size and confidence limits for it. But be aware that confidence intervals tell you exactly the same thing as p values and therefore they too are very suspect. There’s a simple one-to-one correspondence between p values and confidence limits. So if you use the criterion, “the confidence limits exclude zero difference” to judge whether there’s a real effect you’re making exactly the same mistake as if you use p ≤ 0.05 to make the judgment. So they should be given for sure, because they’re sort of familiar, but you do need, separately, some sort of a rough estimate of the false positive risk too.

Q: I’m struggling a bit with the “p equals” intuition. How do you decide the band around 0.047 to use for the simulations? Presumably the results are very sensitive to this band. If you are using an exact p value in a calculation rather than a simulation, the probability of exactly that p value to many decimal places will presumably become infinitely small. Any clarification would be appreciated.

A: Yes, that’s not too difficult to deal with: you’ve got to use a band which is wide enough to get a decent number in. But the result is not at all sensitive to that: if you make it wider, you’ll get larger numbers in both numerator and denominator so the result will be much the same. In fact, that’s only a problem if you do it by simulation. If you do it by exact calculation it’s easier. To do a 100,000 or a million t-tests with my R script in simulation, doesn’t take long. But it doesn’t depend at all critically on the width of the interval; and in any case it’s not necessary to do simulations, you can do the exact calculation.

Q: Even if an exact calculation can’t be done—it probably can—you can get a better and better approximation by doing more simulations and using narrower and narrower bands around 0.047?

A: Yes, the larger the number of simulated tests that you do, the more accurate the answer. I did check it with a million occasionally. But once you’ve done the maths you can get exact answers much faster. The slide at 53:17 shows how you do the exact calculation.

• The Student’s t value along the bottom

• Probability density at the side

• The blue line is the distribution you get under the null-hypothesis, with a mean of 0 and a standard deviation of 1 in this case.

• So the red areas are the rejection areas for a t-test.

• The green curve is the t distribution (it’s a non-central t-distribution which is what you need in this case) for the alternative hypothesis.

• The yellow area is the power of the test, which here is 78%

• The orange area is (1 – power) so it’s 22%

The p-less-than calculation considers all values in the red area or in the yellow area as being positives. The p-equals calculation uses not the areas, but the ordinates here, the probability densities. The probability (density) of getting a t value of 2.04 under the null hypothesis is y0 = 0.053. And the probability (density) under the alternative hypothesis is y1 = 0.29. It’s true that the probability of getting t = 2.04 exactly is infinitesimally small (the area of an infinitesimally narrow band around t = 2.04) but the ratio of the two infinitesimally small probabilities is perfectly well-defined. So for the p-equals approach, the likelihood ratio in favour of the alternative hypothesis would be L10 = y1 / (2 y0) (the factor of 2 arises because of the two red tails) and that gives you a likelihood ratio of 2.8. That corresponds to an FPR50 of 26% as we explained. That’s exactly what you get from simulation. I hope that was reasonably clear. It may not have been if you aren’t familiar with looking at those sorts of things.
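The arithmetic of the p-equals calculation can be sketched in a few lines. This is only a worked example using the rounded ordinates quoted above (y0 = 0.053, y1 = 0.29); the function names are made up for illustration. With a 50:50 prior, the false positive risk is 1 / (1 + likelihood ratio).

```python
def likelihood_ratio_p_equals(y1, y0):
    """Likelihood ratio for H1 versus H0: y1 divided by twice y0 (two tails)."""
    return y1 / (2.0 * y0)


def fpr50(likelihood_ratio):
    """False positive risk when the prior odds of a real effect are 1 (50:50)."""
    return 1.0 / (1.0 + likelihood_ratio)


lr = likelihood_ratio_p_equals(y1=0.29, y0=0.053)
risk = fpr50(lr)
print(f"likelihood ratio = {lr:.2f}, FPR50 = {risk:.1%}")
```

Because the two ordinates are rounded to two significant figures, this gives a likelihood ratio of roughly 2.7 and an FPR50 of roughly 27%, close to the 2.8 and 26% quoted in the talk.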

Q: To calculate FPR50 (false positive risk for a 50:50 prior) I need to assume an effect size. Which one do you use in the calculator? Would it make sense to calculate FPR50 for a range of effect sizes?

A: Yes, if you use the web calculator or the R scripts then you need to specify what the normalized effect size is. You can use your observed one. If you’re trying to interpret real data, you’ve got an estimated effect size and you can use that. For example, when you’ve observed p = 0.05, that corresponds to a likelihood ratio of 2.8 when you use the true effect size (that’s known when you do simulations). With real data, all you’ve got is the observed effect size, so they’re not the same of course. But you can easily show with simulations that if you use the observed effect size in place of the true effect size (which you don’t generally know) then that likelihood ratio goes up from about 2.8 to 3.6; it’s around 3, either way. You can plug your observed normalised effect size into the calculator and you won’t be led far astray. This is shown in section 5 of the 2017 paper (especially section 5.1).

Q: Consider hypothesis H1 versus H2 which is the interpretation to go with?

A: Well, I’m still not quite clear what the two interpretations the questioner is alluding to are, but I shouldn’t rely on the p value. The most natural way to compare two hypotheses is to calculate the likelihood ratio.

You can do a full Bayesian analysis. Some forms of Bayesian analysis can give results that are quite similar to the p values. But that can’t possibly be generally true because they are defined differently. Stephen Senn produced an example where there was essentially no problem with the p value, but that was for a one-sided test with a fairly bizarre prior distribution.

In general in Bayes, you specify a prior distribution of effect sizes, what you believe before the experiment. Now, unless you have empirical data for what that distribution is, which is very rare indeed, then I just can’t see the justification for that. It’s bad enough making up the probability that there’s a real effect compared with there being no real effect. To make up a whole distribution just seems to be a bit like fantasy.

Mine is simpler because by considering a point null-hypothesis and a point alternative hypothesis, what in general would be called Bayes’ factors become likelihood ratios. Likelihood ratios are much easier to understand than Bayes’ factors because they just give you the relative probability of observing your data under two different hypotheses. This is a special case of Bayes’ theorem. But as I mentioned, any approach to Bayes’ theorem which assumes a point null hypothesis gives pretty similar answers, so it doesn’t really matter which you use.

There was an edition of The American Statistician last year which had 44 different contributions about “the world beyond p = 0.05”. I found it a pretty disappointing edition because there was no agreement among people and a lot of people didn’t get around to making any recommendation. They said what was wrong, but didn’t say what you should do in response. The one paper that I did like was the one by Benjamin & Berger. They recommended their false positive risk estimate (as I would call it; they called it something different but that’s what it amounts to) and that’s even simpler to calculate than mine. It’s a little more pessimistic, it can give a bigger false positive risk for a given p value, but apart from that detail, their recommendations are much the same as mine. It doesn’t really matter which you choose.
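As a rough sketch of how simple such an estimate can be, the code below assumes that the estimate in question is the bound from Sellke, Bayarri and Berger (2001), listed in the links: for p < 1/e, the Bayes factor in favour of a real effect is at most 1 / (−e p ln p). The formula is not spelled out in the talk, so treat this as an illustration rather than a statement of exactly what Benjamin & Berger recommend. The function names are made up.

```python
import math


def bayes_factor_bound(p):
    """Upper bound on the Bayes factor in favour of a real effect (assumed form)."""
    if not 0.0 < p < 1.0 / math.e:
        raise ValueError("the bound applies only for 0 < p < 1/e")
    return 1.0 / (-math.e * p * math.log(p))


def minimum_fpr50(p):
    """Smallest false positive risk compatible with the bound, for a 50:50 prior."""
    return 1.0 / (1.0 + bayes_factor_bound(p))


for p in (0.05, 0.005):
    print(f"p = {p}: Bayes factor bound = {bayes_factor_bound(p):.1f}, "
          f"FPR50 at least {minimum_fpr50(p):.3f}")
```

For p = 0.005 this sketch gives an FPR50 of about 0.067, larger than the 0.034 from the p-equals calculation, which is in line with the remark that the approach is a little more pessimistic.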

Q: If people want a procedure that does not too often lead them to draw wrong conclusions, is it fine if they use a p value?

A: No, that maximises your wrong conclusions, among the available methods! The whole point is, that the false positive risk is a lot bigger than the p value under almost all circumstances. Some people refer to this as the p value exaggerating the evidence; but it only does so if you incorrectly interpret the p value as being the probability that you’re wrong. It certainly is not that.

Q: Your thoughts on, there’s lots of recommendations about practical alternatives to p values. Most notably the Nature piece that was published last year—something like 400 signatories—that said that we should retire the p value. Their alternative was to just report effect sizes and confidence intervals. Now you’ve said you’re not against anything that should be standard practice, but I wonder whether this alternative is actually useful, to retire the p value?

A: I don’t think the 400 author piece in Nature recommended ditching p values at all. It recommended ditching the 0.05 threshold, and just stating a p value. That would mean abandoning the term “statistically significant” which is so shockingly misleading for the reasons I’ve been talking about. But it didn’t say that you shouldn’t give p values, and I don’t think it really recommended an alternative. I would be against not giving p values because it’s the p value which enables you to calculate the equivalent false positive risk which would be much harder work if people didn’t give the p value.

If you use the false positive risk, you’ll inevitably get a larger false negative rate. So, if you’re using it to make a decision, other things come into it than the false positive risk and the p value. Namely, the cost of missing an effect which is real (a false negative), and the cost of getting a false positive. They both matter. If you can estimate the costs associated with either of them, then you can draw some sort of optimal conclusion.

Certainly the costs of getting false positives are rather low for most people. In fact, there may be a great advantage to your career to publish a lot of false positives, unfortunately. This is the problem that the RIOT science club is dealing with, I guess.

Q: What about changing the alpha level? To tinker with the alpha level has been popular in the light of the replication crisis, to make it an even more difficult test to pass when testing your hypothesis. Some people have said that 0.005 should be the threshold.

A: Daniel Benjamin said that and a lot of other authors. I wrote to them about that and they said that they didn’t really think it was very satisfactory but it would be better than the present practice. They regarded it as a sort of interim thing.

It’s true that you would have fewer false positives if you did that, but it’s a very crude way of treating the false positive risk problem. I would much prefer to make a direct estimate, even though it’s rough, of the false positive risk rather than just crudely reducing to p = 0.005. I do have a long paragraph in one of the papers discussing this particular thing (towards the end of Conclusions in the 2017 paper).

If you were willing to assume a 50:50 prior chance of there being a real effect, then p = 0.005 would correspond to FPR50 = 0.034, which sounds satisfactory (from Table, above, or web calculator).

But if, for example, you are testing a hypothesis about teleportation or mind-reading or homeopathy then you probably wouldn’t be willing to give a prior of 50% to that being right before the experiment. If the prior probability of there being a real effect were 0.1, rather than 0.5, the Table above shows that observation of p = 0.005 would suggest, in my example, FPR = 0.24 and a 24% risk of a false positive would still be disastrous. In this case you would have to have observed p = 0.00043 in order to reduce the false positive risk to 0.05.

So no fixed p value threshold will cope adequately with every problem.
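The step from FPR50 = 0.034 to FPR = 0.24 can be reproduced directly from Bayes’ theorem in its odds form. The sketch below backs out the likelihood ratio implied by the quoted FPR50 and then applies a prior probability of 0.1; the function names are made up for illustration.

```python
def implied_likelihood_ratio(fpr50):
    """Likelihood ratio for a real effect that corresponds to a given FPR50."""
    return (1.0 - fpr50) / fpr50


def false_positive_risk(likelihood_ratio, prior):
    """False positive risk for a given prior probability of a real effect."""
    prior_odds = prior / (1.0 - prior)
    posterior_odds = likelihood_ratio * prior_odds   # odds that the effect is real
    return 1.0 / (1.0 + posterior_odds)


lr = implied_likelihood_ratio(0.034)            # from the observed p = 0.005
fpr_sceptical = false_positive_risk(lr, prior=0.1)
print(f"implied likelihood ratio = {lr:.1f}")
print(f"FPR with prior 0.1 = {fpr_sceptical:.2f}")
```

The same likelihood ratio that looks reassuring with a 50:50 prior leaves roughly a one in four risk of a false positive once the prior drops to 0.1.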

Links

- For up-to-date links to the web calculator, and to papers, start at http://www.onemol.org.uk/?page_id=456

- Colquhoun, 2014, An investigation of the false discovery rate and the misinterpretation of p-values https://royalsocietypublishing.org/doi/full/10.1098/rsos.140216

- Colquhoun, 2017, The reproducibility of research and the misinterpretation of p-values https://royalsocietypublishing.org/doi/10.1098/rsos.171085

- Colquhoun, 2019, The False Positive Risk: A Proposal Concerning What to Do About p-Values https://www.tandfonline.com/doi/full/10.1080/00031305.2018.1529622

- Benjamin & Berger, Three Recommendations for Improving the Use of p-Values https://www.tandfonline.com/doi/full/10.1080/00031305.2018.1543135

- Sellke, T., Bayarri, M. J., and Berger, J. O. (2001), “Calibration of p Values for Testing Precise Null Hypotheses,” The American Statistician, 55, 62–71. DOI: 10.1198/000313001300339950.

This piece is almost identical with today’s Spectator Health article.

This week there has been enormously wide coverage in the press for one of the worst papers on acupuncture that I’ve come across. As so often, the paper showed the opposite of what its title and press release claimed. (For another stunning example of this sleight of hand, try Acupuncturists show that acupuncture doesn’t work, but conclude the opposite: journal fails, published in the British Journal of General Practice.)

Presumably the wide coverage was a result of the hyped-up press release issued by the journal, BMJ Acupuncture in Medicine. That is not the British Medical Journal of course, but it is, bafflingly, published by the BMJ Press group, and if you subscribe to press releases from the real BMJ, you also get them from Acupuncture in Medicine. The BMJ group should not be mixing up press releases about real medicine with press releases about quackery. There seems to be something about quackery that’s clickbait for the mainstream media.

As so often, the press release was shockingly misleading: It said

Acupuncture may alleviate babies’ excessive crying Needling twice weekly for 2 weeks reduced crying time significantly

This is totally untrue. Here’s why.

Luckily the Science Media Centre was on the case quickly: read their assessment. The paper made the most elementary of all statistical mistakes. It failed to make allowance for the jelly bean problem. The paper lists 24 different tests of statistical significance and focusses attention on three that happen to give a P value (just) less than 0.05, and so were declared to be "statistically significant". If you do enough tests, some are bound to come out “statistically significant” by chance. They are false positives, and the conclusions are as meaningless as “green jelly beans cause acne” in the cartoon. This is called P-hacking and it’s a well known cause of problems. It was evidently beyond the wit of the referees to notice this naive mistake. It’s very doubtful whether there is anything happening but random variability. And that’s before you even get to the problem of the weakness of the evidence provided by P values close to 0.05. There’s at least a 30% chance of such values being false positives, even if it were not for the jelly bean problem, and a lot more than 30% if the hypothesis being tested is implausible. I leave it to the reader to assess the plausibility of the hypothesis that a good way to stop a baby crying is to stick needles into the poor baby. If you want to know more about P values try YouTube or here, or here.
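The size of the jelly bean problem is easy to estimate. The sketch below assumes, purely for illustration, that the 24 tests are independent and that there is no real effect anywhere; the tests in the paper are unlikely to be fully independent, so the numbers are only a rough guide.

```python
def chance_of_any_false_positive(n_tests, alpha=0.05):
    """P(at least one p < alpha) when every null is true and tests are independent."""
    return 1.0 - (1.0 - alpha) ** n_tests


n_tests = 24                      # number of significance tests listed in the paper
p_any = chance_of_any_false_positive(n_tests)
expected_hits = n_tests * 0.05    # expected count of "significant" results by chance
print(f"P(at least one 'significant' result) = {p_any:.2f}")
print(f"expected number of 'significant' results = {expected_hits:.1f}")
```

Under these assumptions there is about a 71% chance of at least one "significant" result, with just over one expected on average, when nothing at all is going on.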

One of the people asked for an opinion on the paper was George Lewith, the well-known apologist for all things quackish. He described the work as being a "good sized fastidious well conducted study ….. The outcome is clear". Thus showing an ignorance of statistics that would shame an undergraduate.

On the Today Programme, I was interviewed by the formidable John Humphrys, along with the mandatory member of the flat-earth society whom the BBC seems to feel obliged to invite along for "balance". In this case it was professional acupuncturist, Mike Cummings, who is an associate editor of the journal in which the paper appeared. Perhaps he’d read the Science Media Centre’s assessment before he came on, because he said, quite rightly, that

"in technical terms the study is negative" "the primary outcome did not turn out to be statistically significant"

to which Humphrys retorted, reasonably enough, “So it doesn’t work”. Cummings’ response to this was a lot of bluster about how unfair it was for NICE to expect a treatment to perform better than placebo. It was fascinating to hear Cummings admit that the press release by his own journal was simply wrong.

Listen to the interview here

Another obvious flaw of the study is the nature of the control group. It is not stated very clearly but it seems that the baby was left alone with the acupuncturist for 10 minutes. A far better control would have been to have the baby cuddled by its mother, or by a nurse. That’s what was used by Olafsdottir et al (2001) in a study that showed cuddling worked just as well as another form of quackery, chiropractic, to stop babies crying.

Manufactured doubt is a potent weapon of the alternative medicine industry. It’s the same tactic as was used by the tobacco industry. You scrape together a few lousy papers like this one and use them to pretend that there’s a controversy. For years the tobacco industry used this tactic to try to persuade people that cigarettes didn’t give you cancer, and that nicotine wasn’t addictive. The main stream media obligingly invite the representatives of the industry who convey to the reader/listener that there is a controversy, when there isn’t.

Acupuncture is no longer controversial. It just doesn’t work -see Acupuncture is a theatrical placebo: the end of a myth. Try to imagine a pill that had been subjected to well over 3000 trials without anyone producing convincing evidence for a clinically useful effect. It would have been abandoned years ago. But by manufacturing doubt, the acupuncture industry has managed to keep its product in the news. Every paper on the subject ends with the words "more research is needed". No it isn’t.

Acupuncture is a pre-scientific idea that was moribund everywhere, even in China, until it was revived by Mao Zedong as part of the appalling Great Proletarian Cultural Revolution. Now it is big business in China, and 100 percent of the clinical trials that come from China are positive.

If you believe them, you’ll truly believe anything.

Follow-up

29 January 2017

Soon after the Today programme in which we both appeared, the acupuncturist, Mike Cummings, posted his reaction to the programme. I thought it worth posting the original version in full. Its petulance and abusiveness are quite remarkable.

I thank Cummings for giving publicity to the video of our appearance, and for referring to my Wikipedia page. I leave it to the reader to judge my competence, and his, in the statistics of clinical trials. And it’s odd to be described as a "professional blogger" when the 400+ posts on dcscience.net don’t make a penny -in fact they cost me money. In contrast, he is the salaried medical director of the British Medical Acupuncture Society.

It’s very clear that he has no understanding of the error of the transposed conditional, nor even the multiple comparison problem (and neither, it seems, does he know the meaning of the word ‘protagonist’).

I ignored his piece, but several friends complained to the BMJ for allowing such abusive material on their blog site. As a result a few changes were made. The “baying mob” is still there, but the Wikipedia link has gone. I thought that readers might be interested to read the original unexpurgated version. It shows, better than I ever could, the weakness of the arguments of the alternative medicine community. To quote Upton Sinclair:

“It is difficult to get a man to understand something, when his salary depends upon his not understanding it.”

It also shows that the BBC still hasn’t learned the lessons in Steve Jones’ excellent “Review of impartiality and accuracy of the BBC’s coverage of science“. Every time I appear in such a programme, they feel obliged to invite a member of the flat earth society to propagate their make-believe.

Acupuncture for infantile colic – misdirection in the media or over-reaction from a sceptic blogger?

26 Jan, 17 | by Dr Mike Cummings

So there has been a big response to this paper press released by BMJ on behalf of the journal Acupuncture in Medicine. The response has been influenced by the usual characters – retired professors who are professional bloggers and vocal critics of anything in the realm of complementary medicine. They thrive on oiling up and flexing their EBM muscles for a baying mob of fellow sceptics (see my ‘stereotypical mental image’ here). Their target in this instant is a relatively small trial on acupuncture for infantile colic.[1] Deserving of being press released by virtue of being the largest to date in the field, but by no means because it gave a definitive answer to the question of the efficacy of acupuncture in the condition. We need to wait for an SR where the data from the 4 trials to date can be combined.

So what about the research itself? I have already said that the trial was not definitive, but it was not a bad trial. It suffered from under-recruiting, which meant that it was underpowered in terms of the statistical analysis. But it was prospectively registered, had ethical approval and the protocol was published. Primary and secondary outcomes were clearly defined, and the only change from the published protocol was to combine the two acupuncture groups in an attempt to improve the statistical power because of under recruitment. The fact that this decision was made after the trial had begun means that the results would have to be considered speculative. For this reason the editors of Acupuncture in Medicine insisted on alteration of the language in which the conclusions were framed to reflect this level of uncertainty.

DC has focussed on multiple statistical testing and p values. These are important considerations, and we could have insisted on more clarity in the paper.

P values are a guide and the 0.05 level commonly adopted must be interpreted appropriately in the circumstances. In this paper there are no definitive conclusions, so the p values recorded are there to guide future hypothesis generation and trial design. There were over 50 p values reported in this paper, so by chance alone you must expect some to be below 0.05. If one is to claim statistical significance of an outcome at the 0.05 level, ie a 1:20 likelihood of the event happening by chance alone, you can only perform the test once. If you perform the test twice you must reduce the p value to 0.025 if you want to claim statistical significance of one or other of the tests.

So now we must come to the predefined outcomes. They were clearly stated, and the results of these are the only ones relevant to the conclusions of the paper. The primary outcome was the relative reduction in total crying time (TC) at 2 weeks. There were two significance tests at this point for relative TC. For a statistically significant result, the p values would need to be less than or equal to 0.025 – neither was this low, hence my comment on the Radio 4 Today programme that this was technically a negative trial (more correctly ‘not a positive trial’ – it failed to disprove the null hypothesis ie that the samples were drawn from the same population and the acupuncture intervention did not change the population treated).

Finally to the secondary outcome – this was the number of infants in each group who continued to fulfil the criteria for colic at the end of each intervention week. There were four tests of significance so we need to divide 0.05 by 4 to maintain the 1:20 chance of a random event ie only draw conclusions regarding statistical significance if any of the tests resulted in a p value at or below 0.0125. Two of the 4 tests were below this figure, so we say that the result is unlikely to have been chance alone in this case.

With hindsight it might have been good to include this explanation in the paper itself, but as editors we must constantly balance how much we push authors to adjust their papers, and in this case the editor focussed on reducing the conclusions to being speculative rather than definitive. A significant result in a secondary outcome leads to a speculative conclusion that acupuncture ‘may’ be an effective treatment option… but further research will be needed etc…

Now a final word on the 3000 plus acupuncture trials that DC loves to mention. His point is that there is no consistent evidence for acupuncture after over 3000 RCTs, so it clearly doesn’t work. He first quoted this figure in an editorial after discussing the largest, most statistically reliable meta-analysis to date – the Vickers et al IPDM.[2] DC admits that there is a small effect of acupuncture over sham, but follows the standard EBM mantra that it is too small to be clinically meaningful without ever considering the possibility that sham (gentle acupuncture plus context of acupuncture) can have clinically relevant effects when compared with conventional treatments. Perhaps now the best example of this is a network meta-analysis (NMA) using individual patient data (IPD), which clearly demonstrates benefits of sham acupuncture over usual care (a variety of best standard or usual care) in terms of health-related quality of life (HRQoL).[3]

30 January 2017

I got an email from the BMJ asking me to take part in a BMJ Head-to-Head debate about acupuncture. I did one of these before, in 2007, but it generated more heat than light (the only good thing to come out of it was the joke about leprechauns). So here is my polite refusal.

Hello

Thanks for the invitation.

Perhaps you should read the piece that I wrote after the Today programme.

Why don’t you do these Head to Heads about genuine controversies? To do them about homeopathy or acupuncture is to fall for the “manufactured doubt” stratagem that was used so effectively by the tobacco industry to promote smoking. It’s the favourite tool of snake oil salesmen too, and the BMJ should see that and not fall for their tricks.

Such pieces might be good clickbait, but they are bad medicine and bad ethics.

All the best

David

This post arose from a recent meeting at the Royal Society. It was organised by Julie Maxton to discuss the application of statistical methods to legal problems. I found myself sitting next to an Appeal Court Judge who wanted more explanation of the ideas. Here it is.

Some preliminaries

The papers that I wrote recently were about the problems associated with the interpretation of screening tests and tests of significance. They don’t allude to legal problems explicitly, though the problems are the same in principle. They are all open access. The first appeared in 2014:

http://rsos.royalsocietypublishing.org/content/1/3/140216

Since the first version of this post, March 2016, I’ve written two more papers and some popular pieces on the same topic. There’s a list of them at http://www.onemol.org.uk/?page_id=456.

I also made a video for YouTube of a recent talk.

In these papers I was interested in the false positive risk (also known as the false discovery rate) in tests of significance. It turned out to be alarmingly large. That has serious consequences for the credibility of the scientific literature. In legal terms, the false positive risk means the proportion of cases in which, on the basis of the evidence, a suspect is found guilty when in fact they are innocent. That has even more serious consequences.

Although most of what I want to say can be said without much algebra, it would perhaps be worth getting two things clear before we start.

The rules of probability.

(1) To get any understanding, it’s essential to understand the rules of probabilities, and, in particular, the idea of conditional probabilities. One source would be my old book, Lectures on Biostatistics (now free). The account on pages 19 to 24 gives a pretty simple (I hope) description of what’s needed. Briefly, a vertical line is read as “given”, so Prob(evidence | not guilty) means the probability that the evidence would be observed given that the suspect was not guilty.

(2) Another potential confusion in this area is the relationship between odds and probability. The relationship between the probability of an event occurring, and the odds on the event can be illustrated by an example. If the probability of being right-handed is 0.9, then the probability of not being right-handed is 0.1. That means that 9 people out of 10 are right-handed, and one person in 10 is not. In other words for every person who is not right-handed there are 9 who are right-handed. Thus the odds that a randomly-selected person is right-handed are 9 to 1. In symbols this can be written

\[ \mathrm{probability=\frac{odds}{1 + odds}} \]

In the example, the odds on being right-handed are 9 to 1, so the probability of being right-handed is 9 / (1+9) = 0.9.

Conversely,

\[ \mathrm{odds =\frac{probability}{1 - probability}} \]

In the example, the probability of being right-handed is 0.9, so the odds of being right-handed are 0.9 / (1 – 0.9) = 0.9 / 0.1 = 9 (to 1).
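The two conversions are each one line of code. A minimal sketch, with function names chosen here for illustration, applied to the right-handedness example:

```python
def odds_to_probability(odds):
    """probability = odds / (1 + odds)"""
    return odds / (1.0 + odds)


def probability_to_odds(probability):
    """odds = probability / (1 - probability)"""
    return probability / (1.0 - probability)


prob_right_handed = odds_to_probability(9.0)    # odds of 9 to 1
odds_right_handed = probability_to_odds(0.9)    # probability of 0.9
print(f"probability = {prob_right_handed:.2f}, odds = {odds_right_handed:.2f} to 1")
```

Applying one function and then the other returns the starting value, apart from floating-point rounding.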

With these preliminaries out of the way, we can proceed to the problem.

The legal problem

The first problem lies in the fact that the answer depends on Bayes’ theorem. Although that was published in 1763, statisticians are still arguing about how it should be used to this day. In fact whenever it’s mentioned, statisticians tend to revert to internecine warfare, and forget about the user.

Bayes’ theorem can be stated in words as follows

\[ \mathrm{\text{posterior odds ratio} = \text{prior odds ratio} \times \text{likelihood ratio}} \]

“Posterior odds ratio” means the odds that the person is guilty, relative to the odds that they are innocent, in the light of the evidence, and that’s clearly what one wants to know. The “prior odds” are the odds that the person was guilty before any evidence was produced, and that is the really contentious bit.

Sometimes the need to specify the prior odds has been circumvented by using the likelihood ratio alone, but, as shown below, that isn’t a good solution.

The analogy with the use of screening tests to detect disease is illuminating.

Screening tests

A particularly straightforward application of Bayes’ theorem is in screening people to see whether or not they have a disease. It turns out, in many cases, that screening gives a lot more wrong results (false positives) than right ones. That’s especially true when the condition is rare (the prior odds that an individual suffers from the condition is small). The process of screening for disease has a lot in common with the screening of suspects for guilt. It matters because false positives in court are disastrous.
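A short calculation shows how rarity drives the proportion of false positives. The sensitivity (0.8) and specificity (0.95) below are hypothetical numbers chosen only for illustration, as are the three prevalences; they are not taken from this post.

```python
def false_positive_fraction(prevalence, sensitivity, specificity):
    """Fraction of all positive test results that are false positives."""
    true_pos = prevalence * sensitivity                     # have it, test positive
    false_pos = (1.0 - prevalence) * (1.0 - specificity)    # don't have it, test positive
    return false_pos / (false_pos + true_pos)


# Hypothetical test: detects 80% of real cases, wrongly flags 5% of healthy people.
for prevalence in (0.5, 0.1, 0.01):
    frac = false_positive_fraction(prevalence, sensitivity=0.8, specificity=0.95)
    print(f"prevalence {prevalence:>4}: {frac:.0%} of positive results are false")
```

With these assumed numbers, a condition that affects 1% of people gives positive results that are wrong about 86% of the time, even though the test itself looks respectable.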

The screening problem is dealt with in sections 1 and 2 of my paper, or on this blog (and here). A bit of animation helps the slides, so you may prefer the YouTube version.

The rest of my paper applies similar ideas to tests of significance. In that case the prior probability is the probability that there is in fact a real effect, or, in the legal case, the probability that the suspect is guilty before any evidence has been presented. This is the slippery bit of the problem both conceptually, and because it’s hard to put a number on it.

But the examples below show that to ignore it, and to use the likelihood ratio alone, could result in many miscarriages of justice.

In the discussion of tests of significance, I took the view that it is not legitimate (in the absence of good data to the contrary) to assume any prior probability greater than 0.5. To do so would presume you know the answer before any evidence was presented. In the legal case a prior probability of 0.5 would mean assuming that there was a 50:50 chance that the suspect was guilty before any evidence was presented. A 50:50 probability of guilt before the evidence is known corresponds to a prior odds ratio of 1 (to 1). If that were true, the likelihood ratio would be a good way to represent the evidence, because the posterior odds ratio would be equal to the likelihood ratio.

It could be argued that 50:50 represents some sort of equipoise, but in the example below it is clearly too high, and if it is less than 50:50, use of the likelihood ratio runs a real risk of convicting an innocent person.

The following example is modified slightly from section 3 of a book chapter by Mortera and Dawid (2008). Philip Dawid is an eminent statistician who has written a lot about probability and the law, and he’s a member of the legal group of the Royal Statistical Society.

My version of the example removes most of the algebra, and uses different numbers.

Example: The island problem

The “island problem” (Eggleston 1983, Appendix 3) is an imaginary example that provides a good illustration of the uses and misuses of statistical logic in forensic identification.

A murder has been committed on an island, cut off from the outside world, on which 1001 (= N + 1) inhabitants remain. The forensic evidence at the scene consists of a measurement, x, on a “crime trace” characteristic, which can be assumed to come from the criminal. It might, for example, be a bit of the DNA sequence from the crime scene.

Say, for the sake of example, that the probability of a random member of the population having characteristic x is P = 0.004 (i.e. 0.4% ), so the probability that a random member of the population does not have the characteristic is 1 – P = 0.996. The mainland police arrive and arrest a random islander, Jack. It is found that Jack matches the crime trace. There is no other relevant evidence.

How should this match evidence be used to assess the claim that Jack is the murderer? We shall consider three arguments that have been used to address this question. The first is wrong. The second and third are right. (For illustration, we have taken N = 1000, P = 0.004.)

(1) Prosecutor’s fallacy

Prosecuting counsel, arguing according to his favourite fallacy, asserts that the probability that Jack is guilty is 1 – P , or 0.996, and that this proves guilt “beyond a reasonable doubt”.

The probability that Jack would show characteristic x if he were not guilty would be 0.4% i.e. Prob(Jack has x | not guilty) = 0.004. Therefore the probability of the evidence, given that Jack is guilty, Prob(Jack has x | Jack is guilty), is 1 – 0.004 = 0.996.

But this is Prob(evidence | guilty) which is not what we want. What we need is the probability that Jack is guilty, given the evidence, P(Jack is guilty | Jack has characteristic x).

To mistake the latter for the former is the prosecutor’s fallacy, or the error of the transposed conditional.

Dawid gives an example that makes the distinction clear.

“As an analogy to help clarify and escape this common and seductive confusion, consider the difference between “the probability of having spots, if you have measles” – which is close to 1 and “the probability of having measles, if you have spots” – which, in the light of the many alternative possible explanations for spots, is much smaller.”

(2) Defence counter-argument

Counsel for the defence points out that, while the guilty party must have characteristic x, he isn’t the only person on the island to have this characteristic. Among the remaining N = 1000 innocent islanders, 0.4% have characteristic x, so the number who have it will be NP = 1000 × 0.004 = 4. Hence the total number of islanders that have this characteristic must be 1 + NP = 5. The match evidence means that Jack must be one of these 5 people, but does not otherwise distinguish him from any of the other members of that group. Since just one of these is guilty, the probability that this is Jack is thus 1/5, or 0.2, which is very far from being “beyond a reasonable doubt”.

(3) Bayesian argument

The probability of Jack having characteristic x (the evidence) would be Prob(evidence | guilty) = 1 if Jack were guilty, but if Jack were not guilty it would be 0.4%, i.e. Prob(evidence | not guilty) = P. Hence the likelihood ratio in favour of guilt, on the basis of the evidence, is

\[ LR=\frac{\text{Prob(evidence } | \text{ guilty})}{\text{Prob(evidence }|\text{ not guilty})} = \frac{1}{P}=250 \]

In words, the evidence would be 250 times more probable if Jack were guilty than if he were innocent. While this seems strong evidence in favour of guilt, it still does not tell us what we want to know, namely the probability that Jack is guilty in the light of the evidence, Prob(guilty | evidence), or, equivalently, the odds ratio: the odds of guilt relative to the odds of innocence, given the evidence.

To get that we must multiply the likelihood ratio by the prior odds on guilt, i.e. the odds on guilt before any evidence is presented. It’s often hard to get a numerical value for this. But in our artificial example, it is possible. We can argue that, in the absence of any other evidence, Jack is no more nor less likely to be the culprit than any other islander, so that the prior probability of guilt is 1/(N + 1), corresponding to prior odds on guilt of 1/N.

We can now apply Bayes’s theorem to obtain the posterior odds on guilt:

\[ \text {posterior odds} = \text{prior odds} \times LR = \left ( \frac{1}{N}\right ) \times \left ( \frac{1}{P} \right )= 0.25 \]

Thus the odds of guilt in the light of the evidence are 4 to 1 against. The corresponding posterior probability of guilt is

\[ Prob( \text{guilty } | \text{ evidence})= \frac{1}{1+NP}= \frac{1}{1+4}=0.2 \]

This is quite small, and certainly no basis for a conviction.

This result is exactly the same as that given by the defence counter-argument (see above). That argument did not explicitly use Bayes’ theorem, though the theorem was implicit in it. The advantage of the defence counter-argument is that it looks simpler. The advantage of the explicitly Bayesian argument is that it makes the assumptions clearer.
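The agreement between the two arguments can be checked numerically. The following is a minimal sketch, using the island example's values N = 1000 and P = 0.004 and the same assumptions as the text (Prob(evidence | guilty) = 1, and every islander equally likely to be the culprit beforehand). The variable names are mine, and exact fractions are used only to avoid rounding noise.

```python
from fractions import Fraction

N = 1000               # innocent islanders (the island holds N + 1 people)
P = Fraction(4, 1000)  # frequency of characteristic x, 0.004

# Defence counter-argument: count the islanders who have characteristic x.
matching_islanders = 1 + N * P        # the culprit plus N*P innocent matches
prob_defence = 1 / matching_islanders

# Bayesian argument: posterior odds = prior odds * likelihood ratio.
likelihood_ratio = 1 / P              # assumes Prob(evidence | guilty) = 1
prior_odds = Fraction(1, N)           # prior probability of guilt 1/(N + 1)
posterior_odds = prior_odds * likelihood_ratio
prob_bayes = posterior_odds / (1 + posterior_odds)

print("likelihood ratio:", likelihood_ratio)
print("posterior odds:  ", posterior_odds)
print("Prob(guilty | evidence), defence argument: ", prob_defence)
print("Prob(guilty | evidence), Bayesian argument:", prob_bayes)
```

Both routes give 1/5, because the posterior odds 1/(NP) convert to a probability of 1/(1 + NP).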

In summary: the prosecutor’s fallacy suggested, quite wrongly, that the probability that Jack was guilty was 0.996. The likelihood ratio was 250, which also seems to suggest guilt, but it doesn’t give us the probability that we need. In stark contrast, the defence counsel’s argument and, equivalently, the Bayesian argument gave the probability of Jack’s guilt as 0.2, or odds of 4 to 1 against guilt. The potential for wrong conviction is obvious.

Conclusions.

Although this argument uses an artificial example that is simpler than most real cases, it illustrates some important principles.

(1) The likelihood ratio is not a good way to evaluate evidence, unless there is good reason to believe that there is a 50:50 chance that the suspect is guilty before any evidence is presented.

(2) In order to calculate what we need, Prob(guilty | evidence), we need a numerical value for how common possession of characteristic x (the evidence) is in the whole population of possible suspects (a reasonable value might be estimated in the case of DNA evidence). We also need to know the size of that population. In the island example this was 1001 (N + 1), but in general that would be hard to answer, and any answer might well be contested by an advocate who understood the problem.

These arguments lead to four conclusions.

(1) If a lawyer uses the prosecutor’s fallacy, (s)he should be told that it’s nonsense.

(2) If a lawyer advocates conviction on the basis of the likelihood ratio alone, (s)he should be asked to justify the implicit assumption that there was a 50:50 chance that the suspect was guilty before any evidence was presented.

(3) If a lawyer uses the defence counter-argument or, equivalently, the version of the Bayesian argument given here, (s)he should be asked to justify the estimates of the numerical value given to the prevalence of x in the population (P) and the numerical value of the size of this population (N). A range of values of P and N could be used, to provide a range of possible values of the final result, the probability that the suspect is guilty in the light of the evidence.

(4) The example that was used is the simplest possible case. For more complex cases it would be advisable to ask a professional statistician. Some reliable people can be found at the Royal Statistical Society’s section on Statistics and the Law.

If you do ask a professional statistician, and they present you with a lot of mathematics, you should still ask these questions about precisely what assumptions were made, and ask for an estimate of the range of uncertainty in the value of Prob(guilty | evidence) which they produce.
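To show what such a range might look like, here is a rough sketch that tabulates Prob(guilty | evidence) = 1/(1 + NP) over a grid of values. The particular values of N and P are hypothetical, chosen only for illustration, and the function name `prob_guilty` is mine. The calculation keeps the assumptions of the island example: a certain match if guilty, and equal prior suspicion of everybody in the population.

```python
def prob_guilty(n_others, match_freq):
    """Posterior probability of guilt, 1 / (1 + N*P), as in the island example."""
    return 1.0 / (1.0 + n_others * match_freq)

# Hypothetical ranges for N (other possible suspects) and P (frequency of x).
for n_others in (100, 1000, 10000):
    for match_freq in (0.001, 0.004, 0.01):
        p = prob_guilty(n_others, match_freq)
        print(f"N = {n_others:>5}, P = {match_freq:<5}: "
              f"Prob(guilty | evidence) = {p:.3f}")
```

Even this small grid runs from a probability of guilt above 0.9 down to about 0.01, which is why the values chosen for N and P deserve scrutiny.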

Postscript: real cases

Another paper by Philip Dawid, Statistics and the Law, is interesting because it discusses some recent real cases: for example the wrongful conviction of Sally Clark because of the wrong calculation of the statistics for Sudden Infant Death Syndrome.

On Monday 21 March, 2016, Dr Waney Squier was struck off the medical register by the General Medical Council because they claimed that she misrepresented the evidence in cases of Shaken Baby Syndrome (SBS).

This verdict was questioned by many lawyers, including Michael Mansfield QC and Clive Stafford Smith, in a letter, “General Medical Council behaving like a modern inquisition”.

The latter has already written “This shaken baby syndrome case is a dark day for science – and for justice”.

The evidence for SBS is based on the existence of a triad of signs (retinal bleeding, subdural bleeding and encephalopathy). It seems likely that these signs will be present if a baby has been shaken, i.e. Prob(triad | shaken) is high. But this is irrelevant to the question of guilt. For that we need Prob(shaken | triad). As far as I know, the data to calculate what matters are just not available.

It seems that the GMC may have fallen for the prosecutor’s fallacy. Or perhaps the establishment won’t tolerate arguments. One is reminded, once again, of the definition of clinical experience: “Making the same mistakes with increasing confidence over an impressive number of years.” (from A Sceptic’s Medical Dictionary by Michael O’Donnell, BMJ Publishing, 1997).

Appendix (for nerds). Two forms of Bayes’ theorem

The form of Bayes’ theorem given at the start is expressed in terms of odds ratios. The same rule can be written in terms of probabilities. (This was the form used in the appendix of my paper.) For those interested in the details, it may help to define explicitly these two forms.

In terms of probabilities, the probability of guilt in the light of the evidence (what we want) is

\[ \text{Prob(guilty } | \text{ evidence}) = \text{Prob(evidence } | \text{ guilty}) \frac{\text{Prob(guilty })}{\text{Prob(evidence })} \]

In terms of odds ratios, the odds ratio on guilt, given the evidence (which is what we want) is

\[ \frac{\text{Prob(guilty } | \text{ evidence})}{\text{Prob(not guilty } | \text{ evidence})} = \left ( \frac{\text{Prob(guilty)}}{\text{Prob(not guilty)}} \right ) \left ( \frac{\text{Prob(evidence } | \text{ guilty})}{\text{Prob(evidence } | \text{ not guilty})} \right ) \]

or, in words,

\[ \text{posterior odds of guilt } =\text{prior odds of guilt} \times \text{likelihood ratio} \]

This is the precise form of the equation that was given in words at the beginning.

A derivation of the equivalence of these two forms is sketched in a document which you can download.
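In outline, and as my own sketch rather than a reproduction of that document, the step is short. Write the probability form once for guilt and once for innocence:

\[ \text{Prob(guilty } | \text{ evidence}) = \frac{\text{Prob(evidence } | \text{ guilty}) \, \text{Prob(guilty)}}{\text{Prob(evidence)}} \]

\[ \text{Prob(not guilty } | \text{ evidence}) = \frac{\text{Prob(evidence } | \text{ not guilty}) \, \text{Prob(not guilty)}}{\text{Prob(evidence)}} \]

Dividing the first by the second, Prob(evidence) cancels, which leaves the odds form:

\[ \frac{\text{Prob(guilty } | \text{ evidence})}{\text{Prob(not guilty } | \text{ evidence})} = \frac{\text{Prob(guilty)}}{\text{Prob(not guilty)}} \times \frac{\text{Prob(evidence } | \text{ guilty})}{\text{Prob(evidence } | \text{ not guilty})} \]

To get back from odds to a probability, use

\[ \text{Prob(guilty } | \text{ evidence}) = \frac{\text{posterior odds}}{1 + \text{posterior odds}} \]

In the island example the posterior odds were 0.25, so this gives 0.25/1.25 = 0.2, as before.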

Follow-up

23 March 2016

It’s worth pointing out the following connection between the legal argument (above) and tests of significance.

(1) The likelihood ratio works only when there is a 50:50 chance that the suspect is guilty before any evidence is presented (so the prior probability of guilt is 0.5, or, equivalently, the prior odds ratio is 1).

(2) The false positive rate in significance testing is close to the P value only when the prior probability of a real effect is 0.5, as shown in section 6 of the P value paper.

However there is another twist in the significance testing argument. The statement above is right if we take as a positive result any P < 0.05. If we want to interpret a value of P = 0.047 in a single test, then, as explained in section 10 of the P value paper, we should restrict attention to only those tests that give P close to 0.047. When that is done the false positive rate is 26% even when the prior is 0.5 (and much bigger than 30% if the prior is smaller; see extra Figure). That justifies the assertion that if you claim to have discovered something because you have observed P = 0.047 in a single test then there is a chance of at least 30% that you’ll be wrong. Is there, I wonder, any legal equivalent of this argument?
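Statement (2) can be illustrated with a few lines of arithmetic. This is only a sketch of the simpler case, in which any P < 0.05 counts as a positive result. It does not reproduce the "P close to 0.047" calculation, which needs more work. The power of 0.8 is my assumption for illustration, and the function name is hypothetical.

```python
def false_positive_rate(prior, alpha=0.05, power=0.8):
    """Fraction of 'significant' results (P < alpha) that are false positives.

    prior: probability, before the experiment, that there is a real effect.
    power: probability of a significant result when the effect is real
           (0.8 is an assumed value, not taken from the text).
    """
    false_pos = alpha * (1.0 - prior)   # no real effect, yet test is significant
    true_pos = power * prior            # real effect, and the test detects it
    return false_pos / (false_pos + true_pos)

for prior in (0.5, 0.1):
    rate = false_positive_rate(prior)
    print(f"prior = {prior}: false positive rate = {rate:.3f}")
```

With a prior of 0.5 the result is about 0.06, close to the significance level of 0.05. With a prior of 0.1 it rises to 0.36, the counterpart of a likelihood ratio misleading the court when the prior odds on guilt are low.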

“Statistical regression to the mean predicts that patients selected for abnormalcy will, on the average, tend to improve. We argue that most improvements attributed to the placebo effect are actually instances of statistical regression.”

“Thus, we urge caution in interpreting patient improvements as causal effects of our actions and should avoid the conceit of assuming that our personal presence has strong healing powers.”

In 1955, Henry Beecher published "The Powerful Placebo". I was in my second undergraduate year when it appeared, and for many decades after that I took it literally. He looked at 15 studies and found that, on average, 35% of patients got "satisfactory relief" when given a placebo. This number got embedded in pharmacological folklore. He also mentioned that the relief provided by placebo was greatest in patients who were most ill.

Consider the common experiment in which a new treatment is compared with a placebo, in a double-blind randomised controlled trial (RCT). It’s common to call the responses measured in the placebo group the placebo response. But that is very misleading, and here’s why.

The responses seen in the group of patients that are treated with placebo arise from two quite different processes. One is the genuine psychosomatic placebo effect. This effect gives genuine (though small) benefit to the patient. The other contribution comes from the get-better-anyway effect. This is a statistical artefact and it provides no benefit whatsoever to patients. There is now increasing evidence that the latter effect is much bigger than the former.

How can you distinguish between real placebo effects and the get-better-anyway effect?

The only way to measure the size of genuine placebo effects is to compare in an RCT the effect of a dummy treatment with the effect of no treatment at all. Most trials don’t have a no-treatment arm, but enough do that estimates can be made. For example, a Cochrane review by Hróbjartsson & Gøtzsche (2010) looked at a wide variety of clinical conditions. Their conclusion was:

“We did not find that placebo interventions have important clinical effects in general. However, in certain settings placebo interventions can influence patient-reported outcomes, especially pain and nausea, though it is difficult to distinguish patient-reported effects of placebo from biased reporting.”

In some cases, the placebo effect is barely there at all. In a non-blind comparison of acupuncture and no acupuncture, the responses were essentially indistinguishable (despite what the authors and the journal said). See "Acupuncturists show that acupuncture doesn’t work, but conclude the opposite"

So the placebo effect, though a real phenomenon, seems to be quite small. In most cases it is so small that it would be barely perceptible to most patients. The main reason why so many people think that medicines work when they don’t is not the placebo response but a statistical artefact.

Regression to the mean is a potent source of deception

The get-better-anyway effect has a technical name, regression to the mean. It has been understood since Francis Galton described it in 1886 (see Senn, 2011 for the history). It is a statistical phenomenon, and it can be treated mathematically (see references, below). But when you think about it, it’s simply common sense.

You tend to go for treatment when your condition is bad. If you are at your worst when you seek treatment, then a bit later you’re likely to be better anyway. The great biologist Peter Medawar comments thus.

"If a person is (a) poorly, (b) receives treatment intended to make him better, and (c) gets better, then no power of reasoning known to medical science can convince him that it may not have been the treatment that restored his health"

(Medawar, P.B. (1969:19). The Art of the Soluble: Creativity and originality in science. Penguin Books: Harmondsworth).
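The get-better-anyway effect is easy to reproduce in a toy simulation. In the sketch below every number is invented for illustration: each simulated patient has a stable long-run pain level, the daily score fluctuates randomly around it, and a patient seeks help only on a day when the score reaches a threshold. Nobody is treated at all, yet the average score at a later visit is lower.

```python
import random

random.seed(1)  # fixed seed so that the run is repeatable

def simulate(n_patients=20000, threshold=7.0):
    """Mean pain at consultation and at follow-up, with no treatment given."""
    before, after = [], []
    for _ in range(n_patients):
        usual = random.gauss(5.0, 1.0)          # patient's long-run average pain
        today = usual + random.gauss(0.0, 1.5)  # score on the day of decision
        if today >= threshold:                  # only bad days lead to a visit
            # An independent fluctuation around the same long-run average.
            later = usual + random.gauss(0.0, 1.5)
            before.append(today)
            after.append(later)
    return sum(before) / len(before), sum(after) / len(after)

mean_before, mean_after = simulate()
print(f"mean pain at consultation: {mean_before:.2f}")
print(f"mean pain at follow-up:    {mean_after:.2f}")
```

The apparent improvement comes entirely from selecting patients on a bad day. Any treatment given at the consultation, however useless, would seem to get the credit.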

This is illustrated beautifully by measurements made by McGorry et al. (2001). Patients with low back pain recorded their pain (on a 10-point scale) every day for 5 months (they were allowed to take analgesics ad lib).